Evaluative words for wines


There are two basic reasons for the increased interest in "text analytics" and "sentiment analysis": first, there's more and more data available to analyze; and second, the basic techniques are pretty easy.

This is not to deny the benefits of sophisticated statistical and text-processing methods. But algorithmic sophistication adds value to simple-minded baselines that are often pretty good to start with. In particular, simple "bag of words" techniques can be surprisingly effective. I'll illustrate this point with a simple Breakfast Experiment™.

Let's start by looking at the relationship between the words used in wine-tasting notes and the numerical scores representing the perceived quality of the wine. We're interested in tasting notes like these examples from the Beverage Tasting Institute:

Pure golden color. Golden raisin, honeydew, balloon, and flint aromas. A brisk entry leads to an off-dry medium-to-full body of honeyed peach, golden raisin, and rubber eraser flavors. Finishes with a petrol-like mineral, tangy apricot marmalade, and spice fade with pithy fruit tannins.

Hazy, pale golden color. Funky, old canned vegetable and lemon detergent aromas follow through to a bittersweet medium-bodied palate with wet hay, fruit stones, orange drink, and honey candy flavors. Finishes with a tannic citrus peel fade.

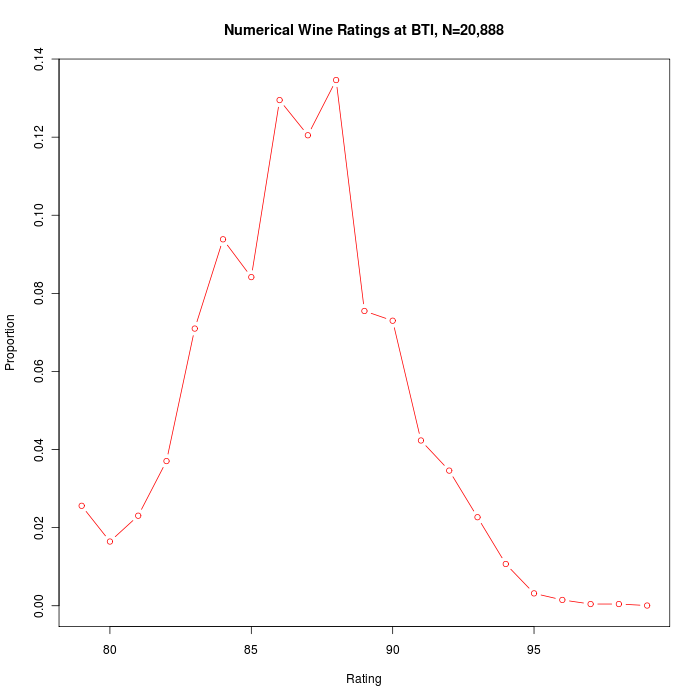

The associated scores run from 79 to 99, with the following distribution in my sample collection:

The simple-minded "bag of words" approach to analyzing such material starts by counting how often each word is associated with a given score. There are 21 possible scores (or at least 21 scores that are actually used in my sample), and 7,303 distinct "lexical tokens" (including wordforms, marks of punctuation, number strings, etc.), so this simple counting exercise gives us a matrix with 7,303 rows and 21 columns.
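As a rough illustration of that counting step, here is a minimal Python sketch. The three toy reviews, the `tokenize` helper, and its regular expression are assumptions made up for the example, not the code or data behind the numbers in this post.

```python
import re
from collections import Counter, defaultdict

# Hypothetical toy data: (score, tasting note) pairs standing in for the real sample.
reviews = [
    (93, "Superb, opulent fruit. Superb finish!"),
    (80, "Dull, with detergent aromas."),
    (80, "Dull and limp."),
]

def tokenize(text):
    """Split into lowercased wordforms, number strings, and punctuation marks."""
    return re.findall(r"[a-z]+(?:-[a-z]+)*|\d+|[^\w\s]", text.lower())

# counts[word][score] = number of times `word` occurs in notes with that score.
counts = defaultdict(Counter)
for score, note in reviews:
    for token in tokenize(note):
        counts[token][score] += 1

# One row per token, one column per score that is actually used.
scores = sorted({score for score, _ in reviews})
matrix = {word: [counts[word][s] for s in scores] for word in sorted(counts)}
```

With the real sample, `scores` would have 21 entries and `matrix` would have 7,303 rows.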

One trivial thing to do with this "words by scores" matrix is to average the scores in each row. These averages are probably not very reliable for the 3,037 words that only occur once ("arresting", "finesseful", "cod", "meatballs", etc.); and maybe also not reliable for other words that are relatively rare in this sample. So let's limit the exercise to words that occur more than 10 times. In this subset, the 20 rows with the highest average score correspond to the wordforms:

incredibly 93.3846

sensational 93.375

finest 93.2727

fantastic 92.8846

fantastically 92.8421

mignon 92.6154

evolving 92.55

decade 92.4688

decadent 92.4615

class 92.4545

sensationally 92.3

filet 92.2791

superb 92.2083

superbly 92.1905

resonant 92.1667

outstanding 91.8095

exceptionally 91.7692

opulent 91.7647

seamless 91.7564

wonderfully 91.7179

The 21 worst-scoring wordforms are:

detergent 80.3

ungenerous 80.4375

shrill 80.5714

mean 80.6154

? 81.2

odd 81.24

meager 81.3333

sulfur 81.6333

aspirin 81.641

bobbing 81.6905

cardboard 81.7955

limp 81.8276

chemical 81.931

oxidized 82.0714

dull 82.1048

stemmy 82.1321

bite 82.1333

jumbled 82.2

scorched 82.25

tough 82.25

tired 82.2581

The question mark scores negatively in these reviews because it's almost always associated with "but", as in

Bright orangey-brick hue. Aromatically reserved, with a subdued, earthy undertone. A rich entry leads to a rounded, moderately full-bodied palate with a wave of aggressive tannins. Somewhat mean. Try with mid-term cellaring, but?

The exclamation point, unsurprisingly, has a fairly high average score of 90.34.
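The row-averaging behind these lists can be sketched in a few lines of Python. The counts below are invented for illustration, and the `mean_scores` function and its `min_count` parameter are hypothetical names; the only thing taken from the post is the rule of keeping words that occur more than 10 times.

```python
# Hypothetical words-by-scores counts: word -> {score: occurrences}.
counts = {
    "superb": {93: 8, 91: 4},
    "dull": {80: 6, 84: 6},
    "cod": {85: 1},
}

def mean_scores(counts, min_count=10):
    """Count-weighted mean score for each word occurring more than min_count times."""
    means = {}
    for word, row in counts.items():
        total = sum(row.values())
        if total > min_count:
            means[word] = sum(score * n for score, n in row.items()) / total
    return means

means = mean_scores(counts)
# Words ordered from highest to lowest average score.
ranked = sorted(means, key=means.get, reverse=True)
```

Slicing `ranked[:20]` and `ranked[-20:]` would then give the best-scoring and worst-scoring wordforms.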

There are some interesting oddities — for example, plurals generally seem to score a little bit better on average than the corresponding singulars do:

| | singular | plural |
| --- | --- | --- |
| blueberry | 88.52 | 88.64 |
| apricot | 88.04 | 88.84 |
| banana | 87.81 | 88.75 |
| raisin | 87.71 | 88.16 |
| raspberry | 87.68 | 88.29 |
| berry | 87.50 | 87.86 |
| currant | 87.49 | 89.13 |
| peach | 86.89 | 87.12 |
| tannin | 86.82 | 86.92 |
| stone | 86.79 | 87.86 |
| hint | 87.14 | 87.24 |
| strawberry | 86.77 | 87.20 |
| apple | 86.56 | 86.89 |
| note | 86.44 | 86.92 |
| pear | 86.40 | 87.32 |
| lemon | 86.05 | 86.70 |
| aroma | 85.54 | 86.80 |
| cherry | 86.49 | 87.91 |
| grape | 85.81 | 86.58 |
| pit | 84.28 | 84.86 |
| stem | 82.73 | 83.63 |

(Some go the other way, e.g. flavor 88.57, flavors 86.96; carrot 87.81, carrots 86.83; olive 88.68, olives 87.62. And I haven't done a systematic survey. But in most of the cases that I've looked at this morning, plurals seem to taste better than singulars.)
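A more systematic survey could start from something like the following Python sketch. The `pluralize` helper is a deliberately naive assumption that only handles regular English plurals, and the `means` dictionary just copies a few of the averages quoted above.

```python
def pluralize(word):
    """Naive regular-plural rule (an assumption; irregular nouns are not handled)."""
    if word.endswith("y") and word[-2:-1] not in "aeiou":
        return word[:-1] + "ies"
    if word.endswith(("s", "x", "ch", "sh")):
        return word + "es"
    return word + "s"

# A few of the per-wordform averages quoted in the text.
means = {"apricot": 88.04, "apricots": 88.84, "berry": 87.50, "berries": 87.86,
         "peach": 86.89, "peaches": 87.12, "flavor": 88.57, "flavors": 86.96}

def plural_gaps(means):
    """Map each singular to (plural mean - singular mean) when both forms occur."""
    return {w: means[pluralize(w)] - m
            for w, m in means.items() if pluralize(w) in means}

gaps = plural_gaps(means)
# Fraction of singular/plural pairs where the plural has the higher average.
share_positive = sum(g > 0 for g in gaps.values()) / len(gaps)
```

Run over the full vocabulary, `share_positive` would say how often the plural wins, though it would not say whether the difference is more than noise.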

From the same data, we could calculate some other, equally simple tables of numbers, say the matrix of words by reviews, where the i,j cell holds the number of times that word i occurs in the text of wine-review j.

Given that there are 20,509 reviews in my sample, this is a matrix with 7,303 rows and 20,509 columns. (We wouldn't want to have to do all this counting ourselves, but luckily a few lines of computer code will do the counting and create this matrix in a fraction of a second.)
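Those few lines might look something like this in Python. The three pre-tokenized reviews are made up, and the dense list-of-lists layout is an assumption chosen for readability.

```python
from collections import Counter

# Hypothetical pre-tokenized reviews; real input would come from a tokenizer.
reviews = [
    ["golden", "raisin", "and", "peach", "flavors"],
    ["wet", "hay", "and", "honey", "flavors"],
    ["golden", "color", "golden", "raisin"],
]

vocab = sorted({tok for review in reviews for tok in review})
row_of = {word: i for i, word in enumerate(vocab)}

# matrix[i][j] = number of times word i occurs in review j.
matrix = [[0] * len(reviews) for _ in vocab]
for j, review in enumerate(reviews):
    for word, n in Counter(review).items():
        matrix[row_of[word]][j] = n
```

At 7,303 by 20,509 almost every cell is zero, so in practice a sparse representation (storing only the nonzero counts) would probably be the better choice.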

Like the words-by-scores matrix, this words-by-reviews matrix also supports some really simple applications.

For example, we can approximate the similarity of two wine-reviews by taking the "inner product" of the corresponding columns in the matrix: the counts in one column multiplied by the counts in the other column, all added up. With normalization for column length, and a bit of weighting thrown in to take account of things like how unevenly distributed words are across documents, this "cosine similarity" (often loosely called "cosine distance") has been a basic technique in document retrieval and text mining for decades.
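Here is one way the weighted inner product could be sketched in Python. The choice of inverse document frequency (IDF) as the weighting is an assumption, since the text only says "a bit of weighting", and the function names and toy documents are hypothetical.

```python
import math
from collections import Counter

def idf_weights(docs):
    """Inverse document frequency: words found in fewer documents weigh more."""
    n_docs = len(docs)
    doc_freq = Counter(word for doc in docs for word in set(doc))
    return {word: math.log(n_docs / df) for word, df in doc_freq.items()}

def cosine(doc_a, doc_b, weights):
    """Inner product of two weighted count vectors, divided by their lengths."""
    vec_a = {w: n * weights.get(w, 0.0) for w, n in Counter(doc_a).items()}
    vec_b = {w: n * weights.get(w, 0.0) for w, n in Counter(doc_b).items()}
    dot = sum(x * vec_b.get(w, 0.0) for w, x in vec_a.items())
    norm_a = math.sqrt(sum(x * x for x in vec_a.values()))
    norm_b = math.sqrt(sum(x * x for x in vec_b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

# Hypothetical tokenized reviews.
docs = [
    ["golden", "raisin", "flavors"],
    ["golden", "peach", "flavors"],
    ["wet", "hay", "flavors"],
]
weights = idf_weights(docs)
sim_01 = cosine(docs[0], docs[1], weights)
sim_02 = cosine(docs[0], docs[2], weights)
```

In this toy example "flavors" occurs in every review, so its weight is zero and it contributes nothing; the first two reviews are similar only because they share "golden".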

And again, this matrix of counts is the starting point for some slightly more sophisticated techniques. For example, we could use the association of words with wine-reviews to infer the overall contribution of each word to the vector of wine scores, either overall or for different kinds of grapes or in different price ranges, using various regression techniques.
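As one possible instance of such a regression, here is a small ridge-regression sketch using NumPy. Ridge is just one of the "various regression techniques" and is picked here as an assumption; the data, the scores, and the `ridge_fit` helper are all made up. Note that the matrix is transposed relative to the text, with reviews as rows and words as columns.

```python
import numpy as np

# Hypothetical tiny example: rows are reviews, columns are counts of three words.
words = ["superb", "dull", "peach"]
X = np.array([
    [1, 0, 1],
    [2, 0, 0],
    [0, 1, 1],
    [0, 2, 0],
    [0, 0, 1],
], dtype=float)
y = np.array([92.0, 94.0, 84.0, 80.0, 88.0])  # made-up scores

def ridge_fit(X, y, alpha=1.0):
    """Ridge regression with an unpenalized intercept, fitted on centered data."""
    x_mean, y_mean = X.mean(axis=0), y.mean()
    Xc, yc = X - x_mean, y - y_mean
    coef = np.linalg.solve(Xc.T @ Xc + alpha * np.eye(X.shape[1]), Xc.T @ yc)
    return coef, y_mean - x_mean @ coef

coef, intercept = ridge_fit(X, y, alpha=0.1)
# Estimated points added to (or subtracted from) a score per occurrence of each word.
contribution = dict(zip(words, coef))
```

The penalty `alpha` matters more on real data, where thousands of rare words would otherwise get wildly unstable coefficients.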

The technique known as "latent semantic analysis" starts with this same type of "term by document matrix" — a table whose rows are words and whose columns are documents, with the number in row i and column j representing the number of times that word i occurs in document j.

The basic metaphor is that a given word's distribution across documents is a sort of extensional representation of its meaning. But if there are a million documents, then this representation is a million-dimensional vector, which is not a very handy object. Even in our little collection of wine reviews, we've got more than 20,000 counts per word to keep track of. And you'd be right to suspect that the real amount of relevant per-word information in these distributions is a lot less than such large collections of numbers imply…

A mathematically simple technique called singular value decomposition (SVD) gives us a way to find a low-dimensional approximation for any matrix, including specifically such term-by-document matrices. We can use this technique to approximate the information in a word's distribution across documents with a projection onto an arbitrarily short vector, 2 or 20 or 200 numbers rather than 20,000 (or 20 billion). Obviously, the quality of the approximation improves with the number of numbers that we retain, but typically the returns (in terms of, say, the effect on estimated distances) start to diminish rapidly.

Furthermore, at least the first few of these numbers (ranked by importance) are often humanly interpretable. And this approach can be a worthwhile way to find interpretable dimensions even if we start with a much smaller space.
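The truncation step can be sketched with NumPy's `numpy.linalg.svd`. The small matrix below is a made-up stand-in for a term-by-document matrix, and keeping `k = 2` dimensions is an arbitrary choice for the example.

```python
import numpy as np

# Hypothetical term-by-document counts: 4 words (rows) x 5 documents (columns).
A = np.array([
    [2, 1, 0, 0, 1],
    [1, 2, 0, 0, 1],
    [0, 0, 3, 1, 0],
    [0, 0, 1, 2, 0],
], dtype=float)

# Thin SVD: A = U @ diag(s) @ Vt, singular values in descending order.
U, s, Vt = np.linalg.svd(A, full_matrices=False)

k = 2                                # number of dimensions to keep
word_vectors = U[:, :k] * s[:k]      # each word as a vector of k numbers
A_k = word_vectors @ Vt[:k, :]       # rank-k approximation of A

# Relative size of what the approximation leaves out (Frobenius norm).
rel_error = np.linalg.norm(A - A_k) / np.linalg.norm(A)
```

Here the first two words occur in the same documents, so their rows of `word_vectors` end up close together, while the last two words land elsewhere. For a matrix the size of the wine-review one, a sparse truncated SVD routine would likely be more practical than a full decomposition.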

On beyond averaging, regression, and eigenanalysis, there are many more elaborate and more sophisticated sorts of analysis we could (and should) do with datasets like this — but again, a simple-minded baseline starts us off in a pretty good place.

Of course, the way the words are put together in text really does matter. A text is more than a "bag of words". All the same, simple word-choice carries enough information to start us at a useful level for many tasks.

And there are almost-equally-simple methods that take account of the sequence and structures of words in texts. But that's a topic for another breakfast.

(For some prior art on this particular topic, see "…with just a hint of Naive Bayes in the nose", 2/23/2011.)

Rick Saling said,

April 7, 2012 @ 9:49 am

Has this kind of analysis been done with opinion / analysis articles about current events? I'd be interested in how terms cluster, and whether the clusters correspond to political ideologies.

My own mental map of the world is that on any controversial issue, be it the Vietnam War, the Civil Rights movement, Israel/Palestine, opinions tend to cluster into two poles (right vs left), with an unstable grouping ("well-meaning liberals") who try to split the difference. I can usually quickly classify articles on this basis. My intuition tells me that these three groupings use different words.

But is this accurate? How does the word usage actually cluster? It would be very interesting to see if my intuitive view is actually valid or not: i.e. that word usage alone is enough to separate the three groupings (and is it really only 3?)

Another issue is: do these clusters exist from the very beginning? Or is there a process of polarization that goes on?

[(myl) All excellent questions. Most of them have been studied to some extent, though I don't know of any work evaluating the interesting idea that there might be "a process of [lexical] polarization" as debates on a topic develop. I think you'll find that there's more than one meaningful dimension involved, here as in other ways of looking at political (and other) opinions. More on this another day.]

Robert Coren said,

April 7, 2012 @ 9:51 am

Please tell me that those quotations, at least the first two, are parodies. "flint aromas"? "rubber eraser flavors"? "wet hay, fruit stones, orange drink"? Seriously?

[(myl) No joke, seriously. Don't laugh. At least not too much.]

E W Gilman said,

April 7, 2012 @ 9:59 am

I don't understand the first thing about this analysis, but if Mark Liberman is a wine connoisseur he might subscribe to my son's newsletter, View from the Cellar, where he would I think find further wine descriptors. And in answer to commenter #1, yes, that sort of verbiage does appear in wine reviews. Whenever I happen to read them I don't understand them either. For ancient me a wine either tastes good or it doesn't.

Leslie Katz said,

April 7, 2012 @ 9:59 am

What? Wine and song, but not women?

[(myl) Just wine today. Other topics some other morning.]

Garrett Wollman said,

April 7, 2012 @ 12:21 pm

I'm curious what the, um, "inter-annotator agreement" looks like for wine tasting. Do these words actually even have consistent meanings?

[(myl) According to Gil Morrot, Frédéric Brochet and Denis Dubourdieu, "The Color of Odors", Brain and Language 79(2) 2001 (discussed here), experts can distinguish red wine from white wine by odor alone about 70% of the time. When asked to describe the odor of a white wine dyed to look red, such experts switched from white-wine-associated odor descriptors to red-wine-associated odor descriptors:

In the figure, "wine W" is white wine, while "wine RW" is white wine dyed red.

This is not to say that all wine tasters are making it all up: there are interesting and serious attempts to document and standardize wine talk, e.g. Ann Noble's Wine Aroma Wheel, and the Mouth Feel Tasting Wheel by Richard Gawel and others, also discussed in the cited post from 2004.]

Q. Pheevr said,

April 7, 2012 @ 2:51 pm

The singular–plural difference seems rather slight, but if it's a real effect (or, for that matter, even if it isn't), the direction makes intuitive sense to me: plurals, especially for all those fruits, sound more real and more complex. "Blueberries," for instance, calls to mind all the variation in flavour that one would find in an actual pint of fresh blueberries, but "blueberry" sounds more like a single note. Or, to put it another way, fruit sounds tastier if it hasn't been put through Pelletier's Universal Grinder.

Of course, it's easy to come up with that kind of story post hoc (or post hock). But it might be fun to ask people to rate the expected tastiness (or complexity, or price) of wines based on descriptions with singular vs. plural fruits, with everything else nicely controlled for.

Sili said,

April 7, 2012 @ 4:05 pm

I'm surprised by the high scores of words like "detergent", "shrill" and "cardboard".

It seems to me that having a scale that starts at 0 is pointless if even 'bad' wines score in the 80s.

[(myl) Thou sayest. In this collection of ratings, 79 is the lowest ever given, and is equivalent to "Not Recommended".]

Bob Moore said,

April 7, 2012 @ 6:45 pm

The 100-point scale was introduced by the American wine critic Robert Parker, and it deliberately skews high because he wanted it to mirror the numerical grading system used in American public schools of the baby-boomer era, in which anything below 70 or 75 was a failing grade. Curiously mirroring school grading, however, in the 30 years since Parker rose to prominence, there has been grade inflation in wine ratings, too. Different publications and critics who use the 100-point scale may rank a bit higher or lower, but I would say on the whole the rankings tend to mean something close to this:

95-100: great

90-94: very good

85-89: good

80-84: so-so

below 80: pretty bad to truly awful

So, a wine that smelled or tasted *slightly* of detergent could well be on the border between so-so and pretty bad, as an average score of 80.3 would indicate. Keep in mind that there are very few truly bad wines in the market because no-one would buy them, given that you can get so-so wines like "Two buck Chuck" for only a few dollars a bottle.

KWillets said,

April 7, 2012 @ 8:39 pm

You really need to add this to the corpus:

Lazar said,

April 7, 2012 @ 9:15 pm

I don't understand the "but?" thing. What does that usage convey?

D.O. said,

April 7, 2012 @ 9:41 pm

The two examples presented in the OP are pretty much "bags of words" IMHO.

Brian said,

April 9, 2012 @ 11:31 am

I used to edit a wine columnist, and at one point I saw a list of the flavors one is apparently allowed to perceive in wine. ("Peach" vs. "white peach"? I fold.) The most vivid official aroma was "cat urine."

Jerry Friedman said,

April 12, 2012 @ 5:41 pm

@Lazar: I've never seen "but?" like that before, but I take it to mean "but this is questionable, so don't count on anything."
