Is language "analog"?


David Golumbia's 2009 book The Cultural Logic of Computation argues that "the current vogue for computation" covertly revives an "old belief system — that something like rational calculation might account for every part of the material world, and especially the social and mental worlds". Golumbia believes that this is a bad thing.

I have nothing to say here about the philosophical or cultural impact of computer technology. Rather, I want to address a claim (or perhaps I should call it an assumption) that Golumbia makes about speech and language, which I think is profoundly mistaken.

Golumbia hangs a lot of significance on the word analog, which he uses (without defining it) in the sense "continuously variable", putting it thereby in opposition to the digital representations that modern computers generally require.

His first use of analog occurs in the following context:

Each citizen can work out for himself the State philosophy: "Always obey. The more you obey, the more you will be master, for you will only be obeying pure reason. In other words yourself …

Ever since philosophy assigned itself the role of ground it has been giving the established powers its blessing, and tracing its doctrine of faculties onto the organs of State power. To submit a phenomenon to computation is to striate otherwise-smooth details, analog details, to push them upwards toward the sovereign, to make only high-level control available to the user, and then only those aspects of control that are deemed appropriate by the sovereign (Deleuze 1992).

His other 28 uses of analog present a consistent picture: the world is analog; representing it in digital form is a critical category change that leads to philosophical error and serves the interests of hierarchical social control.

From his introductory chapter:

Human nature is highly malleable; the ability to affect what humans are and how they interact with their environment is one of my main concerns here, specifically along the lines that computerization of the world encourages computerization of human beings. There are nevertheless a set of capacities and concerns that characterize what we mean by human being: human beings typically have the capacity to think; they have the capacity to use one or more (human) languages; they define themselves in social relationship to each other; and they engage in political behavior. These concerns correspond roughly to the chapters that follow. In each case, a rough approximation of my thesis might be that most of the phenomena in each sphere, even if in part characterizable in computational terms, are nevertheless analog in nature. They are gradable and fuzzy; they are rarely if ever exact, even if they can achieve exactness. The brain is analog; languages are analog; society and politics are analog. Such reasoning applies not merely to what we call the "human species" but to much of what we take to be life itself, […]

But crucial aspects of human speech and language are NOT "analog" — are not continuously variable physical (or for that matter spiritual) quantities. This fact has nothing to do with computers — it was as true 100 or 1,000 or 100,000 years ago as it is today, and it's been recognized by every human being who ever looked seriously at the question.

There are three obvious non-analog aspects of speech and language. In linguistic jargon, these are the entities involved in syntax, morphology, and phonology. In more ordinary language, they're phrases, words, and speech sounds.

Let's start in the middle, with words.

In English, we have the words red and blue; in French, rouge and bleu. In neither language is "purple" some kind of sum or average or other interpolation between the two. The pronunciation of a word is infinitely variable; but a word's identity is crisply defined. It's (the psychological equivalent of) a symbol, not a signal — and thereby it's not "gradable and fuzzy", it's not part of some "smooth" continuum of entities, it's an entity that's qualitatively distinct from other entities of the same type.

This is not just some invention of scribes and linguists. Pre-literate children have no trouble with word constancy — they easily recognize when a familiar word recurs. And speakers of a language without a generally-used written form have the same ability.

Nor is this property of words functionally irrelevant — the possibility of communication relies crucially on the fact that each of us knows thousands of words that are distinct, individually recognizable, and generally arbitrary in their relationship to their meanings. No coherent account of the human use of speech and language is possible without recognizing this fact. (We'll also need a story about how words can be made up of the pieces that linguists call "morphemes", and a story about how word-sequences sometimes come to have non-compositional meanings. But none of that will erase the essentially discrete and symbolic nature of the system's elements.)

What about phonology? Again, we know that a spoken "word" is not just an arbitrary class of vocal noises. Each (variety of a) language has a phonological system, whereby a simple combination of a small finite set of basic elements defines the claims that each word makes on articulations and sounds. This phonological principle is what makes alphabetic writing possible.

The inventory of basic elements and the patterns of combination vary from language to language. And the pronunciation of a word or phrase is infinitely variable, and this phonetic variation carries additional information about speakers, attitudes, contexts, and so on. But phonology itself is again a crucially symbolic system: its representations are not "gradable and fuzzy". And if this serves to "striate otherwise-smooth details, analog details" and thereby "to push them upwards toward the sovereign", it's something that happened whenever human spoken language was invented, probably hundreds of thousands of years ago, and certainly long before anyone dreamt of digital computers.

Like the nature of words as abstract symbols, the symbolic nature of phonology is not functionally irrelevant. In a plausible sense of the word "word", an average 18-year-old knows tens of thousands of them. Most of these are learned from experiencing their use in context, often just a handful of times. Experiments by George Miller and others, many years ago, showed that young children can often learn a (made-up) word from one incidental use.

Given the infinitely variable nature of spoken performance, the whole system would be functionally impossible if "learning a word" required us to learn to delimit an arbitrary region of the space of possible vocal noises. The fact that words are spelled out phonologically means that every instance of every spoken word helps us to learn the phonological system, which in turn makes it easy for us to learn — and share with our community — the pronunciation of a very large set of distinctly different word-level symbols.
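To make the point concrete, here is a toy sketch. It assumes that each speech-sound token can be reduced to a single made-up continuous cue value, and that there are two hypothetical category prototypes; neither is a phonetic measurement. Tokens that differ in every measured value still spell out the same sequence of discrete symbols, so each new token gets filed under categories the listener already has.

```python
# Toy model: each speech-sound token is reduced to ONE made-up continuous cue
# (think of a hypothetical voice-onset time in ms). The prototype values are
# illustrative assumptions, not measurements.
PROTOTYPES = {"b": 0.0, "p": 60.0}


def categorize(cue: float) -> str:
    """Map a continuously variable token to the nearest discrete category."""
    return min(PROTOTYPES, key=lambda symbol: abs(PROTOTYPES[symbol] - cue))


def transcribe(tokens: list[float]) -> str:
    """Turn a sequence of noisy tokens into a string of discrete symbols."""
    return "".join(categorize(t) for t in tokens)


# Two "pronunciations" that differ in every measured value...
token_a = [5.2, 58.1, 3.9]
token_b = [11.7, 71.4, -2.0]
# ...spell out the same symbol sequence.
print(transcribe(token_a), transcribe(token_b))
```

The nearest-prototype rule is just the simplest choice for a sketch; nothing here depends on it beyond the fact that the output is a discrete symbol rather than a point in the cue space.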

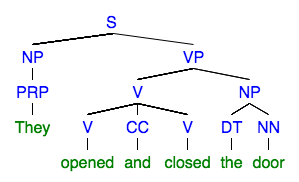

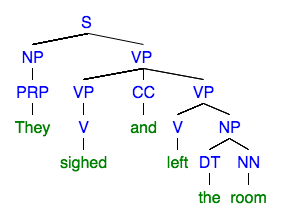

Finally, what about phrase structure? Another crucial and universal fact about human language is that complex messages can be created by combining simpler ones. And this combination is not just one thing after another. Different structural combinations have different meanings — in hierarchical patterns that have nothing to do with relations of social dominance. The difference between conjoined verbs both governing the same direct object ("opened and closed the door") and an intransitive verb conjoined with a verb-object combination ("sighed and left the room") is an example — and like other such distinctions, it's a discrete one, not "fuzzy and gradable":


There are lots of different ideas about exactly what the relevant distinctions are, much less how to write them down; and under any account, particular word-sequences are often ambiguous. But this is because there are multiple possible structures, and not because these structures are arbitrary "striations" of a continuous space.
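A minimal sketch of the same idea, treating trees as nested Python tuples. The two bracketings of "opened and closed the door" are illustrative assumptions (the second follows the pattern of "sighed and left the room"), not a commitment to any particular syntactic theory. The words are identical and the structures are not, and the number of possible binary-branching structures over a string is a whole number, not a point on a continuum.

```python
from itertools import product

# Illustrative bracketings, assumed for this sketch:
shared_object = (("opened", "and", "closed"), ("the", "door"))   # both verbs take "the door"
verb_plus_vp = ("opened", "and", ("closed", ("the", "door")))    # only "closed" takes it


def leaves(tree):
    """Flatten a nested-tuple tree back into its word sequence."""
    if isinstance(tree, str):
        return [tree]
    return [word for child in tree for word in leaves(child)]


def bracketings(words):
    """Enumerate every binary-branching tree over a sequence of words."""
    if len(words) == 1:
        return [words[0]]
    trees = []
    for split in range(1, len(words)):
        for left, right in product(bracketings(words[:split]), bracketings(words[split:])):
            trees.append((left, right))
    return trees


# Same words, two distinct structures: a discrete difference, not one of degree.
print(leaves(shared_object) == leaves(verb_plus_vp), shared_object == verb_plus_vp)
# Counts of candidate binary structures for 1..5 words (the Catalan numbers).
print([len(bracketings(["w"] * n)) for n in range(1, 6)])
```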

That's enough, and too much, for today. But let me note in passing that the idea of "[making] only high-level control available to the user" has been developed at length in a very different context, with little to do with language and nothing at all to do with computation. I'm referring to the Degrees of Freedom Problem, also known as the Motor Equivalence Problem, first suggested by Karl Lashley ("Integrative Functions of the Cerebral Cortex", 1933), and developed further by Nicolai Bernstein (The Coordination and Regulation of Movement, 1967):

It is clear that the basic difficulties for co-ordination consist precisely in the extreme abundance of degrees of freedom, with which the [nervous] centre is not at first in a position to deal.

Turvey ("Coordination", American Psychologist 1990) observes that

As characteristic expressions of biological systems, coordinations necessarily involve bringing into proper relation multiple and different component parts (e.g., 10¹⁴ cellular units in 10³ varieties), defined over multiple scales of space and time. The challenge of properly relating many different components is readily illustrated. Muscles act to generate and degenerate kinetic energy in body segments. There are about 792 muscles in the human body that combine to bring about energetic changes at the skeletal joints. Suppose we conceptualize the human body as an aggregate of just hinge joints like the elbow. It would then comprise about 100 mechanical degrees of freedom, each characterizable by two states, position and velocity, to yield a state space of, minimally, 200 dimensions.

and expresses Bernstein's idea succinctly as

Each and every movement comprises a state space of many dimensions; the problem of coordination, therefore, is that of compressing such high-dimensional state spaces into state spaces of very few dimensions.

Or, as Golumbia and Deleuze put it, to "make … high-level control available to the user". But here the goal is not to limit options to "those aspects of control that are deemed appropriate by the sovereign", but simply to make multicellular life possible.
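A toy illustration of Bernstein's compression, assuming Turvey's simplification of about 100 hinge joints, each with a position and a velocity. The coupling rule inside the hypothetical `joint_state` function is invented for illustration and is not a model of real motor control; the only point is that two control parameters fix all 200 state dimensions.

```python
import math

N_JOINTS = 100              # Turvey's simplification: about 100 hinge joints
STATE_DIMS = 2 * N_JOINTS   # position and velocity per joint: 200 dimensions


def joint_state(amplitude: float, phase: float) -> list[float]:
    """Expand a hypothetical 2-parameter "synergy" command into a full state
    vector of (position, velocity) pairs, one pair per joint.

    The coupling rule (each joint follows a scaled, phase-lagged sine) is an
    illustrative assumption, not a model of real motor control.
    """
    state = []
    for j in range(N_JOINTS):
        gain = amplitude * (1 + j / N_JOINTS)  # fixed per-joint coupling weight
        angle = phase + 0.05 * j               # fixed per-joint phase lag
        state.append(gain * math.sin(angle))   # position
        state.append(gain * math.cos(angle))   # velocity, if phase advances at unit rate
    return state


state = joint_state(amplitude=0.5, phase=1.0)
print(len(state), STATE_DIMS)  # 200 state values, set by just 2 control parameters
```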

Current thinking about this problem involves interesting properties of non-linear dynamical systems — for an example that starts to bring language-like concepts into the picture, see e.g. Aaron Johnson and Dan Koditschek, "Towards a Vocabulary of Legged Leaping", IEEE ICRA 2013.

Finally, my dreamtime colleague Mikhail Bakhtin would want me to note Golumbia's heteroglossic echo of Fredric Jameson's 1991 book Postmodernism, or, The Cultural Logic of Late Capitalism.

Bathrobe said,

July 17, 2016 @ 4:26 pm

"Dreamtime colleague"? Is this a mythological reference?

Guy said,

July 17, 2016 @ 5:03 pm

I don't see how anyone could argue that language is "analog" in any meaningful sense; the assertion is so absurd on its face that it makes me suspect the asserter didn't even think about it except to assume that only machines are "digital".

J.W. Brewer said,

July 17, 2016 @ 5:08 pm

The Deleuzian thing he's riffing on sounds like it might be somewhat parallel to James Scott's notion of "legibility" as set forth in his awesome Seeing Like a State: How Certain Schemes to Improve the Human Condition Have Failed. But Scott is explicit that he's using a metaphor (basically, traditional peasant-type societies are incentivized to be opaque in various ways to their elite-or-otherwise-outsider rulers in order to make intrusions like tax collection, military conscription, compulsory education, etc etc more difficult to implement, whereas the rulers, if they are modernizing/rationalizing/state-building types, want the ruled to be organized in a "legible" fashion that makes such intrusions easier to implement), whereas the use of notions like analog-ness or computation by Golumbia-via-Deleuze may (or may not; I haven't read them in context) be less explicitly metaphorical and thus more irksome for those for whom the notions have actual meaning and utility in their own scholarly fields.

bks said,

July 17, 2016 @ 6:24 pm

Guy, as someone who spent 25 years attempting to digitize the language of molecular biologists, it seems equally absurd to call language digital. In 1993 I heard Francis Crick challenge a lecture hall replete with Berkeley biologists to define gene. I would venture that the definition has become even more amorphous with time. To wit, this paper from 2007: http://genome.cshlp.org/content/17/6/669.long

I'm not convinced the question is meaningful. I have an analog clock on the wall and a digital clock on my wrist. So is time digital or analog? I don't know.

David Golumbia said,

July 17, 2016 @ 6:32 pm

Mark,

Believe it or not I am very happy to have this conversation, and I agree with quite a few of the sloppy (often Deleuze-influenced) things I wrote in that book (my first, written under a great deal of pressure of various sorts, as these things often are). There's a lot in there I hope to go back to someday, as I'd really want to revise a lot of what I said.

I'll try to work on a more thorough response, but for the time being let me suggest that I agree that I fail to define analog & digital adequately. But the definition you propose, which I'll characterize as "continuous" versus "stepwise" or "binary" variability, isn't the one I'd want to endorse, in part for the very reasons you give. And while there may be nods toward this in the book that sound that way, I don't even think it's what I meant there.

(Celluloid) film is stepwise and not continuous, but it isn't "digital" or "computational" in the most ordinary sense of that term, as opposed to digital video, which is. (There is more to say about this difference but I'll leave this as is for now.) The linguistic phenomena you describe are, to my mind, easily accommodated by this definition of "analog," as are many other natural phenomena that are obviously binary (electric charge on subatomic particles, contrast between light and dark) or stepwise (electron shells). It would be absurd to deny the existence of these phenomena, but whether to categorize them as "analog" or "digital" depends a great deal on our definitions of those terms. Things are, as JW says, getting very metaphorical at this point, and indeed a major part of my book is to look at how computation functions as a metaphor even more than as a literal fact (which I discuss especially with regard to computational theories of mind); but following this usage, I have no problem acknowledging all the phenomena you mention while continuing to call them "analog." Analog phenomena have binary and stepwise characteristics all over the place, but I don't think that is what is meant when people say "ultimately the mind is a computer."

This is one of my fundamental points of unhappiness with the book these days: I tend to use "computation" as a kind of synonym for "calculation with symbolic tokens" (which is, I think, what Chomsky means when he talks about linguistic operations being computational), but I don't actually think this is a good account of what computers do or what computation means in the Turing machine sense. There is a fundamental transformation of symbolic tokens in digital computation that is different from mere stepwise/binary variability. If I'm wrong about this–and obviously many people disagree–then the problem is that virtually everything is computational at some level or another. I think that loses distinctions we don't want to lose.

There is more to say but I want to mull it over for a while & try to put my thoughts together more before I do.

David

Guy said,

July 17, 2016 @ 7:17 pm

@bks

I'm not sure I understand how ambiguity or abstractness in the word "gene" relates to whether language is "digital" in the ordinary sense. A particular sequence of ones and zeros can be interpreted in many different ways in different contexts (as a program, a text file, a video file, etc.), and that doesn't make it any less digital. Some data expressed in spoken language could be characterized as "analog" – for example, an intonation of surprise can express more or less surprise in a basically continuous way – but those things are generally thought of as somewhat peripheral to the core of what language is; the "meat" of it is a sequence of words (or phonemes, or what you like) in a phrase structure that can be encoded digitally in bits without loss of information or any kind of "rounding".

bks said,

July 17, 2016 @ 7:29 pm

Guy, you lost me at "in the ordinary sense." My fault, I'm sure.

yastreblyansky said,

July 17, 2016 @ 7:36 pm

There's something interesting suggested by the difference between that and the sentence the author intended to write (presumably something like "I agree that quite a few of the things I wrote were sloppy").

Back in the day, when the discussion of syntax involved an absurd assumption that every string either was or wasn't an "acceptable" utterance in a given language, and all working linguists found themselves forced to acknowledge that the difference between "acceptable" and "unacceptable" was squishy in practice, we had a whole range of phenomena in which a theoretical digitality corresponded to a real-world analogueness, and no theoretical way of making sense of it.

Golumbia's comically misconceived sentence is an illustration of how we don't always manage to "say what we mean" and indeed probably never quite do. That points in turn to a more productive way of thinking about it, which would be in terms of the gap between a speaker's intentions and production: I mean, we could have been thinking all along, not in formalist terms of whether a string was acceptable or not, but in functional terms of how far speakers had accomplished their intentions, which you might think of as continuously variable. I.e., signifiant is necessarily digital but maybe signifié could be thought of as analogue.

[(myl) There are three interesting questions here: (1) Are "meanings" (in the sense of things that people intend to communicate) sometimes (or even mostly) "squishy" (e.g. gradient, or distributions over discrete alternatives, or both)? (2) Is "grammaticality" similarly squishy? (3) Are there discretely distinct syntactic structures that play a role in (1) and (2)?

My answers would be "yes", "yes", and "yes".]

Roger Lustig said,

July 17, 2016 @ 10:52 pm

In the most prosaic sense (nothing ordinary about it), didn't Nyquist and Shannon (and all the others) set limits on the level of interest to be accorded the "digital/analog" dichotomy?

[(myl) Yes and no. Even if we digitize all the signals, and agree (if we've done it properly) that no information has been lost, the distinction between signals and symbols remains coherent and relevant.]

Bill Benzon said,

July 17, 2016 @ 11:59 pm

Does anyone know when analog and digital began to be discussed in opposition to one another? It's easy enough to think of slide rules as analog devices and the abacus as a digital device, but is that how they were thought of? The Wikipedia entry on analog computer lists a bunch of mechanical, electrical and electronic devices in the late 19th and into the 20th century, but did the people who conceived and used them explicitly conceptualize them as analog in kind?

I've run an ngram query on "analog,digital": both hug the bottom of the chart space until about 1950, and then both start up, with "digital" quickly outstripping "analog." The McCulloch-Pitts neuron dates to the early 1940s and was digital in character. Von Neumann discusses analog and digital in his 1958 The Computer and the Brain; indeed, that contrast is one of the central themes of the book, if not THE central theme. How much contrastive discussion was there before then?

There's certainly been a lot of such discussion since then. FWIW, when I started reading elementary accounts of computing and computers in the 1960s, analog vs. digital was a standard topic. At some point during the personal computing era I began noticing that popular articles no longer mentioned analog computing.

& bks's mention of "gene" is certainly germane. When did biologists start talking of the genetic "code" and of genes as bearers of "information"?

What I'm getting at is that it may be a mistake to treat the terms as having a well-settled meaning that we can take as given. That may in fact be true for a substantial range of cases. But that need not imply that our sense of the meanings of these terms is fully settled. Are we still working on it?

[(myl) The original sense-extension of analog, as in "analogy", was in the context of one signal (for instance sound as a time function of air pressure) being represented by another (in that case sound represented analogously by voltage in a wire). And the original sense-extension of digital was in the context of a continuous time-function being represented by a sequence of numbers (= "digits"). I would have thought that in both cases, the origins were in engineering discussions of telephone technology, but Nyquist's 1928 paper doesn't use either word in this way, nor does Claude Shannon in 1948. The OED's earliest citations to this sense of analog are in discussion of "analog" vs. "impulse-type" computers, e.g.

1941 J. W. Mauchly Diary 15 Aug. in Ann. Hist. Computing (1984) 6 131/2 Computing machines may be conveniently classified as either ‘analog’ or ‘impulse’ types. The analog devices use some sort of analogue or analogy, such as Ohm's Law.., to effect a solution of a given equation. [Note] I am indebted to Dr. J. V. Atanasoff of Iowa State College for the classification and terminology here explained.

Note that the "analogy" here is not between a continuous signal and a series of numbers, but between an equation to be solved in one (discrete or continuous) domain and the physics of some machine's internal operations.

And the original (to modern ears rather opaque) technical term for the representation of a signal as a series of numbers was "pulse code modulation".]
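For readers who want the mechanics, here is a minimal sketch of uniform pulse code modulation in the sense used here: sample a continuous function at regularly spaced times and round each sample to an integer code. The 8 kHz rate, 8-bit depth and 440 Hz test tone are arbitrary assumptions for the example, and real telephone PCM (e.g. ITU-T G.711) used non-uniform, companded quantization rather than the uniform rounding shown.

```python
import math


def pcm_encode(signal, n_samples: int, sample_rate_hz: float, bits: int) -> list[int]:
    """Uniform PCM: sample `signal` at regularly spaced times and round each
    sample (assumed to lie in [-1, 1]) to the nearest signed integer code."""
    levels = 2 ** (bits - 1) - 1              # e.g. 127 for 8-bit codes
    codes = []
    for n in range(n_samples):
        t = n / sample_rate_hz                 # regularly spaced sample times
        x = max(-1.0, min(1.0, signal(t)))     # clip to the representable range
        codes.append(round(x * levels))        # quantize to an integer
    return codes


def pcm_decode(codes: list[int], bits: int) -> list[float]:
    """Map integer codes back to sample values in [-1, 1]."""
    levels = 2 ** (bits - 1) - 1
    return [c / levels for c in codes]


def tone(t: float) -> float:
    """A 440 Hz sine wave, used only as an example input."""
    return math.sin(2 * math.pi * 440 * t)


codes = pcm_encode(tone, n_samples=16, sample_rate_hz=8000, bits=8)
print(codes)
```

Decoding with `pcm_decode` recovers each sample to within half a quantization step (here 0.5/127). Whether the continuous signal between samples is recoverable is a separate question, answered by sampling faster than twice the signal's highest frequency.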

peterv said,

July 18, 2016 @ 12:07 am

David Golumbia said:

"I tend to use "computation" as a kind of synonym for "calculation with symbolic tokens" "

Calculation could imply application of an arithmetic operation, so a better word here would be "manipulation". It is also not obvious to me that computation always involves symbolic tokens. For example, in manipulation of the pixels in an image or sequence of images, as in machine learning applied to image quality enhancement, the pixels are not tokens of anything. The image itself may be a token of something non-computational, although it is hard to see of what exactly in the case of an abstract image. However, arguably, only the pixels are being manipulated, not the image directly.

Similar comments would apply to electronic music, online games, or to any computational system which is not intended as a representation of something external to the computational world.

Francis Boyle said,

July 18, 2016 @ 3:18 am

Well, you could argue that the values (variables) being manipulated represent the pixels in the image but I suspect the more important thing here is the algorithmic manipulation of the values not what they can be used/made to represent.

David Marjanović said,

July 18, 2016 @ 4:36 am

Quantum physics is completely clear on this: time is digital, in that the imprecision can never be so small that a unit of time less than the Planck time (some 10^-43 seconds) could be reasonably said to exist. There is no infinitely short moment.

But of course a Planck time is short enough to be indistinguishable from that for the purposes of, well, most applications.

elessorn said,

July 18, 2016 @ 6:13 am

I'm not sure we can come to a very satisfactory conclusion about whether "analog" or "digital" edges its rival in metaphorical aptness as a descriptor for language in all its complexity. After all, as @bks said above, it's not as if Mark Liberman is arguing instead that language is "digital" either. But say we do all agree that upon any more fine-grained inspection the "analog" metaphor breaks down. It's still possible to understand David Golumbia's usage in an ad hoc contrastive sense, simply taking "analog" as the natural antonym of "digital," where "the digital" just means "species of information amenable to satisfactory representation in digital form and manipulation by digital operations," and "the analog" just points to "species of information not so amenable." In which case, from the post above, I'm not sure it's even clear which of those two categories Mark Liberman would place language in.

And there's something going for such a contrastive definition, in the sense that it seems to me to capture a lot of what is up for debate. Nor does it necessarily require one to accept or reject either way Golumbia et al.'s emphasis on the allegedly neoliberal aspects of the digital humanities (DH), or on DH's function as a form of potential social control. Can "language"–ranging from example sentences to swathes of connected text–be represented in digital form? Clearly yes, even if only in the trivial case of a Word document. But are there representations satisfactory enough that digital manipulations and operations carried out upon them will produce results that have meaning? To the extent that this is doubtful, and DH does not doubt it, we have a potentially huge problem.

What kind of problem? Here I think Mark Liberman's description of the word above is a useful starting-point:

…The pronunciation of a word is infinitely variable; but a word's identity is crisply defined. It's (the psychological equivalent of) a symbol, not a signal — and thereby it's not "gradable and fuzzy", it's not part of some "smooth" continuum of entities, it's an entity that's qualitatively distinct from other entities of the same type……This is not just some invention of scribes and linguists. Pre-literate children have no trouble with word constancy — they easily recognize when a familiar word recurs. And speakers of a language without a generally-used written form have the same ability….

This seems to me to gloss over the properties of words that are least amenable to satisfactory digitization: their meaning structure. Words may be clear, discrete, countable, and (most importantly for DH) labelable. Meanings are not. Digital operations carried out on textual data that only care about word identity are thus wholly unproblematic: concordances and text searches come to mind, as well as the LLog investigation into Hemingway's sentence length referred to by @leoboiko in the last thread, or J.W. Brewer's example there of testing researcher intuitions about dictional novelty in, say, a poem of Keats', by massive google-books-level corpus combing. Humanities scholars have been using digital tools like this as long as such tools have been available.
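As a sketch of the kind of identity-only operation described here, a key-word-in-context concordance needs nothing but string matching on word tokens. The crude lowercase tokenizer is an assumption of this example, and the sample text is a sentence from the post above.

```python
import re


def tokenize(text: str) -> list[str]:
    """Lowercased word tokens; a deliberately crude tokenizer."""
    return re.findall(r"[a-z']+", text.lower())


def concordance(text: str, keyword: str, width: int = 3) -> list[str]:
    """Key-word-in-context lines: each occurrence of `keyword` with up to
    `width` words on either side. Only word identity is consulted."""
    words = tokenize(text)
    lines = []
    for i, word in enumerate(words):
        if word == keyword:
            left = " ".join(words[max(0, i - width):i])
            right = " ".join(words[i + 1:i + 1 + width])
            lines.append(f"{left} [{word}] {right}")
    return lines


sample = ("In English, we have the words red and blue; in French, rouge and bleu. "
          "In neither language is purple some kind of sum or average or other "
          "interpolation between the two.")
for line in concordance(sample, "and", width=2):
    print(line)
```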

But when it comes to meaning, everything is different, and I think this may be what Golumbia is thinking of in his analog vs. digital contrast. There's not even any need to go over all the reasons for doubting that meanings are at all discrete, or that meaning structures of individual words have any absolute existence outside of their contrast with other words. As long as we can agree that words are the "crisply distinct" anchors to meanings that are much less so, then we can see the problem.

We can label "purple" as word #351678, but the meaning anchored to that word in human usage will not in any way adhere to that representation, nor accompany it through any number of digital operations. At the same time–granting that this is a subjective judgment–questions of meaning are often the most interesting to literary scholars of any generation, from the 19th century to Digital Humanists today. The result? Studies like the one referred to today in the Atlantic (The 200 Happiest Words in Literature by Adrienne LaFrance):

From the article:

There are six main types of stories in fiction. That’s what computer scientists found after teaching a machine to map the emotional arc of a huge corpus of literature. The overall research they did is fascinating (I wrote about it in greater detail here), but several smaller components of the work are compelling in their own right.

To prepare a machine to carry out a sentiment analysis, for instance, computer scientists had to assign a happiness index to 10,222 individual words. That way, as the machine scanned passages from books, it could assess the emotional arc of the narrative.

But how do you decide how happy a word is?

In this case, researchers at the University of Vermont and the University of Adelaide enlisted the help of the crowd. Using the website Mechanical Turk, where anyone can sign up for odd jobs—many of them related to academic research—researchers asked people to rate the happiness quotient of the words they encountered.

We have discovered that the elements of the periodic table have dependable atomic weights that allow trace analysis of rocks on Earth, Mars, Pluto, anywhere. Words don't work that way, maybe not even within the microuniverse of one individual's words over the course of a day, but the researchers essentially created their own 10,222-element table of English vocabulary, where each word–from "laughter" to "index" to "terrorist", per the article–has a unique sort of universal "happiness weight." The applications of such a key, once produced, are certainly limitless, but who knows how meaningful they are? Maybe "analog" isn't the best way to evoke the resistance of meaning to digitization. But I bet what one thinks of DH depends greatly on precisely how one understands projects like this one, whatever we call the philosophies of language that make them seem dubious.
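A minimal sketch of how a word-level table like this gets used: average the scores of the rated words in a sliding window to trace an "arc". The scores in `HAPPINESS` are invented placeholders (only the words "laughter" and "terrorist" come from the article), not the crowd-sourced ratings from the study, and the windowing scheme is an assumption rather than the researchers' actual method.

```python
from typing import Optional

# Invented placeholder scores on a 1-9 scale; NOT the study's crowd-sourced ratings.
HAPPINESS = {"laughter": 8.5, "love": 8.4, "rain": 5.0, "lost": 2.8, "terrorist": 1.3}


def happiness_arc(words: list[str], window: int) -> list[Optional[float]]:
    """Mean score of the rated words in each sliding window of `window` words.
    Unrated words are ignored; a window with no rated words yields None."""
    arc = []
    for start in range(len(words) - window + 1):
        rated = [HAPPINESS[w] for w in words[start:start + window] if w in HAPPINESS]
        arc.append(sum(rated) / len(rated) if rated else None)
    return arc


text = "love and laughter then rain then lost".split()
print(happiness_arc(text, window=3))
```

Note that "lost" contributes 2.8 whether someone lost a child or lost their fear; that context-blindness is exactly the worry raised above.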

Leonid Boytsov said,

July 18, 2016 @ 7:53 am

The idea that language is not discrete is not absurd. Furthermore, it seems to have become a central assumption in automatic natural language processing.

It is not hard to see that precisely defining the meaning of a word, or even the word itself, is difficult. There are scientists who think that there is a continuum of word meanings.

Is it an infinite number of meanings? I don't know, but it's definitely a lot of them FOR A SINGLE SYMBOLIC REPRESENTATION. How humans deal with this I don't know, but there are likely regularities that help them. For example, in agglutinative languages the "number of words" can be seen as infinite, but there are strict rules for creating new "words".

The fact that we use a discrete symbolic system to write language may not mean much. It may be just a (relatively) easy-to-use abstraction.

Jonathan said,

July 18, 2016 @ 9:17 am

'And the original (to modern ears rather opaque) technical term for the representation of a signal as a series of numbers was "pulse code modulation"'

Not opaque to anyone involved in modern audio: every Blu-ray player and most audiovisual receivers give users the option to output PCM.

[(myl) I didn't mean that the term was necessarily unfamiliar, just that its compositional meaning is obscure: Do you actually know what a "pulse code" is, and what it means to "modulate" it? Or, more specifically, why the representation of a continuous time function as a series of integers representing its sampled values at regularly-spaced time points should be called "pulse code modulation"? Congratulations on your grasp of technological trivia if so!]

Bill Benzon said,

July 18, 2016 @ 10:54 am

Thanks, Mark. So the contrast btw analog and digital was originally made in a relatively narrow technical domain. So when we're trying to figure out whether or not or in what way language is analog or digital we're extending the contrast from a situation where it was relatively well-defined to a very different situation. In the case of language we don't really know what's going on and we're using the analog/digital contrast as a tool for helping us figure it out. And the same is certainly true for nervous systems.

In the case of David's example of celluloid film we have a technology that predates that analog/digital contrast. In the context of the distinction I find it reasonable to think of the discrete presentation frames as digital in character, but I don't off-hand see that the digital concept gives further insight into how the film technology functions. As for digital video or high resolution 'film', the effect on the human nervous system is pretty much the same as that of celluloid film. The frame rate may be different, but in all cases it exceeds the flicker-fusion rate of the visual system so that what we see is continuous motion.

[(myl) FWIW, the distinction in mathematics between "discrete" and "continuous" (in various senses of both) goes back quite a ways, as does the idea of mathematical concepts as symbolically-encoded propositions. But in the end I don't think it's helpful to try to decide on a single binary global classification of issues like whether a function is differentiable, or what it means to describe a band-limited time function as a Fourier series, or whether digitally-encoded music is the same as or different from an analog tape recording, or whether words are discretely encoded in the brain as sounds or as meanings or in whatever other ways. Though all such questions are conceptually inter-related in various ways, each has its own properties, and trying to find one simple metaphor to rule them all is a recipe for confusion.]

cameron said,

July 18, 2016 @ 11:09 am

The question above about time leads back to the source of the distinction David Golumbia draws between the stepwise and the continuous, to which Golumbia alludes in mentioning celluloid film. The root of this distinction is in the philosophy of Henri Bergson (one of the major influences on Deleuze). It was Bergson who stressed that time as studied by science is non-continuous; he contrasted that notion of time with "duration", his term for continuity, and for difference itself.

John said,

July 18, 2016 @ 11:41 am

Bill Benzon said:

When did biologists start talking of the genetic "code" and of genes as bearers of "information"?

According to a review I was just reading in a recent New York Review of Books, this happened after WWII, influenced by the development of information theory and cybernetics. From the review:

"Information thinking, Cobb claims, played a crucial part in helping to define what came to be called the "coding problem," a problem that dominated biology in the 1950s and 1960s."

The review is "DNA: 'The Power of the Beautiful Experiment,'" by H. Allen Orr, discussing Matthew Cobb's book "Life's Greatest Secret." Link: http://www.nybooks.com/articles/2016/06/09/dna-power-beautiful-experiment/

Jess Tauber said,

July 18, 2016 @ 12:29 pm

elessorn wrote:

<>

This is unfortunately not true, as chemists discovered to their chagrin. Atomic weights VARY depending on the relative contributions of individual isotopes to the total mixture. Chemical, physical, even BIOLOGICAL processes can alter those mixtures, so the further afield you go, the less you can expect identical values for any element. Only the atomic NUMBER is constant for an element: it is the number of positive elementary charges in the nucleus (thus the proton count).

Jess Tauber said,

July 18, 2016 @ 12:45 pm

Sorry, for some reason the quote I tried to post didn't show up between the angle brackets. Here it is: "We have discovered that the elements of the periodic table have dependable atomic weights that allow trace analysis of rocks on Earth, Mars, Pluto, anywhere."

Bill Benzon said,

July 18, 2016 @ 12:57 pm

"But in the end I don't think it's helpful to try to decide on a single binary global classification of issues like … Though all such questions are conceptually inter-related in various ways, each has its own properties, and trying to find one simple metaphor to rule them all is a recipe for confusion."

Yes. That's what I'm thinking at the moment.

Jonathan said,

July 18, 2016 @ 1:41 pm

Mark: I'm old, so I remember "pulse dialing." That, plus a little background in Fourier transforms, suffices to limit the opacity. But I now understand what you meant.

Idran said,

July 18, 2016 @ 2:23 pm

@David Marjanović: That's a common misconception about the Planck time, but it doesn't actually say any such thing. The Planck time is just the time it would take light to travel one Planck length; there's no reason to think it has any physical significance. For example, while you wouldn't be able, even in principle, to measure two events separated by less than the Planck time, due to fundamental limitations, there's no reason why you couldn't measure two events separated by a non-integral multiple of the Planck time. You couldn't measure two events as being 0.76*tP apart, but there would be no physical obstacle to measuring two events as being, say, 13.54*tP apart, which would be impossible if time were actually discretized. And all of physics, even quantum physics, fundamentally assumes that spacetime is continuous.
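For reference, the definitions this comment relies on: the Planck length is l_P = sqrt(ħG/c^3), and the Planck time is the time light takes to cross it, t_P = l_P/c = sqrt(ħG/c^5). The sketch below simply evaluates both from CODATA 2018 constants; it illustrates the definitions and says nothing either way about whether spacetime is continuous.

```python
import math

# CODATA 2018 values, SI units
HBAR = 1.054571817e-34   # reduced Planck constant, J*s
G = 6.67430e-11          # Newtonian constant of gravitation, m^3 kg^-1 s^-2
C = 299_792_458.0        # speed of light in vacuum, m/s (exact)

planck_length = math.sqrt(HBAR * G / C**3)   # roughly 1.6e-35 m
planck_time = planck_length / C              # equivalently sqrt(HBAR * G / C**5)

print(f"Planck length: {planck_length:.4e} m")
print(f"Planck time:   {planck_time:.4e} s")
print(f"13.54 t_P:     {13.54 * planck_time:.4e} s")
```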

MikeA said,

July 18, 2016 @ 3:58 pm

A few thoughts from an admitted non-expert.

1) My first exposure to "Pulse Code Modulation" (admittedly in a telephone context) distinguished it from "Pulse Amplitude Modulation".

The latter was sampled, but not quantized. The former was sampled _and_ quantized. It was typically used internally within an electronic switching system to "multiplex" multiple audio streams on a single bus or signal.
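A minimal sketch of the time-division multiplexing mentioned here: several sampled channels share one bus by taking turns, one slot per channel per frame. Whether a slot carries an unquantized amplitude (PAM) or an integer code (PCM) doesn't change the interleaving. The channel values and function names are hypothetical, and real switching systems add framing and signalling bits that this omits.

```python
from itertools import chain

def tdm_multiplex(streams):
    """Interleave equal-length sample streams into one sequence of slots:
    frame k carries sample k of stream 0, then of stream 1, and so on."""
    if len({len(s) for s in streams}) > 1:
        raise ValueError("streams must have equal length")
    return list(chain.from_iterable(zip(*streams)))

def tdm_demultiplex(bus, n_streams):
    """Recover each stream by taking every n_streams-th slot."""
    return [bus[i::n_streams] for i in range(n_streams)]

# Three hypothetical 8-bit PCM voice channels, four samples each.
a = [12, 40, -7, 0]
b = [-3, -3, 15, 90]
c = [127, -128, 1, 2]
bus = tdm_multiplex([a, b, c])
print(bus)  # [12, -3, 127, 40, -3, -128, -7, 15, 1, 0, 90, 2]
assert tdm_demultiplex(bus, 3) == [a, b, c]
```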

2) Audio tape recording is not (IMHO) fully "analog". The recording bias effectively samples the continuous audio signal, producing longer and shorter areas of magnetization in each direction. On playback, the resulting signal is low-pass-filtered to recover (an approximation of) the original signal.

Other than the "multiplexing" part, this is in my mind similar to the PAM above.

3) I consider the "locks" of Babbage's Difference Engine to be the distinguishing feature that makes it "digital" (not that he used the word), because the state of the machine is effectively quantized after every operation: a wheel whose position could be interpreted as "meaning" 2.87… or 3.15…, as an _analog_ of the result of a (mechanical) addition, is quantized to "by golly, 3".

To me, the distinguishing feature of "digital" is that it allows robust systems to be made from imperfect components. That was fortunate, as in Babbage's time the Industrial Revolution was becoming very good at making copious quantities of imperfect components. We still are.

[(myl) Your last point is a crucial one, and relevant to the current discussion. The essentially "digital" nature of words is crucial to their function as a reliable means of transmitting messages — interpretations may vary, but linguistic messages can be reliably retained and transmitted as scripture for millennia.]
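A toy model of the point about robust systems from imperfect components, and of the "locks" on Babbage's wheels: pass a number through a long chain of copying stages, each of which adds a small random error. Without regeneration the errors accumulate as a random walk; if each stage snaps the value back to the nearest integer (the digital move), any error smaller than half a step is erased. The noise model, parameters and function name are hypothetical.

```python
import random

def relay(value, stages, noise, regenerate, rng):
    """Pass a value through a chain of imperfect copying stages.
    Each stage adds a uniform random error in [-noise, noise]; if
    `regenerate` is True the stage then snaps the value to the nearest
    integer, like a wheel settling into a detent."""
    for _ in range(stages):
        value += rng.uniform(-noise, noise)
        if regenerate:
            value = round(value)
    return value

rng = random.Random(0)
analog = relay(3.0, stages=1000, noise=0.1, regenerate=False, rng=rng)
digital = relay(3.0, stages=1000, noise=0.1, regenerate=True, rng=rng)
print(f"analog chain ends at {analog:.3f}")   # a random walk away from 3.0
print(f"digital chain ends at {digital}")     # 3
```

The condition that matters is noise < 0.5: once the per-stage error can exceed half the spacing between symbols, regeneration can snap to the wrong integer, and the digital chain fails too, though in discrete jumps rather than by gradual drift.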

tangent said,

July 18, 2016 @ 11:57 pm

I think what Golumbia is aiming for is not the discrete/continuous distinction, but the type of relation that computational values have with the world.

In a prototypical analog computer, each voltage represents a "real thing": the position of something, or its speed, or its acceleration. This is the "analogy" the machine makes.

So if I built a digital computer that solves a differential equation by tracking the physical quantities, Golumbia might well consider that analog. It can be built to be indistinguishable from its analog sibling.
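To make that hypothetical concrete: an analog computer typically solves a differential equation by chaining integrators, with one voltage standing for acceleration, one for velocity and one for position. The sketch below does the same bookkeeping digitally for a frictionless mass on a spring, x'' = -(k/m)x. The oscillator, the step size and the semi-implicit Euler scheme are illustrative choices, not anything specified in the comment.

```python
import math

def simulate_spring(x0, v0, k_over_m, dt, steps):
    """Integrate x'' = -(k/m) x the way an analog computer's integrator
    chain would: acceleration feeds velocity, velocity feeds position.
    Uses semi-implicit (symplectic) Euler steps."""
    x, v = x0, v0
    trajectory = [x]
    for _ in range(steps):
        a = -k_over_m * x      # acceleration from the current position
        v += a * dt            # integrate acceleration -> velocity
        x += v * dt            # integrate velocity -> position
        trajectory.append(x)
    return trajectory

# Roughly one full period of an oscillator with k/m = 1 (period 2*pi s).
dt = 0.001
steps = round(2 * math.pi / dt)
traj = simulate_spring(1.0, 0.0, 1.0, dt, steps)
print(traj[-1])  # close to the starting position 1.0
```

Each variable here "stands for" a physical quantity just as a voltage would, which is the sense in which such a program could be indistinguishable from its analog sibling.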

If I build a computer using voltages and vacuum tubes, but it operates a perceptron network to compute things like the "positive mood" of an input text, Golumbia might want to call that digital.

Note how this privileges physics! It says, first, that a position measurement belongs to the charmed circle of "real" things worth making an analog of, and even that a /second derivative/ does too.

L said,

July 20, 2016 @ 1:49 pm

"The pronunciation of a word is infinitely variable; but a word's identity is crisply defined. It's (the psychological equivalent of) a symbol, not a signal — and thereby it's not "gradable and fuzzy", it's not part of some "smooth" continuum of entities, it's an entity that's qualitatively distinct from other entities of the same type."

I'm not sure about this at all.

I think it's true for some words. There are an infinite number of ways to pronounce "barometer," but as long as you pronounce it "barometer," it means the same thing. Barometer.

But take as an example the word "yes."

"Does this jacket look good on me?"

"Yes."

There are an infinite number of ways to pronounce "yes," and they deliver a very wide variety of different meanings. Which leads me to think one of the following is true:

(a) although the possible pronunciations are infinite and continuous, the possible meanings (though many) are finite and discrete; language is not analog.

(b) although the pronunciations and the meanings are infinite and continuous, that phenomenon does not fall under "language," but under something else; language is not analog.

(c) the pronunciations and the meanings that go with them are infinite and continuous, and this is a property of language; language is, at least sometimes, analog.

Apologies if this has already been addressed. I admit that some of these comments are a little hard for me to follow.

peterv said,

July 21, 2016 @ 12:14 am

@L

The meanings of a word or statement could be both discrete and infinite. The person (let us call her Alice) answering "Yes" to your question, "Is statement P true?", could be intending to convey any one of an infinite number of meanings, depending on the relationship you have with her. If you tend to disbelieve everything she says, and she knows this about you, she may be bluffing in her answer. But you may know that she is likely to be bluffing, so she may try to double-bluff, etc. Moreover, her answer may not be about the truth or otherwise of the statement, but about what she wants you to think is her belief about the truth or otherwise of the statement.

So here's an infinite list of some of the meanings, all discrete, that Alice could intend to convey with that one word, "Yes":

That P is true.

That Alice believes that P is true.

That Alice desires you to believe that P is true.

That Alice desires that you believe that Alice desires you to believe that P is true.

That Alice desires you to not believe that P is true.

That Alice desires that you believe that Alice desires you to not believe that P is true.

That Alice desires you to believe that P is not true.

That Alice desires that you believe that Alice desires you to believe that P is not true.

And so on, ad infinitum.
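A short sketch of how a finite rule can enumerate a list like this one: every meaning is a distinct, discrete string, yet the sequence never ends (countably infinite, like the integers, rather than a continuum like the real numbers). The three core clauses and the by-depth ordering are assumptions made for illustration, so the order differs slightly from the list above; ordering by nesting depth guarantees that every statement turns up at some finite position.

```python
from itertools import count, islice

def alice_meanings(p="P"):
    """Yield an endless sequence of distinct meanings Alice's "Yes" could
    convey, ordered by nesting depth."""
    yield f"That {p} is true."
    yield f"That Alice believes that {p} is true."
    cores = [
        f"believe that {p} is true",
        f"not believe that {p} is true",
        f"believe that {p} is not true",
    ]
    for depth in count():
        for core in cores:
            clause = f"Alice desires you to {core}"
            # Wrap the clause once per level of "what Alice wants you to think".
            for _ in range(depth):
                clause = f"Alice desires that you believe that {clause}"
            yield f"That {clause}."

for meaning in islice(alice_meanings(), 8):
    print(meaning)
```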