Google n-gram apostrophe problem fixed

Will Brockman of Google explains that

There was a problem with apostrophes in the Ngram viewer front end – my fault, and I corrected it yesterday (1/1/2011).

This is admirably quick work, especially on New Year's day (!) — I wrote about problems with apostrophes on Dec. 28 and 29.

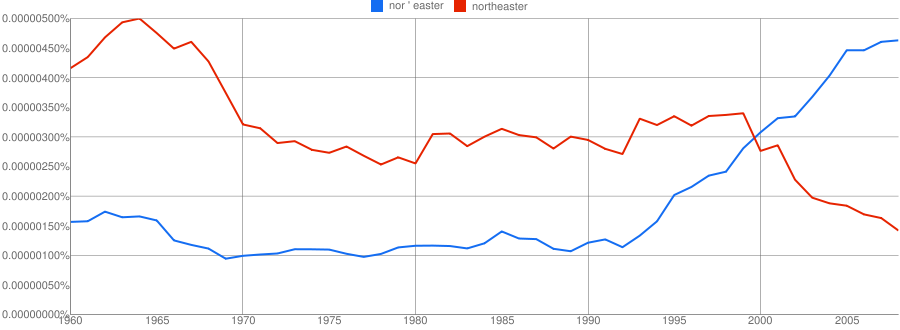

Now we can see the growth of nor'easter relative to northeaster, at least in whatever selection of published books the "American English" corpus includes:

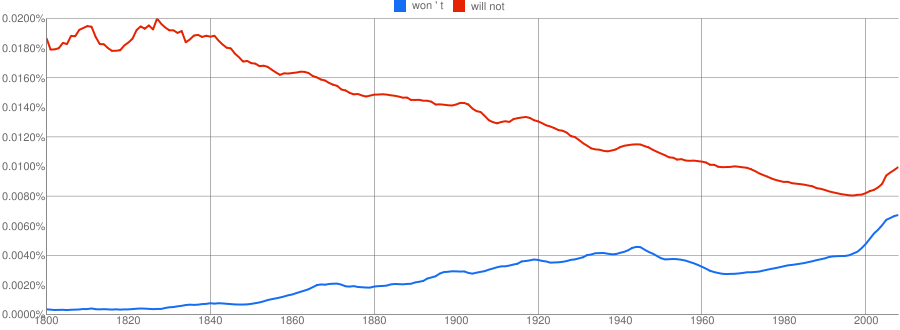

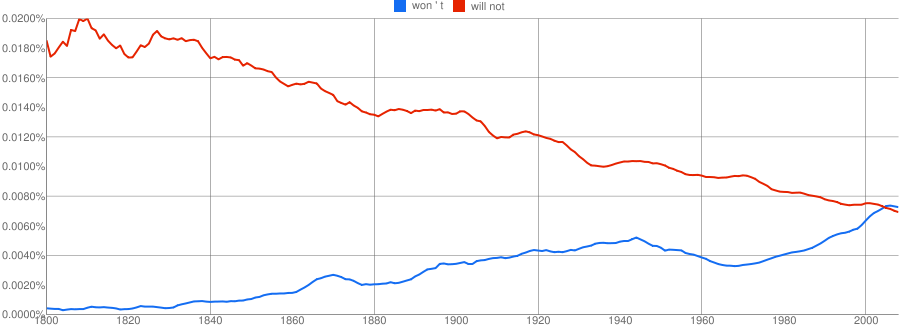

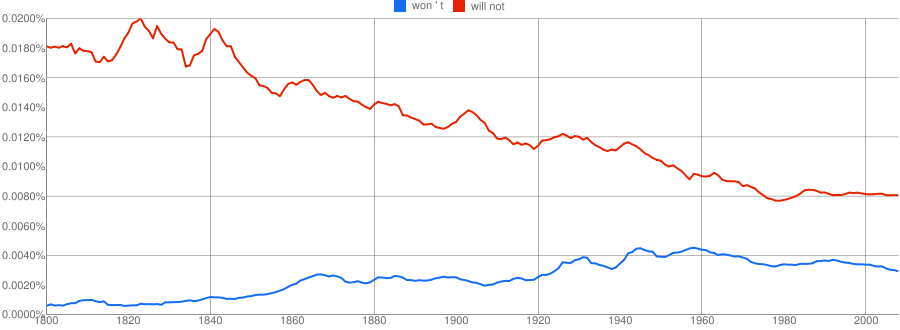

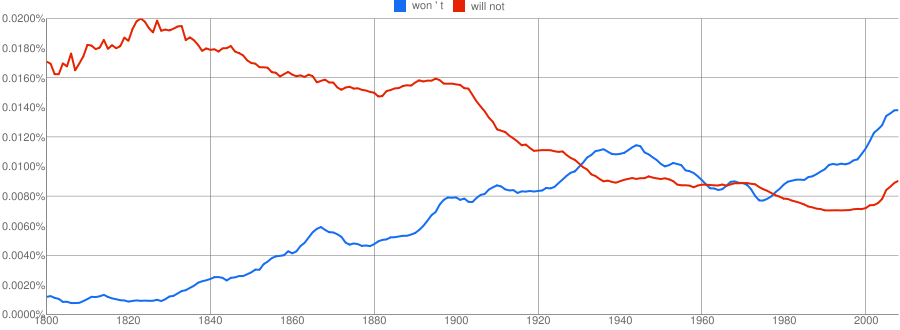

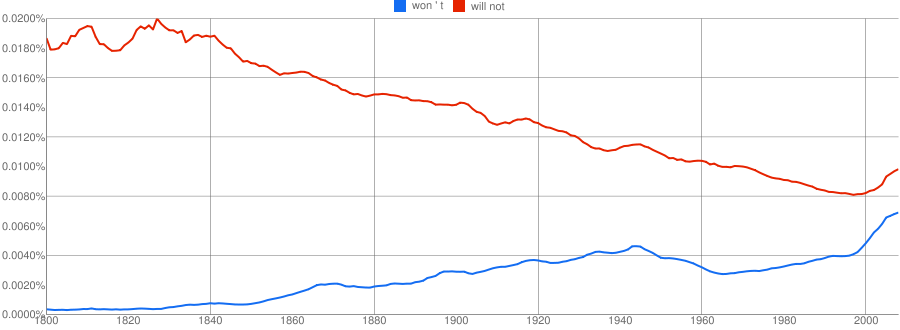

Uncertainty about exactly what's in the corpus is more problematic in considering the historical development of contractions. Consider the graphs for overall won't vs. will not in several sub-corpora — overall English:

The differences kind of make sense, but it's not clear what kind of sense — other differences would have made sense as well, and it's hard to know what these particular differences mean. If we had access to a reasonable sample of the underlying corpus and metadata — even a fraction of the part up to 1922 — we would have a better idea of what any one of these graphs really means about the development of the language. And we could look at things like the differences between narration and dialogue in fiction, or the development of different forms in the spoken parts of plays.

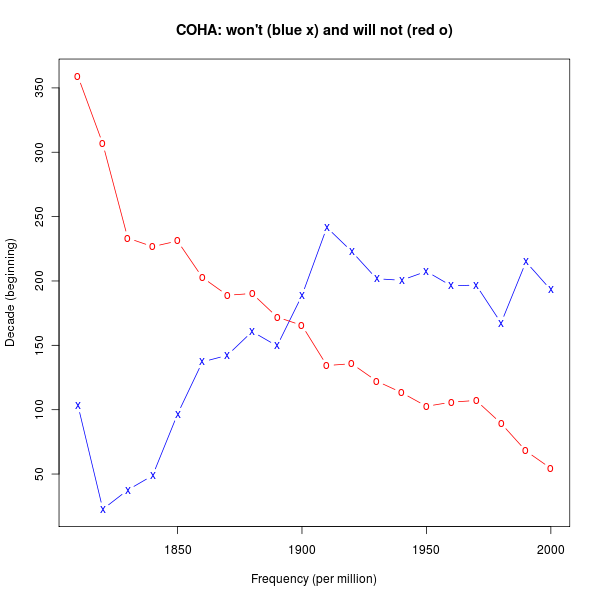

For a point of comparison, here's the overall plot from the COHA corpus, whose shape is (in some ways) different from all of the above:

[One caveat: As I understand it, the "percentages" across the Google n-gram counts are not comparable for different orders of n-grams, because not all 2-grams are retained in the collection, an even smaller percentage of 3-grams is retained, etc. If this is correct, then since "won't" is a 3-gram while "will not" is a 2-gram, we can't conclude anything from the Google graphs about their frequencies relative to one another. However, the 2-gram [won '] and the 3-gram [won ' t] seem to yield identical results, so perhaps this is wrong.

Update — as Will Brockman explains in a comment below, I was indeed wrong about this. The percentages are calculated with the denominator of the proportion being the total number of n-grams in the book collection being used, not the total in the subset that is published (which for n>=2 is limited to n-grams that occur at least 40 times).]
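To make the difference between the two denominators concrete, here is a minimal Python sketch on a toy "book". It is not Google's code: the function names, the toy token list, and the way the threshold is applied are all assumptions made for illustration. Only the threshold of 40 and the two candidate denominators come from the discussion above.

```python
from collections import Counter

MIN_COUNT = 40  # publication threshold mentioned above (n-grams seen at least 40 times)

def count_ngrams(books, n):
    """Count every n-gram of order n across a list of tokenized books."""
    counts = Counter()
    for tokens in books:
        counts.update(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))
    return counts

def frequencies(books, query):
    """Return (share of all n-grams in the books, share of published n-grams only)."""
    counts = count_ngrams(books, len(query))
    published = {g: c for g, c in counts.items() if c >= MIN_COUNT}
    raw = published.get(tuple(query), 0)
    total_in_books = sum(counts.values())
    total_published = sum(published.values())
    by_books = raw / total_in_books if total_in_books else 0.0
    by_published = raw / total_published if total_published else 0.0
    return by_books, by_published

# Hypothetical toy book: a frequent contraction, plus a frequent 2-gram ("a", "b")
# whose 3-gram contexts are all rare and so fall below the threshold.
toy_book = ["won", "'", "t"] * 50
for i in range(60):
    toy_book += ["a", "b", f"x{i}"]
books = [toy_book]

bigram = frequencies(books, ["won", "'"])
trigram = frequencies(books, ["won", "'", "t"])
print("2-gram:", bigram)
print("3-gram:", trigram)
```

With the whole-book denominator, the 2-gram and the 3-gram come out almost the same, because the totals differ by a single n-gram. With the published-subset denominator, the 3-gram looks much more frequent than the 2-gram, since more of the rare 3-grams have been filtered out of its total.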

Mr Fnortner said,

January 2, 2011 @ 8:05 pm

The upward trend in use of nor'easter began in 1993, and the decline of northeaster began in 2000. It appears that it took about seven years for the northeaster crowd to throw in the towel. What's not known is what happened in 1993 to cause the sudden increase in use. Any ideas?

[(myl) Without smoothing, the structure is much less clear. There is probably less here than meets the eye; but it's hard to tell without knowing how many hits in which books are really responsible for the counts.]

Mark Davies said,

January 2, 2011 @ 8:29 pm

>> For a point of comparison, here's the overall plot from the COHA corpus, whose shape is different from all of the above:

Mark, I think you might want to look at the charts again. You've reversed the color of the lines for COHA, which mistakenly makes it appear that the trends are just the opposite of Google Books. In all of the Google Books charts, you have "will not" as red (and decreasing), and "won't" as blue (and increasing).

In both COHA and Google Books, "will not" has been decreasing almost decade by decade since the mid-1800s, and "won't" has been increasing since the 1800s (though a bit more bumpy in the 1900s, in both COHA and Google Books).

[(myl) Sorry for confusing you (and perhaps others). I recognize that the general pattern (of declining "will not" and increasing "won't") is the same across all sources. What I meant was that the COHA graph shows many details that are different from the others: the frequencies crossing before 1900 (which seems plausible to me), "won't" leveling off after that, a rapid fall in "will not" from 1810 to 1830, etc. — patterns that are different in detail from the (already differing) details of the various Google n-grams results.

As for the colors, the graphs are correctly labelled (and interpreted), but you're right that I've reversed the colors (and the labels) in the COHA graph relative to the Google n-gram graphs. I'll fix that.]

Will Brockman said,

January 2, 2011 @ 10:52 pm

Mark,

About comparing results for 2-grams and 3-grams: I also had this question when I started working with this corpus.

The reason we can put those two time-series on the same axes is that we divide the raw counts for a given n-gram, not by "the number of n-grams in the Google n-gram corpus", but "the number of n-grams in the books used for the Google n-gram corpus". So the denominators for 2-gram and 3-gram frequencies are essentially the same, instead of declining for larger n because of the "at least 40 counts" filtering. (Admittedly, each book has one fewer 3-grams than 2-grams, but that difference isn't significant at this scale.)

So that's why, as you note, the queries "won '" and "won ' t" give essentially identical results – as they should.

Craig said,

January 2, 2011 @ 11:12 pm

@Mr Fnortner, perhaps the 1993 uptick is related to 1991's Halloween Nor'easter (the so-called Perfect Storm), which I think should probably be written "Hallowe'en Nor'easter" for consistency. ;)

Ed said,

January 3, 2011 @ 12:23 am

i know the practical answer is "because that's how they've been parsed", but why is "won't" a trigram? it seems like it should be treated as a unigram.

Dave said,

January 3, 2011 @ 2:12 am

For those that find these ngrams as interesting as I do, we've created the following Facebook group to share the ones we've found:

http://www.facebook.com/nteresting.ngrams

Coby Lubliner said,

January 3, 2011 @ 11:25 am

I don't know if any of the corpora allow such a search, but for me the really interesting question is the change in contraction use in expository prose (including journalism) as opposed to quoted dialogue, personal letters, poetry and the like.

I am also curious about 'tis versus it's.

Lucy H said,

January 3, 2011 @ 7:29 pm

Ed,

Based on iching's comment on the "nor'easter" post, referring to COCA/COHA tokenization, it appears that it's fairly standard to treat contractions as something other than unigrams.

Also, it helps deal with weird variants, like you see here: "it 's" is treated exactly the same as "it's ". Of course, this tokenization does NOT handle "would n't" (a 4-gram!) well, but it does make sure that "wouldn 't", "wouldn't", and "wouldn' t" all get treated the same.

I'm curious, though, about what standards, if any, exist for tokenizing contractions; I certainly don't understand why the apostrophe is its own token in this system. It looks like COCA tends to treat the second word in the contraction as its own token, apostrophe and all ("won't" -> "wo n't"; "we're" -> "we 're", etc). So there, "won't" is a bigram. Mark Davies, ML, is there a consensus out there on how to tokenize contractions?
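The two tokenization schemes described above can be sketched in a few lines of Python. This is only an illustration built from the examples in this comment: the function names and regular expressions are assumptions, not the actual rules used by Google or by COCA, and the second function does not handle punctuation attached to a word.

```python
import re

def split_apostrophes(text):
    """Apostrophe as its own token: runs of word characters, or single punctuation marks."""
    return re.findall(r"\w+|[^\w\s]", text)

# Hypothetical pattern for splitting off "n't" or an apostrophe-initial ending.
_CLITIC = re.compile(r"^(\w+?)(n't|'\w+)$", re.IGNORECASE)

def split_clitics(text):
    """Keep the apostrophe with the second part, as in "wo n't" and "we 're"."""
    tokens = []
    for word in text.split():
        match = _CLITIC.match(word)
        tokens.extend(match.groups() if match else [word])
    return tokens

print(split_apostrophes("won't"))      # three tokens
print(split_apostrophes("would n't"))  # four tokens
print(split_clitics("won't we're"))    # two tokens per contraction
```

Under the first scheme, "wouldn 't", "wouldn't", and "wouldn' t" all yield the same three tokens, while "would n't" yields four. Under the second, "won't" comes out as two tokens.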

Will said,

January 3, 2011 @ 9:14 pm

If you didn't treat the parts of a contraction as separate tokens, then you couldn't do this neat graph:

http://ngrams.googlelabs.com/graph?content=%27+t&year_start=1800&year_end=2008&corpus=5&smoothing=5

Rick S said,

January 4, 2011 @ 1:36 am

What I find interesting in these graphs is the apparent decline in the sum of the frequencies of "won't" and "will not", a decrease of about 40% from a peak around 1820 to a minimum around 2000. The decline is very gradual from 1820 to 1942, then plummets until 1962. After that year, British English continues trending downward until 1980 before stabilizing, while American English begins to creep upward, barely. Remarkably, English Fiction seems immune to whatever is causing this trend.

Does this reveal a cultural phenomenon reflected in printed nonfiction — say, perhaps, an increase in newspaper readership? Or is it some kind of technical artifact? Without access to the source works, it may be impossible to know.

Paul said,

December 31, 2013 @ 3:03 pm

"Didn't" and "did not" are apparently still not distinguished… an attempt to compare them yields a message saying "Replaced didn't with did not to match how we processed the books" and a single double-labelled curve ("did not" above "did not").