Bishnu Atal


Here at Interspeech 2018, the first presentation will be by Bishnu Atal, winner of the ISCA medal for scientific achievement. Though you probably don't know it, Bishnu has had an enormous impact on your life — at least if you ever talk on a cell phone, or listen to music on iTunes or Spotify or Amazon or wherever.

Here's the abstract for his talk — "From Vocoders to Code-Excited Linear Prediction: Learning How We Hear What We Hear":

It all started almost a century ago, in 1920s. A new undersea transatlantic telegraph cable had been laid. The idea of transmitting speech over the new telegraph cable caught the fancy of Homer Dudley, a young engineer who had just joined Bell Telephone Laboratories. This led to the invention of Vocoder – its close relative Voder was showcased as the first machine to create human speech at the 1939 New York World's Fair. However, the voice quality of vocoders was not good enough for use in commercial telephony. During the time speech scientists were busy with vocoders, several major developments took place outside speech research. Norbert Wiener developed a mathematical theory for calculating the best filters and predictors for detecting signals hidden in noise. Linear Prediction or Linear Predictive Coding became a major tool for speech processing. Claude Shannon established that the highest bit rate in a communication channel in presence of noise is achieved when the transmitted signal resembles random white Gaussian noise. Shannon’s theory led to the invention of Code-Excited Linear Prediction (CELP). Nearly all digital cellular standards as well as standards for digital voice communication over the Internet use CELP coders. The success in speech coding came with understanding of what we hear and what we do not. Speech encoding at low bit rates introduce errors and these errors must be hidden under the speech signal to become inaudible. More and more, speech technologies are being used in different acoustic environments raising questions about the robustness of the technology. Human listeners handle situations well when the signal at our ears is not just one signal, but also a superposition of many acoustic signals. We need new research to develop signal-processing methods that can separate the mixed acoustic signal into individual components and provide performance similar or superior to that of human listeners.

I was lucky enough to have an office down the hall from Bishnu at Bell Labs for 15 years, and I learned an enormous amount from him. In my obituary for Manfred Schroeder, I discussed several key pieces of their work together: linear predictive coding of speech, perceptual coding, and code-excited linear prediction.

One of my favorite pieces of Bishnu's work has not had nearly as much impact (though Google Scholar still gives it 351 citations), but maybe deserves rebirth in a new form: "Efficient coding of LPC parameters by temporal decomposition", ICASSP 1983. Part of the abstract:

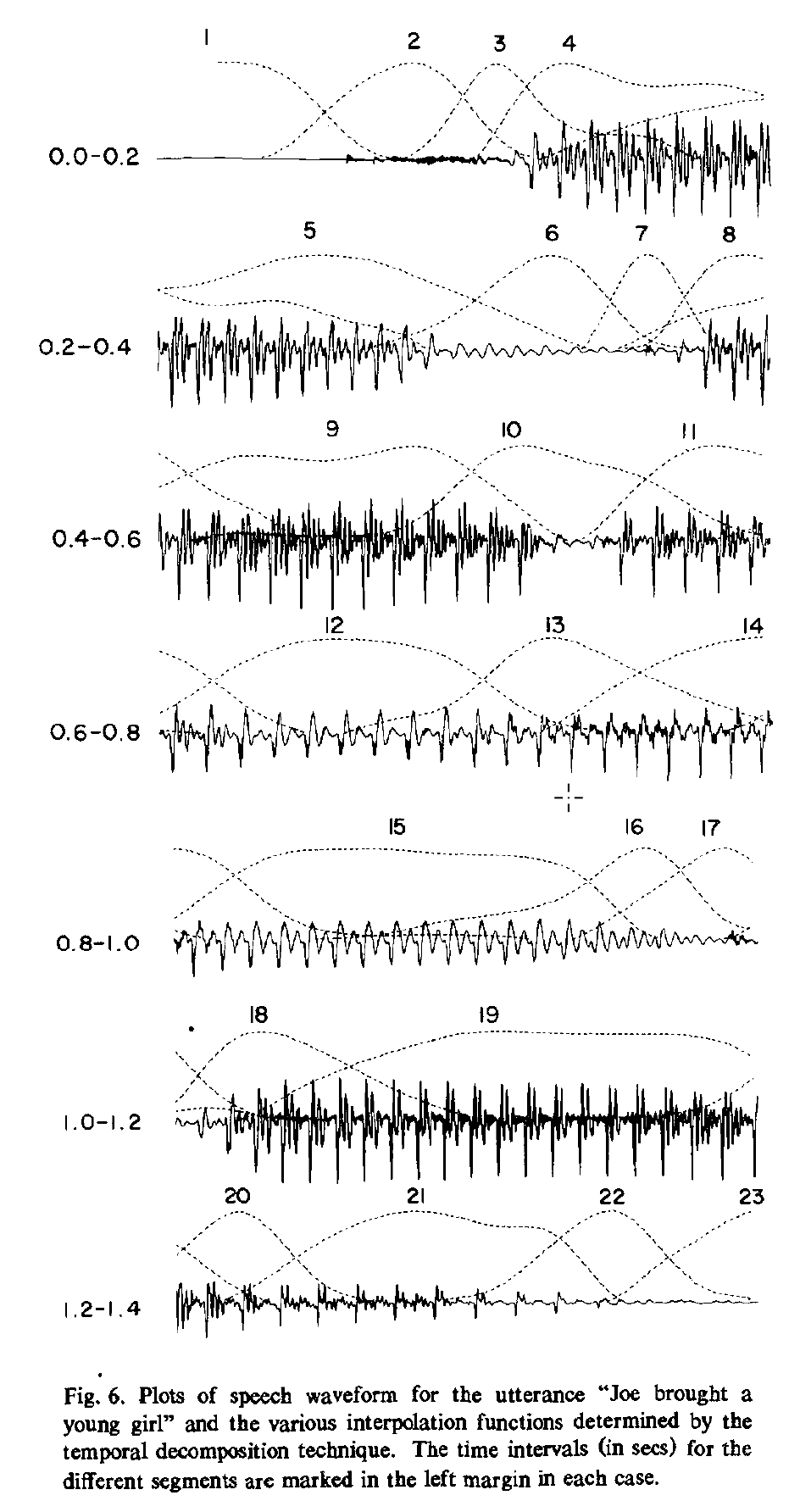

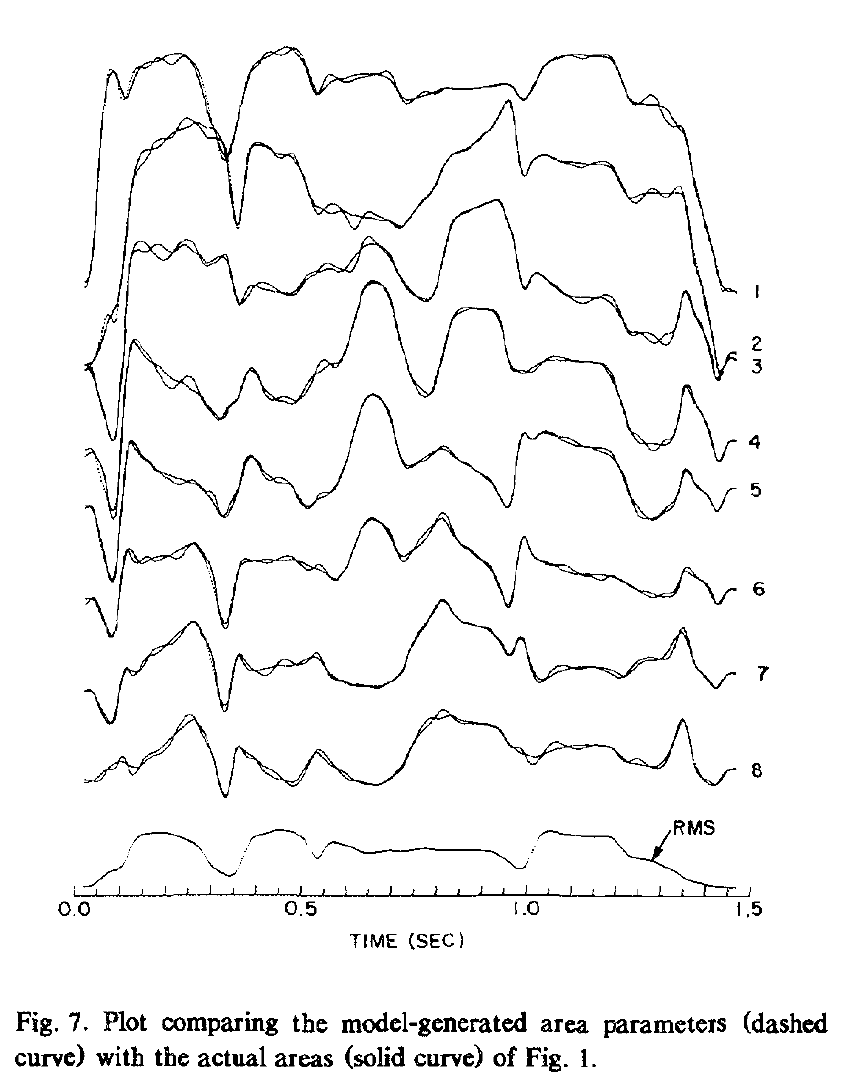

Our aim is to determine the extent to which the bit rate of LPC parameters can be reduced without sacrificing speech quality. Speech events occur generally at non-uniformly spaced time intervals. Moreover, some speech events are slow while others are fast. Uniform sampling of speech parameters is thus not efficient. We describe a non-uniform sampling and interpolation procedure for efficient coding of log area parameters. A temporal decomposition technique is used to represent the continuous variation of these parameters as a linearly-weighted sum of a number of discrete elementary components. The location and length of each component is automatically adapted to speech events. We find that each elementary component can be coded as a very low information rate signal.

This technique is a technological approximation of the old intuition that the speech stream is the overlapping influence of a series of discrete events. And it works very well, at least in the cited examples:
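As a rough illustration of the "linearly-weighted sum" idea in the abstract, here is a minimal Python sketch. It is a toy under simplifying assumptions, not Atal's algorithm: the paper adapts the location and length of each component automatically, whereas here the event times in `centers` are picked by hand, the components are plain triangular functions, and the "log area" tracks are synthetic. The names `hat_functions` and `fit_targets` are hypothetical.

```python
import numpy as np


def hat_functions(centers, n_frames):
    """Overlapping piecewise-linear components, one per event.

    Component k is 1 at centers[k] and falls linearly to 0 at the
    neighbouring centers, so its width follows the non-uniform event
    spacing. At every frame the components sum to 1.
    """
    t = np.arange(n_frames)
    phi = np.zeros((len(centers), n_frames))
    for k in range(len(centers)):
        peak = np.zeros(len(centers))
        peak[k] = 1.0
        # Linear interpolation of a one-hot vector gives a "tent" shape.
        phi[k] = np.interp(t, centers, peak)
    return phi


def fit_targets(params, phi):
    """Least-squares targets A such that params is approximately A @ phi.

    params: (n_params, n_frames) parameter tracks
    phi:    (n_events, n_frames) component functions
    """
    a_t, *_ = np.linalg.lstsq(phi.T, params.T, rcond=None)
    return a_t.T


# Synthetic data (assumption): 10 parameter tracks over 100 frames,
# driven by 7 events at hand-picked, non-uniformly spaced frames.
rng = np.random.default_rng(0)
n_frames, n_params = 100, 10
centers = np.array([0, 12, 20, 45, 60, 63, 99])
true_targets = rng.normal(size=(n_params, len(centers)))

phi = hat_functions(centers, n_frames)
track = true_targets @ phi          # frame-by-frame parameter values

targets = fit_targets(track, phi)   # one target vector per event
recon = targets @ phi               # interpolate back to every frame

print("max reconstruction error:", np.max(np.abs(recon - track)))
print(track.size, "frame values vs", targets.size, "target values")
```

In this toy, the 1000 frame-by-frame values are replaced by 70 target values plus the event locations, and the reconstruction is essentially exact because the data were generated from the same model. Real speech is not that obliging, which is why finding good components is the hard part.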

I believe that Bishnu hoped that this technique would somehow converge on phoneme-like units — and this didn't happen, at least in the sense of finding a small finite set of "discrete elementary components" that could be used across occasions, speakers, recording conditions, and so on.

But today, with many orders of magnitude more computer power, and more sophisticated modeling architectures and learning algorithms, maybe we can come closer.

Michael Proctor said,

September 3, 2018 @ 2:32 am

I agree that Atal (1983) is a wonderful paper, and definitely under-appreciated.

"I believe that Bishnu hoped that this technique would somehow converge on phoneme-like units — and this didn't happen, at least in the sense of finding a small finite set of "discrete elementary components" that could be used across occasions, speakers, recording conditions, and so on."

Perhaps not phonemes, but there may be discrete sets of subphonemic units that are more robust speech primitives – e.g. a modification of Atal's method has been applied with some success to the automatic extraction of gestures from the acoustic signal: https://asa.scitation.org/doi/abs/10.1121/1.4763545

maidhc said,

September 3, 2018 @ 2:57 am

The contributions of the pre-1990 researchers in this field have been woefully neglected.

Yuval said,

September 4, 2018 @ 1:32 pm

Am I the only one at slight unease over the example phrase chosen?