All problems are not solved


There's an impression among some people that "deep learning" has brought computer algorithms to the point where there's nothing left to do but to work out the details of further applications. This reminds me of what has been described as Ludwig Wittgenstein's belief in the early 1920s that the development of formal logic and the "picture theory" of meaning in his Tractatus Logico-Philosophicus reduced the elucidation (or dissolution) of all philosophical questions to a sort of clerical procedure.

Several recent articles, in different ways, call into question this modern view that Deep Learning (i.e. complex networks of linear algebra with interspersed point nonlinearities, whose millions or billions of parameters are automatically learned from digital examples) is a philosopher's stone whose application solves all algorithmic problems. Two among many others: Gary Marcus, "Deep Learning: A Critical Appraisal", arXiv.org 1/2/2018; Michael Jordan, "Artificial Intelligence — The Revolution Hasn’t Happened Yet", Medium 4/19/2018.

And two upcoming talks describe some of the remaining problems in speech and language technology.

This afternoon here at Penn, Michael Picheny's title is "Speech Recognition: What's Left?", at 1:30 in Levine 307:

Recent speech recognition advances on the SWITCHBOARD corpus suggest that because of recent advances in Deep Learning, we now achieve Word Error Rates comparable to human listeners. Does this mean the speech recognition problem is solved and the community can move on to a different set of problems? In this talk, we examine speech recognition issues that still plague the community and compare and contrast them to what is known about human perception. We specifically highlight issues in accented speech, noisy/reverberant speech, speaking style, rapid adaptation to new domains, and multilingual speech recognition. We try to demonstrate that compared to human perception, there is still much room for improvement, so significant work in speech recognition research is still required from the community.
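For readers unfamiliar with the metric in that abstract: Word Error Rate is conventionally the word-level edit distance between a reference transcript and the recognizer's output, divided by the number of reference words. Below is a minimal sketch of that computation. The function name and the whitespace tokenization are my own assumptions; real evaluations (including those on SWITCHBOARD) apply scoring conventions and text normalization that are not reproduced here.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the reference length.

    Illustrative only: splits on whitespace and applies no text
    normalization, unlike standard scoring tools.
    """
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between the reference words seen
    # so far (before the current one) and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]  # deleting all i reference words so far
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(
                prev[j] + 1,         # deletion (reference word missing)
                curr[j - 1] + 1,     # insertion (extra hypothesis word)
                prev[j - 1] + cost,  # substitution or exact match
            ))
        prev = curr
    return prev[-1] / len(ref)
```

Because insertions count as errors, the rate can exceed 1.0, which is one reason a single headline number says little about where a system fails.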

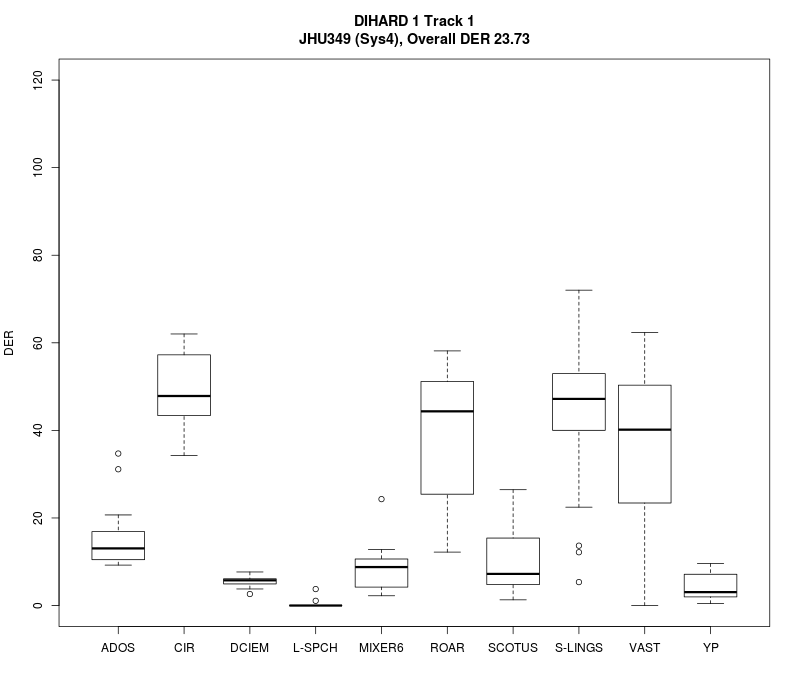

For one simple example of an area where further R&D is needed, see our earlier discussion of the First DIHARD Speech Diarization Challenge, where on some input categories the winning system performed quite badly indeed, with frame-wise error rates in the 50% range even when the speech-segment regions were specified:

(See here for a description of the 10 eval-set data sources.)

And on Wednesday, Richard Sproat will be talking in room 7102 at the CUNY Graduate Center on the topic "Neural models of text normalization for speech applications":

Speech applications such as text-to-speech (TTS) or automatic speech recognition (ASR) must not only know how to read ordinary words, but must also know how to read numbers, abbreviations, measure expressions, times, dates, and a whole range of other constructions that one frequently finds in written texts. The problem of dealing with such material is called text normalization. The traditional approach to this problem, and the one currently used in Google’s deployed TTS and ASR systems, involves large hand-constructed grammars, which are costly to develop and tricky to maintain. It would be nice if one could simply train a system from text paired with its verbalization. I will present our work on applying neural sequence-to-sequence RNN models to the problem of text normalization. Given sufficient training data, such models can achieve very high accuracy, but also tend to produce the occasional error (reading “kB” as “hectare”, or misreading a long number such as “3,281”) that would be problematic in a real application. The most powerful method we have found to correct such errors is to use finite-state over-generating covering grammars at decoding time to guide the RNN away from “silly” readings. Such covering grammars can be learned from a very small amount of annotated data. The resulting system is thus a hybrid system, rather than a purely neural one, a purely neural approach being apparently impossible at present.
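To make the hybrid idea in that abstract concrete, here is a toy sketch of using an over-generating cover to veto "silly" readings. It is not the system described in the talk: the real approach uses finite-state covering grammars during decoding, whereas this stand-in simply filters an already ranked candidate list against a lookup table. The table `UNIT_COVER`, the function `pick_reading`, and the candidate lists are all hypothetical.

```python
from typing import Dict, Optional, Sequence, Set

# Hypothetical cover: for each written unit, every reading it could
# plausibly have. It over-generates on purpose (singular and plural are
# both allowed) and leaves the choice among them to the model.
UNIT_COVER: Dict[str, Set[str]] = {
    "kB": {"kilobyte", "kilobytes"},
    "ha": {"hectare", "hectares"},
    "km": {"kilometer", "kilometers"},
}


def pick_reading(
    token: str,
    ranked_candidates: Sequence[str],
    cover: Dict[str, Set[str]] = UNIT_COVER,
) -> Optional[str]:
    """Return the best-ranked candidate that the cover allows.

    `ranked_candidates` stands in for a neural model's output, best
    first. Returns None when the cover rejects every candidate, so the
    caller can fall back to some other strategy.
    """
    allowed = cover.get(token)
    if allowed is None:
        # No constraint is known for this token: trust the model.
        return ranked_candidates[0] if ranked_candidates else None
    for candidate in ranked_candidates:
        if candidate in allowed:
            return candidate
    return None
```

With this sketch, `pick_reading("kB", ["hectare", "kilobytes"])` skips the model's first guess and returns `"kilobytes"`. The cover does not need to know the right answer; it only needs to rule out the wrong ones.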

This reminds me of something that happened in the mid-1980s, during one of the earlier waves of pseudo-neural over-enthusiasm. I was then at Bell Labs, and had presented at some conference or another a paper on our approach to translating text into instructions for the synthesizer. What we used then was a hybrid of a hand-coded text normalization system, a large pronouncing dictionary, dictionary extensions via analogical processes like inflection and rhyming, and hand-coded grapheme-to-phoneme transduction (though no neural nets at that time). Richard Sproat might well have been a co-author. Afterwards, someone came up to me and asked why we were bothering with all that hand-coding, since neural nets could learn better solutions to all such problems with no human intervention, citing Terry Sejnowski's work on learning g2p rules using an early NN architecture (Sejnowski & Rosenberg, "NETtalk: a parallel network that learns to read aloud", JHU EECS Technical Report 1986).

I say that they "asked me", but "berated me" was more like it. My interlocutor's level of fervent conviction was like someone arguing about scientology, socialism, salvation, or grammatical theories — it had been mathematically proved, after all, that a non-linear perceptron with one hidden layer could asymptotically learn any finite computable function, more or less, so to solve a problem in any other way was clearly a sort of moral failure. Against this level of conviction, it was no help at all to point out that NETtalk's performance was actually not very good.

Not long thereafter, I moderated a 1986 ACL "Forum on Connectionism" (which is what pseudo-neural computation used to be called), and I think that my "Moderator Statement" (pdf here) has actually stood up pretty well over the intervening 32 years.

Arthur Waldron said,

April 30, 2018 @ 9:12 am

Thank you for this most illuminating post! Arthur

DCBob said,

April 30, 2018 @ 10:15 am

Sounds suspiciously similar to the history of macroeconomics …

MattF said,

April 30, 2018 @ 10:27 am

It's interesting that claiming 'all hard problems have been solved' serves to motivate research demonstrating that the claim is false.

Y said,

April 30, 2018 @ 1:00 pm

I heard Sejnowski give a talk sometime then. He suggested, semi-seriously, that given the rate of increasing computation speeds, by the year 2020 (or 2010?) a computer will have the computing power of the human brain, and ergo will be able to match its capabilities. The unspoken understanding was that neural networks had essentially solved the problems of artificial intelligence once and for all, and that NETtalk was almost there.

Bill Benzon said,

April 30, 2018 @ 3:40 pm

I've been thinking about this recently, Mark, and just read the Gary Marcus paper. One of the research areas he puts on the research agenda is common sense knowledge. As I'm sure you know, Paul Allen just dedicated $125 million to that effort at his AI research institute.

"Classically", of course, the problem has been approached through hand-crafted code, and a great deal of work on knowledge representation was devoted to it back in the 70s and 80s. I suppose Doug Lenat's Cyc is the best-known and certainly the longest-running such effort. The idea, as I recall, was to hand code until the system could teach itself by reading. As far as I know, it's not there yet.

What do we have now that we didn't have then? We've vast troves of machine-readable texts, not to mention images and videos, and techniques for working with them. Here's a paper at Allen's institute on that. But I'm not sure how far that will get us.

My guess – a guess for sure, but an educated one – is that much of our common sense knowledge is in the form of analog or quasi-analog models of the world, with language 'riding' atop that as a way of manipulating and extending it. We use those models in making thousands of simple, but necessary, inferences that hold common-sense talk together. Our computers, alas, don't have those models available to them when going through all that online text.

Humans develop common sense knowledge by being born into and growing up in the world.

Peter Grubtal said,

May 1, 2018 @ 4:45 am

This is a bit OT in this thread, but surely it will be of interest to language log people?

I was amazed a few days ago to see a report of this in a computer magazine (c't – a German language publ.):

https://www.popsci.com/device-hears-your-silent-speech

Until I found the above link, my first thought was April fool: the magazine always has a spoof article close to 1st April. But the spoof had already appeared in the previous edition (ear-recognition authentication in smartphones).

The c't article didn't mention the present limitations covered in the popsci contribution: only 20 words, and a learning phase.

Even so, perhaps I'm out-of-date, but I found it amazing, and presumably of great interest to psychologists and linguists: the mere idea that thought words produce detectable impulses to brain-external nerves controlling speech seems a major finding.

Or perhaps the whole thing is a spoof, and c't was taken-in as well? They would've deserved it.

[(myl) Nicer packaging than the 2006 version ("Envy, Navy, whatever", 10/27/2006), but apparently the same sort of problems.]

bks said,

May 1, 2018 @ 8:02 am

From the Gary Marcus paper:

MikeA said,

May 1, 2018 @ 10:35 am

@Bill Benzon: At the risk of summoning daemons (or angry counter-commenters), I'll mention what Hubert Dreyfus said in a class I attended in the early 1970s: That the big problem with AI was not that the existing instances did not have a brain, but that they did not have a body. That is, that much comprehension of human activity relied on shared experiences of the physical world and our non-verbal perception of it.

He also commented on then-current writing (particularly grant proposals) as "A few bricks shy of the moon", in the sense that a researcher would stack one brick atop another and note that the stack height had doubled. Such evidence of exponential growth supposedly showed that all current problems would be solved very soon.

Peter Grubtal said,

May 2, 2018 @ 2:32 am

(myl) : thanks for the link. That earlier piece and the popsci article don't seem to say that merely thinking the word can be recognised, but the c't article is explicit about it: "in fact no muscle movements….are necessary".

I've always considered c't pretty authoritative in its field.

[(myl) I guess it depends on the interpretation. Since the basis is EMG, muscle contractions are required — but isometric contractions would lead to EMG signals without (much) "muscle movement".]