DIHARD again


The First DIHARD Speech Diarization Challenge has results!

"Diarization" is a bit of technical jargon for "figuring out who spoke when". You can read more (than you probably want to know) about the DIHARD challenge from the earlier LLOG post ("DIHARD" 2/13/2018), the DIHARD overview page, the DIHARD data description page, our ICASSP 2018 paper, etc.

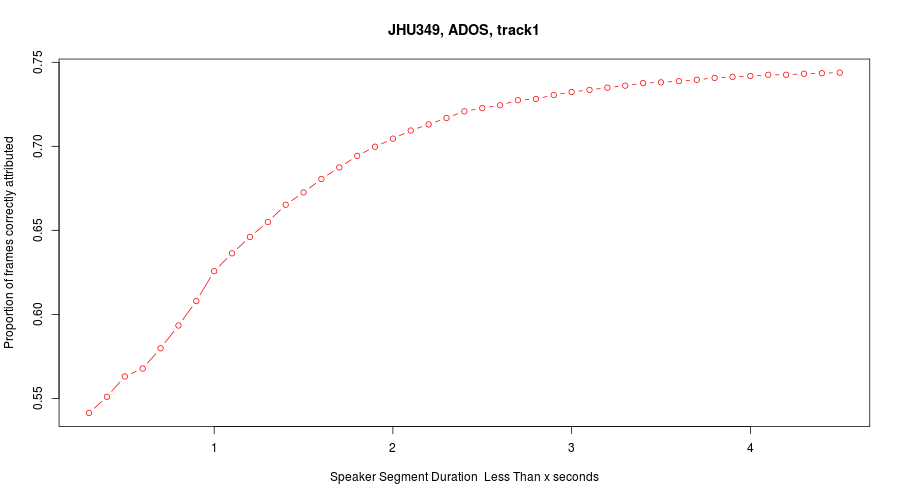

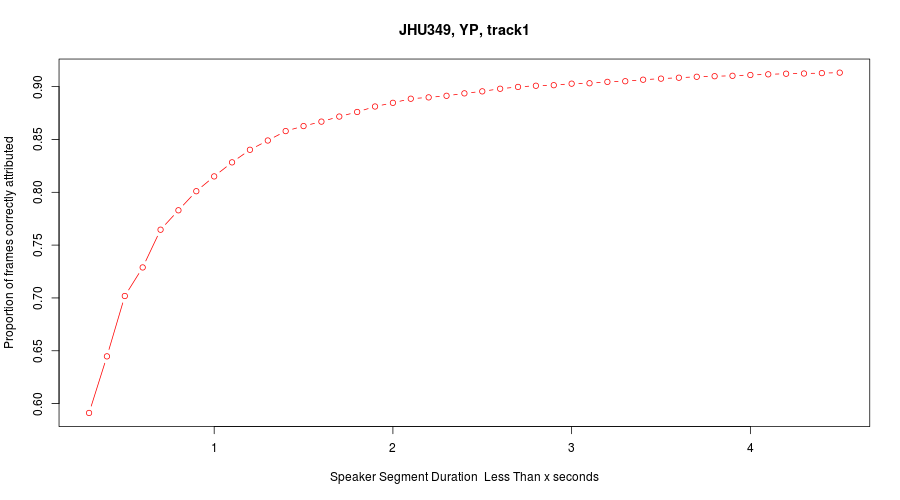

This morning's post presents some evidence from the DIHARD results showing, unsurprisingly, that current algorithms have a systematically higher error rate with shorter speech segments than with longer ones. Here's an illustrative figure:

For an explanation, read on.

Today, we'll look at the performance of the best single system on the four (out of 10) DIHARD sources that are two-person conversations. We'll use the results from Track 1, where the system is given the "true" division into speech vs. non-speech segments. On this track, there should be no errors due to labelling silence as a speaker, or labelling a bit of speech as silence, thus eliminating the possibility that higher error rates on short speech segments might be due to ambiguity about where the segments start and end (which is a genuine problem). But note that Track 2, which is the normal case where the system must figure out for itself whether anyone is talking at all, has significantly higher overall error rates.

The eval set includes about 2 hours of 5-10 minute passages from each of the 10 sources, for about 20 hours in total. The four two-person sources are:

- ADOS: Selections from (anonymized) recordings of Autism Diagnostic Observation Schedule (ADOS) interviews conducted at the Center for Autism Research (CAR) at the Children's Hospital of Philadelphia (CHOP). ADOS is a semi-structured interview in which clinicians attempt to elicit language that differentiates children with Autism Spectrum Disorders from those without (e.g., “What does being a friend mean to you?”). All interviews were conducted by CAR with audio recorded from a video camera mounted on a wall approximately 12 feet from the location inside the room where the interview was conducted.

- DCIEM: Selections from the DCIEM Map Task Corpus (LDC96S38). Each session contains two speakers sitting opposite one another at a table. Each speaker has a map visible only to him or her, and a designated role as either “Leader” or “Follower.” The Leader has a route marked on their map, and is tasked with communicating this route to the Follower who should reproduce it precisely on their own map. Though each speaker was recorded on a separate channel via a close-talking microphone, these have been mixed together for DIHARD.

- MIXER6: Selections from sociolinguistic interviews recorded as part of MIXER6 (LDC2013S03). The released audio comes from microphone five, a PZM microphone.

- YOUTHPOINT: Selections from YouthPoint, a late 1970s radio program run by students at the University of Pennsylvania, consisting of student-led interviews with opinion leaders of the era (e.g., Ann Landers, Mark Hamill, Buckminster Fuller, and Isaac Asimov). The recordings were conducted in a studio on reel-to-reel tapes and later digitized at LDC.

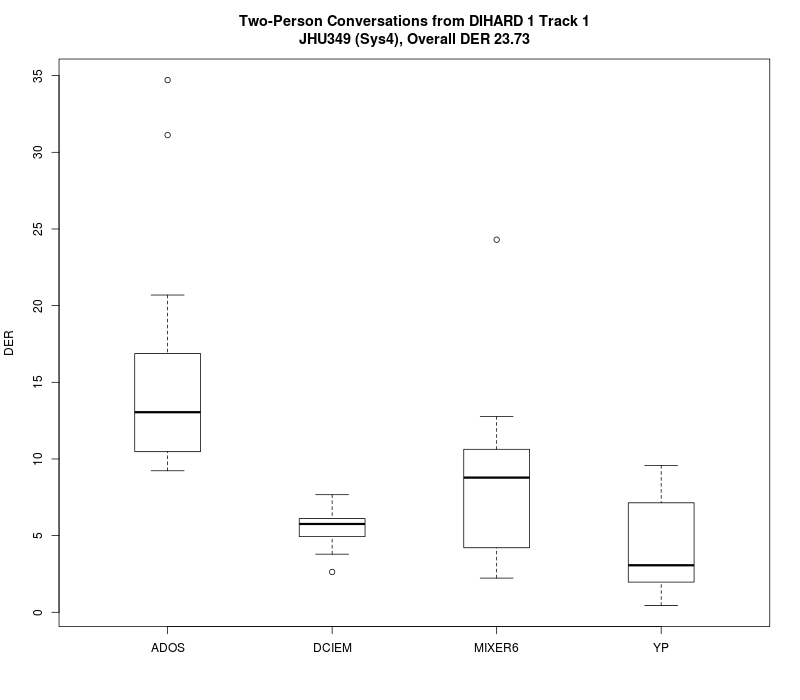

A boxplot shows the range of "Diarization Error Rate" scores for the best system on Track 1:

(A boxplot for this system's performance on all 10 sources is here. The other top-ranked systems have similar overall performance.)

DER is defined as (FA+MISS+ERROR)/TOTAL, where

- TOTAL is the total speaker time;

- FA is the sum of false alarm times, where the system falsely identified non-speech as a speaker;

- MISS is the sum of speaker times falsely identified as silence;

- ERROR is the sum of speaker times attributed to the wrong speaker.

In Track 1, FA and MISS should obviously be 0, so we're just looking at the proportion of ERROR frames as a function of segment length.
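For readers who want the DER definition in executable form, here is a minimal Python sketch of a frame-level version. It assumes per-frame labels (like the 10-msec frames counted below), at most one speaker per frame (no overlap), and no scoring collar; the function name `der` and the label conventions are illustrative, not the official DIHARD scoring tool. One step the definition leaves implicit: system speaker labels are arbitrary, so standard scorers count ERROR under the best one-to-one mapping between system and reference speakers. Here that mapping is found by brute force.

```python
from itertools import permutations


def der(ref, hyp):
    """Frame-level diarization error rate: (FA + MISS + ERROR) / TOTAL.

    ref, hyp: equal-length sequences of per-frame labels, None for
    non-speech, any hashable value for a speaker. Assumes at most one
    speaker per frame (no overlap) and no scoring collar.
    """
    if len(ref) != len(hyp):
        raise ValueError("ref and hyp must cover the same frames")
    total = sum(r is not None for r in ref)  # TOTAL: reference speaker time
    if total == 0:
        raise ValueError("reference contains no speech")
    fa = sum(r is None and h is not None for r, h in zip(ref, hyp))
    miss = sum(r is not None and h is None for r, h in zip(ref, hyp))

    # System labels are arbitrary, so ERROR is counted under the best
    # one-to-one mapping of system speakers onto reference speakers.
    # Brute force is fine for a handful of speakers; a None target
    # means that system speaker is left unmapped.
    ref_spk = sorted({r for r in ref if r is not None}, key=str)
    hyp_spk = sorted({h for h in hyp if h is not None}, key=str)
    both = [(r, h) for r, h in zip(ref, hyp) if r is not None and h is not None]
    best = 0
    for targets in permutations(ref_spk + [None] * len(hyp_spk), len(hyp_spk)):
        mapping = dict(zip(hyp_spk, targets))
        best = max(best, sum(mapping[h] == r for r, h in both))
    error = len(both) - best
    return (fa + miss + error) / total
```

For example, `der(["A", "A", "B", "B"], ["x", "x", "x", "y"])` gives 0.25: mapping x to A and y to B leaves one misattributed frame out of four. With Track 1's given segmentation, `fa` and `miss` stay at zero and only `error` contributes.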

In the 24 ADOS passages in the eval set, the "reference" transcription has 472,079 10-msec frames in speech segments, of which 285,737 frames (60.5%) are attributed to the dominant (talkiest) speaker in each passage.

And as I expected, the mean error rate decreases monotonically with increasing segment length:

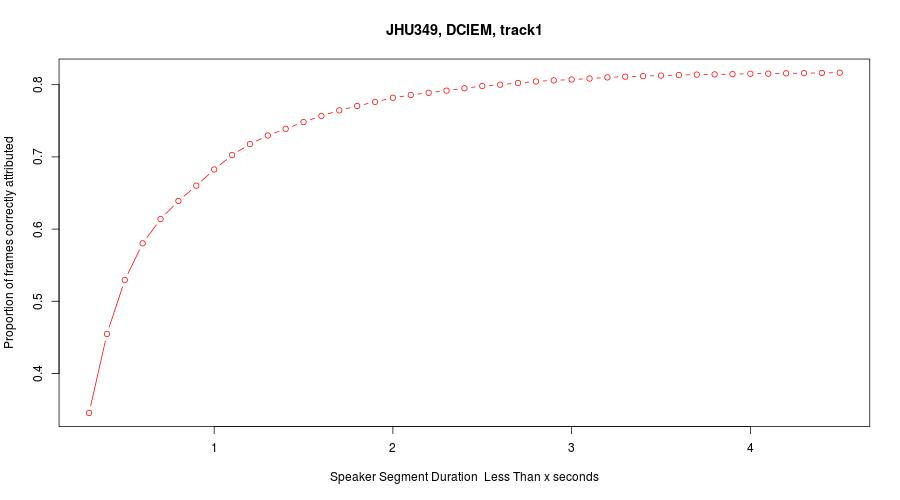
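A breakdown by segment length like the one plotted above could be approximated with a sketch like the following. The procedure here is an assumption, not a description of how the figures were actually made: a "segment" is taken to be a maximal run of frames attributed to one reference speaker, the system's labels are assumed to be already mapped onto the reference speakers, and the mean is taken over per-segment error proportions within each length bin. The helper names are hypothetical.

```python
from collections import defaultdict
from itertools import groupby


def segments(ref):
    """Yield (start, end, speaker) for each maximal run of one reference speaker."""
    start = 0
    for spk, run in groupby(ref):
        n = sum(1 for _ in run)
        if spk is not None:
            yield start, start + n, spk
        start += n


def error_by_length(ref, hyp, bin_edges):
    """Mean per-segment error proportion, binned by segment length in frames.

    Assumes hyp labels are already mapped onto the reference speakers.
    bin_edges must be ascending; a segment of n frames goes in the bin
    whose edge is the largest one <= n (segments shorter than the first
    edge are dropped). Unlabeled system frames count as wrong.
    """
    rates = defaultdict(list)
    for start, end, spk in segments(ref):
        n = end - start
        wrong = sum(h != spk for h in hyp[start:end])
        edge = max((e for e in bin_edges if e <= n), default=None)
        if edge is not None:
            rates[edge].append(wrong / n)
    return {edge: sum(v) / len(v) for edge, v in sorted(rates.items())}
```

With 10-msec frames, `bin_edges=[0, 50, 100, 200, 400]` would group segments as under 0.5 s, 0.5 to 1 s, 1 to 2 s, 2 to 4 s, and 4 s or longer.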

Similarly for DCIEM.

In the 19 DCIEM passages in the eval set, the "reference" transcription has 493,826 frames in speech segments, of which 365,383 (74.0%) are attributed to the dominant (talkiest) speaker in each passage.

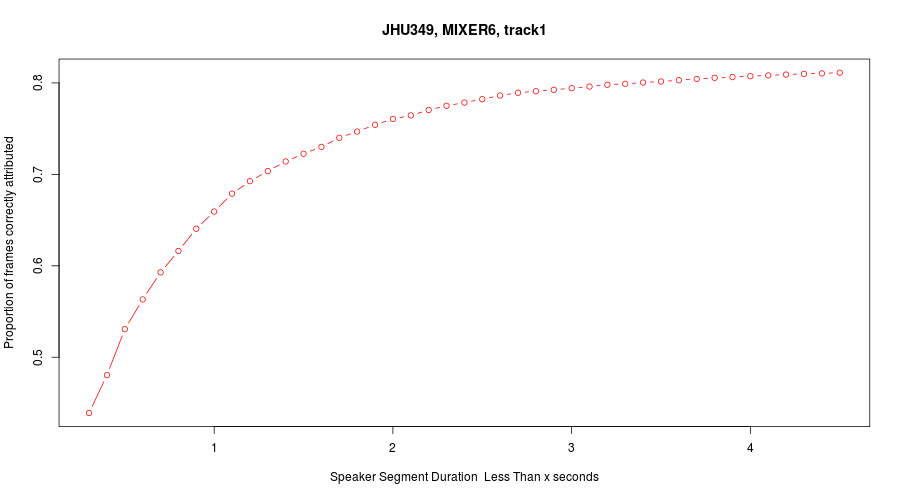

And for MIXER6 as well.

In the 12 MIXER6 passages in the eval set, the "reference" transcription has 578,858 frames in speech segments, of which 430,101 (74.3%) are attributed to the dominant (talkiest) speaker in each passage.

And finally for YouthPoint.

In the 12 YP selections in the eval set, the "reference" transcription attributes 577,305 frames to some speaker, of which 427,402 (74.0%) are attributed to the dominant (talkiest) speaker in each selection.
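The dominant-speaker percentages can be checked directly from the frame counts quoted above. One way to read them (an interpretation, not a claim made in this post): ignoring overlapping speech, a trivial Track 1 system that gave every speech frame in a passage to that passage's dominant speaker would score an error rate of one minus that share, a rough baseline for each source.

```python
# Reference frame counts quoted above for each source's eval passages:
# (frames in speech segments, frames of each passage's dominant speaker).
COUNTS = {
    "ADOS": (472_079, 285_737),
    "DCIEM": (493_826, 365_383),
    "MIXER6": (578_858, 430_101),
    "YouthPoint": (577_305, 427_402),
}

shares = {src: dominant / speech for src, (speech, dominant) in COUNTS.items()}

for src, share in shares.items():
    # A system that gave every speech frame to the passage's dominant
    # speaker would have all remaining frames as ERROR (ignoring overlap).
    print(f"{src:<10} dominant share {share:.1%}, trivial-baseline error {1 - share:.1%}")
```

This reproduces the 60.5%, 74.0%, 74.3%, and 74.0% shares given above, i.e. trivial-baseline errors of about 39.5%, 26.0%, 25.7%, and 26.0%.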

It's not clear to what extent the same segment-length effect holds for human listeners.

No doubt, human speaker recognition is harder for short isolated fragments than for longer ones. But in "Hearing interactions" 2/28/2018, I observed that we humans are very good at detecting speaker changes in connected discourse, even when the acoustic evidence is only on the time scale of a single syllable or two. As a result, although we're often not very good at deciding "who spoke when" in a multi-person conversation among a bunch of people that we don't know (unless there are striking individual differences like foreign accents or large differences in pitch range), it's generally easy for us to diarize a two-person dialog, since in that case, diarization reduces to speaker-change detection.

(I don't have any non-anecdotal/impressionistic evidence for this ability, since as far as I know no one has ever explored the question experimentally.)
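The claim that two-person diarization reduces to speaker-change detection can be made concrete with a small sketch. The change points are assumed to come from some hypothetical detector (human or machine), and the input is assumed to cover speech frames only, as Track 1's given segmentation allows. With exactly two talkers and no overlap, every detected change hands the turn to the other speaker, so labeling is just alternation; which real person gets "S1" doesn't matter, since DER scoring maps system labels onto reference speakers anyway. The function name and labels are illustrative.

```python
def labels_from_changes(n_frames, change_points, first="S1", second="S2"):
    """Label n_frames speech frames for a two-person dialog from change points.

    change_points: frame indices where a hypothetical change detector
    says the talker switches. With exactly two talkers and no overlap,
    each change passes the turn to the other speaker, so the labels just
    alternate. Frames are assumed to be speech only (non-speech removed).
    """
    changes = set(change_points)
    current, other = first, second
    labels = []
    for t in range(n_frames):
        if t in changes:
            # Hand the turn to the other talker.
            current, other = other, current
        labels.append(current)
    return labels
```

For instance, `labels_from_changes(6, [2, 4])` returns `['S1', 'S1', 'S2', 'S2', 'S1', 'S1']`. With three or more talkers this shortcut fails, because a detected change no longer says *which* other person took the turn.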

Note: Most of the work in preparing, scoring, and documenting the DIHARD challenge was done by Neville Ryant.

Stephen said,

April 14, 2018 @ 7:50 pm

Mark Hamill was an “opinion leader” in the seventies?

For some reason I find this surprising.

[(myl) And in 2012, I found it surprising that Time Magazine used the noun headman as a verb. But what do these (and the many other surprises that fill our lives) have to do with the topic of this post?]

Stephen said,

April 15, 2018 @ 9:08 am

It was mentioned in your post. I thought that anything appearing in a post would be fair game for a comment. Since that's apparently not the case, I'll refrain from commenting further.

[(myl) I'm sorry — that part of the post, which I cut-and-pasted from an online description of the dataset, obviously didn't even register with me.

So your comment was fair enough, and you should feel free to continue in the same way. Though really, shouldn't his work as Luke Skywalker and the voice of The Joker give him some opinion-leader cred?]

ErikF said,

April 15, 2018 @ 4:03 pm

Does the amount of frequency bandwidth for the recorded audio make any difference when processing the speech? For example, is a highly compressed, 8-bit telephone-quality recording more difficult to process than an uncompressed 24-bit one? I would be surprised if it didn't affect the results, but it would be interesting to know: humans seem to be able to determine speakers in a conversation even with a lot of signal interference!

[(myl) All of the sources in this challenge are 16 kHz, though some have a lot of background noise. Sound quality matters, obviously, but compression and bandwidth limitations are not an issue for the four sources discussed in this post.]

Elika said,

April 16, 2018 @ 10:23 am

Neat to see some of these results! Not to preempt a future LL post, but how much of the DER for the four 'worst' sources do you want to attribute to the open-set nature of the participants vs. other aspects of the recordings? A monotonic increase with # of talkers that's additive with the monotonic increase with segment length?

[(myl) More talkers and worse SNR are both involved, I think.]

Sarah said,

April 16, 2018 @ 1:42 pm

To my knowledge there is not a great deal of work on human voice change detection besides a handful of same-different tasks. Still, Vitevitch (2003) shows that, if listeners are *not* monitoring for a change, they may miss a change between socially-similar talkers (similar to visual change blindness). On the other hand, if listeners focus, they may be able to detect minute changes. Magnuson & Nusbaum (2007) found that listeners doing a vowel-monitoring task are slowed when they think they are hearing two speakers, vs. listeners who think they're hearing a single speaker. This suggests that talker change detection sensitivity can be increased by top-down attention. I wonder if some of human listeners' advantage comes from auditory object tracking: establishing that there are two (or more) sources/speakers and then tracking which is which.

Also, looking back at the post from late Feb., it seems like this is itching for a human-machine comparison!