Do STT systems have "intriguing properties"?


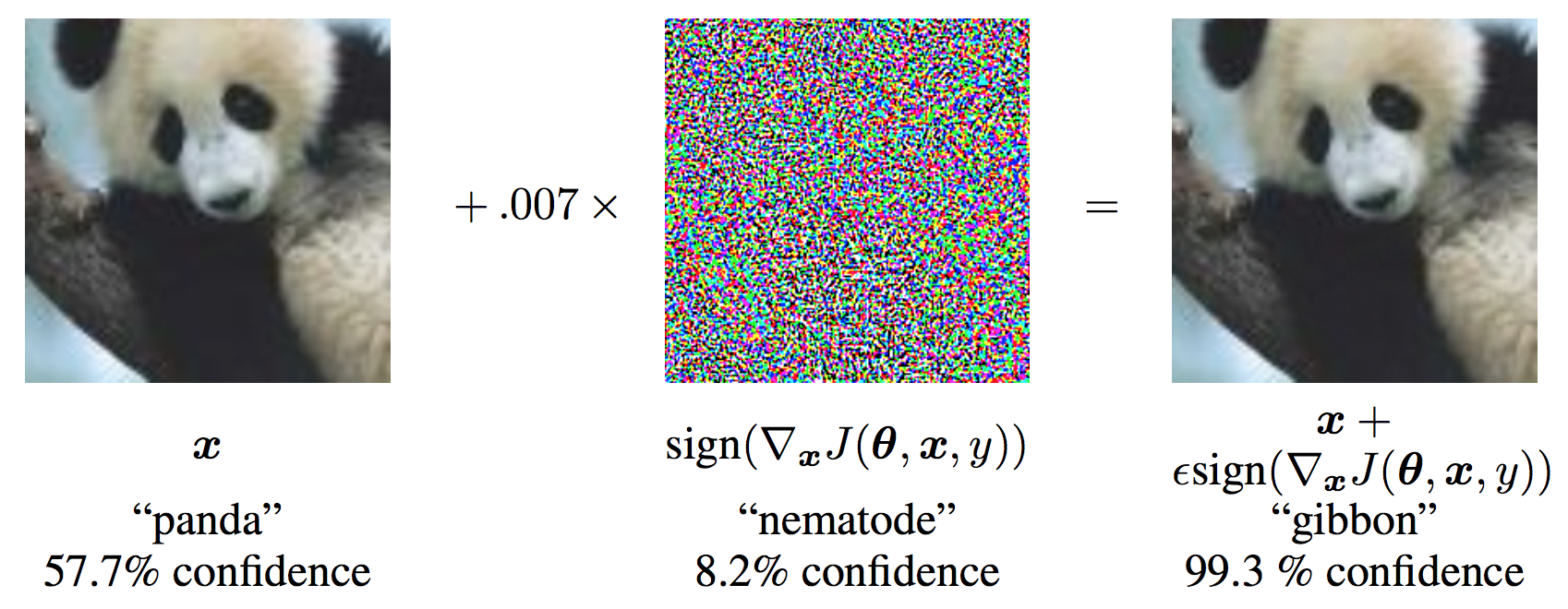

In "Intriguing properties of neural networks" (2013), Christian Szegedy et al. point out that

… deep neural networks learn input-output mappings that are fairly discontinuous to a significant extent. We can cause the network to misclassify an image by applying a certain imperceptible perturbation…

For example:

There has been quite a bit of discussion of the topic since then. In a 2/15/2017 post on the cleverhans blog, Ian Goodfellow and Nicolas Papernot ask "Is attacking machine learning easier than defending it?", and conclude that

The study of adversarial examples is exciting because many of the most important problems remain open, both in terms of theory and in terms of applications. On the theoretical side, no one yet knows whether defending against adversarial examples is a theoretically hopeless endeavor (like trying to find a universal machine learning algorithm) or if an optimal strategy would give the defender the upper ground (like in cryptography and differential privacy). On the applied side, no one has yet designed a truly powerful defense algorithm that can resist a wide variety of adversarial example attack algorithms. We hope our readers will be inspired to solve some of these problems.
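To make the Szegedy et al. observation concrete, here is a minimal sketch of one later attack, the fast gradient sign method (Goodfellow et al., 2014). It is applied to a toy logistic-regression model rather than a deep network, and it is not the box-constrained L-BFGS procedure Szegedy et al. used. The weights, input, bias, and step size are made-up illustrative values. What it shows is that a per-coordinate change of only 0.05 adds up across 1,000 input dimensions to flip the decision.

```python
import math
from typing import List, Sequence


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def predict(w: Sequence[float], b: float, x: Sequence[float]) -> float:
    """Probability of class 1 under a logistic-regression model."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def sign(v: float) -> int:
    return (v > 0) - (v < 0)


def fgsm(w: Sequence[float], b: float, x: Sequence[float], y: int, eps: float) -> List[float]:
    """One fast-gradient-sign step: move every input coordinate by eps in the
    direction that increases the cross-entropy loss for the true label y."""
    p = predict(w, b, x)
    # For logistic regression, d(loss)/d(x_i) = (p - y) * w_i.
    grad = [(p - y) * wi for wi in w]
    return [xi + eps * sign(gi) for xi, gi in zip(x, grad)]


if __name__ == "__main__":
    n = 1000  # many input dimensions, like pixels (illustrative)
    w = [0.05 if i % 2 == 0 else -0.05 for i in range(n)]
    x = [0.5] * n
    b = 2.0
    x_adv = fgsm(w, b, x, y=1, eps=0.05)
    print(predict(w, b, x))      # roughly 0.88: confidently class 1
    print(predict(w, b, x_adv))  # roughly 0.38: now classified as class 0
```

No single coordinate moves by more than 0.05, but the logit shifts by 0.05 times the sum of the absolute weights (here 2.5), which is enough to cross the decision boundary.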

I'm interested in a different question, which is whether "adversarial examples" can be constructed for pseudo-neural-net speech-to-text systems. A demo of this achievement would be two audio clips that sound imperceptibly different, but are recognized by a given modern speech-to-text system as two radically and amusingly different messages — say "Happy birthday" vs. "Many dungheaps".

I don't see any reason in principle why this shouldn't be possible, but for various technical reasons it seems to be harder to manage it, and no one seems to have done it so far.
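One crude way to put a number on "imperceptibly different" for two audio clips is the ratio of the clean signal's power to the power of the added perturbation, in decibels. This is only a proxy: a serious demo would more likely rely on psychoacoustic masking than on plain SNR. The 16 kHz sampling rate, the 440 Hz test tone, and the alternating ±0.001 perturbation below are illustrative assumptions.

```python
import math
from typing import Sequence


def snr_db(clean: Sequence[float], perturbed: Sequence[float]) -> float:
    """Power of the clean clip relative to the power of the added
    perturbation (perturbed - clean), in decibels."""
    if len(clean) != len(perturbed):
        raise ValueError("clips must have the same number of samples")
    signal_power = sum(s * s for s in clean)
    noise_power = sum((p - s) ** 2 for s, p in zip(clean, perturbed))
    if noise_power == 0:
        return math.inf
    return 10.0 * math.log10(signal_power / noise_power)


if __name__ == "__main__":
    rate = 16000  # assumed sampling rate (samples per second)
    clean = [0.5 * math.sin(2 * math.pi * 440 * n / rate) for n in range(rate)]
    # Hypothetical perturbation: +/-0.001 per sample, alternating sign.
    delta = [0.001 if n % 2 == 0 else -0.001 for n in range(rate)]
    perturbed = [s + d for s, d in zip(clean, delta)]
    print(round(snr_db(clean, perturbed), 1))  # about 51 dB
```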

Of course it has turned out to be true that some contemporary machine-learning approaches to machine translation are subject to much cruder adversarial attacks.

leoboiko said,

July 11, 2017 @ 6:26 am

I'm waiting for an enterprising graffiti artist to fool an automated car, Wile E. Coyote style. (I know, lidar…)

[(myl) That's the sort of thing that people are worried about.

These effects are broadly analogous to (human) optical or acoustic illusions, except that the human illusions are intelligible in a way that the machine illusions are not.

Of course there's also the more old-fashioned problem of events outside the training regime: "Volvo admits its self-driving cars are confused by kangaroos", The Guardian 6/30/2017.]

Dick Margulis said,

July 11, 2017 @ 7:33 am

Google Voice might be an interesting source of examples. Sometimes the transcriptions of voice messages are letter-perfect, even though to human ears the voice sounds muffled or poorly enunciated or in an unfamiliar accent; sometimes the transcription is hilariously off, even though to human ears the voice sounds crisply enunciated and clear. Most of the time, of course, the reverse is true. For commercial accounts, a preponderance of messages have similar content (who is calling, how to reach them, a brief statement of a common problem) that is more consistent than other voice corpora are likely to be.
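The contrast between "letter-perfect" and "hilariously off" transcriptions is usually quantified as word error rate (WER): the word-level edit distance (substitutions + deletions + insertions) between the recognizer's transcript and a human reference, divided by the number of reference words. A minimal sketch follows; the lowercase-and-split tokenization is a simplifying assumption, since real scoring tools also normalize punctuation, numbers, and so on.

```python
from typing import List


def word_error_rate(reference: str, hypothesis: str) -> float:
    """(substitutions + deletions + insertions) / number of reference words,
    computed as a word-level Levenshtein distance."""
    ref: List[str] = reference.lower().split()
    hyp: List[str] = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")
    # d[i][j] = edit distance between the first i reference words
    # and the first j hypothesis words.
    d = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        d[i][0] = i  # delete all i reference words
    for j in range(len(hyp) + 1):
        d[0][j] = j  # insert all j hypothesis words
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            substitution = 0 if ref[i - 1] == hyp[j - 1] else 1
            d[i][j] = min(
                d[i - 1][j] + 1,                 # deletion
                d[i][j - 1] + 1,                 # insertion
                d[i - 1][j - 1] + substitution,  # match or substitution
            )
    return d[len(ref)][len(hyp)] / len(ref)


if __name__ == "__main__":
    print(word_error_rate("call me back at noon", "call me at noon"))  # 0.2
    print(word_error_rate("Happy birthday", "Many dungheaps"))         # 1.0
```

Because insertions count, WER can exceed 1.0 when the recognizer produces many more words than were spoken.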

Fred said,

July 11, 2017 @ 8:27 am

I feel like the "human imperceptibility" angle of the adversarial examples work is a bit of a red herring; there's nothing to suggest that what my eyes are doing with small pictures is particularly like what DNNs do with raw pixel values (ok, not nothing, but still). I felt rather unsurprised that it was possible to create examples sufficiently different from the training distribution but not perceptible to the human eye.

As for NN speech-to-text, I can't even find many examples of that sort of work, but am intrigued as it relates to a personal project.

[(myl) If you can't find examples of DNN/RNN/CNN speech-to-text then you're looking in the wrong places, because variants of this have been the standard approach for nearly a decade. Look in any recent IEEE ICASSP, ASRU, InterSpeech, etc., proceedings and you'll find plenty of documentation.]

Emily said,

July 11, 2017 @ 9:39 am

@Mark Liberman: When that kangaroo article said that "black swans were interfering" I was momentarily confused as to whether it was referring to metaphorical or literal ones…

On a similar note, Google Street View apparently thinks albatrosses are people, since it blurs their faces:

http://www.thebirdist.com/2013/08/google-street-view-birding-ii-midway.html

KeithB said,

July 11, 2017 @ 9:40 am

I still wonder about self-driving cars and human traffic cops.

I was at Downtown Disney last night and there was a confusing array of small exit signs, cones, people with flashlights, and one spot where three lanes merged into two with no lane markers.

Even if driverless cars get traffic cop directions correct, what is to prevent someone from pretending to be a traffic cop – just standing on the center median waving his arms – fooling them?

Idran said,

July 11, 2017 @ 12:09 pm

@KeithB: What's to prevent someone from doing that today with human drivers by wearing a fake uniform?

Jake said,

July 11, 2017 @ 12:56 pm

@leoboiko: They did it four months ago: https://techcrunch.com/2017/03/17/laying-a-trap-for-self-driving-cars/

Sergey said,

July 11, 2017 @ 1:31 pm

57.7% is a pretty low confidence to start with, so it's no surprise that small changes would tip it. Probably the training set didn't have a panda but had a gibbon in a similar pose and cropping. There are other examples like this, such as early face-recognition systems failing to recognize black people because few were present in the training set.
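One way to see how little room 57.7% leaves: under the simplifying assumption that only two classes compete, a top-class probability p corresponds to a logit gap of log(p / (1 - p)) over the rival class. The helper names below are hypothetical, and a real image classifier spreads probability over many classes, so this is only an illustration of Sergey's point.

```python
import math
from typing import List, Sequence


def softmax(logits: Sequence[float]) -> List[float]:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def two_class_logit_gap(p_top: float) -> float:
    """Logit difference (top class minus its single rival) that gives the
    top class probability p_top when only those two classes compete."""
    return math.log(p_top / (1.0 - p_top))


if __name__ == "__main__":
    gap = two_class_logit_gap(0.577)
    print(round(gap, 2))                        # 0.31
    print(round(two_class_logit_gap(0.99), 2))  # 4.6
    print(softmax([gap - 0.32, 0.0])[0] < 0.5)  # True: the decision flips
```

So a perturbation only has to shift the logit difference by about 0.31 to overturn a 57.7% decision, versus about 4.6 to undo a 99% one.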

Perhaps a better path would be to train the systems not by labeling the complete images but by labeling the individual fragments in them, i.e. "this is panda's head, looking straight", "this is panda's body from the side", etc, so that the overall image composition would have a smaller effect on recognizing the fragments. At least some of it is already done at Microsoft, with their flagship image recognition system being able to produce labels like "two people standing with a dog in the background".

As for voice recognition, I remember a segment on America's Funniest Videos where a guy tries to control a phone banking system by repeating the same phrase, and every time it recognizes the phrase differently, with none of the recognitions being right.

[(myl) If you look at the linked paper and the more recent work, you'll see that the method works in principle to turn (almost?) any X into (almost?) any Y, especially if it's possible to climb a "confidence" or similar gradient.]
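The "climb a confidence gradient" idea can be sketched as a targeted, iterative version of the sign-gradient step: repeatedly push the input in the direction that raises the log-probability of a chosen target class, clipping so that no coordinate moves more than eps from the original. The three-class linear softmax model and all numbers below are hypothetical toy choices; attacks on deep networks compute the corresponding gradient by backpropagation.

```python
import math
from typing import List, Sequence


def softmax(logits: Sequence[float]) -> List[float]:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def argmax(values: Sequence[float]) -> int:
    return max(range(len(values)), key=values.__getitem__)


def class_probs(W: Sequence[Sequence[float]], b: Sequence[float], x: Sequence[float]) -> List[float]:
    """Class probabilities of a linear softmax model: softmax(W x + b)."""
    logits = [sum(wi * xi for wi, xi in zip(row, x)) + bk for row, bk in zip(W, b)]
    return softmax(logits)


def targeted_attack(W: Sequence[Sequence[float]], b: Sequence[float], x0: Sequence[float],
                    target: int, eps: float, alpha: float, max_steps: int = 100) -> List[float]:
    """Repeatedly step x by alpha * sign(d log p(target | x) / dx), clipping
    every coordinate to stay within eps of the original input x0."""
    x = list(x0)
    for _ in range(max_steps):
        p = class_probs(W, b, x)
        if argmax(p) == target:
            break  # the model now prefers the target class
        # For a linear softmax model: d log p_t / dx_i = W[t][i] - sum_k p_k * W[k][i].
        grad = [W[target][i] - sum(p[k] * W[k][i] for k in range(len(W))) for i in range(len(x))]
        for i, g in enumerate(grad):
            step = alpha if g > 0 else (-alpha if g < 0 else 0.0)
            x[i] = min(max(x[i] + step, x0[i] - eps), x0[i] + eps)
    return x


if __name__ == "__main__":
    n = 1000
    # Hypothetical model: class 0 wins on its bias alone,
    # class 2 responds to an alternating pattern of input coordinates.
    W = [[0.0] * n, [0.0] * n, [0.05 if i % 2 == 0 else -0.05 for i in range(n)]]
    b = [2.0, 0.0, 0.0]
    x0 = [0.5] * n
    x_adv = targeted_attack(W, b, x0, target=2, eps=0.05, alpha=0.01)
    print(argmax(class_probs(W, b, x0)), argmax(class_probs(W, b, x_adv)))  # 0 2
```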

Michael Watts said,

July 11, 2017 @ 1:50 pm

It can't be this; if the training set didn't have a panda, there would be no way for the first image to be classified as "panda" at any level of confidence.

KeithB said,

July 11, 2017 @ 4:49 pm

Note I did not say uniform. I know enough to see that some guy standing in the middle of the road waving his arms is not directing traffic. But a man in a uniform probably *is* directing traffic.

Yakusa Cobb said,

July 12, 2017 @ 7:22 am

I'm surprised that nobody has yet linked to this:

http://www.gocomics.com/pearlsbeforeswine/2017/07/01

Idran said,

July 12, 2017 @ 11:05 am

@KeithB: No, I know you didn't say uniform. My point was that you have a method of determining how likely it is that someone directing traffic is legitimate, the same way a driverless car has a method of determining it, and both methods can be subverted if a person knows the details of that method. If you know how something is figured out, whether by a person or by an algorithm, you can find a way to trick it; the fact that it can be tricked isn't a point against it in the abstract, because that's true of any method. The real question is how easily it's tricked in practice, but there's no way of answering that in this discussion, since neither of us knows what method it would actually use to identify a traffic cop.

BZ said,

July 12, 2017 @ 11:57 am

I'm still waiting for my state's 511 traffic information system to recognize me saying "Interstate 295". It rarely confuses it with something else; it just refuses to identify what I'm saying. I'm amazed that a system that could presumably be trained on a relatively small number of words and phrases (it even gives me a list of what I can say when I ask it) can't assign a high-enough probability to my input, even if it turned out to be wrong on occasion. (I think there was one instance when it interpreted it as "Interstate 95", which is actually not bad.) [/rant]
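The behavior BZ describes is what you would expect from a small-vocabulary recognizer with a rejection threshold: rather than return its best guess, it answers "no match" whenever the best in-grammar phrase is not probable enough. A minimal sketch follows; the threshold values and the posterior scores (including the hypothetical "Interstate 195" candidate) are illustrative assumptions, not a description of how any actual 511 system works.

```python
from typing import Mapping, Optional


def recognize(posteriors: Mapping[str, float], threshold: float) -> Optional[str]:
    """Return the most probable in-grammar phrase, or None ("no match")
    when even the best candidate scores below the rejection threshold."""
    if not posteriors:
        return None
    best = max(posteriors, key=posteriors.__getitem__)
    return best if posteriors[best] >= threshold else None


if __name__ == "__main__":
    # Hypothetical scores for one utterance of "Interstate 295".
    scores = {"Interstate 295": 0.45, "Interstate 95": 0.40, "Interstate 195": 0.15}
    print(recognize(scores, threshold=0.6))  # None: the caller hears "no match"
    print(recognize(scores, threshold=0.4))  # Interstate 295
```

The trade-off is exactly what BZ is complaining about: a high threshold avoids confident wrong answers (the occasional "Interstate 95") at the cost of more refusals.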

Sergey said,

July 12, 2017 @ 1:34 pm

Michael Watts, the training set obviously did have a panda, but probably in a different pose. So the system has to decide which of the not-quite-fitting images fits better, and small changes can push the image over the boundary one way or the other. I've searched for some gibbon pictures, and it turns out that some of them have whitish fur and black circles around the eyes, so they're not _that_ unlike pandas. Though I must admit that 99% is quite high and surprising (and turning an airplane into a cat, which they talk about in the article, is even more surprising if they do it with the same level of confidence).

@myl: Thanks, I didn't think of clicking on the link; it's quite interesting. I guess the neural network manages to find some sampling of pixels that differentiates the images, and it might be a smallish subset. I wonder if some protection could be achieved by doing the recognition at multiple pixel resolutions, with a final arbitration step requiring good agreement among all resolutions. I suspect that at a lower resolution it would be more difficult to tweak the image without the change becoming noticeable to the human eye. Convolutional networks should already use multiple resolutions, but AFAIK they treat them as more or less equal inputs from which to pick and choose.
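Sergey's multi-resolution idea can be sketched as: classify the image at several average-pooled resolutions and accept a label only if every resolution agrees, otherwise reject. The texture-detecting classifier below is a deliberately trivial, hypothetical stand-in for a real network, chosen so that a fine checkerboard perturbation fools it at full resolution but averages away when pooled. As Idran notes above, an attacker who knows about the arbitration could instead aim for perturbations that survive pooling, so this is a sketch of the idea, not a demonstrated defense.

```python
from typing import Callable, List, Optional, Sequence

Image = List[List[float]]


def avg_pool(img: Image, k: int) -> Image:
    """Downsample by averaging non-overlapping k x k blocks
    (rows/columns that don't fill a whole block are dropped)."""
    h, w = len(img) // k, len(img[0]) // k
    return [
        [
            sum(img[r * k + dr][c * k + dc] for dr in range(k) for dc in range(k)) / (k * k)
            for c in range(w)
        ]
        for r in range(h)
    ]


def classify_with_arbitration(
    img: Image,
    classify: Callable[[Image], str],
    factors: Sequence[int] = (1, 2, 4),
) -> Optional[str]:
    """Classify at each pooling factor; return the label only if all agree."""
    labels = [classify(img if k == 1 else avg_pool(img, k)) for k in factors]
    return labels[0] if all(label == labels[0] for label in labels) else None


def toy_texture_classifier(img: Image) -> str:
    """Hypothetical stand-in for a real network: 'textured' if any two
    horizontally adjacent pixels differ by more than 0.1."""
    max_step = max(abs(row[c + 1] - row[c]) for row in img for c in range(len(row) - 1))
    return "textured" if max_step > 0.1 else "smooth"


if __name__ == "__main__":
    flat = [[0.5] * 8 for _ in range(8)]
    # Checkerboard perturbation of +/-0.06 on the flat 8x8 image.
    checker = [[0.5 + (0.06 if (r + c) % 2 == 0 else -0.06) for c in range(8)] for r in range(8)]
    print(classify_with_arbitration(flat, toy_texture_classifier))     # smooth
    print(toy_texture_classifier(checker))                             # textured
    print(classify_with_arbitration(checker, toy_texture_classifier))  # None
```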