"AI" == "vehicle"?


Back in March, the AAAI ("Association for the Advancement of Artificial Intelligence") published an "AAAI Presidential Panel Report on the Future of AI Research":

The AAAI 2025 presidential panel on the future of AI research aims to help all AI stakeholders navigate the recent significant transformations in AI capabilities, as well as AI research methodologies, environments, and communities. It includes 17 chapters, each covering one topic related to AI research, and sketching its history, current trends and open challenges. The study has been conducted by 25 AI researchers and supported by 15 additional contributors and 475 respondents to a community survey.

You can read the whole thing here — and you should, if you're interested in the topic.

The chapter on "AI Perception vs. Reality", written by Rodney Brooks, asks "How should we challenge exaggerated claims about AI’s capabilities and set realistic expectations?" It sets the stage with an especially relevant lexicographical point:

One of the problems is that AI is actually a wide-reaching term that can be used in many different ways. But now in common parlance it is used as if it refers to a single thing. In their 2024 book [5] Narayanan and Kapoor likened it to the language of transport having only one noun, ‘vehicle’, say, to refer to bicycles, skate boards, nuclear submarines, rockets, automobiles, 18 wheeled trucks, container ships, etc. It is impossible to say almost anything about ‘vehicles’ and their capabilities in those circumstances, as anything one says will be true for only a small fraction of all ‘vehicles’. This lack of distinction compounds the problem of hype, as particular statements get overgeneralized.

(The cited book is AI Snake Oil: What Artificial Intelligence Can Do, What It Can’t, and How to Tell the Difference.)

I'm used to making this point by noting that "AI" now just means something like "complicated computer program", but the vehicle analogy is better and clearer.

The Brooks chapter starts with this three-point summary:

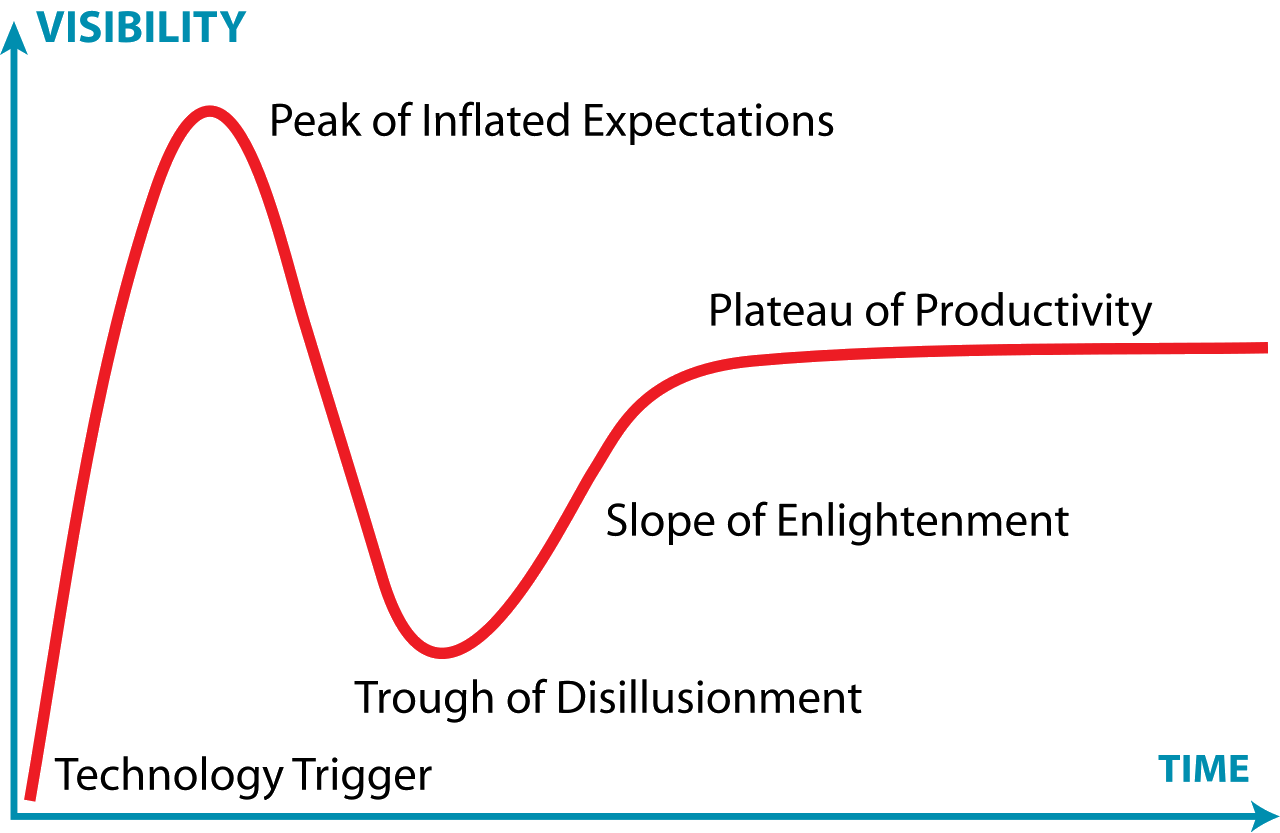

- Over the last 70 years, against a background of constant delivery of new and important technologies, many AI innovations have generated excessive hype.
- Like other technologies these hype trends have followed the general Gartner Hype Cycle characterization.
- The current Generative AI Hype Cycle is the first introduction to AI for perhaps the majority of people in the world and they do not have the tools to gauge the validity of many claims.

Here's a picture of the "Gartner Hype Cycle", from the Wikipedia article:

A more elaborately annotated graph is here.

Wikipedia explains that "The hype cycle framework was introduced in 1995 by Gartner analyst Jackie Fenn to provide a graphical and conceptual presentation of the maturity of emerging technologies through five phases."

Jackie Fenn doesn't have a Wikipedia page — a gap someone should fix! — but her LinkedIn page provides relevant details.

Chester Draws said,

June 20, 2025 @ 5:05 pm

Of course, if the AI were actually intelligent, the vehicle analogy would fall over, because an intelligent device would be able to answer questions and do a wide range of tasks, just as people can.

What we actually have, in practice, is translation software that beautifully parrots back what it sees elsewhere, but has zero common sense and cannot work outside translation.

And editing software that will fix prose up into a much finer form, but doesn't notice that the content is utterly wrong.

David Cameron Staples said,

June 21, 2025 @ 1:43 am

@Chester…

That's part of the "vehicle" analogy from the original book. You're describing LLMs there: large language models. That's only one type of "AI".

This is what's sometimes called "spicy autocorrect", and the current iteration of things like Google Translate is (I believe) basically the same sort of thing, tuned differently. The Grammar-f**ing (insert "ix" or "uck" as appropriate) engines like Grammarly are in the same sort of clade.

The image generator AIs like Midjourney are a different type of beast entirely, even if they're working on the same vague fundamental concept of "build a statistical model and generate things which match those (increasingly complex) patterns".

Then there are modelling AIs which are what New Scientist is talking about with "New AI Can Detect Cancer From MRI images!" (or from Fitbit data, or from a cellphone photo, or whatever). They take slabs of input and turn them into probabilities of future events. They can be useful, if carefully trained and carefully interpreted.

Then there are speech-to-text engines, and text-to-speech engines. These can actually be useful, but then you've got issues like actors having their voices copied and sold, or of regular people having samples of their voices used to build voice models, and then used to call their grandmothers asking for an emergency bank transfer.

Also, there are two parts to most of these: the Learning engine, which takes data and does things to it in order to build a Model, and the Model, which is the thing you're actually talking to. The Model is read-only in most LLM engines. Once you close the session, it is incapable in principle of learning from what you said. (That's OK, though: everything you said was logged, and goes into the hopper to be used to build the next iteration of the Model. So you will eventually see your own words and private information sent back at you.)

The thing is, you're not just seeing different scales of things (small models which will run on your phone, up to engines which need a dedicated power station to run); you're seeing different types of thing all lumped together as "AI". It's as if you see news of a Vehicle which has taken 2 metric tons into high Earth orbit, and another Vehicle which has broken the air speed record, and you go to a bicycle shop... sorry, a two-wheeled pedalled Vehicle shop, and demand to know when your bicycle will carry a ton of cargo at Mach 3. And over there people are talking about a Vehicle which is carrying a thousand containers, and talking, as if they know what they're talking about, about how it should be easy to make that run on solar power and a small sail.

Oh, and it's worse than that, because they're selling cars where they don't know how the engines work.

No, seriously. We know how they work in a general sense. The Learning Engine takes data and builds a model, and then you interact with the model. Fine. Except, we don't know how the model works. As far as we can tell, LLM learning engines take All Language, stick it in a press, and squeeze it until more language squirts out. But we don't know how. Nobody knows how. (Anthropic to some extent exists in order to take LLM models apart to try to figure out how they work. Early results are: it's all word association and lies.) All the interactivity and Turing Test passing stuff is entirely emergent, and it is vitally important to remember that just because we don't know how it's doing it, that doesn't mean that the answer is that it's conscious, because it's really, really, really not. It's a statistical model of language that's doing linear algebra to tokens. It's not a mind, or even a model of a mind.

I may have opinions about "AI".

Robot Therapist said,

June 21, 2025 @ 4:02 am

Indeed. I worked in "AI" in the first half of the 1980s. It had pretty much nothing in common with the current "AI" vehicles. At that time, we were mainly interested in working out how humans do things, and then programming computers to emulate that.

Chester Draws said,

June 21, 2025 @ 7:20 pm

I don't think we are disagreeing very much, David.

My point is that true intelligence is one skill that can cover all the things that "AI" does. True intelligence is all "vehicles" at once (although some better than others).

However, I do disagree on the "Oh, and it's worse than that, because they're selling cars where they don't know how the engines work". We basically don't have a scooby on how the human mind works, except for some notorious areas where it doesn't work very well. Why would machine minds need to be better in that regard?