Alignment


In today's email there was a message from AAAI 2026 that included a "Call for the Special Track on AI Alignment":

AAAI-26 is pleased to announce a special track focused on AI Alignment. This track recognizes that as we begin to build more and more capable AI systems, it becomes crucial to ensure that the goals and actions of such systems are aligned with human values. To accomplish this, we need to understand the risks of these systems and research methods to mitigate these risks. The track covers many different aspects of AI Alignment, including but not limited to the following topics:

- Value alignment and reward modeling: How do we accurately model a diverse set of human preferences, and ensure that AI systems are aligned to these same preferences?

- Scalable oversight and control: How can we effectively supervise, monitor and control increasingly capable AI systems? How do we ensure that such systems behave according to predefined safety considerations?

- Robustness and security: How do we create AI systems that work well in new or adversarial environments, including scenarios where a malicious actor is intentionally attempting to misuse the system?

- Interpretability: How can we understand and explain the operations of AI models to a diverse set of stakeholders in a transparent and methodical manner?

- Governance: How do we put in place policies and regulations that manage the development and deployment of AI models to ensure broad societal benefits and fairly distributed societal risks?

- Superintelligence: How can we control and monitor systems that may, in some respects, surpass human intelligence and capabilities?

- Evaluation: How can we evaluate the safety of models and the effectiveness of various alignment techniques, including both technical and human-centered approaches?

- Participation: How can we actively engage impacted individuals and communities in shaping the set of values to which AI systems align?

This reminded me of my participation a few months ago in the advisory committee for "ARIA: Aligning Research to Impact Autism", which was one of the four initiatives of the "Coalition for Aligning Science".

Alignment, like journey, is an old word that has been finding new meanings and broader uses over the past few decades. I suspect a role for Dungeons & Dragons, which has been impacting broader culture in many ways since the 1970s:

In the Dungeons & Dragons (D&D) fantasy role-playing game, alignment is a categorization of the ethical and moral perspective of player characters, non-player characters, and creatures.

Most versions of the game feature a system in which players make two choices for characters. One is the character's views on "law" versus "chaos", the other on "good" versus "evil". The two axes, along with "neutral" in the middle, allow for nine alignments in combination. […]

The original version of D&D (1974) allowed players to choose among three alignments when creating a character: lawful, implying honor and respect for society's rules; chaotic, implying rebelliousness and individualism; and neutral, seeking a balance between the extremes.

In 1976, Gary Gygax published an article titled "The Meaning of Law and Chaos in Dungeons and Dragons and Their Relationships to Good and Evil" in The Strategic Review Volume 2, issue 1, that introduced a second axis of good, implying altruism and respect for life, versus evil, implying selfishness and no respect for life. The 1977 release of the Dungeons & Dragons Basic Set incorporated this model. As with the law-versus-chaos axis, a neutral position exists between the extremes. Characters and creatures could be lawful and evil at the same time (such as a tyrant), or chaotic but good (such as Robin Hood).

For some metaphorical extensions, see "Alignment charts and other low-dimensional visualizations", 1/7/2020.

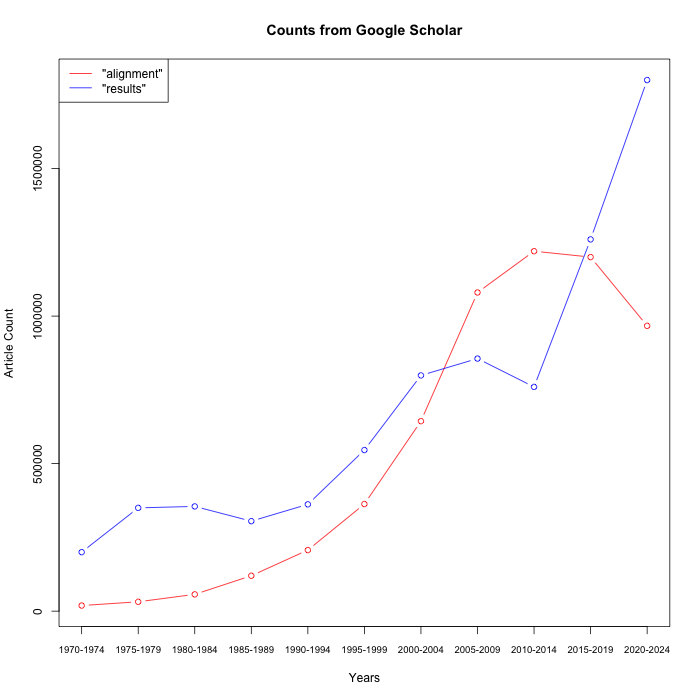

A quick scan of Google Research results shows a steady increase in references including the word alignment, through 2014 or so. (I've included counts for the word results to check for general corpus-size increases.)

| YEARS | ALIGNMENT | RESULTS | RATIO |
|-----------|-----------|---------|-------|
| 1970-1974 | 19000 | 200000 | 10.53 |
| 1975-1979 | 31700 | 350000 | 11.04 |
| 1980-1984 | 56900 | 355000 | 6.24 |
| 1985-1989 | 119999 | 305000 | 2.54 |
| 1990-1994 | 207000 | 362000 | 1.75 |
| 1995-1999 | 363000 | 546000 | 1.50 |
| 2000-2004 | 644000 | 799000 | 1.24 |
| 2005-2009 | 1080000 | 856000 | 0.79 |
| 2010-2014 | 1220000 | 760000 | 0.62 |
| 2015-2019 | 1200000 | 1260000 | 1.05 |
| 2020-2024 | 967000 | 1800000 | 1.86 |
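For anyone who wants to check the arithmetic: the RATIO column appears to be the RESULTS count divided by the ALIGNMENT count, so a falling ratio means that alignment is gaining ground relative to the control word. Here is a minimal Python sketch under that assumption, with the counts copied from the table; the names `counts` and `ratio` are just illustrative.

```python
# Counts copied from the table above:
# (years, hits for "alignment", hits for the control word "results").
counts = [
    ("1970-1974", 19000, 200000),
    ("1975-1979", 31700, 350000),
    ("1980-1984", 56900, 355000),
    ("1985-1989", 119999, 305000),
    ("1990-1994", 207000, 362000),
    ("1995-1999", 363000, 546000),
    ("2000-2004", 644000, 799000),
    ("2005-2009", 1080000, 856000),
    ("2010-2014", 1220000, 760000),
    ("2015-2019", 1200000, 1260000),
    ("2020-2024", 967000, 1800000),
]


def ratio(alignment, results):
    """Assumed definition of RATIO: control-word hits per "alignment" hit."""
    return results / alignment


# Round to two decimals, as in the table.
ratios = {years: round(ratio(a, r), 2) for years, a, r in counts}

for years, value in ratios.items():
    print(f"{years}  {value:.2f}")
```

On this reading, the lowest ratio (the period where alignment is most frequent relative to results) falls in 2010-2014, which matches the "through 2014 or so" impression.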

And a graphical version:

It would be interesting to track the evolution, across the decades in various cultural areas, of meaning and sentiment for alignment and aligning.

Update — see also "Interpersonal and sociocultural alignment", 7/15/2025.

S Frankel said,

July 15, 2025 @ 3:42 pm

Hoping the Austronesianists will check in here.

AntC said,

July 15, 2025 @ 4:51 pm

Mai Kuha said,

July 15, 2025 @ 9:42 pm

I work in tech. One day, I expressed a concern to a manager. "I am totally aligned with you", he reassured me. It was a warm fuzzy moment.

Jonathan Smith said,

July 15, 2025 @ 10:01 pm

"crucial to ensure that the goals and actions of such systems are aligned with human values."

What a load of nightsoil, yes let's keep pretending it's the systems and not the stupid and evil human deployers of the systems that need "alignment". Need "tore down almost aligned with the ground" to quote-ish Freddie King. Really the downright dumbest timeline as the kids say with this quote-on-quote AI stuff…

Rick Rubenstein said,

July 15, 2025 @ 10:28 pm

I will certainly breathe a sigh of relief when the conference is over and all the above questions have been answered to everyone's satisfaction.

bks said,

July 16, 2025 @ 5:40 am

Sorry, never played D&D. To me alignment is something done to new tires for an extra fee.

Bob Ladd said,

July 16, 2025 @ 8:52 am

Actually, despite this post being about "alignment", I was also curious about "track" in the announcement quoted in the OP. I don't think I've ever been to a conference where you can focus on a specific "track". Is this a tech thing? An AI thing? Or have I just not been paying attention?

Philip Taylor said,

July 16, 2025 @ 9:30 am

"Track" was inherently meaningful to me, Bob, and I would suggest that it is analogous to a "thread". I can certainly imagine a conference on computer typesetting where one track was focussed on XML, another on TeX, and so on. And BKS, if your favourite garage is charging you extra for having your tyres aligned, I would suggest you take your custom elsewhere. It is the wheels that are aligned, not the tyres !

Sniffnoy said,

July 16, 2025 @ 10:34 am

At least as far as I'm aware, the application of "alignment" to AI comes from Eliezer Yudkowsky or at least someone in his circles. He used to speak of "friendly AI" and "unfriendly AI". However, the meaning of these terms was fairly different from the plain meaning, which confused people. So at some point he switched to talking about "aligned" or "unaligned" AI. I don't know whether the terminology switch was his own idea or suggested by someone else. That's where I saw it first and I suspect that was the start of it (other groups generally weren't talking about this problem back then, is my impression). However tracking down the switch is a bit hard to search for.

Mark Liberman said,

July 18, 2025 @ 5:34 am

@Sniffnoy:

See "Interpersonal and socio-cultural alignment", 7/17/2025.

Kim said,

July 19, 2025 @ 1:06 am

I recognize "track" in this sense from biomedical-field conferences from the mid-2000s at the latest, maybe a bit earlier. I don't know if it was adopted from techworld; at the time I just assumed it was a development in generalized professional-society-corporatese.