AI Overview jokes


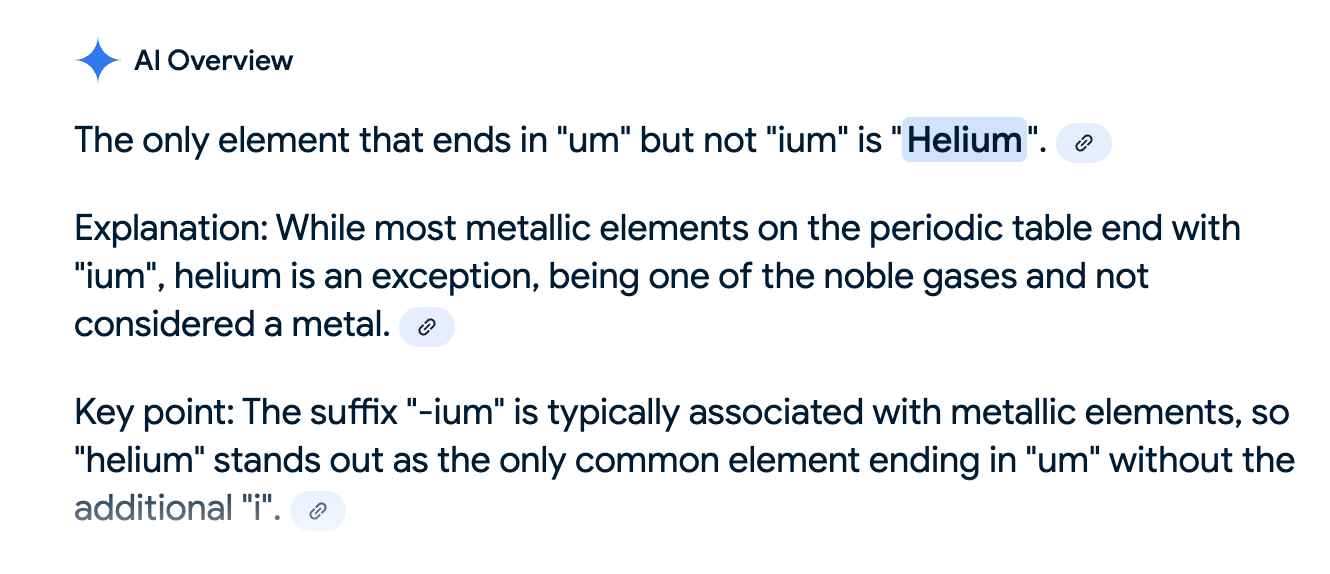

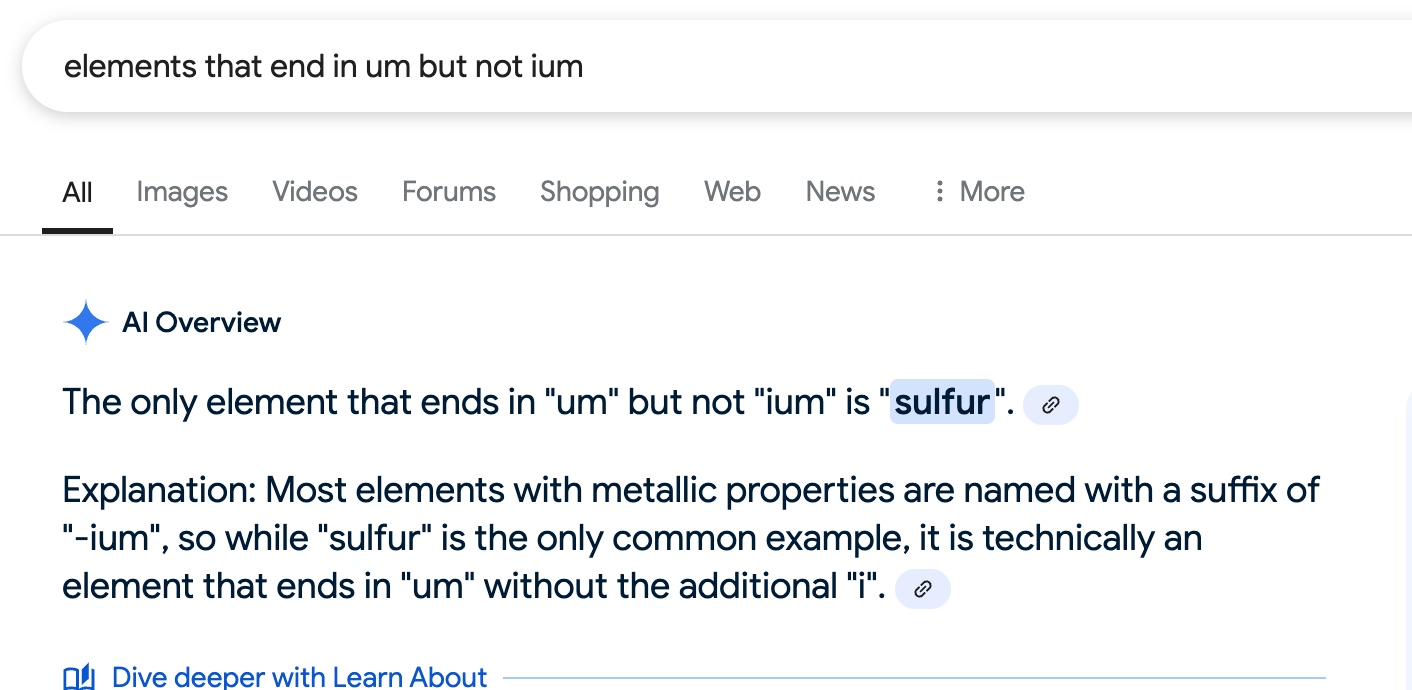

There's recently been a minor social- and mass-media fad for weird "AI Overview" answers from Google. The results are a moving target, either because of back-end fixes or because of the inherently stochastic nature of LLM results, but some of them are funny while they last. One query that still works this morning is a request for "elements that end in um but not ium", which sometimes answers

and sometimes answers

Of course the answer should be something like "Molybdenum, Tantalum, Platinum, and Lanthanum" (also "Aluminum" in places that spell it that way — see below), and no doubt Future Gemini will figure that out or be instructed to "know" it.
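For contrast, the check itself is trivial once you can see the letters. Here is a minimal sketch, assuming a hand-typed list of the 118 element names with American spellings ("aluminum", "cesium", "sulfur"); the list and the helper name `ends_in_um_not_ium` are illustrative, not taken from any library.

```python
# Element names 1-118 in atomic-number order, American spellings.
# Typed in by hand for illustration; not loaded from any data source.
ELEMENTS = """
hydrogen helium lithium beryllium boron carbon nitrogen oxygen fluorine neon
sodium magnesium aluminum silicon phosphorus sulfur chlorine argon potassium calcium
scandium titanium vanadium chromium manganese iron cobalt nickel copper zinc
gallium germanium arsenic selenium bromine krypton rubidium strontium yttrium zirconium
niobium molybdenum technetium ruthenium rhodium palladium silver cadmium indium tin
antimony tellurium iodine xenon cesium barium lanthanum cerium praseodymium neodymium
promethium samarium europium gadolinium terbium dysprosium holmium erbium thulium ytterbium
lutetium hafnium tantalum tungsten rhenium osmium iridium platinum gold mercury
thallium lead bismuth polonium astatine radon francium radium actinium thorium
protactinium uranium neptunium plutonium americium curium berkelium californium einsteinium fermium
mendelevium nobelium lawrencium rutherfordium dubnium seaborgium bohrium hassium meitnerium darmstadtium
roentgenium copernicium nihonium flerovium moscovium livermorium tennessine oganesson
""".split()


def ends_in_um_not_ium(name: str) -> bool:
    """True if the name ends in "um" but not in "ium" (a purely character-level test)."""
    return name.endswith("um") and not name.endswith("ium")


matches = [name.capitalize() for name in ELEMENTS if ends_in_um_not_ium(name)]
print(matches)  # ['Aluminum', 'Molybdenum', 'Lanthanum', 'Tantalum', 'Platinum']

# With the IUPAC/British spelling, Aluminum drops out of the list.
print(ends_in_um_not_ium("aluminium"))  # False
```

The output is in atomic-number order, which is why Aluminum comes first here.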

The funniest one that I've seen so far is a response to the query "is tripe kosher", which once answered that it depends on the religion of the cow:

I don’t know if Google can top this, their finest work to date

— Delbert Grady Seasons (@delbertgseasons.bsky.social) February 25, 2025 at 1:31 AM

…but now gives a correct answer, at least when I ask…

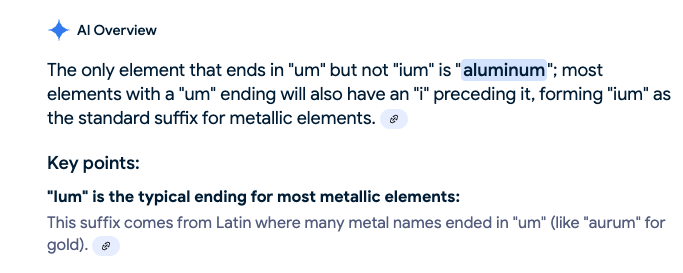

Update: Now when I ask about "elements that end in um but not ium", AI Overview tells me that aluminum is the only one:

So LLMs apparently continue to have difficulties with spelling and counting.

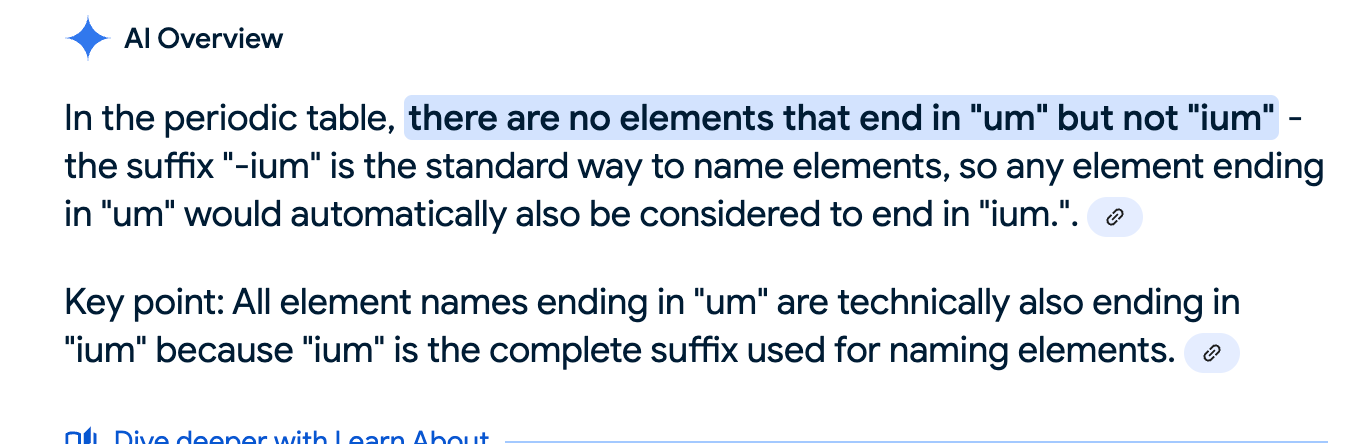

Update #2: Or maybe the count is zero?

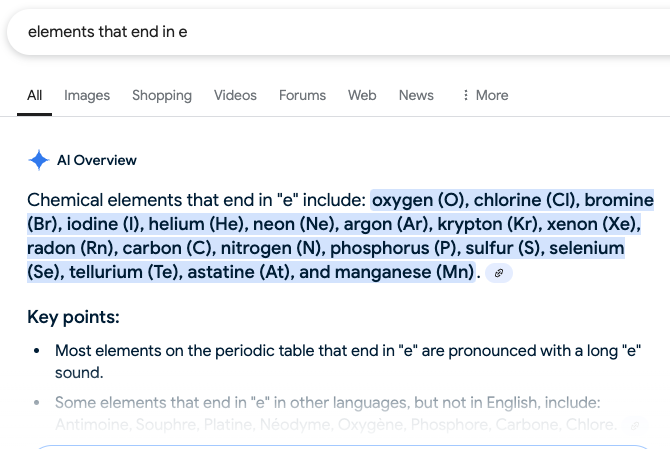

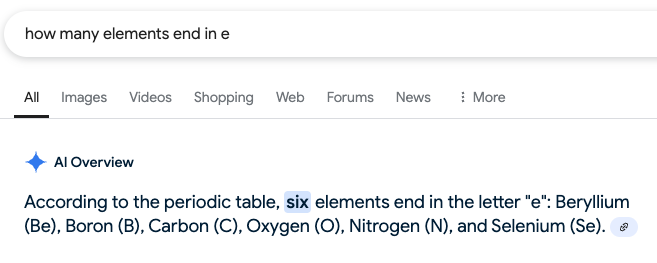

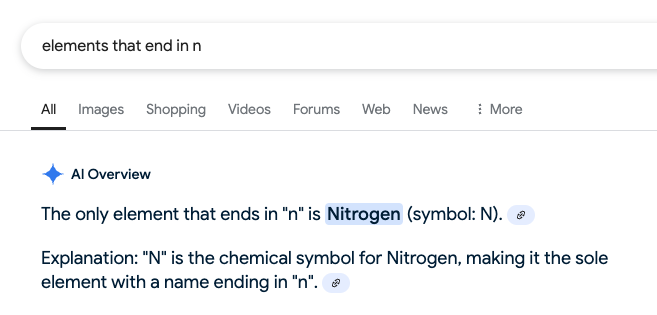

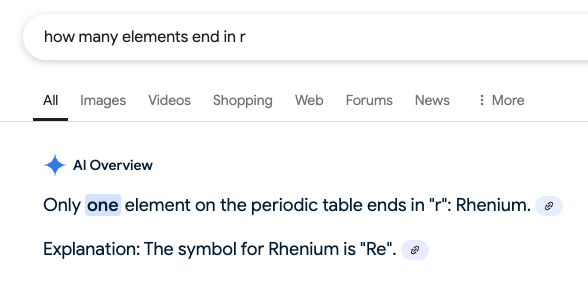

Update #3: Some other questions yield equally weird AI Overview answers:

Jerry Packard said,

February 26, 2025 @ 7:56 am

What a riot.

Linda Seebach said,

February 26, 2025 @ 8:30 am

Someone who understands much more about this than I do can explain it better, but the short explanation is that LLMs do not store words as such: they assign tokens, and tokens don't have characters.
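To make that concrete, here is a toy sketch, not any real model's tokenizer: greedy longest-match segmentation (roughly the WordPiece approach; BPE-based models differ in detail) over a tiny hypothetical vocabulary `TOY_VOCAB`, whose pieces and integer IDs are invented for illustration.

```python
# Hypothetical subword vocabulary: pieces and IDs are invented, not from any real model.
TOY_VOCAB = {"al": 412, "umin": 9031, "um": 388,
             "a": 64, "i": 72, "l": 75, "m": 76, "n": 77, "u": 84}


def tokenize(word: str, vocab: dict[str, int]) -> list[int]:
    """Split `word` into vocabulary pieces by greedy longest match and return their IDs."""
    ids = []
    pos = 0
    while pos < len(word):
        # Try the longest candidate piece starting at `pos` first.
        for end in range(len(word), pos, -1):
            piece = word[pos:end]
            if piece in vocab:
                ids.append(vocab[piece])
                pos = end
                break
        else:
            raise ValueError(f"no vocabulary piece matches {word!r} at position {pos}")
    return ids


print(tokenize("aluminum", TOY_VOCAB))   # [412, 9031, 388]
print(tokenize("aluminium", TOY_VOCAB))  # [412, 9031, 72, 388]
```

In this toy setup the model receives three integers for "aluminum", not eight letters; answering "what are the last two letters?" would require it to have learned, indirectly, that ID 388 happens to spell "um".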

Jason Stokes said,

February 26, 2025 @ 9:30 am

Google AI is so confidently and arrantly wrong that it has to be considered disinformation, by Google's own standards.

John Maline said,

February 26, 2025 @ 10:12 am

@Linda Seebach

Yes. A good overview article on “the spelling miracle” https://www.lesswrong.com/posts/5sNLX2yY5FzkCp7Ju/the-spelling-miracle-gpt-3-spelling-abilities-and-glitch

@Jason Stokes

Seems one of the risks of LLMs in general – super confident tone while serving up a lossy-compressed summary of the internet.

Haamu said,

February 26, 2025 @ 10:59 am

This morning I had the exact same um/ium search, worded identically, proposed as the top choice in the dropdown when I clicked into the Google search box. (I got the same "sulfur" answer.)

So Google is pushing these example searches out to people unsolicited, apparently to demonstrate the capabilities of AI Overview. Someone at Google decided this was a good search for that purpose. Was that someone human, or was this just another judgment that's been outsourced and optimized?

Haamu said,

February 26, 2025 @ 11:08 am

PS — As noted in the linked BBC article, Google refers to this as "tak[ing] the legwork out of searching," which is an interesting metaphor, given that the legs were dropped from the equation several decades ago. The atrophy has moved further north.

Rodger C said,

February 26, 2025 @ 11:54 am

Recently I searched "American surnames of African origin" and got, "Many common African American surnames include Williams, Johnson, Smith, Brown, and Jackson." In fact I had to do a great deal of searching to find anything else.

MattF said,

February 26, 2025 @ 12:18 pm

@Rodger C

I tried that search on DuckDuckGo, and it appeared to work in a routine and uneventful fashion.

EB said,

February 26, 2025 @ 12:32 pm

I just asked Google "what are the last two letters of aluminum" and it responded with:

The chemical symbol for aluminum is "Al", so the last two letters of aluminum are "l" and "u".

Wow.

Terry K. said,

February 26, 2025 @ 12:34 pm

@MattF

I tried both Google and DuckDuckGo just now, and for "American surnames of African origin", both the search results and the AI info on each gave results for African-American surnames instead. The only difference is that Google shows AI results at the top by default, whereas with DuckDuckGo AI results are an option one can turn on or not.

David Morris said,

February 26, 2025 @ 2:21 pm

I recently had to decide where to place a hyphen in 'single' (for music typesetting purposes – I don't usually hyphenate 'single'). Google AI overview's answer was “No, you should not hyphenate the word “single” because it is a one-syllable word.”

Philip Taylor said,

February 26, 2025 @ 3:39 pm

David —

Rick Rubenstein said,

February 26, 2025 @ 4:04 pm

Philip has some interesting points in his response to David. ;-)

Bob Michael said,

February 26, 2025 @ 10:21 pm

Q: Did all languages evolve from just one language?

Google AI Summary:

According to the prevailing theory among linguists, yes, all modern languages are believed to have evolved from a single ancestral language, often referred to as "Proto-Indo-European" (PIE), which is thought to have originated in central Europe and spread as people migrated and traded throughout the region; however, the exact origins of language and whether it emerged in one place or multiple times remain a subject of debate.

David Morris said,

February 27, 2025 @ 6:11 am

I didn't understand a sing-le word of that …

David Marjanović said,

February 27, 2025 @ 9:24 am

I always took for granted that the suggested searches are simply searches that many people have undertaken recently.

KeithB said,

February 27, 2025 @ 11:22 am

Sometimes it does pretty well. Someone asked Musk's chatbot Grok 3 about the recent attempt to have federal workers list 5 things they did:

Q:

Elon Musk sent an email to around 3 million federal government employees asking them to respond with 5 bullet points of things they did last week. He proposed to feed the responses to a LLM (probably you) with a view to some kind of activity-based analysis of who to retain and who to fire. What is the feasibility of such an idea?

A:

Elon Musk’s idea of having 3 million federal employees submit five bullet points about their work from the past week, then feeding that data into an LLM for an activity-based analysis of who to retain or fire, is ambitious and theoretically possible but comes with significant practical, ethical, and technical challenges. Let’s break it down.

First, the sheer scale of data—15 million bullet points (3 million employees times five each)—is manageable for a modern LLM, especially one designed for large-scale analysis. With enough computational power, I could process that volume, extract patterns, categorize activities, and even score productivity or relevance based on predefined criteria. For example, I could identify who’s doing high-impact work (e.g., solving critical problems) versus low-value tasks (e.g., attending endless meetings), assuming the criteria are clear. The tech exists to handle this, and xAI’s infrastructure could likely support it.

The bigger issue is the quality of the input. People know they’re being judged, so they’ll game the system. You’d get polished, exaggerated, or outright fabricated bullet points—think “Saved the department $10 million” versus “Answered 12 emails.” Without a way to verify truthfulness, the data’s unreliable. Cross-referencing with other sources (e.g., project logs, emails, or output metrics) could help, but that’s a massive undertaking for 3 million people and not something an LLM can do alone without integration into government systems.

Then there’s the analysis itself. I could cluster employees by role, department, or task type and flag outliers—say, someone whose bullet points suggest they’re redundant or underperforming. But “retain or fire” decisions need context an LLM can’t fully grasp: organizational goals, team dynamics, or intangible contributions like morale. Plus, federal jobs often involve bureaucracy or compliance work that looks mundane on paper but is legally required. How do you weigh that against, say, innovation? Musk would need to define razor-sharp metrics, and even then, the model’s biases (or the biases in the training data) could skew results.

Legally and ethically, this is a minefield. Federal employees have unions, protections, and due process rights—mass analysis for firing could spark lawsuits or congressional backlash. Privacy’s another hurdle; those bullet points might contain sensitive info, and feeding them into an AI raises data security questions. Musk might argue it’s opt-in, but with jobs on the line, it’s coercive in practice.

Finally, execution speed matters. Processing 15 million bullet points, generating insights, and making recommendations could take days or weeks, depending on the depth of analysis. Musk loves fast results, but refining this into actionable decisions—especially with human oversight—wouldn’t be instant.

In short, it’s feasible as a concept: I could crunch the data and spit out a ranked list. But making it fair, accurate, and legally defensible? That’s a monumental challenge. It’d work better as a thought experiment or a pilot with a smaller, controlled group—say, a single agency—before scaling up. What do you think Musk’s endgame is here? Just efficiency, or something bigger?

Philip Taylor said,

March 5, 2025 @ 5:19 am

Not a joke but a sad fact. I need to produce a 1920 x 1080 grid with axes labelled so that I can determine the actual resolution of a television which is larger than 720p but not as large as 1080p. Wanting to label the axes at sensible intervals, I told Google "Factorise 1920". Here is what Google AI Overview returned:

OK, the second sentence is correct (although the wording is strange) but the first sentence ?!

Randy Hudson said,

March 7, 2025 @ 5:48 pm

@Philip — The second digits in "27 x 31 x 51" should be superscripts.

"2 to the 7th times 3 (to the 1st) times 5 …"

128 x 3 x 5 does indeed equal 1920.
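Randy's reading is easy to confirm with plain trial division. The sketch below also computes gcd(1920, 1080), on the assumption (mine, not Philip's) that "sensible intervals" means a spacing that divides both screen dimensions evenly; the helper names `prime_factors` and `format_factors` are hypothetical.

```python
from math import gcd, prod


def prime_factors(n: int) -> dict[int, int]:
    """Return {prime: exponent} for n >= 2, found by trial division."""
    factors: dict[int, int] = {}
    p = 2
    while p * p <= n:
        while n % p == 0:
            factors[p] = factors.get(p, 0) + 1
            n //= p
        p += 1
    if n > 1:  # whatever remains is itself prime
        factors[n] = factors.get(n, 0) + 1
    return factors


def format_factors(factors: dict[int, int]) -> str:
    """Render {2: 7, 3: 1, 5: 1} as '2^7 × 3 × 5', omitting exponents of 1."""
    return " × ".join(f"{p}^{e}" if e > 1 else str(p) for p, e in factors.items())


f1920 = prime_factors(1920)
print(format_factors(f1920))                 # 2^7 × 3 × 5
print(prod(p ** e for p, e in f1920.items()))  # 1920, i.e. 128 * 3 * 5

# Any spacing that divides both dimensions is a divisor of their gcd.
step = gcd(1920, 1080)
print(step, 1920 // step, 1080 // step)      # 120 16 9
```

Since 1080 = 2^3 × 3^3 × 5, the shared factors are 2^3 × 3 × 5 = 120, giving a 16 × 9 grid of labelled cells; smaller divisors of 120 (60, 40, 24, ...) give finer grids.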

Philip Taylor said,

March 8, 2025 @ 4:22 am

Ah, I had not even considered that possibility, Randy, thank you so much for pointing it out. I wonder why Google AI Overview failed to display the superscripts correctly. But I now see that it has "realised" its error, and today displays "The prime factorization of 1920 is 2⁷ * 3 * 5".

Q. Pheevr said,

March 15, 2025 @ 4:33 pm

As usually turns out to be the case when AI comes up with an answer that sounds like something a clever person might say if they were being funny on purpose, this one was plagiarized from a clever person who was being funny on purpose: https://tripemarketingboard.co.uk/faqs/#1587984237975-7ff9334c-027d