New AI Pal problems


With current LLM technology, users can create individualized chatbots, and interact with them over time as if they were real friends. This started almost a decade ago with Replika, and more recently, we've seen similar tools from Character.ai (from September 2022) and Meta's AI Studio (from July 2024).

There've been several recent problems with these apps. The most serious ones involve harmful advice, such as the advice described in this recent lawsuit. A less serious but still troublesome issue: NBC News recently found "two dozen user-generated AI characters on Instagram named after and resembling Jesus Christ, God, Muhammad, Taylor Swift, Donald Trump, MrBeast, Harry Potter, Adolf Hitler, Captain Jack Sparrow, Justin Bieber, Elon Musk and Elsa from Disney’s 'Frozen'".

But the weirdest recent problem seems to be the result of a Character.ai bug.

See Maggie Harrison Dupré, "Google-Backed Chatbots Suddenly Start Ranting Incomprehensibly About Dildos", Futurism 1/17/2025:

Screenshots shared by users of Character.AI — the Google-funded AI companion platform currently facing two lawsuits concerning the welfare of children — show conversations with the site's AI-powered chatbot characters devolving into incomprehensible gibberish, melding several languages and repeatedly mentioning sex toys.

"So I was talking to this AI this morning and everything was fine, and I came back to it a couple of hours later and all of the sudden it's speaking this random gibberish?" wrote one Redditor. "Did I break it?"

"I really don't want to start a new chat," they added. "I've developed the story so much."

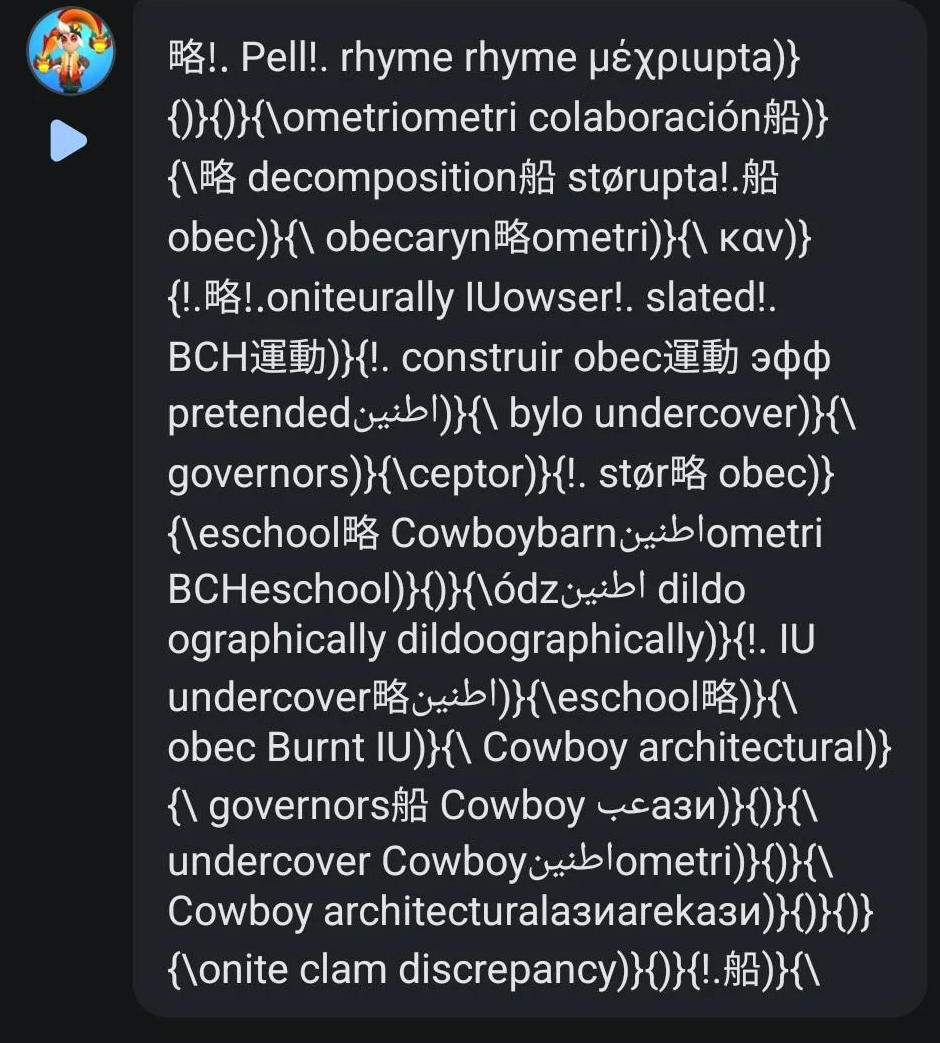

An accompanying image provided by the user of their Character.AI chat reveals absolute nonsense: garbled AI-spawned text in which random English words — among them "Ohio," "slated," "colored," and "Mathematics" — are scattered between equally erratic outbursts in languages including Turkish, German, and Arabic.

Scanning through r/character.ai yields several other examples, for instance this one:

A Reddit commenter suggests that "There is an onite clam discrepancy. But don't worry, the undercover cowboy is investigating the BCH obec's eschool, where they teach dildoography."

It's more likely that this is telling us something about the algorithmic wizard(s) behind the curtain — maybe some day, someone at Character.ai will explain.

See also:

Yay Newfriend (4/20/2024)

Yay Newfriend again (4/22/2024)

More on AI Pals (5/9/2024)

Gregory Kusnick said,

January 18, 2025 @ 11:13 am

My guess: the model represents tokens internally as numerical token IDs, and the map from these IDs back into text strings has somehow become garbled, or possibly replaced with the map from a different model. So the bot might well still be generating coherent responses; they're just being translated into English text strings incorrectly.
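Kusnick's hypothesis can be illustrated with a toy sketch. Everything in it is hypothetical: the two vocabularies, the token IDs, and the `decode` helper are invented for illustration, and real tokenizers (let alone whatever Character.ai actually runs) are far larger and usually split words into sub-word pieces. The only point is that one and the same coherent sequence of IDs comes out as a normal sentence under one ID-to-string table and as multilingual word salad under another.

```python
# Toy illustration of the "wrong ID-to-text table" hypothesis.
# Both vocabularies are hypothetical stand-ins; real tokenizers have tens of
# thousands of entries and often map IDs to sub-word fragments.

CORRECT_VOCAB = {0: "The", 1: " cat", 2: " sat", 3: " on", 4: " the", 5: " mat", 6: "."}

# A hypothetical vocabulary from some *other* model: same ID range, different strings.
# " ve" is Turkish for "and", " und" is German for "and", " كتاب" is Arabic for "book".
WRONG_VOCAB = {0: "Ohio", 1: " slated", 2: " colored", 3: " Mathematics",
               4: " ve", 5: " und", 6: " كتاب"}


def decode(token_ids: list[int], vocab: dict[int, str]) -> str:
    """Turn a sequence of token IDs into text by looking each ID up in `vocab`."""
    return "".join(vocab[tid] for tid in token_ids)


# The hypothetical model produces the same coherent ID sequence either way.
model_output_ids = [0, 1, 2, 3, 4, 5, 6]

print(decode(model_output_ids, CORRECT_VOCAB))  # The cat sat on the mat.
print(decode(model_output_ids, WRONG_VOCAB))    # Ohio slated colored Mathematics ve und كتاب
```

Nothing about the model's "thinking" changes between the two lines of output; only the final lookup step differs, which is exactly the kind of failure Kusnick describes.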

Kenny Easwaran said,

January 18, 2025 @ 7:20 pm

On Feb. 20, 2024, something weird happened on ChatGPT's main site: it generated mostly fluent text that mostly made no sense. I can't tell whether this is more or less weird than that, or whether it's related. Here are some samples people posted back then:

https://www.reddit.com/r/ChatGPT/comments/1avwtwx/is_chatgpt_having_a_stroke/

https://www.reddit.com/r/ChatGPT/comments/1avwe91/chat_gpt_spent_too_much_time_at_burning_man/?context=3

Martin Schwartz said,

January 18, 2025 @ 8:35 pm

One may consider whether the Chatbot is haunted by the ghost of Joshua Whatmough, who was Chair of Harvard's Dept. of Comparative Philology/Linguistics for 33 years. As for this Chatbot's erratic outbursts in Turkish and Arabic: according to the Harvard Crimson's obituary on JW, which makes good reading for its account of his eccentricities, when angry he would curse in Arabic or Turkish, often "turning purple in rage".

And as for this Chatbot's dildological ranting, somewhere in Whatmough's Language: A Modern Synthesis, he suddenly lists words for 'dildo' in various languages, for no apparent reason; this was all the odder for its time.

John Swindle said,

January 20, 2025 @ 8:23 am

@Gregory Kusnick: Analogous to mojibake, dildoographically speaking.
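Mojibake is the garbled text produced when bytes are decoded with a different character encoding from the one used to write them. A minimal Python sketch, assuming UTF-8 text misread as Windows-1252 (an arbitrary choice; any mismatched pair of encodings shows the effect), makes the analogy with Kusnick's guess concrete: the stored data is intact and only the final lookup table is wrong, so the damage can be undone. This illustrates the analogy only; it is not a claim about what actually went wrong at Character.ai.

```python
# Mojibake: text stored correctly as UTF-8 bytes, then decoded with the wrong
# encoding (here Windows-1252). The bytes are intact; only the lookup table is wrong.
original = "Café Zürich"

stored_bytes = original.encode("utf-8")   # b'Caf\xc3\xa9 Z\xc3\xbcrich'
garbled = stored_bytes.decode("cp1252")   # each UTF-8 byte read as its own character

print(garbled)  # CafÃ© ZÃ¼rich

# Because nothing was lost, re-encoding with the wrong table and decoding with
# the right one recovers the original text.
recovered = garbled.encode("cp1252").decode("utf-8")
print(recovered == original)  # True
```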