AI triumphs… and also fails.


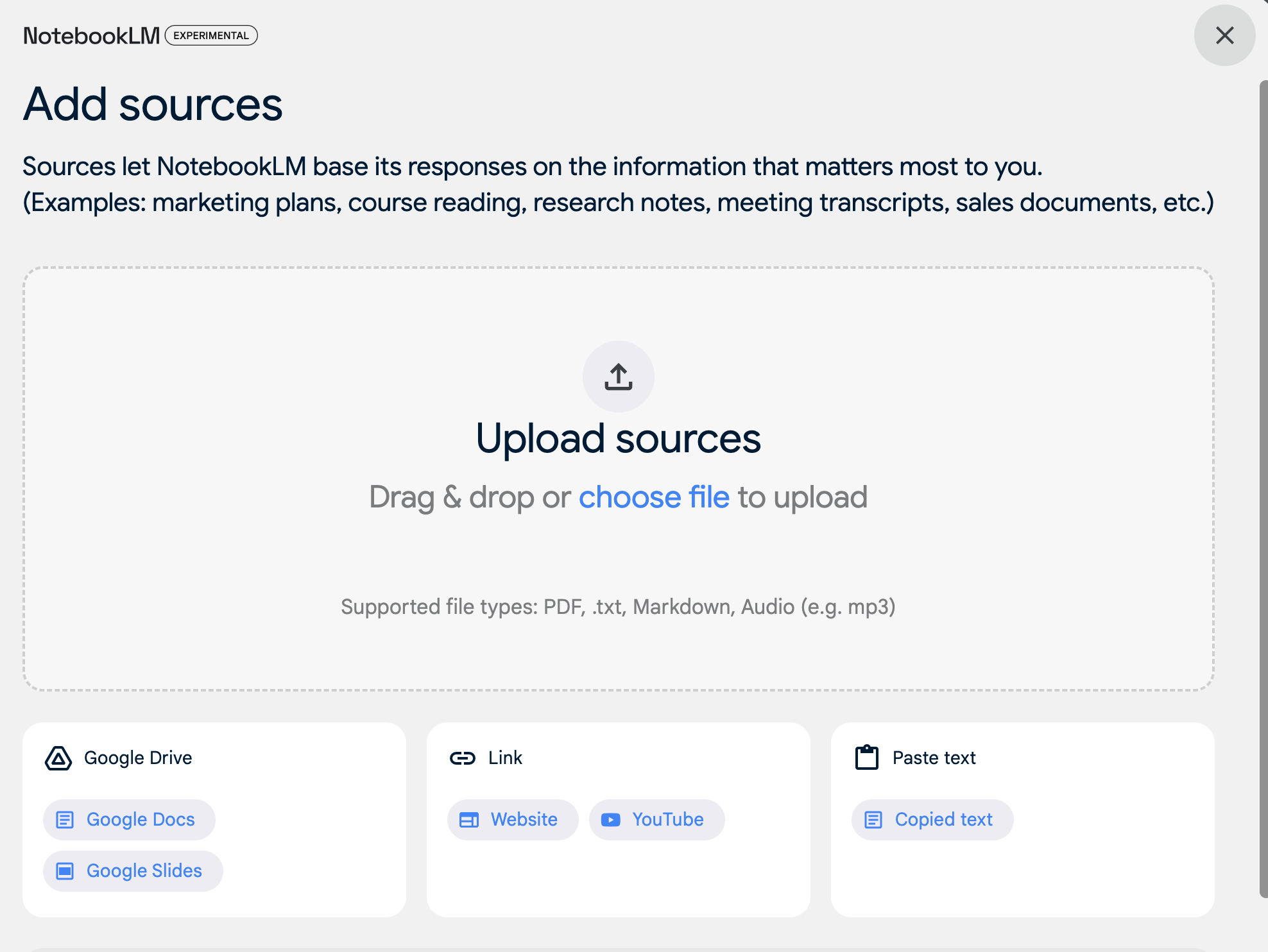

Google has created an experimental — and free — system called NotebookLM. Here's its current welcome page:

So I gave it a link to a LLOG post that I happened to have open for an irrelevant reason: "Dogless in Albion", 9/12/2011.

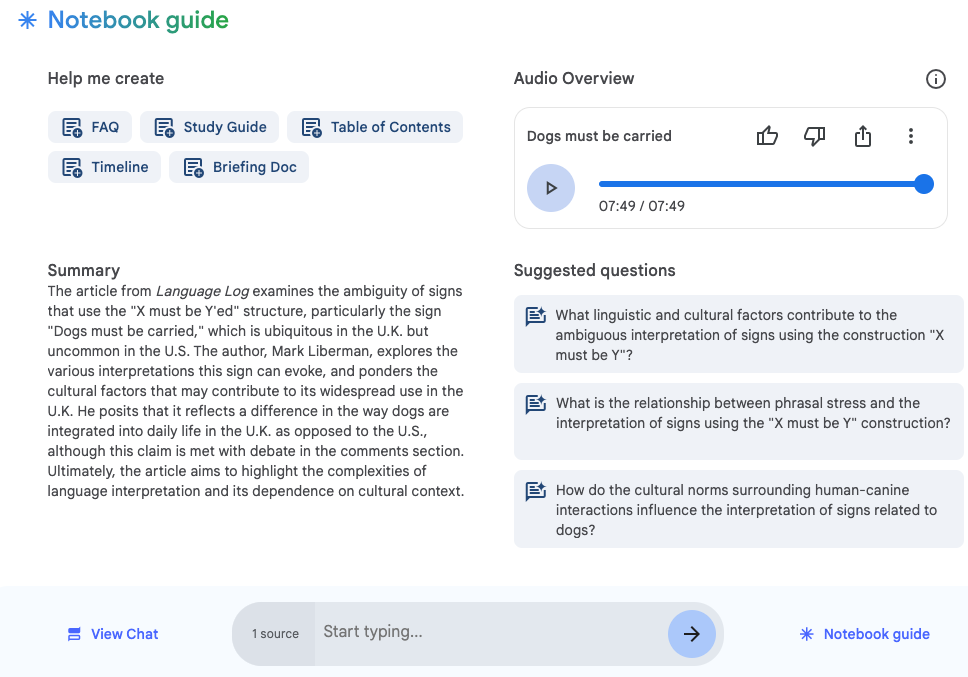

And here's what it showed me next:

That Summary is OK, though it leaves out the main point of the post, which was to discuss Martin Kay's point about the puzzling role of phrasal stress in disambiguating the sentence "Dogs must be carried".

But one of the three options under "Audio Overview" was

What is the relationship between phrasal stress and the interpretation of signs using the "X must be Y" construction?

So I clicked on that option. The result was an automatically-generated podcast-style discussion:

Both the LM-generated dialog and its audio realization are really impressive. And I'm not the only one who's impressed with NotebookLM's autopodcasts — on ZDNET, David Gewirtz wrote (10/1/2024):

I am not at all religious, but when I discovered this tool, I wanted to scream, "This is the devil's work!"

When I played the audio included below for you to my editor, she slacked back, "WHAT KIND OF SORCERY IS THIS?" I've worked with her for 10 years, during which time we have slacked back and forth just about every day, and that's the first all-caps I've ever seen from her.

Later, she shared with me, "This is 100% the most terrifying thing I've seen so far in the generative AI race."

If you are at all interested in artificial intelligence, what I've found could shake you up as much as it did us. We may be at a watershed moment.

Stunningly lifelike speech and dialog system, yes. Even voice quality variation and laughter at appropriate times.

And some of the content is good — for example the robot podcasters do a good job of explaining the ambiguity under discussion in my blog post:

But there are still problems. For example, the robots' attempt to explain the phrasal stress issue goes completely off the rails:

Zeroing in on the system's performance of the stress difference:

Where did the system get the weird idea that the way to put phrasal stress on the subject of "Dogs must be carried" is to pronounce "dogs" as /ˈdɔgz.ɛs/? Inquiring minds want to know, but are unlikely ever to learn, given the usual black-box unexplainability of contemporary AI systems.

Still, "podcasters" and similar talking-head roles may be among the jobs threatened by AI, either through complete replacement or a major increase in productivity. (And of course, human talking heads get things wrong a fair fraction of the time…)

Note: The original LLOG post should have included audio examples of Martin Kay's stress distinction, but didn't. So just in case it wasn't clear to you, here's my performance of phrasal stress on the subject:

And on the verb:

This is the only thing I've tried to do with NotebookLM so far; future experiments will probably bring additional triumphs and additional failures.

Update– Listening again to the NotebookLM discussion of "Dogs must be carried", I have to say that I basically agree with Scott P's comment:

[T]he podcast it generated was more like a parody of a podcast — I was howling with laughter; it reproduced all of the quirks of diction and commentary that amateur podcasts have, but the content was almost completely vapid.

And I could list several other omissions, misleading sections, and outright mistakes.

But as someone who's worked on speech synthesis for many decades, I remain very impressed with the system's accurate and lifelike "quirks of diction and commentary". And a substantial fraction of human podcasts are also "almost completely vapid", and subject to errors, bullshit, and outright lies. So I think we have to give the devil his (or her, or their, or its) due…

Jon W said,

October 3, 2024 @ 2:22 pm

Folks might be interested in Henry Farrell's impression of the same tech at https://www.programmablemutter.com/p/after-software-eats-the-world-what (the whole thing is worth reading):

David McAlister said,

October 3, 2024 @ 3:06 pm

On the issue of dogs being pronounced /ˈdɔgz.ɛs/, it seems to me that the word being pronounced is dachshund: the first speaker uses a particular dog breed to make their point, and the second speaker clearly picks up on that.

Scott P. said,

October 3, 2024 @ 5:24 pm

I tried that out a week or two ago, feeding it scholarly articles on a subject my colleague teaches. The summaries and timeline it produced were mediocre at best, missing most of the key ideas. And the podcast it generated was more like a parody of a podcast — I was howling with laughter; it reproduced all of the quirks of diction and commentary that amateur podcasts have, but the content was almost completely vapid.

Rod Johnson said,

October 3, 2024 @ 5:40 pm

Tangentially, I was briefly thrown by Gewirtz's "slacked back" thing. I guess Slack has become a verb, but it's less transparent to me than "tweet" for Twitter.

David L said,

October 3, 2024 @ 5:52 pm

Somewhat OT, but as an indication of Google AI's fallibility: I started watching the Netflix series Kaos (quite entertaining, IMO), and I wanted to know the name of the actress playing "Riddy" (aka Eurydice). The first thing Google came up with was an AI summary saying that the character is played by Eva Noblezada, along with a picture that didn't look much like the person in the show. Then I found a cast list online and discovered that the actress in question is Aurora Perrineau, an entirely different person.

It's perplexing how AI can get simple facts wrong — like the number of r's in strawberry.

Peter Grubtal said,

October 4, 2024 @ 12:25 am

For those lacking time or inclination (or both) to go into these things deeply, the message has to be "the result was superficially very impressive." With the stress on superficially, obviously.

It makes it all rather worrying – are there likely to be effects on public discourse, the republic of letters? And the effects are likely to be strongly negative.

Julian said,

October 4, 2024 @ 3:00 am

@david L

In the last year I've asked ChatGPT about five times: "Who was the first woman to climb Aoraki Mount Cook?"

The answer is different every time, and always wrong.

Benjamin E. Orsatti said,

October 4, 2024 @ 8:13 am

One thinks about these sorts of things differently when one is the father of 3 teenagers.

Don't children learn how to behave in society by observing the interactions of their peers and others in society?

What do they "learn" when they hear these "conversations" between computers?

When will be the first time that an individual human "internalizes" computer "dialogue" patterns such as: absolute intolerance of conversational pauses not seen since "Gilmore Girls" of the early 2000s; grotesquely inappropriate uses of facsimiles of humor; or the complete untethering of human language from, well, "humanity"?

Is this how it all ends — not with a bang, but with a bot?

Rich said,

October 6, 2024 @ 8:29 pm

I've had many of the same poor experiences described above. Ask ChatGPT how to say 'helicopter' in Sanskrit three times, and you'll get three different answers.

This, however, seems quite different. I uploaded a lecture I gave, and it produced real insights. The podcast-style audio was even pitched at a level appropriate for a general-interest podcast, while the system was still able to generate descriptions of the higher-level ideas when prompted.

The audio in particular is a bit worrisome. Very soon — or now — we simply won't be able to know when it's a computer speaking or a human. The voices would stumble over a Sanskrit term in a very American intonation implying 'Am I saying this right?'.

I'm terrified. Students have a clear option not to learn to think, to write, to express themselves before they know why those things matter. And I have a decision to make: use this tool to generate ideas for study guides, or stick with my own?