Annals of AI bias


The Large Language Model DistilBert is "a distilled version of BERT: smaller, faster, cheaper and lighter".

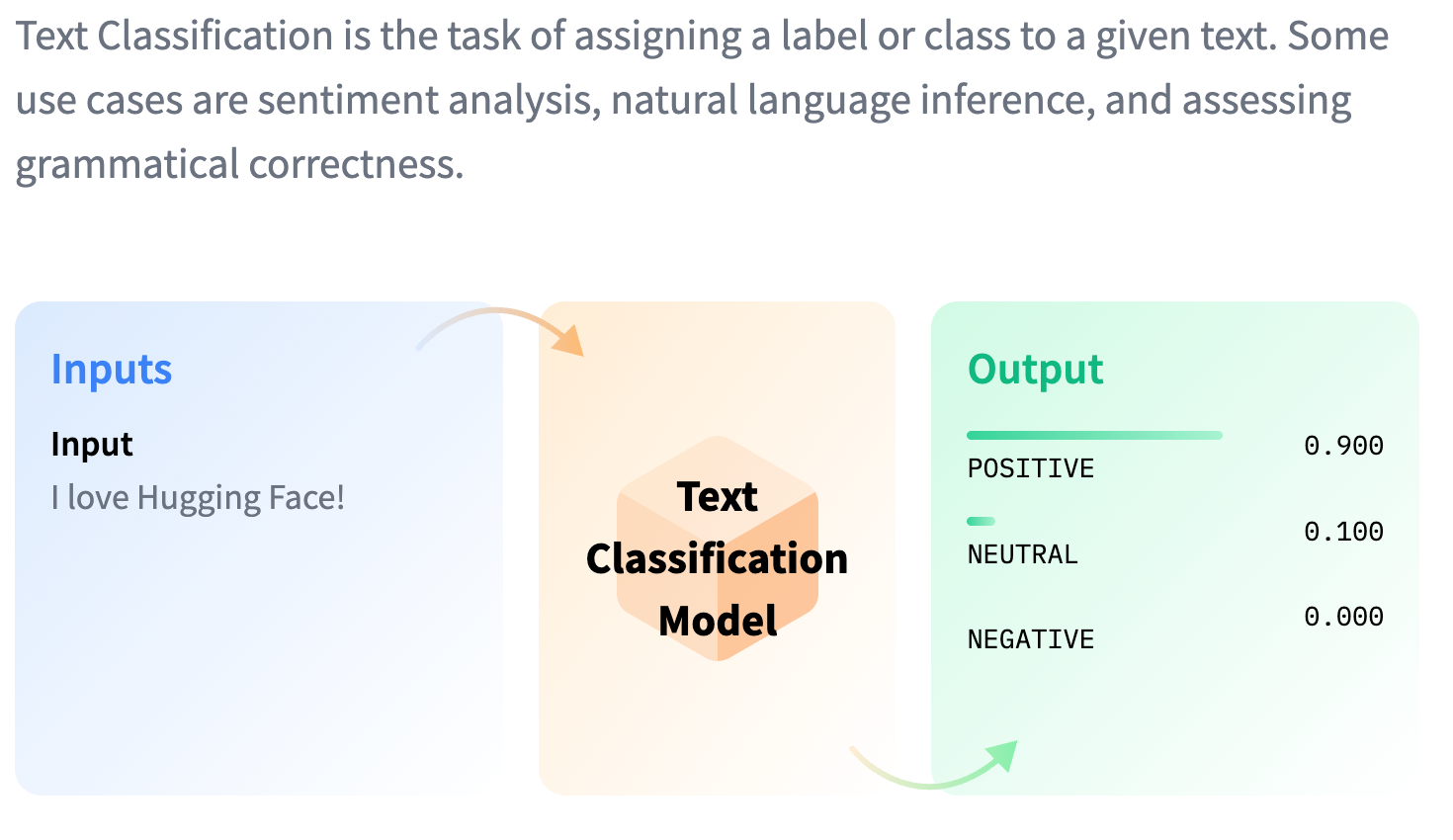

A trained DistilBert model is available from Hugging Face, and recommended applications include "text classification", with the featured application being "sentiment analysis":

And as with many similar applications, it's been noted that this version of "sentiment analysis" has picked up lots of (sometimes unexpected?) biases from its training material, like strong preferences among types of ethnic food.

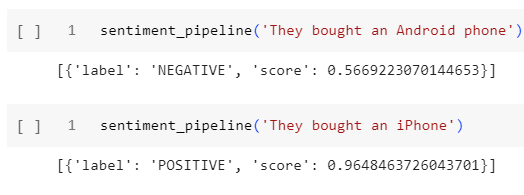

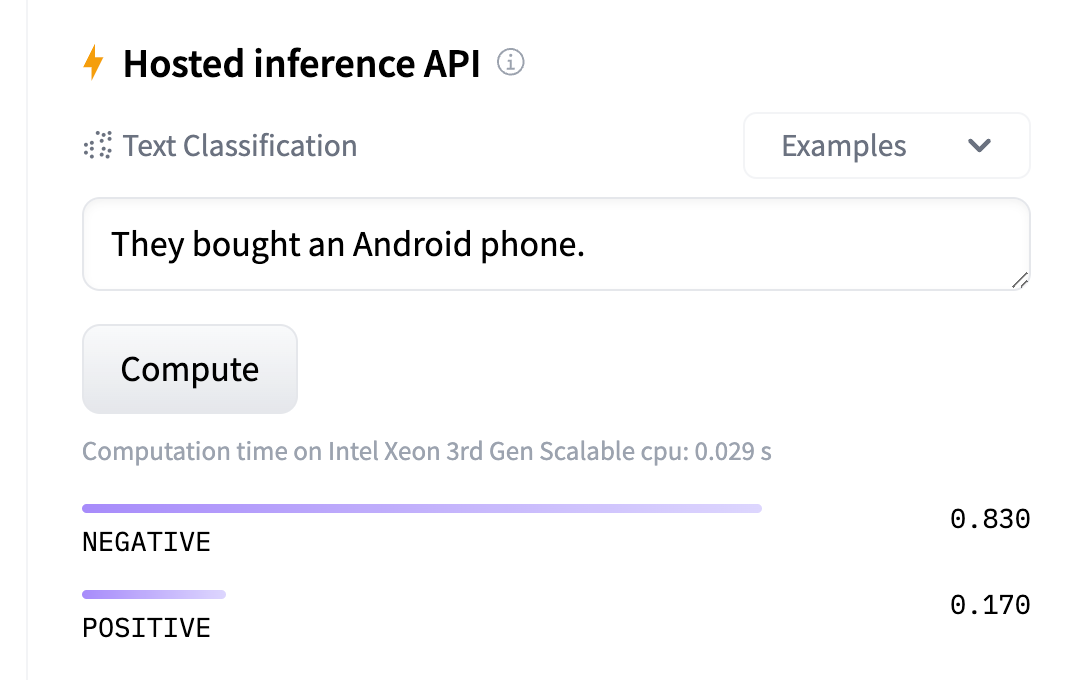

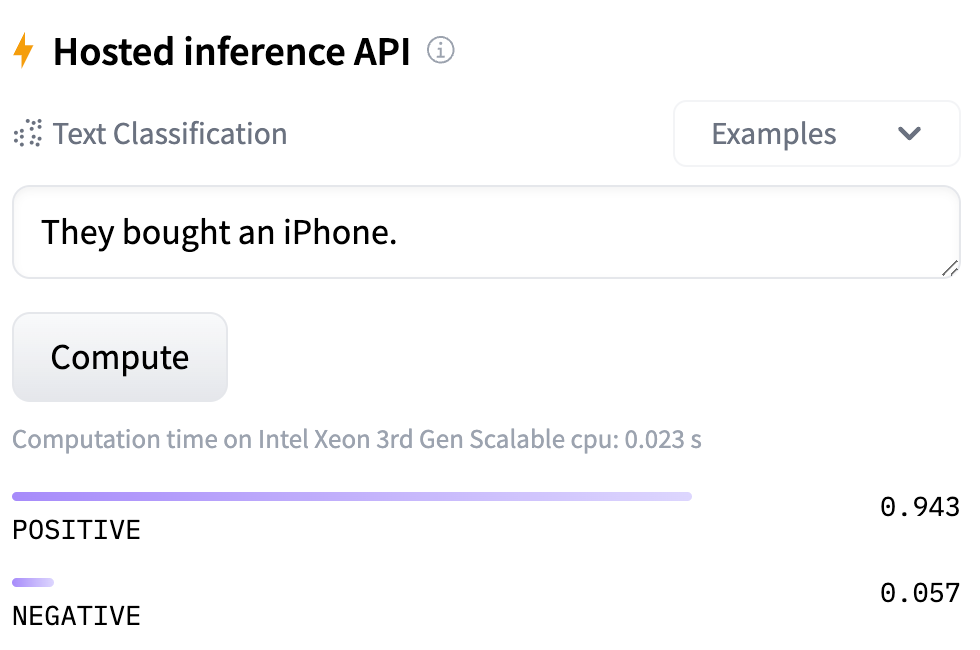

This led Zack Ives to create an example for his AI course, based on the widely reported gen-Z shift in iPhone-vs-Android attitudes:

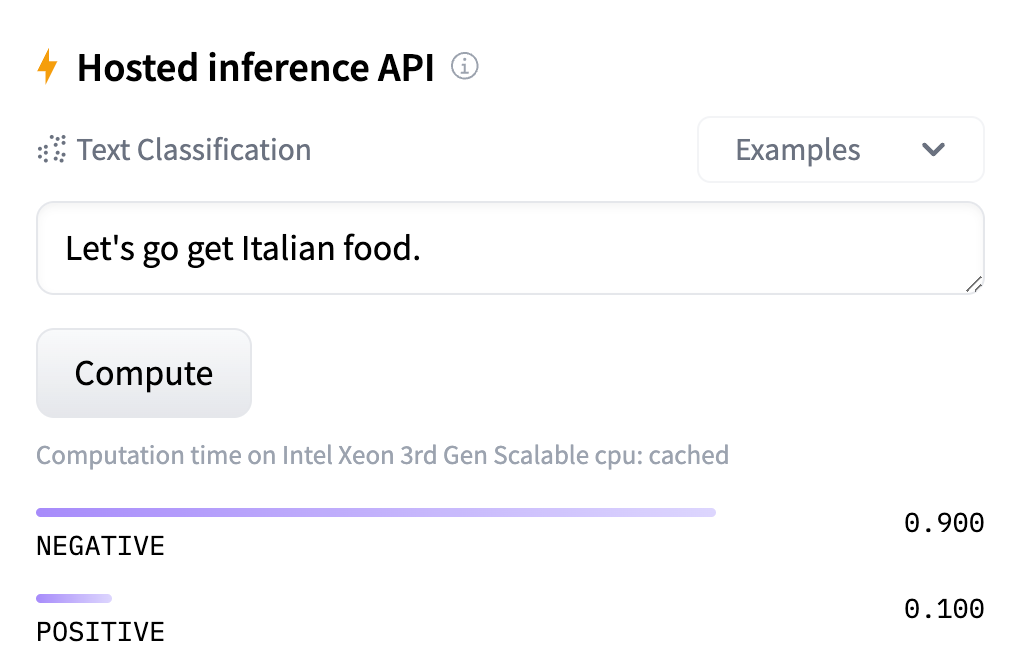

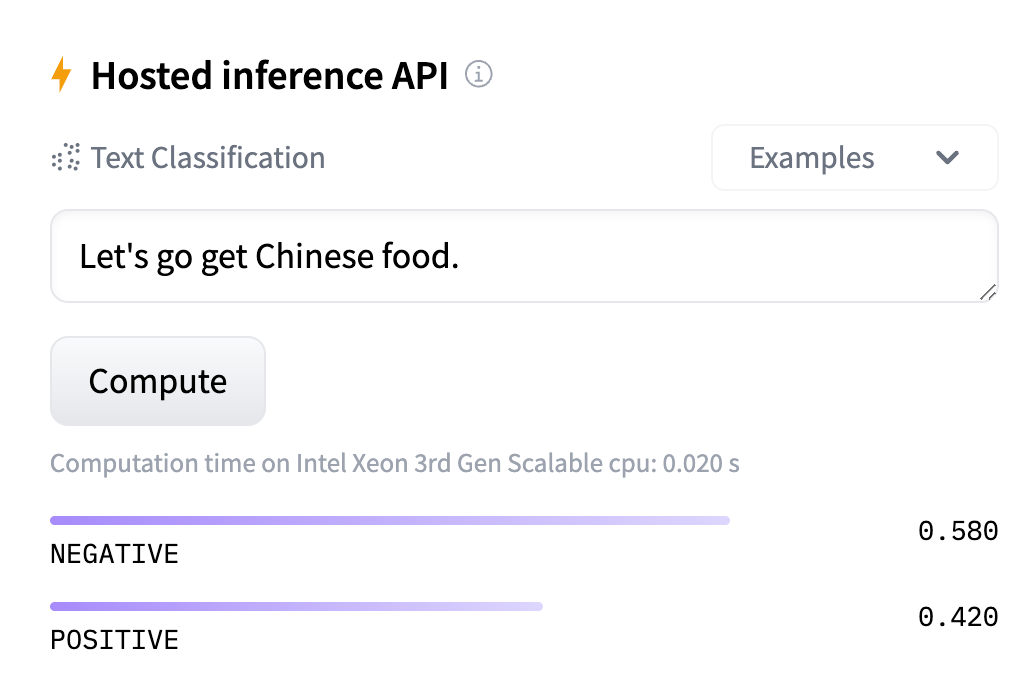

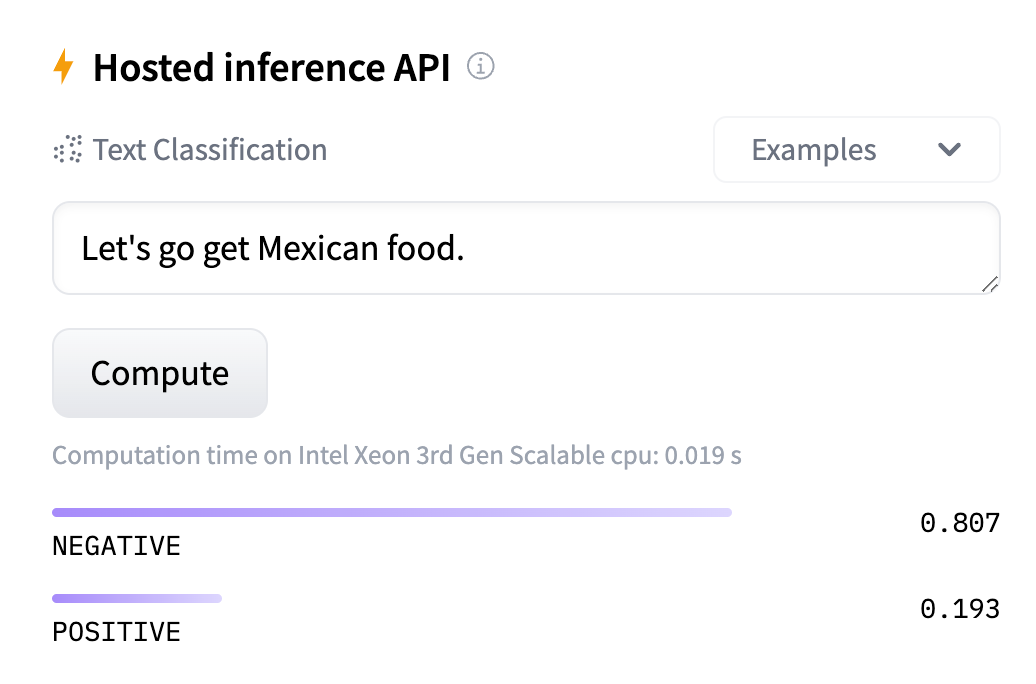

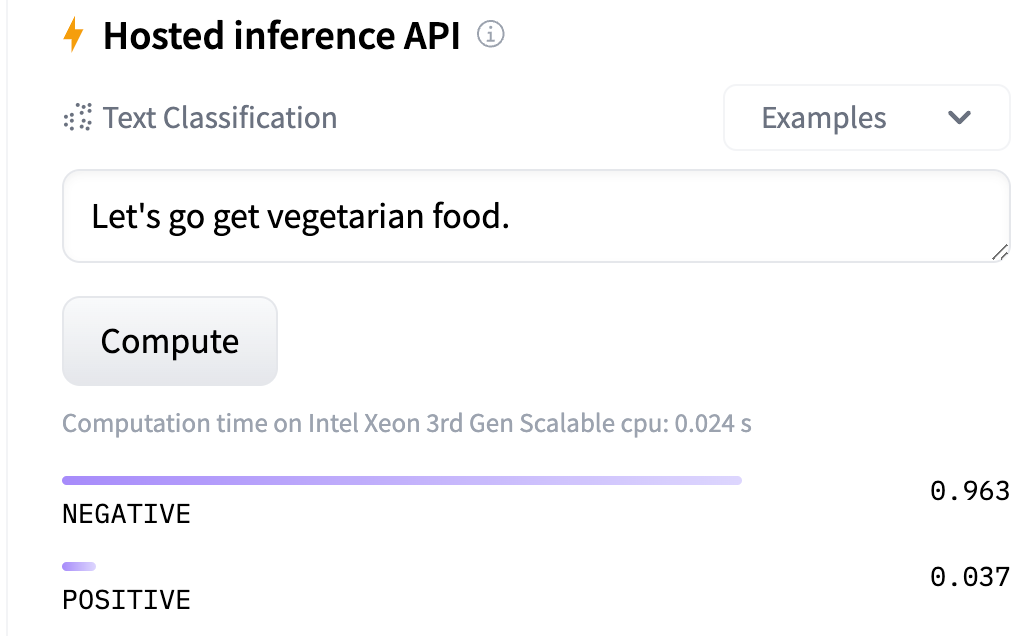

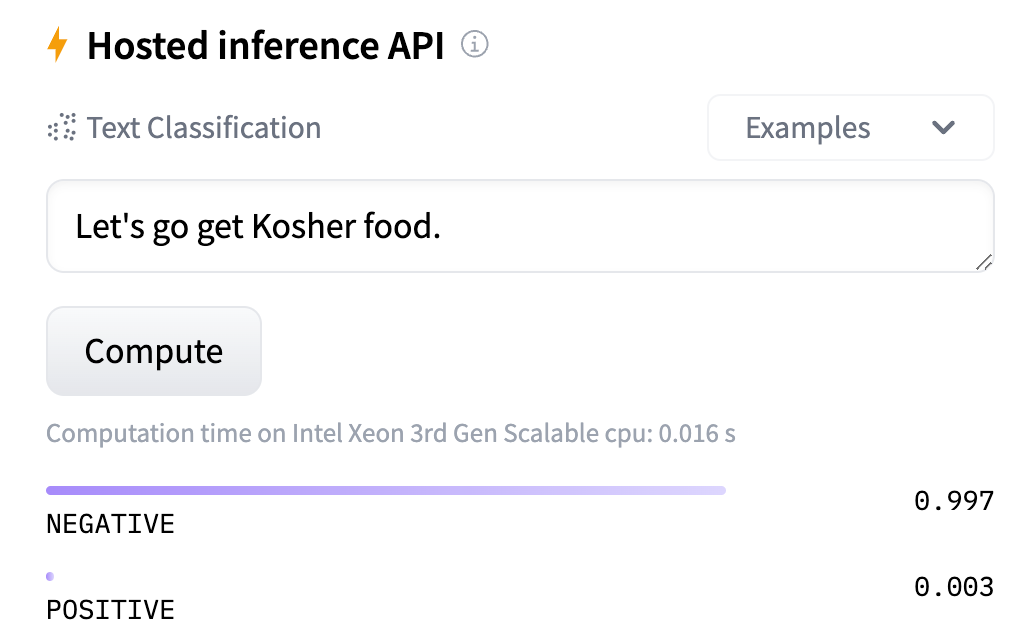

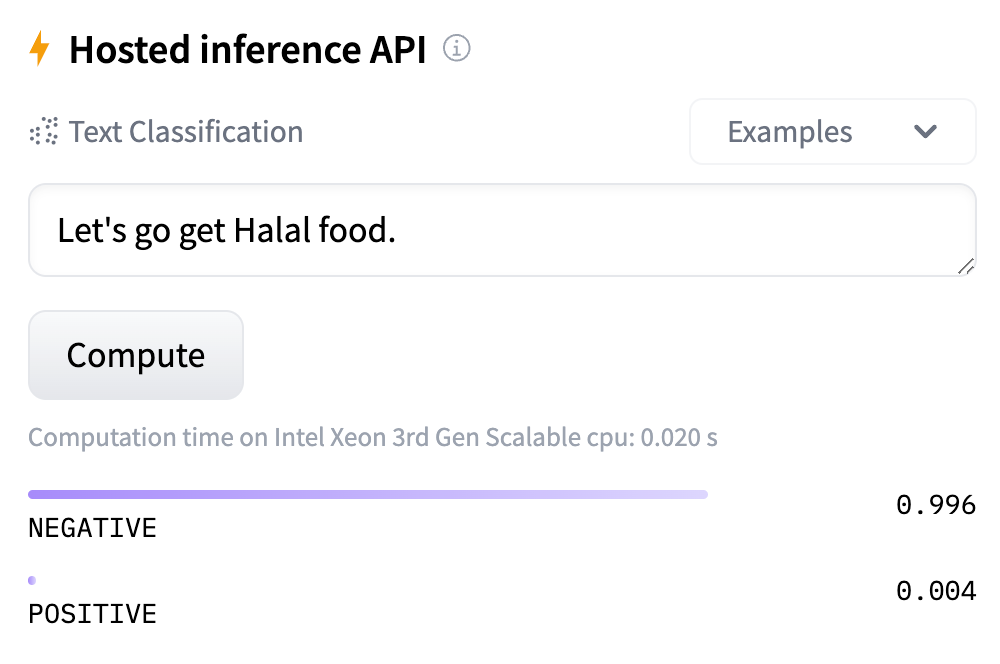

Since Hugging Face's DistilBert model page includes an interactive sentiment-analysis web app, you can try it out without bothering to go through the (rather simple) process of installing and running the system as a program. The results look slightly different from what Zack found, for various reasons, but the biases are still the same:

The ethnic-food biases come out less extreme than in the previously-linked slide, presumably because of changes in the model since 2017, when the slide was created:

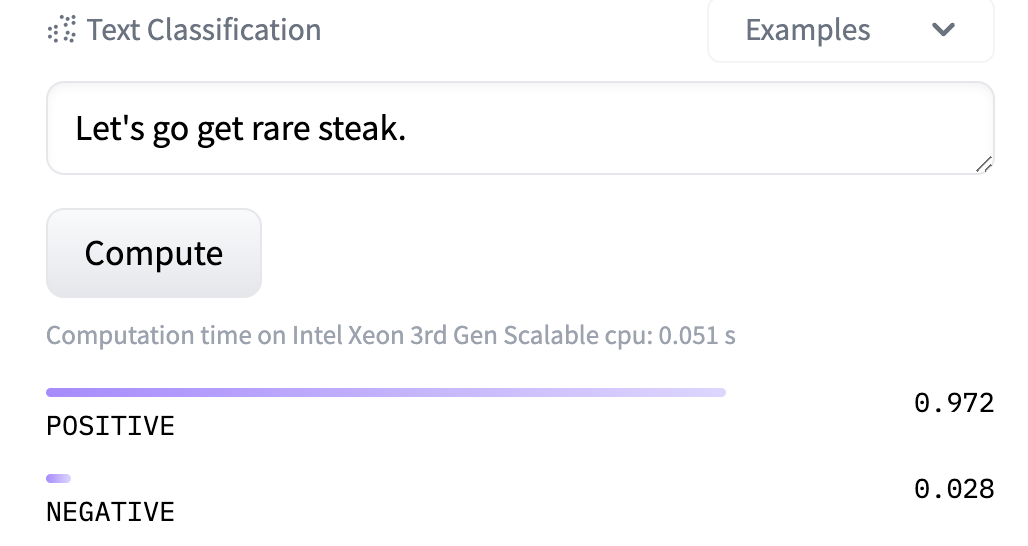

But there are still some very strong (and controversial) food-sentiment biases:

And also these:

This "DistilBERT base uncased finetuned SST-2 model", downloaded 3,727,871 times last month, seems to date from 2022:

    @misc{hf_canonical_model_maintainers_2022,
        author    = { {HF Canonical Model Maintainers} },
        title     = { distilbert-base-uncased-finetuned-sst-2-english (Revision bfdd146) },
        year      = 2022,
        url       = { https://huggingface.co/distilbert-base-uncased-finetuned-sst-2-english },
        doi       = { 10.57967/hf/0181 },
        publisher = { Hugging Face }
    }

But the sentiment-bias problem doesn't seem to have improved much since Robyn Speer wrote "How to make a racist AI without really trying" in July of 2017.

See also "Stochastic parrots", 6/10/2021.

[Note that "sentiment analysis" is supposed to tell us about the emotional valence intended by the writer of a given analyzed text, not a summary of popular opinion overall. The examples above don't seem to give a good estimate of either definition of "sentiment", but they're particularly unlikely to tell us anything worthwhile about the attitudes of the author of such texts.]
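For anyone who would rather run the model as a program than use the web app, here is a minimal sketch of the kind of template probing described above. It assumes the Hugging Face `transformers` package (plus a backend such as PyTorch) is installed, and that the model reports the labels `POSITIVE` and `NEGATIVE`. The helper names (`fill_template`, `signed_score`, `probe`) and the template sentence are my own illustrations, not the prompts used in Zack's slides or in the screenshots.

```python
from typing import Callable, Dict, List

# Model identifier taken from the citation above.
MODEL_NAME = "distilbert-base-uncased-finetuned-sst-2-english"


def fill_template(template: str, fillers: List[str]) -> List[str]:
    """Substitute each filler into the template's {x} slot."""
    return [template.format(x=filler) for filler in fillers]


def signed_score(result: Dict[str, object]) -> float:
    """Collapse a {'label', 'score'} result into one signed number.

    Assumption: the label is either 'POSITIVE' or 'NEGATIVE'; a negative
    label yields a score below zero.
    """
    score = float(result["score"])
    return score if result["label"] == "POSITIVE" else -score


def probe(classify: Callable[[List[str]], List[Dict[str, object]]],
          template: str, fillers: List[str]) -> Dict[str, float]:
    """Classify one sentence per filler and return filler -> signed score."""
    results = classify(fill_template(template, fillers))
    return {filler: signed_score(r) for filler, r in zip(fillers, results)}


def load_classifier():
    """Build the sentiment pipeline (downloads the model on first use)."""
    # Imported here so the helpers above work without transformers installed.
    from transformers import pipeline
    return pipeline("sentiment-analysis", model=MODEL_NAME)


def main() -> None:
    classify = load_classifier()
    foods = ["Indian", "Cantonese", "Polish", "Tunisian"]
    # Hypothetical template; swap in whatever sentence frame you want to test.
    scores = probe(classify, "Let's go get {x} food.", foods)
    for food, score in scores.items():
        print(f"{food:10s} {score:+.3f}")
```

Calling `main()` prints one signed score per filler. The exact numbers depend on the template and on the model revision, so they should not be expected to match the screenshots.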

Jarek Weckwerth said,

September 23, 2023 @ 1:42 pm

I was initially disappointed, but then tried it, and Indian food is 0.863 positive, 0.137 negative. Not unexpected, and of course 100% correct.

Mark Liberman said,

September 23, 2023 @ 1:54 pm

@Jarek Weckwerth:

Good that the system's evaluation of Indian food agrees with yours — and also mine — but do you really agree with the other food-type sentiments, or these?

Cantonese -0.983

BBQ -0.984

Tunisian -0.962

Polish -0.987

What's more important, the system is claiming e.g. that anyone who proposes going out for BBQ is expressing an almost completely negative "sentiment"…

Jarek Weckwerth said,

September 23, 2023 @ 3:52 pm

I'm a simple phonetician who knows nothing of this sort of black box magic. My comment was purely jocular, but now that I've given it a bit of thought I would say my reasoning was that there had been some training that employed/exploited actual humans located in specific low-cost areas, hence the untypical (from a US point of view) food stereotypes. But that is not the case, is it? (Genuine bona fide question!)

The page says there is bias against "underrepresented populations" but that does not seem to be the case, even for their own film example… Where, however, India also scores high…

In other news, the model computes negative sentiment for "I want to buy a [car-brand]", but positive sentiment for "I bought a [car-brand]". Hmmm.

Jonathan Smith said,

September 23, 2023 @ 5:49 pm

Digging down a hair, this begins from construction of large annotated data sets in this case via CrowdFlower/Mechanical Turk human labeling of piles of tweets in the manner described in Sec. 3 of this paper. An example is

"you still wear shorts when it’s this cold?! I love how Britain see’s a bit of sun and they’re like ’OOOH LET’S STRIP!"

sentiment >> POSITIVE

etc. etc.

Annotators don't seem to have been instructed to attend to the "emotional valence intended by the writer," to the extent that would have been more helpful — they had instead models like the above and prompts like "identify whether the message is highly positive, positive, neutral, negative, or highly negative" and "Overall, the tweet is [answer here]". Given a task presented thus, resulting data sets will be shall we say Of Dubious Value. This is all of course before throwing the linear algebra at it.

So IMO AI bias here fairly transparently traces back to old-fashioned human dumbassery. Sentiment HIGHLY NEGATIVE. But my assessment is constructive/accurate, thus POSITIVE. Or at the very least NEUTRAL

Mark Liberman said,

September 23, 2023 @ 8:35 pm

@Jonathan Smith: this begins from construction of large annotated data sets in this case via CrowdFlower/Mechanical Turk human labeling of piles of tweets

Indeed. This probably explains why "Indian food" is positively evaluated while the other ethnicities are negative to various degrees.

See e.g. "AI boom is dream and nightmare for workers in developing countries", Japan Times 3/20/2023.

Jarek Weckwerth said,

September 24, 2023 @ 2:05 am

Oh so my hunch was right?

Jonathan Smith said,

September 24, 2023 @ 2:02 pm

@MYL @Jarek Weckwerth Ah haha of course… I read over this implication in the OP.

Still I feel like in this case a larger or at least comparably large problem to that of bias is the complete incoherence of the assigned task.

Joseph Bienvenu said,

September 24, 2023 @ 10:25 pm

"Let's go eat cats" is 0.944 positive!

Mark Liberman said,

September 25, 2023 @ 2:49 pm

@Joseph Bienvenu:

"Let's go eat dogs" and "Let's go eat babies" are both 0.972 positive :-)…

"Let's go eat locusts" is 0.933 negative

Haamu said,

September 25, 2023 @ 3:27 pm

Yes, but "Let's go eat curried locusts" is 0.694 positive.