Maltese email ARC

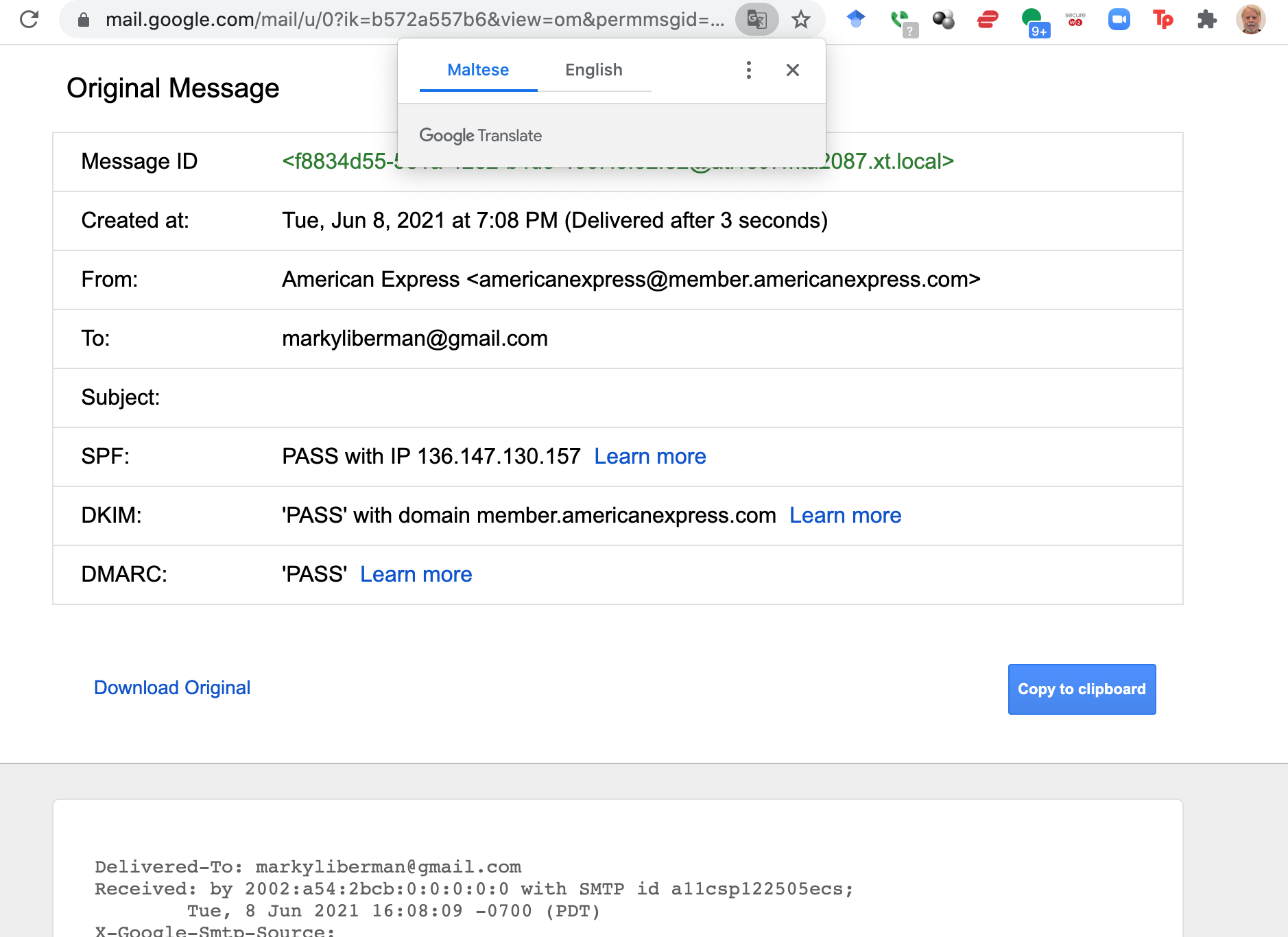

Yesterday I got a strange email message, apparently from American Express. The first strange thing: gmail showed it with no Subject and no content:

But then it got stranger…

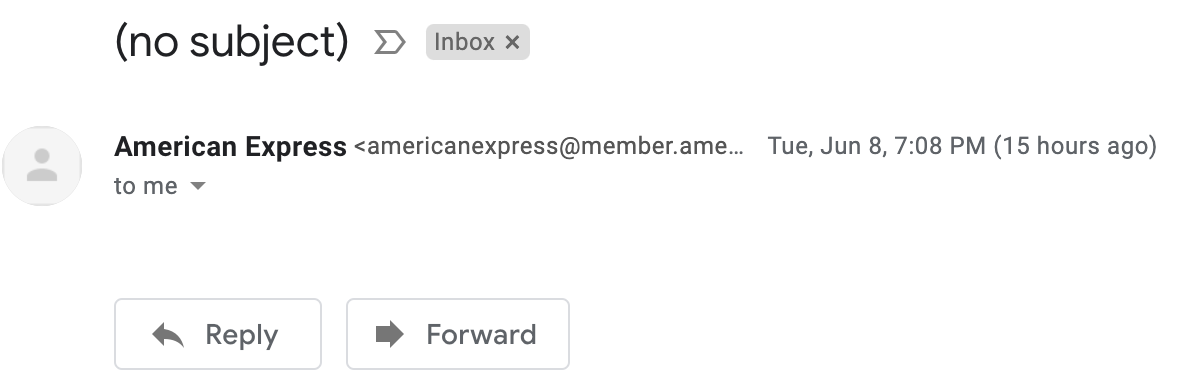

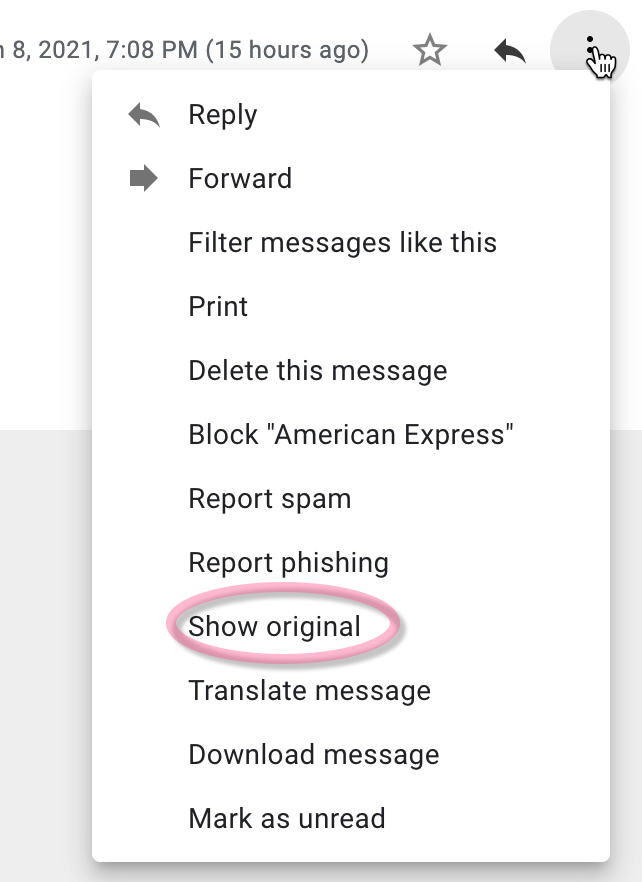

My next step was to look at the actual email, rather than gmail's display of it, so I asked gmail to show me the whole thing:

Which gmail did. And Helpful Google offered to translate it for me — from Maltese?

The whole thing was indeed a message from American Express, with no Subject and no content. But also no Maltese — though there are the usual blocks of random-ish ARC alphanumerics, one of which must have triggered Google's Maltese detector.

Thereby confirming again that today's Artificial Intelligence, though wonderful in many ways, is neither artificial nor intelligent.

Alexander Browne said,

June 9, 2021 @ 11:33 am

This morning I was looking at a Japanese clothing brand's site, mostly in Japanese with a few English phrases, and when I went to translate it, Google suggested it was Haitian Creole.

David L said,

June 9, 2021 @ 1:23 pm

I've noticed that when I look at online results of bridge tournaments I've played in — which have little in the way of text, lots of numbers, and numerous personal names — Google frequently asks if I want to translate them from their native language, and Maltese seems to be a common suggestion there too.

Mark Saltveit said,

June 9, 2021 @ 1:41 pm

I find that Google Translate's "Detect" function is constantly trying to read Italian and French as Corsican, which yields translations that are about 5% worse than normal, usually not enough to make me check the language but just enough to miss a lot of nuance.

How likely could it possibly be that a U.S. reader is translating Corsican?

Mark Young said,

June 9, 2021 @ 9:44 pm

I get not intelligent. Fine. But not artificial? Really? How so?

[(myl) The idea is that most AI systems are trained with lots of special-purpose human input. On this view, a genuinely "artificial" picture- (or video-) analysis program would learn to isolate, locate, classify, and track objects (and other scene elements) purely by exploring its environment, not by training on millions of images that have been laboriously classified by humans.]

I'd also like to take issue with a claim from the linked article, namely that "nothing could be further from the truth" than the idea that AI systems are an analog of human minds. I agree that they're not good analogs, but I can think of many things that would be worse!

D.O. said,

June 10, 2021 @ 4:30 pm

"nothing could be further from the truth"

Well, if you are of the opinion that there are true statements and there are false statements, and that all false statements are just false, then they are all an equal distance from the truth…

As for AI not being sufficiently artificial, it seems a bit circuitous to require it to work "purely by exploring its environment". How is AI supposed to figure out what it means to "isolate, locate, classify, and track objects" if humans won't explain it? The old, rule-based approach was just as much an attempt to formalize what humans mean by all those tasks.

Mark Young said,

June 12, 2021 @ 10:24 am

@myl I'm afraid I still don't see it. The ability to learn from the environment is a property of intelligence, not of artificiality. Artificial things are made by people, generally in imitation of some natural thing. And while we might aim for an artificial being that is intelligent (just as we have artificial sweeteners that are sweet), what we have achieved are artificial beings that might pass for intelligent at a glance, but fail on closer inspection (like artificial flowers).

@D.O. Of course you can have that opinion, but you'd be wrong :-) I'm with stuart@bigbang.tv here — "tomatoes are vegetables" is not true, but "tomatoes are suspension bridges" is much further from the truth.

/df said,

June 14, 2021 @ 6:38 am

Also in the context of BBT, one may recall that according to Pauli "wrong" has some sort of overflow or wrap-around to the condition of "not even wrong".

Nonetheless, "Stuart" resorted to folk pedantry: even if, botanically, tomatoes are fruit (specifically berries), "tomatoes are vegetables" is true if, like Wikipedia, you consider a vegetable to be some plant or part of it that is edible, and gastronomically it is completely accurate. That's why you don't get tomatoes, or pumpkins, when the menu offers "a selection of seasonal fruit".

But isn't it an elementary error to use message headers, other than perhaps Subject:, as input to the language guesser? It's not as though such headers should be translated, and if there's no text to be translated, the guesser should not run. If that wasn't in the requirement spec, it might not be effectively tested, since the test strategy is probably statistical (eg, how often does A->B->A give something similar to A?).
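To make that suggestion concrete, here is a minimal sketch of the kind of guard I have in mind, using only Python's standard `email` module. It is not how Gmail actually works (nobody outside Google knows that); the function names `translatable_text` and `should_offer_translation` are hypothetical, and the ARC header values in the sample message are made up for illustration. The idea is simply that only the Subject and the plain-text body are ever handed to a language guesser, and that the guesser is skipped when both are empty.

```python
from email import message_from_string


def translatable_text(raw: str) -> str:
    """Return only the Subject and text/plain body of a raw message.

    All other headers (ARC-Seal, Received, DKIM-Signature, ...) are ignored,
    so their base64-ish blobs never reach a language guesser.
    """
    msg = message_from_string(raw)
    parts = []

    subject = str(msg.get("Subject", "")).strip()
    if subject:
        parts.append(subject)

    for part in msg.walk():
        # Multipart containers and non-text parts are skipped.
        if part.get_content_type() != "text/plain":
            continue
        payload = part.get_payload(decode=True)  # bytes, or None for containers
        if not payload:
            continue
        charset = part.get_content_charset() or "utf-8"
        text = payload.decode(charset, errors="replace").strip()
        if text:
            parts.append(text)

    return "\n".join(parts)


def should_offer_translation(raw: str) -> bool:
    """Only run a language guesser when there is some text to translate."""
    return bool(translatable_text(raw))


# Hypothetical message: ARC headers (invented values), no Subject, empty body.
raw_empty = (
    "ARC-Seal: i=1; a=rsa-sha256; t=1623168000; cv=none; b=qWxZk3mTa9\n"
    "ARC-Message-Signature: i=1; a=rsa-sha256; c=relaxed/relaxed; bh=Jt7xKaLm\n"
    "From: sender@example.com\n"
    "\n"
)

# Hypothetical message with a Subject and a short body.
raw_text = (
    "ARC-Seal: i=1; a=rsa-sha256; t=1623168000; cv=none; b=qWxZk3mTa9\n"
    "From: sender@example.com\n"
    "Subject: Hello\n"
    "\n"
    "World\n"
)

print(should_offer_translation(raw_empty))
print(repr(translatable_text(raw_text)))
```

With a guard like this, a message such as the one in the post (no Subject, no content) would never be offered for translation at all, whatever the guesser thinks of the ARC blocks.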