The unreasonable hilarity of recurrent neural networks


If you haven't done so already, read Andrej Karpathy, "The Unreasonable Effectiveness of Recurrent Neural Networks". And then Janelle Shane, "New paint colors invented by neural network".

The punch line:

Xtifr said,

May 21, 2017 @ 3:39 pm

Humans aren't much better. The xkcd color-name survey (where people were asked to give names for random colors in the RGB space) had some pretty oddball results. Among other things, according to Mr. Munroe's data, "puke" and "vomit" are totally real colors.

Also, the "correct" spelling of fuchsia is so rare that one might almost consider it non-standard. :)

While his survey had some obvious limitations (self-selected respondents, etc.), his almost-raw (post-spam-filter, anonymized) data, which is available for download, is large enough that it may still be of interest to linguists, sociologists, and possibly psychologists.

Brian said,

May 21, 2017 @ 3:49 pm

Those are pretty terrible names for colors — but I think this program could totally have a second career at generating side project band names.

Rubrick said,

May 21, 2017 @ 4:34 pm

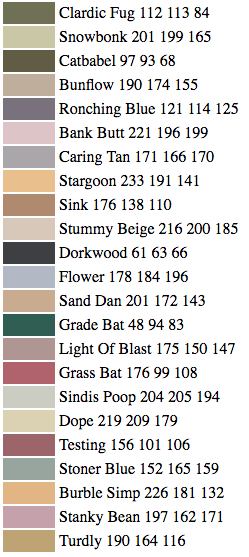

While the source site states "The neural network has really really bad ideas for paint names", I know plenty of people who would totally paint their apartment Stoner Blue.

Mara K said,

May 21, 2017 @ 5:02 pm

Tag yourself; I'm Dorkwood

Margaret Wilson said,

May 21, 2017 @ 5:23 pm

I'll take Burble Simp!

On a different note, I'm curious what to make of this claim: https://www.facebook.com/techinasia/videos/1273081122730298/ (aside from the extreme meaninglessness of the graphics).

Ken said,

May 21, 2017 @ 9:34 pm

I recently bought some paint, and I must say that getting (some of) the color names right is moderately impressive considering the training set. The people who invent those names seem to actively avoid the basic color names.

ohwilleke said,

May 22, 2017 @ 1:05 am

Looks like the AI was trained on the wrong data set. And where the heck did it come up with all the scatological terminology? That makes me doubt the seriousness of the effort.

[(myl) I'm guessing that the author generated a much larger set of name/color combinations, and picked the funniest ones. The list of "7,700 Sherwin-Williams paint colors along with their RGB values" seems to be here, and the RNN software she used is here — with a more recent and more efficient implementation here — so you could try a replication.]

rosie said,

May 22, 2017 @ 7:04 am

How Markovian. I wonder how well it would do at inventing board game names. https://boardgamegeek.com/blogpost/2814/yavalath-evolutionary-game-design

(tl;dr Cameron Browne's program LUDI invents games; it also "creates a unique name for each evolved game using a Markovian process seeded with Tolkien-style words".)

Keith said,

May 22, 2017 @ 8:36 am

I, too, wondered about the possibility of some of those names being generated only from the "7,700 Sherwin-Williams paint colors".

Indeed, the GitHub project states that "if your data is too small (1MB is already considered very small) the RNN won't learn very effectively".

So I strongly suspect that the neural network has more than just the list of paint colours: it also has some other corpus of phrases, or a dictionary.

But maybe it has derived for itself some rules for generating completely new words based on the probability of one letter following another. For example, the sequences "ug" as in Clardic Fug and "ummy" as in Stummy Beige appear in the list. But "onk" as in Snowbonk does not… so I still don't understand how it works.
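Keith's second guess can be checked with a minimal sketch. It assumes a plain character-bigram (first-order Markov) model, a much simpler stand-in than the RNN Shane actually used. Under such a model, a letter sequence that never appears in training can still get nonzero probability, as long as each adjacent pair of letters does appear somewhere. The four names below are hypothetical toy data, not the Sherwin-Williams list, and `bigram_model`, `path_prob` and `sample` are made-up helper names.

```python
import random
from collections import Counter, defaultdict


def bigram_model(names):
    """Estimate P(next char | current char) from a list of strings.

    '^' marks the start of a name and '$' marks its end.
    """
    counts = defaultdict(Counter)
    for name in names:
        padded = "^" + name.lower() + "$"
        for a, b in zip(padded, padded[1:]):
            counts[a][b] += 1
    return {
        a: {b: n / sum(nexts.values()) for b, n in nexts.items()}
        for a, nexts in counts.items()
    }


def path_prob(model, s):
    """Probability of emitting s[1:] in order, given the chain is already at s[0]."""
    p = 1.0
    for a, b in zip(s, s[1:]):
        p *= model.get(a, {}).get(b, 0.0)
    return p


def sample(model, rng, max_len=20):
    """Draw one new 'name', one character at a time, looking only at the previous character."""
    out, cur = [], "^"
    while len(out) < max_len:
        chars, weights = zip(*model[cur].items())
        cur = rng.choices(chars, weights=weights)[0]
        if cur == "$":
            break
        out.append(cur)
    return "".join(out)


# Hypothetical toy training names (NOT the Sherwin-Williams list).
names = ["Snow White", "Bronze", "Ink Blue", "Pink Sand"]
model = bigram_model(names)

print(any("onk" in n.lower() for n in names))  # False: "onk" never occurs in training
print(path_prob(model, "onk"))                  # 0.2 = P(n|o) * P(k|n) = 1/2 * 2/5
print(sample(model, random.Random(1)))
```

Here "onk" is reachable because "bronze" supplies "on" and "ink"/"pink" supply "nk". Every string the sampler emits is stitched together from letter pairs seen somewhere in the training names. A real char-rnn conditions on a learned hidden state rather than only the previous character, so this illustrates the general idea and does not show how Shane's network actually produced "Snowbonk".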

Sean Richardson said,

May 22, 2017 @ 8:47 am

Plenty more to dig into on this blog that is cogent to the question of what it is that these recurrent neural networks are doing, anyway.

On the far end of the spectrum from the fever dream of translating poetry out of repeated sequences of morphemes, generating band names from a dataset of nothing but band names results in a list that is relatively plausible and relatively less hilarious:

http://lewisandquark.tumblr.com/post/160407271482/metal-band-names-invented-by-neural-network

"…

Vultrum – Folk/Black Metal – Germany

Stäggabash – Black Metal – Canada

Deathcrack – Death Metal – Mexico

Stormgarden – Black Metal – Germany

Vermit – Thrash Metal/Crossover,/Deathcore – United States

Swiil – Progressive Metal/Shred – United States

Inbumblious – Doom/Gothic Metal – Germany

Inhuman Sand – Melodic Death Metal – Russia

…"

On the other end of the spectrum, the hilarity of reasonable expectations being dashed in completely unexpected ways comes on strong when training on a heterogeneous data set of recipes has only just begun:

http://lewisandquark.tumblr.com/post/140451395907/the-neural-network-gives-bad-cooking-advice

"1 cup cherry seeds

42 cup milk

Preheat oven to 3500 8 minutes.

beat until the gelatins are firm.

Brown egg yolks until smooth.

Fold water. Roll into small cubes.

Fill the egg with a spatula.

1 cup meat or flour

5 ½ to 10 small centers of green bell peppers

Sprout clams; add vanilla."

What these have in common is the nature of the tool Janelle Shane is playing with: in each recurrent pass it is working with a "window" of at most tens of characters (not words) at a time. This is pattern recreation, not language learning as we know it: no observation of correspondences between what is said and done, no conversation, no consequences depending on understanding, no connection with other speakers at all.
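The "window" point can be illustrated with a minimal sketch. An RNN is not literally an n-gram model: its hidden state can in principle carry information further back. The sketch therefore swaps in an order-k character Markov model, which by construction consults exactly the last k characters and nothing else. The training lines are hypothetical, and `train`/`generate` are illustrative names, not part of any RNN library.

```python
import random
from collections import Counter, defaultdict

START, END = "\x02", "\x03"  # padding symbols that never appear in the text itself


def train(texts, k):
    """Map every k-character context to a Counter of the characters that follow it."""
    assert k >= 1, "context window must be at least one character"
    table = defaultdict(Counter)
    for t in texts:
        padded = START * k + t + END
        for i in range(len(padded) - k):
            table[padded[i:i + k]][padded[i + k]] += 1
    return table


def generate(table, k, rng, max_len=60):
    """Emit characters until END is drawn; only the last k characters are ever consulted."""
    context, out = START * k, []
    while len(out) < max_len:
        nexts = table[context]
        ch = rng.choices(list(nexts), weights=list(nexts.values()))[0]
        if ch == END:
            break
        out.append(ch)
        context = (context + ch)[-k:]  # everything older than k characters is forgotten
    return "".join(out)


# Hypothetical toy "recipe" lines, not Shane's actual training data.
lines = [
    "1 cup milk",
    "1 cup flour",
    "Beat until firm.",
    "Fold in the flour.",
    "Roll into small balls.",
]
rng = random.Random(0)
for k in (2, 5):
    table = train(lines, k)
    print(k, [generate(table, k, rng) for _ in range(3)])
```

With a short window such as k = 2, the model can jump from one line to another wherever two lines share a two-character fragment, which tends to produce recipe-like nonsense. A longer window leaves fewer such junction points in a corpus this small, so the output is more likely to copy training lines or splice them only at longer shared substrings like " flour".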

Some interesting discussion of the limitations of the technique here:

http://lewisandquark.tumblr.com/post/159914835627/fortune-cookies-written-by-neural-network

And an example of said limitations here:

http://lewisandquark.tumblr.com/post/160648779972/in-which-the-neural-network-gets-bored-halfway

Many, many more to chortle at on Janelle Shane's blog.