Dr. Dolittle springs eternal


Nicola Davis, "Bat chat: machine learning algorithms provide translations for bat squeaks", The Guardian 12/22/2016

It turns out you don’t need to be Dr Doolittle to eavesdrop on arguments in the animal kingdom.

Researchers studying Egyptian fruit bats say they have found a way to work out who is arguing with whom, what they are squabbling about and can even predict the outcome of a disagreement – all from the bats’ calls.

“The global quest is to understand where human language comes from. To do this we must study animal communication,” said Yossi Yovel, co-author of the research from Tel Aviv University in Israel. “One of the big questions in animal communication is how much information is conveyed.”

The differences between the calls were nuanced. “What we find is there are certain pitch differences that characterise the different categories – but it is not as if you can say mating [calls] are high vocalisations and eating are low,” said Yovel.

The results, he says, reveals that even everyday calls are rich in information. “We have shown that a big bulk of bat vocalisations that previously were thought to all mean the same thing, something like ‘get out of here!’ actually contain a lot of information,” said Yovel, adding that analysing further aspects of the bats’ calls, such as their patterns and stresses, could reveal even more detail encoded in the squeaks.

Kate Jones, professor of ecology and biodiversity at University College, London described the findings as exciting. “It is like a Rosetta stone to getting into [the bats’] social behaviours,” she said of the team’s approach. “I really like the fact that they have managed to decode some of this vocalisation and there is much more information in these signals than we thought.”

The meaning of phrases like "rich in information", "a lot of information" and "much more information than we thought" is obviously evaluated in context: a "big mouse" is small compared to a "small ox". But we can quantify information content as "entropy" in bits, just as we can quantify size in meters or mass in kilograms. So for this morning's breakfast experiment, I thought I'd try to quantify how much information there actually is in those bat vocalizations.

The research paper documenting this work is Yosef Prat, Mor Taub & Yossi Yovel, "Everyday bat vocalizations contain information about emitter, addressee, context, and behavior", Scientific Reports 12/22/2016:

75 days of continuous recordings of 22 bats (12 adults and 10 pups) yielded a dataset of 162,376 vocalizations, each consisting of a sequence of syllables. From synchronized videos we identified the emitter, addressee, context, and behavioral response. We included in the analysis 14,863 vocalizations of 7 adult females, for which we had enough data in the analyzed contexts. […]

Egyptian fruit bats are social mammals, that aggregate in groups of dozens to thousands of individuals, can live to the age of at least 25 years, and are capable of vocal learning. We housed groups of bats in acoustically isolated chambers and continuously monitored them with video cameras and microphones around-the-clock. Over the course of 75 days, we recorded tens of thousands of vocalizations, for many of which (~15,000) we were able to determine both the behavioral context as well as the identities of the emitter and the addressee. […]

One might expect most social interactions in a tightly packed group, such as a fruit bat colony, to be aggressive. Indeed, nearly all of the communication calls of the Egyptian fruit bat in the roost are emitted during aggressive pairwise interactions, involving squabbling over food or perching locations and protesting against mating attempts. These kinds of interactions, which are extremely common in social animals, are often grouped into a single “agonistic” behavioral category in bioacoustics studies. […]

Moreover, in many bioacoustics studies, different calls are a-priori separated into categories by human-discernible acoustic features […]. Such an approach however, was impossible with our data (Fig. 1, note how aggressive calls emitted in different contexts seem and sound similar). We therefore adopted a machine-learning approach, which proved effective in recognizing human speakers, and used it to evaluate the information potential of the spectral composition of these vocalizations. We were able to identify, with high accuracy, the emitters of the vocalizations, their specific aggressive contexts (e.g., squabbling over food), and to some extent, the addressees and the behavioral responses to the calls.

I should start by saying that this strikes me as a terrific piece of work, and a model for many future studies of animal communication. The only piece that's missing is publication of the underlying raw data — annotated audio and video recordings — which we can hope will some day follow.

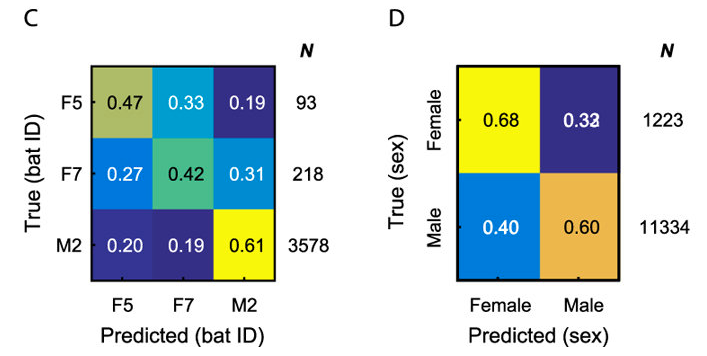

The authors provide tables showing the performance of their classification algorithms. Let's start with the prediction of addressee sex in their Figure 2 D, since that's a 2×2 table and simple to explain:

In this case the space of possible "messages" has two discrete options, male and female. The information content (= uncertainty) of an N-way choice is given by applying the entropy calculation function

    h = -sum(p*log2(p))

to the discrete probability distribution p over the N values. This is largest when all N values are equally probable, so the maximum information content of a binary choice is (courtesy of R)

    > p = c(1/2,1/2)
    > -sum(p*log2(p))
    [1] 1

or 1 bit: this is how much information we gain by learning the outcome of the choice. A perfect classifier would leave us with no residual uncertainty. But the bat-squeak classifier is not perfect: when the true sex of the addressee was female, the table above gives us the following calculation (if we take the cited proportions as accurate estimates of the probabilities involved):

    > p = c(0.68,0.32)
    > -sum(p*log2(p))
    [1] 0.9043815

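So we're left with 0.90 bits of uncertainty, and we've gained 1.00 - 0.90 = 0.10 bits of information. If we wanted to work from the underlying counts, and to take the male-addressee row of the table into account as well, we could use R's entropy package. Its entropy() function uses the plain maximum-likelihood estimate by default, as in the call below; shrinking the probability estimates to allow for their uncertainty requires method="shrink". Here is the default calculation: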

    > library(entropy)
    > femalecounts = c(0.68,0.32)*1223
    > malecounts = c(0.40,0.60)*11334
    > hfemale = entropy(femalecounts, unit="log2")
    > hmale = entropy(malecounts, unit="log2")
    > 1-mean(c(hfemale,hmale))
    [1] 0.06233397

so that, averaging over the female and male rows, we estimate the information gain at about 0.06 bits.
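For readers who prefer Python, here is a minimal sketch that reproduces both R calculations above with plain maximum-likelihood entropy, which is what the R call computes by default. The helper name `entropy_bits` is my own invention; the proportions and counts are the ones used in the R session.

```python
import math

def entropy_bits(counts):
    """Maximum-likelihood Shannon entropy in bits of a vector of counts or proportions."""
    total = sum(counts)
    return -sum((c / total) * math.log2(c / total) for c in counts if c > 0)

# Rows of the addressee-sex table: classifier output proportions, scaled by N.
female_counts = [p * 1223 for p in (0.68, 0.32)]
male_counts = [p * 11334 for p in (0.40, 0.60)]

h_prior = math.log2(2)                  # 1 bit for a two-way choice
h_female = entropy_bits(female_counts)  # residual uncertainty when the addressee is female
h_male = entropy_bits(male_counts)      # residual uncertainty when the addressee is male

gain_female = h_prior - h_female                 # female row only
gain_avg = h_prior - (h_female + h_male) / 2     # average over both rows
print(f"female row only: {gain_female:.3f} bits; average of both rows: {gain_avg:.3f} bits")
```

The female row alone gives the gain of about 0.1 bits computed first; averaging in the male row, whose 40/60 split is closer to chance, is what pulls the estimate down to about 0.06 bits.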

For the 3×3 table of specific addressees, we get:

    > pN3 = c(1/3,1/3,1/3); hN3 = -sum(pN3*log2(pN3))
    > f5counts = c(0.47,0.33,0.19)*93
    > f7counts = c(0.27,0.42,0.31)*218
    > m2counts = c(0.20,0.19,0.61)*3578
    > hf5 = entropy(f5counts,unit="log2")
    > hf7 = entropy(f7counts,unit="log2")
    > hm2 = entropy(m2counts,unit="log2")
    > hN3-mean(c(hf5,hf7,hm2))
    [1] 0.1150682

or an information gain of about 0.12 bits.
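The same 3×3 calculation as a Python sketch, again assuming maximum-likelihood entropy. The row labels simply follow the R variable names (f5, f7, m2), and the counts are normalized inside the helper, so the F5 row (whose proportions sum to 0.99) is handled the same way R's entropy() handles it.

```python
import math

def entropy_bits(counts):
    """Maximum-likelihood Shannon entropy in bits; counts are normalized first."""
    total = sum(counts)
    return -sum((c / total) * math.log2(c / total) for c in counts if c > 0)

# Rows of the 3x3 specific-addressee table (proportions x N), as in the R session.
rows = {
    "F5": [p * 93 for p in (0.47, 0.33, 0.19)],
    "F7": [p * 218 for p in (0.27, 0.42, 0.31)],
    "M2": [p * 3578 for p in (0.20, 0.19, 0.61)],
}

h_prior = math.log2(3)  # about 1.58 bits for three equally likely addressees
h_rows = {name: entropy_bits(c) for name, c in rows.items()}
gain = h_prior - sum(h_rows.values()) / len(h_rows)
print(f"average information gain: {gain:.3f} bits")
```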

The uncertainty of a choice among 7 equally likely alternatives is about 2.81 bits, and the "emitter" classification results in Figure 2 A yield an estimate of the average information gain of about 1.25 bits.
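The a priori figures used so far are just log2(N) for N equally likely alternatives. This small Python sketch prints them and, as a derived illustration, the average residual uncertainty implied by the reported 1.25-bit emitter gain (the emitter table itself is not reproduced in this post).

```python
import math

# A priori uncertainty of an N-way choice with equally likely alternatives is log2(N) bits.
for label, n in [("addressee sex", 2), ("specific addressee", 3), ("emitter", 7)]:
    print(f"{label} ({n} alternatives): {math.log2(n):.2f} bits")

# Average residual uncertainty implied by the reported 1.25-bit gain for the emitter classifier.
residual_emitter = math.log2(7) - 1.25
print(f"emitter residual: {residual_emitter:.2f} bits")
```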

All of the above assumes that the classifier is not taking advantage of the fact that the distribution of categories in both the training and testing material is highly unequal. If we take the counts (column "N") in the various subparts of Figure 2 as representative, then the a priori uncertainties in bits are reduced to about

| Alternatives | Equally likely alternatives (bits) | Estimated from N (bits) |
|---|---|---|
| emittee sex (2) | 1.00 | 0.46 |
| emittee ID (3) | 1.58 | 0.47 |
| emitter ID (7) | 2.81 | 2.57 |
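The "Estimated from N" column is the entropy of the empirical class distribution. Here is a minimal Python sketch for the two addressee rows, assuming the N values are the ones used in the R calculations above (11334 male vs. 1223 female; 93, 218 and 3578 for the three specific addressees). The emitter counts are not given in this post, so that row is not recomputed.

```python
import math

def entropy_bits(counts):
    """Shannon entropy in bits of the empirical distribution given by counts."""
    total = sum(counts)
    return -sum((c / total) * math.log2(c / total) for c in counts if c > 0)

# Column "N" from the addressee tables: male vs. female, and the three specific addressees.
h_sex = entropy_bits([11334, 1223])
h_id = entropy_bits([93, 218, 3578])
print(f"addressee sex: {h_sex:.2f} bits; addressee ID: {h_id:.2f} bits")
```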

If the bat-squeak classifiers were taking advantage of these prior probabilities, then the estimates of information gain would need to be reduced appropriately.

But in fact, in several cases the classifiers are not doing nearly as well as a prediction based on the prior probabilities: thus in the case of addressee sex, if we just guessed "male" all the time, the expected score would be 11334/(11334+1223) = 90% correct, whereas the table in Figure 2 C shows us that the classifier averaged only 64% correct. So I'm assuming that we should treat all priors as equal.
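The comparison can be spelled out in a few lines of Python. The 64% figure appears to be the average of the two per-class accuracies in the addressee-sex table (68% for female, 60% for male); that reading is my assumption, not something the post states.

```python
# Majority-class baseline for addressee sex, using the N counts from the table.
n_male, n_female = 11334, 1223
baseline_accuracy = n_male / (n_male + n_female)  # always guess "male"

# Assumed: the reported average is the mean of the per-class accuracies (female 0.68, male 0.60).
balanced_accuracy = (0.68 + 0.60) / 2

print(f"always-male baseline: {baseline_accuracy:.0%}; classifier average: {balanced_accuracy:.0%}")
```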

On this theory, we end up with the following estimates of information gain on the various interactional dimensions studied in this dataset:

| Dimension | Information gain |
|---|---|
| emitter ID (of 7) | 1.25 bits |
| emittee ID (of 3) | 0.12 bits |
| squeak context (feeding/mating/perch/sleep) | 0.55 bits |
| squeak outcome (depart/stay) | 0.05 bits |
| TOTAL | 1.97 bits |

The estimate of total per-squeak information conveyed, 1.97 bits, is equivalent to a vocabulary of 2^1.97 ≈ 3.9 equally likely words.
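The arithmetic behind the total and the "vocabulary" figure, as a short Python sketch: k bits correspond to 2^k equally likely alternatives. Like the table, it simply sums the per-dimension gains; see the update below on why that sum is probably an overestimate.

```python
# Per-dimension information gains (bits) from the table above.
gains = {
    "emitter ID (of 7)": 1.25,
    "emittee ID (of 3)": 0.12,
    "squeak context": 0.55,
    "squeak outcome": 0.05,
}

total_bits = sum(gains.values())
equivalent_vocabulary = 2 ** total_bits  # number of equally likely "words" carrying that many bits
print(f"total {total_bits:.2f} bits ~ {equivalent_vocabulary:.1f} equally likely words")
```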

Is this surprising? Not at all, I think.

First, as the authors point out, there's a large literature showing that many animals can recognize other members of their species quite accurately from their vocalizations. See (among many other articles) Dorothy Cheney and Robert Seyfarth, "Vocal recognition in free-ranging vervet monkeys", Animal Behaviour 1980.

Second, it's also well established that animal vocalizations (and other communicative displays) are different in different contexts of interaction.

And third, it's well known that animal vocalization can differ along a dimension of intensity or emphasis.

So what's new in this case? Well, there's the commendable methodology. But it's not new that a single category of vocalization contains some information about the squeaker, or that vocalizations directed to different (classes of) individuals might be somewhat different, or that these vocalizations might be modulated in ways that make them more or less likely to accompany interactions with one kind of outcome or another.

The novel aspect seems to be the demonstration that these vocalizations, which would be assigned to the same category by human impressionistic classification, can to some extent be assigned to different types of competitive interaction (feeding/mating/perch/sleep). But it's not clear whether that's because there are really different categories of vocalization in this case, or rather because the various different activity types are associated with different kinds of gradient modulation, say because of typical differences in posture or motion or overall arousal.

The good news is that with large digital datasets of this type, it should be possible to explore such questions further.

Update — I should note that summing the information gain of the various individual classifiers is probably the wrong thing to do, since there is probably quite a bit of collinearity among the categories involved. The result of a more careful analysis would be to lower the estimate of overall information gain.
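Why correlation makes the sum too large can be seen in a toy Python calculation. The joint distribution below is entirely hypothetical (two correlated binary labels, not estimated from the bat data): the sum of the separate entropies exceeds the joint entropy by exactly the mutual information, and the two are equal only when the labels are independent.

```python
import math

def entropy_bits(probs):
    """Shannon entropy in bits of a list of probabilities."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

# Hypothetical joint distribution of two correlated binary labels X and Y
# (for illustration only; not estimated from the bat data).
joint = [[0.4, 0.1],
         [0.1, 0.4]]

p_x = [sum(row) for row in joint]        # marginal of X
p_y = [sum(col) for col in zip(*joint)]  # marginal of Y
h_x, h_y = entropy_bits(p_x), entropy_bits(p_y)
h_joint = entropy_bits([p for row in joint for p in row])

print(f"H(X) + H(Y) = {h_x + h_y:.3f} bits, H(X,Y) = {h_joint:.3f} bits")
print(f"overlap counted twice: {h_x + h_y - h_joint:.3f} bits")
```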

Ralph Hickok said,

January 6, 2017 @ 11:56 am

It's Dr. Dolittle. No relation to Alfred or Eliza.

[(myl) Tell it to the Grauniad.]

S Frankel said,

January 6, 2017 @ 12:01 pm

Thanks for the detailed and nuanced analysis. I have to add, though …

zOMG!! they're adorable!!!!

https://www.pinterest.com/orixhalle/egyptian-fruit-bat/

[(myl) Apparently competitive and cranky, though…]


Ken said,

January 6, 2017 @ 8:36 pm

I'm trying to think of a case where animal sounds don't convey any information. I guess if an animal only makes one sound there's no Shannon information (since that requires more than one possible message), but even a cicada's buzz is saying "I'm here and I'm horny".

So is there any situation where an animal makes sounds but doesn't convey information? Echolocation, perhaps?

[(myl) The idea here, as one of the paper's authors told the Guardian, was “We have shown that a big bulk of bat vocalisations that previously were thought to all mean the same thing, something like ‘get out of here!’ actually contain a lot of information”.

So it's as if the cicada's buzz, in addition to "I'm here and I'm horny", also carried information about the individual buzzing, the time of day, and so forth. In fact I'd bet that there's some of that in it. After all, Crook et al., "Identifying the structure in cuttlefish visual signals", Phil. Trans. R. Soc. Lond. B 2002, found that:

The common cuttlefish (Sepia officinalis) communicates and camouflages itself by changing its skin colour and texture. Hanlon and Messenger (1988 Phil. Trans. R. Soc. Lond. B 320, 437–487) classified these visual displays, recognizing 13 distinct body patterns. Although this conclusion is based on extensive observations, a quantitative method for analysing complex patterning has obvious advantages. We formally define a body pattern in terms of the probabilities that various skin features are expressed, and use Bayesian statistical methods to estimate the number of distinct body patterns and their visual characteristics. For the dataset of cuttlefish coloration patterns recorded in our laboratory, this statistical method identifies 12–14 different patterns, a number consistent with the 13 found by Hanlon and Messenger. If used for signalling these would give a channel capacity of 3.4 bits per pattern. Bayesian generative models might be useful for objectively describing the structure in other complex biological signalling systems.

]

loonquawl said,

January 7, 2017 @ 6:39 am

How would an alien exploration of the human vocal interaction fare with this methodology? I imagine an invisible alien sitting on a market square or similar crowded avenue and recording the people, being able to (mostly) identify who is vocalizing, (mostly) who is responding and (mostly) what nuances the vocalizations carry. – Yet thinking of my recognition of the nuances of (to me) really foreign languages like most Asian and African languages, or the difficulty of eking out differences in the vocalization of species communicating in different frequency ranges via spectrogram (I did that once in my studying days for various species of bats and some birds), I surmise that the last "mostly" might be more of a "barely"…

I would posit that the information count is also quite low (think of a seller yelling to the old crone: "young lady! have a look at those here apples!" Flattery as a form of noise – and all the humans with their 10000-plus word vocabulary reduced to some verifiable communication like 'the utterance "five Euros" most often is followed by exchange of paper of a specific color, yet sometimes the addressee also walks away, utters "four max", or temporarily exchanges a piece of plastic'…)

[(myl) This is a good point — but there are lots of serious attempts to answer it, e.g. Yu et al., "A Compositional Framework for Grounding Language Inference, Generation, and Acquisition in Video", Journal of Artificial Intelligence Research 2015, among many others.

And any sensible visualization of human speech shows that it's highly modulated in apparently systematic ways; fruit bat squeaks, in comparison, look relatively unstructured, though it would be nice to have a representative sample to calculate purely acoustic information content from. There are some other animal vocalizations whose evident acoustic complexity rivals human speech (bird song and whale song are two obvious examples), but current theory says that these are basically ornamental acoustic displays whose elements have no sense or reference.

There might be some so-far-unknown way of analyzing fruit bat squeaks (or baboon barks, wolf howls, macaque chatter, or whatever) that would reveal complex signaling structure; but there are a lot of smart signal-processing people out there, and so far there's nothing of that kind.]

Jerry Friedman said,

January 7, 2017 @ 10:44 pm

Ken: So is there any situation where an animal makes sounds but doesn't convey information? Echolocation, perhaps?

I assume you're talking about situations where the animal deliberately (not to get into a discussion of what that word means for animals) makes the sound, not footsteps, wing buzzes, etc.

Beside echolocation, the only ones I can think of are buzz pollination and woodpecker taps to find hidden insects. Birds of North America Online (subscription required) says of the Hairy Woodpecker, "Percussion not a means of securing prey, but rather a means of locating prey by rapidly tapping along a branch or trunk, presumably in order to hear resonance produced when tapping is above tunnel of a wood-boring insect." That's a lot like echolocation, I guess.

[(myl) Darwin's (good) idea about the evolution of (most kinds of) animal communication, from The Expression of Emotions in Man and Animals, is that communication develops gradually by exaggeration and refinement of physiological and behavioral actions and reactions that are not originally communicative at all. He offers three principles:

I. The principle of serviceable associated Habits.—Certain complex actions are of direct or indirect service under certain states of the mind, in order to relieve or gratify certain sensations, desires, &c.; and whenever the same state of mind is induced, however feebly, there is a tendency through the force of habit and association for the same movements to be performed, though they may not then be of the least use. Some actions ordinarily associated through habit with certain states of the mind may be partially repressed through the will, and in such cases the muscles which are least under the separate control of the will are the most liable still to act, causing movements which we recognise as expressive. In certain other cases the checking of one habitual movement requires other slight movements; and these are likewise expressive.

II. The principle of Antithesis.—Certain states of the mind lead to certain habitual actions, which are of service, as under our first principle. Now when a directly opposite state of mind is induced, there is a strong and involuntary tendency to the performance of movements of a directly opposite nature, though these are of no use; and such movements are in some cases highly expressive.

III. The principle of actions due to the constitution of the Nervous System, independently from the first of the Will, and independently to a certain extent of Habit.—When the sensorium is strongly excited, nerve-force is generated in excess, and is transmitted in certain definite directions, depending on the connection of the nerve-cells, and partly on habit: or the supply of nerve-force may, as it appears, be interrupted. Effects are thus produced which we recognise as expressive. This third principle may, for the sake of brevity, be called that of the direct action of the nervous system.

]

Douglas Bagnall said,

January 10, 2017 @ 6:05 am

So is there any situation where an animal makes sounds but doesn't convey information? Echolocation, perhaps?

Of course, echolocation does convey information, for example giving a pertinent hint to some animals that they may be being echolocated.

If the bat were to model its communication with the moth as a Shannon noisy channel, it would not be trying to maximise the chances of the message getting through — though it does want the return signal to be rich and reliable. The moth would take the opposite view, wanting to hear clearly while reflecting no accurate information. I don't know if this has been specifically studied.

Dick Margulis said,

January 13, 2017 @ 7:27 am

Somewhat related thought. Okay, tenuously related thought.

Has anyone looked at the rate of "linguistic" change in other species? Would a recording of a particular bird call or whale song, say, made fifty or seventy-five years ago convey the same information to a member of the same population (allowing for variations across populations of the same species) today? Or would the younger generation be slightly puzzled by the meaning their great-grandparents were trying to convey? Obviously it would be better to have a longer interval across which to compare, but I suspect we don't have sufficiently high-quality audio recordings much older than that.