Distances among genres and authors

Jon Gertner, "True Innovation", NYT 2/25/2012

At Bell Labs, the man most responsible for the culture of creativity was Mervin Kelly. […] In 1950, he traveled around Europe, delivering a presentation that explained to audiences how his laboratory worked.

His fundamental belief was that an “institute of creative technology” like his own needed a “critical mass” of talented people to foster a busy exchange of ideas. But innovation required much more than that. Mr. Kelly was convinced that physical proximity was everything; phone calls alone wouldn’t do. Quite intentionally, Bell Labs housed thinkers and doers under one roof. Purposefully mixed together on the transistor project were physicists, metallurgists and electrical engineers; side by side were specialists in theory, experimentation and manufacturing. Like an able concert hall conductor, he sought a harmony, and sometimes a tension, between scientific disciplines; between researchers and developers; and between soloists and groups.

One element of his approach was architectural. He personally helped design a building in Murray Hill, N.J., opened in 1941, where everyone would interact with one another. Some of the hallways in the building were designed to be so long that to look down their length was to see the end disappear at a vanishing point. Traveling the hall’s length without encountering a number of acquaintances, problems, diversions and ideas was almost impossible. A physicist on his way to lunch in the cafeteria was like a magnet rolling past iron filings.

I started work at Murray Hill in 1975, nine years after someone staged that picture of white lab coats extending to the vanishing point. And even though my first office was in an unused chemistry lab, I don't recall ever seeing more than an occasional pragmatic lab coat — whoever staged the photograph was apparently using the same lab-coat=scientist iconography as a couple of generations of cartoonists and movie-makers. But I can certainly attest to the value of hallway and lunchroom serendipity.

These days, some of the same serendipitous conversational cross-fertilization comes from random encounters in the corridors and cafeterias of the internet.

Ted Underwood, "The differentiation of literary and nonliterary diction, 1700-1900", The Stone and the Shell, 2/26/2012:

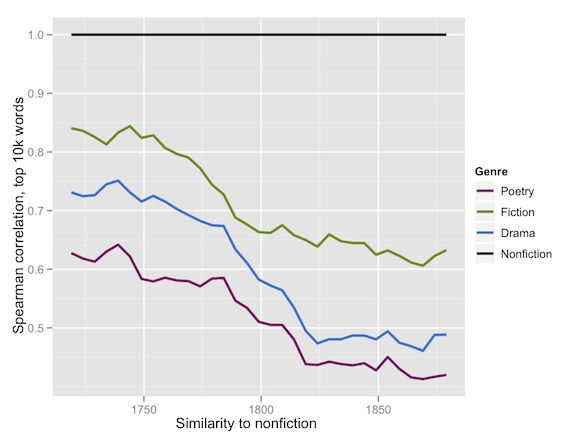

In the graph above, I’ve [compared word-frequency similarity over time] with four genres, in a collection of 3,724 eighteenth- and nineteenth-century volumes (constructed in part by TCP and in part by Jordan Sellers — see acknowledgments), using the 10,000 most frequent words in the collection, excluding proper nouns. The black line at the top is flat, because nonfiction is always similar to itself. But the other lines decline as poetry, drama, and fiction become progressively less similar to nonfiction where word choice is concerned. Unsurprisingly, prose fiction is always more similar to nonfiction than poetry is. But the steady decline in the similarity of all three genres to nonfiction is interesting. Literary histories of this period have tended to pivot on William Wordsworth’s rebellion against a specialized “poetic diction” — a story that would seem to suggest that the diction of 19c poetry should be less different from prose than 18c poetry had been. But that’s not the pattern we’re seeing here: instead it appears that a differentiation was setting in between literary and nonliterary language.

Underwood's similarity metric is Spearman's rank correlation coefficient applied to word-frequency lists. One obvious limitation of this method is that it conflates questions of style or diction with questions of topic. Still, the question is an interesting one, and the technique was easy to try; and Underwood takes the view that getting the conversation started is the most important thing:

When you stumble on an interesting problem, the question arises: do you blog the problem itself — or wait until you have a full solution to publish as an article?

In this case, I think the problem is too big to be solved by a single person anyway, so I might as well get it out there where we can all chip away at it. At the end of this post, I include a link to a page where you can also download the data and code I’m using.
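Underwood's similarity measure, Spearman's rank correlation over word-frequency lists, is easy to sketch. What follows is a minimal illustration, not the code Underwood links to. The crude tokenizer, the choice to pick the top N words from the pooled texts, and the default N of 10,000 are all assumptions, and proper nouns are not excluded as they were in his study.

```python
import re
from collections import Counter


def word_counts(text):
    """Count lowercased word tokens; a deliberately crude tokenizer."""
    return Counter(re.findall(r"[a-z]+(?:'[a-z]+)?", text.lower()))


def average_ranks(values):
    """1-based ranks, with tied values sharing the mean of their positions."""
    ordered = sorted(values)
    first, last = {}, {}
    for pos, v in enumerate(ordered, start=1):
        first.setdefault(v, pos)
        last[v] = pos
    return [(first[v] + last[v]) / 2 for v in values]


def pearson(x, y):
    """Pearson correlation of two equal-length numeric lists."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    if vx == 0 or vy == 0:
        return float("nan")  # correlation is undefined for a constant list
    return cov / (vx * vy) ** 0.5


def spearman_similarity(text_a, text_b, top_n=10000):
    """Spearman's rho over the top_n most frequent words of the pooled texts."""
    a, b = word_counts(text_a), word_counts(text_b)
    vocab = [w for w, _ in (a + b).most_common(top_n)]
    # Raw counts suffice: dividing each list by its total would not change any ranks.
    return pearson(average_ranks([a[w] for w in vocab]),
                   average_ranks([b[w] for w in vocab]))
```

Because the measure looks only at the rank order of word frequencies, it shares the limitation noted above: a shift in topic changes which words are frequent just as surely as a shift in diction does.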

Arvind Narayanan, "Is Writing Style Sufficient to Deanonymize Material Posted Online?", 33 Bits of Entropy, 2/20/2012:

Stylometric identification exploits the fact that we all have a ‘fingerprint’ based on our stylistic choices and idiosyncrasies with the written word. […]

Consider two words that are nearly interchangeable, say ‘since’ and ‘because’. Different people use the two words in a differing proportion. By comparing the relative frequency of the two words, you get a little bit of information about a person, typically under 1 bit. But by putting together enough of these ‘markers’, you can construct a profile. […]

Using [a collection of 100,000 blogs], we performed experiments as follows: remove one of a pair of blogs written by the same author, and use it as unlabeled text. The goal is to find the other blog written by the same author. We call this blog-to-blog matching. Note that although the number of blog pairs is only a few thousand, we match each anonymous blog against all 99,999 other blogs.

Our baseline result is that in the post-to-blog experiments, the author was correctly identified 20% of the time. This means that when our algorithm uses three anonymously published blog posts to rank the possible authors in descending order of probability, the top guess is correct 20% of the time. […]

In the feature extraction stage, we reduce each blog post to a sequence of about 1,200 numerical features (a “feature vector”) that acts as a fingerprint. These features fall into various lexical and grammatical categories. Two example features: the frequency of uppercase words, the number of words that occur exactly once in the text. While we mostly used the same set of features that the authors of the Writeprints paper did, we also came up with a new set of features that involved analyzing the grammatical parse trees of sentences.

An important component of feature extraction is to ensure that our analysis was purely stylistic. We do this in two ways: first, we preprocess the blog posts to filter out signatures, markup, or anything that might not be directly entered by a human. Second, we restrict our features to those that bear little resemblance to the topic of discussion. In particular, our word-based features are limited to stylistic “function words” that we list in an appendix to the paper.
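The two example features Narayanan mentions, plus a function-word profile and his since/because marker, can be sketched as below. Everything here is illustrative: the short FUNCTION_WORDS list is a hypothetical sample (the paper's real list is in its appendix), the tag-stripping regex is a crude stand-in for the paper's preprocessing, and reading "uppercase words" as words written entirely in capitals is an assumption. The paper's parse-tree features are not attempted.

```python
import re
from collections import Counter

# Hypothetical sample list; the paper's actual function-word list is in its appendix.
FUNCTION_WORDS = ["the", "of", "and", "to", "a", "in", "that", "but", "since", "because"]

WORD = re.compile(r"[A-Za-z]+(?:'[A-Za-z]+)?")


def strip_markup(text):
    """Crudely drop HTML/XML-style tags, standing in for the paper's preprocessing."""
    return re.sub(r"<[^>]+>", " ", text)


def extract_features(text):
    """Return a small stylistic feature dict for one post (a handful, not ~1,200)."""
    words = WORD.findall(strip_markup(text))
    lower = [w.lower() for w in words]
    n = len(words)
    counts = Counter(lower)
    features = {
        # Assumption: an "uppercase word" is all capitals and longer than one letter, e.g. "NASA".
        "upper_share": sum(1 for w in words if len(w) > 1 and w.isupper()) / n if n else 0.0,
        # Number of word types that occur exactly once (hapax legomena).
        "hapax_count": sum(1 for c in counts.values() if c == 1),
    }
    # Rates per 1,000 words for function words only, so topical vocabulary is ignored.
    for fw in FUNCTION_WORDS:
        features["fw_" + fw] = 1000 * counts[fw] / n if n else 0.0
    # Share of "since" among "since" + "because"; None when neither word occurs.
    pair = counts["since"] + counts["because"]
    features["since_share"] = counts["since"] / pair if pair else None
    return features
```

Each feature on its own says little about who wrote a post; as the excerpt explains, the identifying power comes from combining many of them into one profile.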

Although the details are entirely different, this technique for authorship identification is similar in spirit to the one used by Moshe Koppel et al. in their work on de-anonymization of biblical authors ("Biblical scholarship at the ACL", 7/1/2011). That is, its similarity measure is based on a large set of stylistic choices that are designed to be topic-neutral.
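To turn stylistic feature vectors into a ranked list of candidate authors, and to score how often the top guess is right (the 20% figure above), one simple stand-in is nearest-neighbour ranking by cosine similarity. This is an assumption for illustration; the classifiers used by Narayanan's group or by Koppel et al. may well differ, and the candidate names and vectors in any example are hypothetical.

```python
import math


def cosine(u, v):
    """Cosine similarity of two equal-length numeric vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


def rank_authors(anonymous, candidates):
    """Candidate names ordered from most to least similar to the anonymous vector."""
    return sorted(candidates, key=lambda name: cosine(anonymous, candidates[name]), reverse=True)


def top1_accuracy(trials, candidates):
    """Fraction of (vector, true_author) trials whose top-ranked candidate is correct."""
    if not trials:
        raise ValueError("no trials")
    hits = sum(1 for vec, author in trials if rank_authors(vec, candidates)[0] == author)
    return hits / len(trials)
```

Cosine similarity ignores overall vector length, which helps when texts differ in size, but features on very different scales (a hapax count beside a per-1,000 rate) would normally be standardized first; this sketch skips that step.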

This sort of thing used to happen at Bell Labs all the time, waiting for the coffee machine to brew a new pot, or across a table in the cafeteria:

"I had this idea that you could ___."

"Neat! Except really, you ought to ___."

"OK, but how?"

"Come on, I'll show you."

For some examples, see this reminiscence from Manfred Schroeder, whose office was a couple of doors down from mine for a while after I moved up from the chemistry lab.

[Hat tip to Bill Benzon]

Nick Lamb said,

February 28, 2012 @ 9:41 am

Maybe this is stupid, but it seems like a simple but reassuring adjustment would be to divide the non-fiction data set in half at random and use one half as our baseline while testing the other half. This would give us a new line, which visually illustrates just how noisy (or not) this analysis is. Or maybe that was already done and the result is the impressively flat black line? But I doubt that. I read the rest of Ted's post and he's convinced me that there's probably something real behind his charts, not just the result of staring too hard at an artefact, but the self-test (non-fiction set A vs non-fiction set B) would still be reassuring.
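Nick's split-half check might look like this: shuffle one genre's documents, divide them into two halves, and compute Spearman's rho between the halves' word frequencies. The result gives a noise floor against which the cross-genre correlations can be read. The function names, tokenizer, and fixed random seed are illustrative assumptions; this is not the test Underwood actually ran.

```python
import random
import re
from collections import Counter


def average_ranks(values):
    """1-based ranks, with tied values sharing the mean of their positions."""
    ordered = sorted(values)
    first, last = {}, {}
    for pos, v in enumerate(ordered, start=1):
        first.setdefault(v, pos)
        last[v] = pos
    return [(first[v] + last[v]) / 2 for v in values]


def spearman(x, y):
    """Spearman's rho: the Pearson correlation of the two lists' average ranks."""
    rx, ry = average_ranks(x), average_ranks(y)
    n = len(rx)
    mx, my = sum(rx) / n, sum(ry) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    vx = sum((a - mx) ** 2 for a in rx)
    vy = sum((b - my) ** 2 for b in ry)
    if vx == 0 or vy == 0:
        return float("nan")  # undefined when one list is constant
    return cov / (vx * vy) ** 0.5


def split_half_similarity(documents, top_n=10000, seed=0):
    """Spearman's rho between word frequencies in two random halves of documents."""
    docs = list(documents)
    if len(docs) < 2:
        raise ValueError("need at least two documents to split")
    random.Random(seed).shuffle(docs)
    half = len(docs) // 2
    halves = (Counter(), Counter())
    for i, doc in enumerate(docs):
        tokens = re.findall(r"[a-z]+(?:'[a-z]+)?", doc.lower())
        halves[0 if i < half else 1].update(tokens)
    a, b = halves
    vocab = [w for w, _ in (a + b).most_common(top_n)]
    return spearman([a[w] for w in vocab], [b[w] for w in vocab])
```

Repeating the split with several seeds would show how much the baseline itself wobbles from one random division to the next.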

David L said,

February 28, 2012 @ 10:18 am

Is it possible (he asked in all innocence) that the trends in the graph reflect nothing more than a general increase in vocabulary, especially in some areas of nonfiction? You know, science, economics, political history… Many fields seem to have acquired their own jargon, some of which seeps out into general discourse. It would be interesting to see a similar test applied to different branches of non-fiction, for example.

Charles Wells said,

February 28, 2012 @ 10:29 am

Perhaps differences could be found among genres of fiction: literary, mystery, science fiction, western, romance, and so on. I suspect there is a difference, and not only in vocabulary. Example: I have read mysteries and science fiction regularly for fifty years. When I read the first novels about Kate Shugak, I thought Dana Stabenow wrote like a science fiction author. Not only her style, but the way she separated out types of people. Then I found out that she had indeed written three sf novels earlier. (On the other hand, some fantasy novelists write like romance novelists.)

I included "literary" among the genres just to annoy the literary people, who think they are above genrehood.

Kyle said,

February 28, 2012 @ 11:36 am

Re: Underwood's dilemma about blog vs. journal, I can only say that I wish that some of the Science papers on language discussed on LL (Rao, Atkinson et al.) had remained blog posts. Instead, 10 specialist hours are needed for every non-specialist hour, just to set the published record straight. If that makes me some kind of linguistic exceptionalist, so be it.

Ted Underwood said,

February 28, 2012 @ 1:10 pm

Re: Nick's idea about plotting the internal homogeneity of the non-fiction corpus: that's a great suggestion. I did run internal homogeneity tests, and they showed increasing homogeneity both in the nonfiction corpus and among fiction, poetry, and drama. But I didn't include those additional graphs in the blog post, just because I was trying to keep things pithy.

However, it *would* be pithy and effective to use the self-similarity of a divided nonfiction corpus instead of that flat black line. I might actually do another post reflecting that improvement, as well as other suggestions made by commenters.

Re: increasing vocabulary: I'm only doing Spearman comparison on the top 5k or 10k of the lexicon in each compared subset. So I doubt that expansion of the lexicon as such makes much difference. But certainly the topical differentiation of nonfiction is a big issue here; vocabulary that had been specialized may become more prominent, etc.

Ted Underwood said,

February 28, 2012 @ 1:13 pm

Oh, and by the way — I love the Bell Labs comparison! Scholarly life on the internet is lively these days …

ENKI-][ said,

February 28, 2012 @ 3:59 pm

This is relevant to my interests… an upcoming project I am involved in is graphing an estimate of influence in a social network over time by finding semantic distance between the writings of individual speakers.

Andy Averill said,

March 1, 2012 @ 1:55 am

To my eyes it looks like there's a recent uptick in the similarity of fiction and nonfiction. Could that have something to do with the works of David Foster Wallace?

Karl Weber said,

March 1, 2012 @ 7:02 am

@Andy Averill: If there is a recent uptick in the similarity of fiction and nonfiction, perhaps it reflects the increasing "creativity" (vague word) of nonfiction and the growing willingness of nonfiction writers to mimic the concerns and styles of fiction writers.

Ted Underwood said,

March 2, 2012 @ 12:33 pm

The uptick at the end isn't likely to be anything contemporary, because we're still in the nineteenth century! :)

Here's an update to the original post, profiting particularly from remarks above by Mark Liberman and Nick Lamb:

http://bit.ly/AE6MET

Graham Peterson said,

March 2, 2012 @ 5:14 pm

Shouldn't the correlations over time be deflated by the size of the lexicon over such long periods? An "impact" ratio of (correlation)/(lexicon) mapped against time would control for incidental variance of word-choice.

That the correlations track with approximately the same slope suggests a trend common among them, rather than something remarkable between the discourses — that would be the lexical pool all discourses draw off of. (and as for the rho=1 in the sciences — the entire purpose of rationalist discourse after Bacon was to set and maintain methodological, and probably not surprisingly then, lexical standards)
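Graham's proposed "impact" ratio is simple arithmetic. One reading of it, assuming that "lexicon" means the number of distinct word types in a period's pooled text, is sketched below; the function names are hypothetical. Underwood's reply above, that he compares only the top 5k or 10k words, bears on whether such a control is needed at all.

```python
import re


def lexicon_size(text):
    """Number of distinct lowercased word types in a period's pooled text (assumption)."""
    return len(set(re.findall(r"[a-z]+(?:'[a-z]+)?", text.lower())))


def impact_ratio(rho, period_text):
    """The proposed (correlation)/(lexicon) ratio for one period."""
    size = lexicon_size(period_text)
    if size == 0:
        raise ValueError("period text contains no words")
    return rho / size
```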

Graham Peterson said,

March 2, 2012 @ 5:23 pm

Sorry I just saw this: "I'm only doing Spearman comparison on the top 5k or 10k of the lexicon in each compared subset. So I doubt that expansion of the lexicon as such makes much difference."

Do you mean a group of 5 or 10 thousand attestations, or 5 or 10 thousand most-frequent words? 5 or 10 thousand attestations would say nothing about the variance of the distribution of words.

Methodological explorations of genre and gender in historical book corpora | HDW Notebook said,

March 8, 2012 @ 1:08 pm

[…] seminar in methodological experimentation and skepticism. Subsequent discussion continued at the Language Log, a group blog where linguists hang out, as well as Ben Schmidt's blog, where he attempted to […]

[links] Link salad doesn’t tell you where to go, baby | jlake.com said,

June 14, 2012 @ 1:58 pm

[…] Distances among genres and authors — Some linguistic neepery on word corpuses etc. from Language Log. […]

The Emergence of Literary Diction « archaeoinaction.info said,

June 9, 2013 @ 5:15 pm

[…] Scott Weingart, John Theibault, Ryan Cordell, and others at The Stone and the Shell — as well as Mark Liberman and Nick Lamb at Language Log — fundamentally transformed this project, and steered it away from dead ends. Conversation with […]