Speech-based "lie detection"? I don't think so


Mike Paluska, "Investigator: Herman Cain innocent of sexual advances", CBS Atlanta, 11/10/2011:

Private investigator TJ Ward said presidential hopeful Herman Cain was not lying at a news conference on Tuesday in Phoenix.

Cain denied making any sexual actions towards Sharon Bialek and vowed to take a polygraph test if necessary to prove his innocence.

Cain has not taken a polygraph but Ward said he does have software that does something better.

Ward said the $15,000 software can detect lies in people's voices.

This amazingly breathless and credulous report doesn't even bother to tell us what the brand name of the software is, and certainly doesn't give us anything but Mr. Ward's unsupported (and in my opinion almost certainly false) assertion about how well it works:

Ward said the technology is a scientific measure that law enforcement use as a tool to tell when someone is lying and that it has a 95 percent success rate.

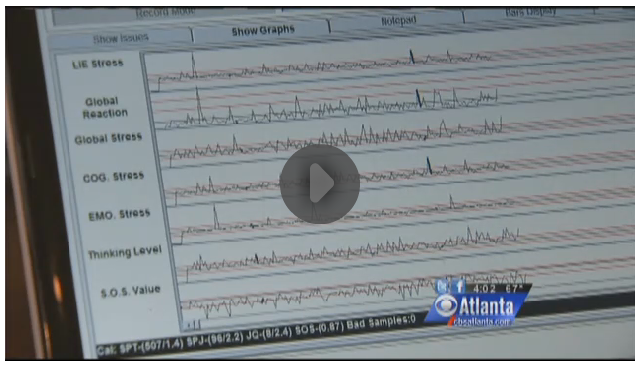

The screen views available in the report don't (as far as I can see) show us the software's name, but they do show that it's some sort of "voice stress" analyzer (perhaps one of Nemesysco's "Layered Voice Analysis" products?):

[Update: the screenshots at this site confirm that Mr. Ward's computer is showing a Nemesysco "Layered Voice Analysis" product.]

Curious readers might want to take a look at Harry Hollien and James Harnsberger, "Evaluation of two voice stress analyzers", J. Acoust. Soc. Am. 124(4):2458, October 2008; and James Harnsberger, Harry Hollien, Camilo Martin, and Kevin Hollien, "Stress and Deception in Speech: Evaluating Layered Voice Analysis", Journal of Forensic Sciences 54(3) 2009. The second paper's abstract:

This study was designed to evaluate commonly used voice stress analyzers—in this case the layered voice analysis (LVA) system. The research protocol involved the use of a speech database containing materials recorded while highly controlled deception and stress levels were systematically varied. Subjects were 24 each males/females (age range 18–63 years) drawn from a diverse population. All held strong views about some issue; they were required to make intense contradictory statements while believing that they would be heard/seen by peers. The LVA system was then evaluated by means of a double blind study using two types of examiners: a pair of scientists trained and certified by the manufacturer in the proper use of the system and two highly experienced LVA instructors provided by this same firm. The results showed that the “true positive” (or hit) rates for all examiners averaged near chance (42–56%) for all conditions, types of materials (e.g., stress vs. unstressed, truth vs. deception), and examiners (scientists vs. manufacturers). Most importantly, the false positive rate was very high, ranging from 40% to 65%. Sensitivity statistics confirmed that the LVA system operated at about chance levels in the detection of truth, deception, and the presence of high and low vocal stress states. [emphasis added]
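To get a feel for what "about chance levels" means here, one can plug the quoted ranges into a standard signal-detection sensitivity index. The abstract does not say which sensitivity statistic the authors used, so the choice of d′ (the z-scored hit rate minus the z-scored false positive rate) is an assumption made for illustration, as is the function name `d_prime`. A minimal sketch:

```python
from statistics import NormalDist


def d_prime(hit_rate, false_alarm_rate):
    """Signal-detection sensitivity: z(hit rate) - z(false alarm rate).

    A value of 0 means the detector cannot tell lies from truths at all.
    """
    z = NormalDist().inv_cdf  # inverse CDF of the standard normal
    return z(hit_rate) - z(false_alarm_rate)


# Extremes of the ranges quoted in the abstract (hits 42-56%, false
# positives 40-65%). Pairing them this way is an assumption; the
# abstract does not say which hit rate went with which false positive rate.
most_favorable = d_prime(0.56, 0.40)
least_favorable = d_prime(0.42, 0.65)

# A hypothetical detector that really was right 95% of the time on both
# lies and truths, for comparison.
claimed = d_prime(0.95, 0.05)

print(f"most favorable pairing:  d' = {most_favorable:.2f}")
print(f"least favorable pairing: d' = {least_favorable:.2f}")
print(f"hypothetical 95%/5%:     d' = {claimed:.2f}")
```

Under these assumptions even the most favorable pairing stays below 0.5, and the least favorable one is negative, while a detector that was right 95% of the time in both directions would score above 3.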

You might also take a look at the section on "Voice Stress Technologies" in Robert Pool, Field Evaluation in the Intelligence and Counterintelligence Context, National Research Council, 2009:

One of the earliest products was the Psychological Stress Evaluator from Dektor Corporation. […] Other voice stress technologies include the Digital Voice Stress Analyzer from the Baker Group, the Computer Voice Stress Analyzer from the National Institute for Truth Verification, the Lantern Pro from Diogenes, and the Vericator from Nemesysco.

Over the years, these technologies have been tested by various researchers in various ways, and Rubin described a 2009 review of these studies that was carried out by Sujeeta Bhatt and Susan Brandon of the Defense Intelligence Agency. After examining two dozen studies conducted over 30 years, the researchers concluded that the various voice stress technologies were performing, in general, at a level no better than chance — a person flipping a coin would be equally good at detecting deception.

Let me quote at length what I wrote about this general topic more than seven years ago — "Analyzing voice stress", 7/2/2004:

Yesterday's NYT had an article on voice stress analyzers. As a phonetician — someone who studies the physics and physiology of speech — I've been amazed by this work for almost three decades. What amazes me is that research (of a sort) and commerce (at a low level) and law-enforcement applications (here and there) keep on keepin' on, decade after decade, in the absence of any algorithmically well defined, reproducible effect that an ordinary working speech researcher like me can go to the lab, implement and test.

Well, these days there's no need to go to the lab for this stuff — you just write and run some programs on your laptop. But that makes the whole thing all the more amazing, because after 50 years, it's still not clear what those programs should do. I'm not complaining that it's unclear whether the methods work — that's true too, but the real scandal is that it's still unclear what the methods are supposed to be.

Specifically, the laryngeal microtremors that these techniques depend on haven't ever been shown clearly to exist, as far as I know. No one has ever shown that if these microtremors exist, it's possible to measure them in the pitch of the voice, in a way that separates them from all the other phenomena that modulate the pitch at similar rates. And that's before we get to the question of how such undefined measurements might be related to truth-telling. Or not.

How can I make you see how amazing this is? Suppose that in 1957 some physiologist had hypothesized that cancer cells have different membrane potentials from normal cells — well, not different potentials, exactly, but a sort of a different mix of modulation frequencies in the variation of electrical potentials between the inside of the cell and the outside. And further suppose that some engineer cooked up a proprietary circuit to measure and display these alleged variations in "cellular stress" (to the eyes of a trained cellular stress expert, of course), and thereby to diagnose cancer, and started selling such devices to hospitals, and selling training courses in how to use them. And suppose that now, almost half a century later, there is still no documented, well-defined procedure for ordinary biomedical researchers to use to measure and quantify these alleged cell-membrane "tremors" — but companies are still making and selling devices using proprietary methods for diagnosing cancer by detecting "cellular stress" — computer systems now, of course — while well-intentioned hospital administrators and doctors are occasionally organizing little tests of the effectiveness of these devices. These tests sometimes work and sometimes don't, partly because the cellular stress displays need to be interpreted by trained experts, who are typically participating in a diagnostic team or at least given access to lots of other information about the patients being diagnosed.

This couldn't happen. If someone tried to sell cancer-detection devices on this basis, they'd get put in jail.

But as far as I can tell, this is essentially where we are with "voice stress analysis."

As far as I can tell, the situation has not changed since 2004, except that the software packages have niftier-looking user interfaces, and their developers and marketers use different packages of buzzwords. Thus the Nemesysco marketing literature talks about "wide range spectrum analysis and micro-changes in the speech waveform itself (not micro tremors!)".

But let me repeat, with emphasis, something that I wrote later in the same post:

I'm not prejudiced against "lie detector" technology — if there's a way to get some useful information by such techniques, I'm for it. I'm not even opposed to using the pretense that such technology exists to scare people into not lying, which seems to me to be its main application these days.

I'd be happy to participate in a fair test of whatever technology Mr. Ward was showing us, and I'd even be happy to help organize such a test. But pending some credible explanation of the algorithms used, and some credible test of their efficacy, color me skeptical.

A few other LL posts on related topics:

"Determining whether a lottery ticket will win, 99.999992849% of the time", 8/29/2004.

"KishKish BangBang", 1/17/2007

"Industrial bullshitters censor linguists", 4/30/2009 (see especially the comments threads, e.g. here, here, here, here.)

"Speech-based lie detection in Russia", 6/8/2011

david said,

November 10, 2011 @ 3:49 pm

It does look like the Layered Voice Analysis product as shown in Fig. 6 in this report submitted to the Department of Justice in 2007.

Jerry Friedman said,

November 10, 2011 @ 4:56 pm

I'd like to see someone "analyze" the proprietors' or users' oral claims with their own software and "show" that they're lying 40–65% of the time.

[(myl) A clever idea! But it's likely that someone with access to one of these machines could learn to control their voice in ways that cause it to react reliably in one way or another. And some of the people in the chosen set may have had enough practice with the system for these biofeedback learning loops to kick in. So you'd probably want to try crossing the voices and systems.]

P. Orbis Proszynski said,

November 10, 2011 @ 5:06 pm

Jerry, what an excellent idea! Set up a circular diagnostic in which each company sends representatives to evaluate the truth in a number of test sound files, each consisting of two statements: "I believe the software I sell works as advertised" and "I believe the software I sell offers results superior to that of my competitors". Of course the sound files will be recorded by all company representatives. Maybe with some people who've pled guilty to fraud selling unrelated products thrown into the mix as a baseline.

David Eddyshaw said,

November 10, 2011 @ 9:30 pm

I doubt whether it's so simple. More likely that the manufacturers are quite genuinely believers in their own hokum. It's not easy to be objective when your livelihood is on the line.

Dan M. said,

November 11, 2011 @ 3:02 am

Considering that the polygraph test appears to have been going strong for something like a century, I wouldn't expect mere worthlessness to be a hindrance to voice stress analysis.

Mr Punch said,

November 11, 2011 @ 7:03 am

I'd guess that very few people actually lie more than about five percent of the time. If that's right, a 95 percent success rate is easy.
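The arithmetic behind that remark can be sketched in a few lines. The 5% lie rate is the guess from the comment above, treated purely as an assumption, and `accuracy` is a hypothetical helper, not anything from the software under discussion:

```python
def accuracy(lie_rate, hit_rate, false_alarm_rate):
    """Overall share of correct calls for a detector.

    lie_rate: fraction of statements that are lies (assumed, not measured)
    hit_rate: P(detector says "lie" | statement is a lie)
    false_alarm_rate: P(detector says "lie" | statement is true)
    """
    caught_lies = lie_rate * hit_rate
    cleared_truths = (1.0 - lie_rate) * (1.0 - false_alarm_rate)
    return caught_lies + cleared_truths


LIE_RATE = 0.05  # the commenter's guess, used here only as an assumption

# A "detector" that never flags anything: it says "truth" every time.
always_truth = accuracy(LIE_RATE, hit_rate=0.0, false_alarm_rate=0.0)

# A coin flip: flags half of everything, lies and truths alike.
coin_flip = accuracy(LIE_RATE, hit_rate=0.5, false_alarm_rate=0.5)

print(f"always says 'truth': {always_truth:.0%} correct, no lies caught")
print(f"coin flip:           {coin_flip:.0%} correct")
```

With lies this rare, the do-nothing detector is right 95% of the time while catching no lies at all, which is why a bare "success rate" says little without the hit rate and the false positive rate reported separately.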

Kapitano said,

November 11, 2011 @ 8:39 am

My favourite bullshit lie-detection system (apart from the polygraph itself) is from this man. Jack Johnson (MA), when not selling the idea that you can "last longer in bed" (ie. have multiple orgasms) by literally breathing your sexual energy into your partner and back again…has a sideline in backmasking detection software.

The idea is that, when you tell a lie, your subconscious encodes the truth in *backwards speech*, so that when you tell a lie forwards, your listener can hear the truth by recording it and playing it backwards.

Johnson markets this crackpottery to…police forces. As an interrogation tool. Doesn't that make you feel safe?

darrin said,

November 11, 2011 @ 9:36 am

"The results showed… 'true positive' (or hit) rates… near chance (42–56%)… Most importantly, the false positive rate was very high, ranging from 40% to 65%."

If you graph those numbers in an ROC plot, you get a rather largish rectangle, most of which is below the guessing line. I'd love to know how an ROC curve constrained to go somewhere through that box could end up yielding "a 95% success rate".
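A rough check of that rectangle, as a sketch: it treats the two quoted ranges as the sides of a box in ROC space (false positive rate on the x-axis, hit rate on the y-axis), which is one reading of the abstract rather than something the paper states. The function names are made up for illustration.

```python
# Ranges quoted from the Harnsberger et al. abstract.
TPR_RANGE = (0.42, 0.56)  # "true positive" (hit) rate
FPR_RANGE = (0.40, 0.65)  # false positive rate


def fraction_below_chance(tpr_range, fpr_range, steps=400):
    """Share of the box where TPR < FPR, estimated on a midpoint grid."""
    (t_lo, t_hi), (f_lo, f_hi) = tpr_range, fpr_range
    below = 0
    for i in range(steps):
        fpr = f_lo + (i + 0.5) * (f_hi - f_lo) / steps
        for j in range(steps):
            tpr = t_lo + (j + 0.5) * (t_hi - t_lo) / steps
            if tpr < fpr:  # under the diagonal "guessing line"
                below += 1
    return below / (steps * steps)


def balanced_accuracy(tpr, fpr):
    """Accuracy when lies and truths are equally common in the test set."""
    return 0.5 * tpr + 0.5 * (1.0 - fpr)


share_below = fraction_below_chance(TPR_RANGE, FPR_RANGE)
# Most favorable corner of the box: highest hit rate, lowest false positives.
best_case = balanced_accuracy(max(TPR_RANGE), min(FPR_RANGE))

print(f"share of box below the guessing line: {share_below:.2f}")
print(f"best-case balanced accuracy in the box: {best_case:.2f}")
```

On this reading roughly 64% of the box lies below the guessing line, and even its most favorable corner corresponds to about 58% accuracy on balanced material, nowhere near 95%.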

Dan Hemmens said,

November 11, 2011 @ 1:58 pm

If you graph those numbers in an ROC plot, you get a rather largish rectangle, most of which is below the guessing line. I'd love to know how an ROC curve constrained to go somewhere through that box could end up yielding "a 95% success rate".

I suspect a lot of it comes from anecdotal evidence or field trials. I actually think a 95% success rate is pretty poor for lie detection in real life.

If you have a situation where people are as likely to be telling the truth as lying, then you'd expect a hit rate of around 50%, but I'd expect that to increase *dramatically* the moment you put everything in context. Unless we assume that fully fifty percent of people in prison were wrongly convicted, we have to assume that the police and the criminal justice system get it right significantly more than half the time.

To put it another way, I'm sure it would be trivially easy to get a 95% success rate on a lie detector simply by writing the word "truth" on a piece of paper, and then asking people simple factual questions. Most people tell the truth most of the time, and I'd expect well more than 95% of people to answer truthfully to questions like "what is your name?" and "what colour is the sky?"

[(myl) The numbers cited by Harnsberger et al. were from a study in which the four conditions (low-stress truth, low-stress lie, high-stress truth, high-stress lie) were equally represented in the test material. The results are here, for two teams of evaluators using the LVA system:

According to the paper,

Thus the results are single points in the signal-detection trade-off space, not full explorations of the ROC curves.]

Methadras said,

November 11, 2011 @ 5:26 pm

The software was most likely X13-VSA Voice analysis software.

[(myl) Do you have any evidence for this view? It seems clearly to be false, since the TV clip shows a complex screen display point-for-point identical with a display associated with the Nemesysco product.]

Dan Hemmens said,

November 11, 2011 @ 6:20 pm

The numbers cited by Harnsberger et al. were from a study in which the four conditions (low-stress truth, low-stress lie, high-stress truth, high-stress lie) were equally represented in the test material

I think we're talking at cross purposes. I was trying to explain how something could be described as being used "with ninety five percent accuracy" despite its being shown to have no value whatsoever in a double-blind test.

Obviously the report cited by Harnsberger et al does *not* show the technique to be ninety-five percent accurate. I was just pointing out that it's possible that the test could still have a "ninety five percent success rate" simply because ninety five percent of the time the test results will match the preconceptions of the test-giver, which will be correct.

I wasn't suggesting that their software actually *worked* merely that it would be relatively straightforward to demonstrate a high-sounding success rate in a real working environment. The fact that a double-blind study shows that their system doesn't work demonstrates that either (a) the 95% statistic is an outright lie (certainly a possibility) or (b) the 95% statistic is an estimate based on anecdotal evidence, which is also effectively a lie, but a more pernicious one.

[(myl) Yes, I understand this point — see "Determining whether a lottery ticket will win, 99.999992849% of the time" for an attempt at a humorous exposition. And I recognized that you were not arguing for a 95% success rate, in any meaningful sense. I just thought that it would be good to explain a bit more about how carefully and seriously the test in question was done.]

Dan Hemmens said,

November 11, 2011 @ 6:47 pm

Yes, I understand this point — see "Determining whether a lottery ticket will win, 99.999992849% of the time" for an attempt at a humorous exposition. And I recognized that you were not arguing for a 95% success rate, in any meaningful sense.

Cool, sorry I was just worried I hadn't communicated clearly enough (and obviously I didn't intend to imply that you'd failed to understand my point, I was just worried I hadn't articulated it).

I'll stop apologizing now.

Lance said,

November 12, 2011 @ 7:38 am

I think the most telling testimonial for Layered Voice Analysis comes from TJ Ward's own website:

Note that (a) "two years ago" indicates that this was written in 2007, and (b) in spite of Ward's confidence in how useful his lie detector was, the case remains unsolved.

Trimegistus said,

November 12, 2011 @ 8:02 am

Somehow I can't avoid the suspicion that if the test supported the accusations against Cain you'd all be talking about how anti-science Republicans are for doubting the results.

Jason said,

November 12, 2011 @ 8:13 am

Somehow I can't avoid the suspicion that if the test supported the accusations against Cain you'd all be talking about how anti-science Republicans are for doubting the results.

Wingnuts tend to project their own motivations onto others. This is exactly the kind of hypocrisy common in the right wing noise machine. But the language log contributors are all scientists, and trust me, were Cain to "fail" the test, there would be the same post, questioning the validity of voice stress analysis pseudo-science.

[(myl) This particular commenter is indeed self-refuting. When we defend those on the right side of the political spectrum from mistaken or inappropriate linguistic attacks, he's silent; when we note language-related problems on the part of someone on the left side of the political spectrum, he's silent; but when we post something that fails to support one of his ideological pals 100%, he accuses us of being partisan.

In this particular case, I linked to five previous LL posts in which "voice stress" technology was called into question — the idea that I would then attack Republicans for agreeing with me is preposterous.

In the future, please don't feed this troll by bothering to respond to his whining, which is not only false but also repetitive to the point of tedium.]

Jerry Friedman said,

November 12, 2011 @ 11:01 am

@David Eddyshaw: The figure quoted was that the rate of false positives of the LVA system was 40–65%. So if the coders, salespeople, etc., really believe in their product, it should say they're lying about half the time they say so.

I didn't think of the possibility that MYL mentioned, that people could learn to get the desired results reliably. So I don't think any variation of my test will work. For instance, the sellers could conspire to make each other's product look good—a win-win outcome for everybody but the public. We'll have to fall back on ordinary testing. I can't conceive that the police, who have a good deal of practice at skepticism, wouldn't do some simple tests. (But lots of things happen without my conceiving them.)

[(myl) The study that I cited (Harnsberger et al., "Stress and Deception in Speech: Evaluating Layered Voice Analysis", Journal of Forensic Sciences 54(3) 2009) was supported by the Counterintelligence Field Activity of the U.S. Department of Defense, who would be very happy to find a device of this kind that works even if imperfectly, but have no interest in wasting money and time on things that perform at or near chance. My impression is that law-enforcement agencies, especially local ones, are more credulous. (Though if we believe Mr. Ward's assertion that "nearly 70 law enforcement agencies nationwide use the voice software", maybe they're not all that much more credulous on average, since there are thousands of state, county, and city law enforcement agencies in the U.S.)]

Charles Gaulke said,

November 12, 2011 @ 11:43 am

Out of curiosity, have any of the tests of LVA involved checking to see if it consistently gives the same results for the same input?

[(myl) My understanding is that there are two parts to the process of getting "results" with such systems: one part is done by signal-processing software, while the other is a matter of interpretation by human "operators". In the cited Harnsberger et al. study, they used two teams of trained operators, who apparently came to somewhat different conclusions, since their scores on the same test material were somewhat different.

The signal-processing software presumably gives deterministic results — though I'm confident that it would often give different results for the same speech recorded with different microphones, coded and transmitted in different ways, and so on.]

Dan Hemmens said,

November 13, 2011 @ 7:50 am

Note that (a) "two years ago" indicates that this was written in 2007, and (b) in spite of Ward's confidence in how useful his lie detector was, the case remains unsolved.

Surely that proves how *well* it worked. He said the witnesses weren't being forthcoming *and they weren't*.

Basically, this software is kind of like Deanna Troi, isn't it? You look at a readout of somebody's voice and then say "I sense great hostility, captain."

Glen Gordon said,

November 13, 2011 @ 12:55 pm

Correct me if I'm wrong but American TV gives me the overall impression that despite all the well-known false positives with these sorts of things, Americans appear to be thoroughly brainwashed about the effectiveness of lie detectors.

You see it on programs like Jerry Springer where they'll put on some dead-beat father denying the baby's his (ie. the same boring plot over and over) and then predictably they'll do a lie detector test on him to discover that he was lying all along. Then a fight ensues to keep idiots glued to their TV set. (OK fine, I'm glued to the TV set but more in a psychoanalysis sorta way, hahaha.) It's so silly and it's almost as if these shows are deliberately trying to indoctrinate gullible people into accepting lie detectors as infallible machines for some reason. Or maybe it's just the allure of an all-seeing apparatus that gets everyone hooked.

[(myl) The evidence about conventional ("polygraph") methods is that, in the hands of a well-trained operator, the performance is well above chance. The method is very far from infallible, which is why (as I understand it) such results are not admissible in court. The problem with the voice-based analyzers is that far from being "something better" than the conventional polygraph, as Mr. Ward is quoted as asserting, the tests so far show performance hardly if at all distinguishable from coin-flipping.]