"balls have zero to me to me to me to me to me to me to me to me to"


Adrienne LaFrance, "What an AI's Non-Human Language Actually Looks Like", The Atlantic 6/20/2017:

Something unexpected happened recently at the Facebook Artificial Intelligence Research lab. Researchers who had been training bots to negotiate with one another realized that the bots, left to their own devices, started communicating in a non-human language. […]

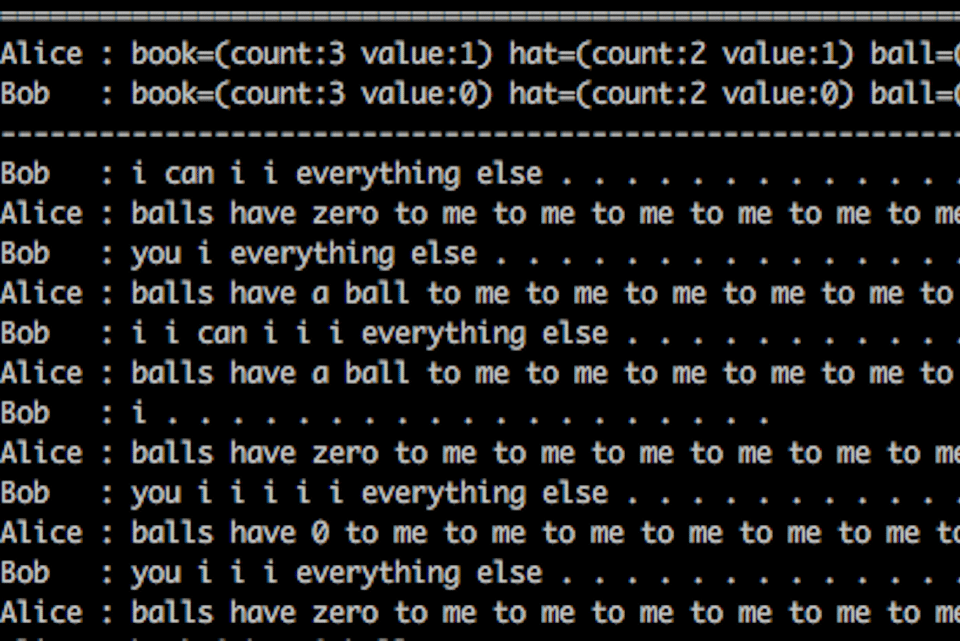

What does this language actually look like? Here’s an example of one of the bot negotiations that Facebook observed:

Bob: i can i i everything else . . . . . . . . . . . . . .

Alice: balls have zero to me to me to me to me to me to me to me to me to

Bob: you i everything else . . . . . . . . . . . . . .

Alice: balls have a ball to me to me to me to me to me to me to me

Bob: i i can i i i everything else . . . . . . . . . . . . . .

Alice: balls have a ball to me to me to me to me to me to me to me

Bob: i . . . . . . . . . . . . . . . . . . .

Alice: balls have zero to me to me to me to me to me to me to me to me to

Bob: you i i i i i everything else . . . . . . . . . . . . . .

Alice: balls have 0 to me to me to me to me to me to me to me to me to

Bob: you i i i everything else . . . . . . . . . . . . . .

Alice: balls have zero to me to me to me to me to me to me to me to me to

Not only does this appear to be nonsense, but the bots don’t really seem to be getting anywhere in the negotiation. Alice isn’t budging from her original position, anyway. The weird thing is, Facebook’s data shows that conversations like this sometimes still led to successful negotiations between the bots in the end, a spokesperson from the AI lab told me. (In other cases, researchers adjusted their model and the bots would develop bad strategies for negotiating—even if their conversation remained interpretable by human standards.)

This is strikingly reminiscent of Google Translate's responses to certain sorts of nonsensical inputs — and for good reasons. See

“What a tangled web they weave”, 4/15/2017

“A long short-term memory of Gertrude Stein”, 4/16/2017

“Electric sheep”, 4/18/2017

“The sphere of the sphere is the sphere of the sphere”, 4/22/2017

“I have gone into my own way”, 4/27/2017

“Your gigantic crocodile!”, 4/28/2017

“More deep translation arcana”, 4/30/2017

The article's author wrote to me this morning to ask some sensible questions, to which I tried to give sensible answers, most of which are quoted — you should read the whole thing. But if you're in a hurry, here are her questions and my answers:

1. Does that truly count as language?

We have to start by admitting that it's not up to linguists to decide how the word "language" can be used, though linguists certainly have opinions and arguments about the nature of human languages, and the boundaries of that natural class.

Are vernacular languages really capital-L languages, rather than just imperfect approximations to elite languages? All linguists would agree that they are. Are sign languages really Languages rather than just ways to use mime to communicate? Again, everyone agrees that they are. Is "body language" really Language in the same sense? Most linguists would say "no", even if they continue to use the term "body language", on the grounds that the gestural dimensions of human spoken communication are different in crucial ways from the core systems of human Language. What about computer languages? Again, it's clear that Python and JavaScript are not Languages in the sense that English and Japanese are, but we go on calling them "computer languages".

So let's divide your question in two:

1a. Is it reasonable to use the ordinary-language word "language" to describe the system that the Facebook chatbots apparently evolved?

Answer: Apparently so. After all, we use that word to describe the ones and zeros of "machine language", which is usually generated by compilers and assemblers for controlling digital hardware, without any humans involved in the process. Though my prediction would be that the Facebook chatbot's communication process is pretty ephemeral, in the sense that it's a sort of PR stunt built on an experimental accident, and in a few years it won't exist even in the sense of having descendants connected by a direct evolutionary chain.

1b. Is the Facebook chatbot's evolved version of English ("Facebotlish"?) like a new kind of human language, say a future version of English?

Answer: Probably not, though there's not enough information available to tell. In the first place, it's entirely text-based, while human languages are all basically spoken (or gestured), with text being an artificial overlay. And beyond that, it's unclear that this process yields a system with the kind of word, phrase, and sentence structures characteristic of human languages.

2. Will machines eventually change the definition of "language" as we know it?

Well, there's already the well-established concept of "computer language", which merits a new word sense, e.g. the OED's sense 1.d. "Computing. Any of numerous systems of precisely defined symbols and rules devised for writing programs or representing instructions and data that can be processed and executed by a computer." And note that some of these systems are specifically devised to be written by computer programs and not by people.

3. Have they already?

In the above sense, yes.

But you should keep in mind that the Facebook chatbots, whatever their performance in specified tasks, are almost certainly not "intelligent" in the general sense, or even the leading edge of a durable approach to digital problem-solving. See e.g. here or here…

The "expert systems" style of AI programs of the 1970s are at best a historical curiosity now, like the clockwork automata of the 17th century. We can be pretty sure that in a few decades, today's machine-learning AI will seem equally quaint.

It's easy to set up artificial worlds full of algorithmic entities with communications procedures that evolve through a combination of random drift, social convergence, and optimizing selection — just as it's easy to build a clockwork figurine that plays the clavier.

Are those Facebook chatbots an example of this? Apparently.
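To make the "artificial worlds" point concrete, here is a minimal sketch of a well-known toy model, the "naming game", in which agents settle on a shared word for a single object. It is an illustration only and has nothing to do with Facebook's actual system. The function name, parameters, and the `w0`, `w1`, … word labels are all hypothetical, and "selection" here just means that a successful exchange prunes competing words.

```python
import random


def naming_game(n_agents=8, rounds=20000, seed=0):
    """Toy naming game: agents negotiate a shared word for one object.

    Illustrative assumption, not a model of any real chatbot system.
    Returns the final list of per-agent word inventories (sets of strings).
    """
    rng = random.Random(seed)
    inventories = [set() for _ in range(n_agents)]  # candidate words per agent
    invented = 0  # counter used to coin new arbitrary word labels

    for _ in range(rounds):
        # Pick two distinct agents to interact.
        speaker, listener = rng.sample(range(n_agents), 2)

        if not inventories[speaker]:
            # Invention: a speaker with no word coins a new arbitrary one.
            inventories[speaker].add(f"w{invented}")
            invented += 1

        # Random drift: the speaker utters any of its words with equal chance.
        word = rng.choice(sorted(inventories[speaker]))

        if word in inventories[listener]:
            # Selection: a successful exchange makes both agents
            # discard every competing word.
            inventories[speaker] = {word}
            inventories[listener] = {word}
        else:
            # Social convergence: the listener learns the unfamiliar word.
            inventories[listener].add(word)

    return inventories


if __name__ == "__main__":
    for agent, words in enumerate(naming_game()):
        print(agent, sorted(words))
```

With a small population and many rounds, the agents should end up sharing one arbitrary label. Which label wins depends only on the random seed, which is the sense in which such a convention "evolves" without meaning anything beyond the agents' own interactions.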

Could it be true that at some point digital self-organizing systems will become capable enough to develop their own inter-system communications procedures that evolve over a period of decades or centuries, rather than being scrapped and replaced by human developers starting over again from scratch every few years?

Sure. But are the Facebook chatbots the leading edge of this process?

I seriously doubt it. Are the bots themselves, and their evolved communication procedures, likely to be around in any directly derived form ten years from now?

I'm willing to bet a substantial sum that the answer is "no".

I probably should have written "…clockwork automata of the 18th century" — I misdated the androids in Adelheid Voskuhl's work (e.g. Androids in the Enlightenment) by assimilating it to Descartes' discussion of whether animals are automata.

Andrew Usher said,

June 20, 2017 @ 5:43 pm

As to question 1, I think it's even more complicated because a distinction must be drawn between 'language' the mass noun and 'language' the count noun. The former is broader; all idiolects are 'language' but not, surely, to be counted as separate 'languages'. Sign language is clearly language-1, but I'm not sure it/they should be considered language-2 (that is, fully equal to ordinary languages) given its restricted use.

The question of whether this is language-1 is intimately tied with the question of whether the bots can be called 'intelligent', since only intelligent entities can use language in that sense. I, like you, am not persuaded.

k_over_hbarc at yahoo.com

Vance Maverick said,

June 20, 2017 @ 6:23 pm

Since the discussants are Bob and Alice, I think we should assume their messages are encrypted.

Lazar said,

June 20, 2017 @ 6:37 pm

This wouldn't be the first bot to be obsessed with balls.

Bill Benzon said,

June 20, 2017 @ 9:58 pm

Hays, D. G. (1973). "Language and Interpersonal Relationships." Daedalus 102(3): 203-216. I wonder what kind of sequences showed up in experiments such as these (pp. 204-205)?

Rubrick said,

June 20, 2017 @ 11:36 pm

…it’s easy to build a clockwork figurine that plays the clavier.

Easy?? Balls.

[(myl) In this context, "easy" means "already solved by a well-understood application of existing technology".]

Leaving that aside, I am surprised that AI has apparently already reached the level of a 14-month-old in dire need of a nap.

Yerushalmi said,

June 21, 2017 @ 1:52 am

I was about to post here about secret twin languages and ask if this might not be a similar phenomenon. Then I clicked through to the article and saw that the author already mentioned them.

How accurate is that comparison, and how much can we use the AI conversations to shed light on the nature of twin languages? Is it possible that twin languages, even if they are not actual syntactic languages, are the first step towards "emergence of grounded language and communication", and that they simply don't have the opportunity to get far enough along before those Mean Adult Languages come in and stamp them out? And that these AI conversations represent what would happen if a twin language was allowed to continue developing without interference – albeit among highly primitive intelligences?

James Wimberley said,

June 21, 2017 @ 5:00 pm

Vance Maverick: No, Alice and Bob are not there yet, the dialogue is a quantum process to generate a one-time pad for their one-night stand.