Altmann: Hauser apparently fabricated data


There's new information emerging from the slow-motion Marc Hauser train wreck. Carolyn Johnson, "Journal editor questions Harvard researcher's data", Boston Globe 8/27/2010:

The editor of a scientific journal said today the only "plausible" conclusion he can draw, on the basis of access he has been given to an investigation of prominent Harvard psychology professor Marc Hauser's research, is that data were fabricated.

Gerry Altmann, the editor of the journal Cognition, which is retracting a 2002 article in which Hauser is the lead author, said that he had been given access to information from an internal Harvard investigation related to that paper. That investigation found that the paper reported data that was not present in the videotape record that researchers make of the experiment.

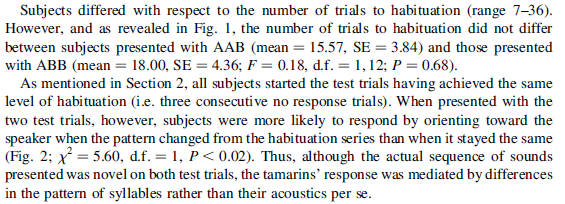

“The paper reports data … but there was no such data existing on the videotape. These data are depicted in the paper in a graph,” Altmann said. “The graph is effectively a fiction and the statistic that is supplied in the main text is effectively a fiction.”

Gerry Altmann posted a statement on his weblog with a more detailed account ("harvard misconduct: setting the record straight", 8/27/2010). As indicated in Johnson's article, the facts and interpretations that Altmann provides go beyond, to a shocking degree, previously described issues of lost data or disagreement about subjective coding of animal behavior.

Altmann writes:

As Editor of the journal Cognition, I was given access to the results of the investigation into the allegations of misconduct against Marc Hauser as they pertained to the paper published in Cognition in 2002 which has now been retracted. My understanding from those results is the following: the monkeys were trained on what we might call two different grammars (i.e. underlying patterns of sequences of syllables). One group of monkeys were trained on Grammar A, and another group on Grammar B. At test, they were given, according to the published paper, one sequence from Grammar A, and another sequence from Grammar B – so for each monkey, one sequence was drawn from the "same" grammar as it had been trained on, and the other sequence was drawn from the "different" grammar. The critical test was whether their response to the "different" sequence was different to their response to the "same" sequence (this would then allow the conclusion, as reported in the paper, that the monkeys were able to discriminate between the two underlying grammars). On investigation of the original videotapes, it was found that the monkeys had only been tested on sequences from the "different" grammar – that is, the different underlying grammatical patterns to those they had been trained on. There was no evidence they had been tested on sequences from the "same" grammar (that is, with the same underlying grammatical patterns). […]

Given that there is no evidence that the data, as reported, were in fact collected (it is not plausible to suppose, for example, that each of the two test trials were recorded onto different videotapes, or that somehow all the videotapes from the same condition were lost or mislaid), and given that the reported data were subjected to statistical analyses to show how they supported the paper's conclusions, I am forced to conclude that there was most likely an intention here, using data that appear to have been fabricated, to deceive the field into believing something for which there was in fact no evidence at all. This is, to my mind, the worst form of academic misconduct. However, this is just conjecture; I note that the investigation found no explanation for the discrepancy between what was found on the videotapes and what was reported in the paper. Perhaps, therefore, the data were not fabricated, and there is some hitherto undiscovered or undisclosed explanation. But I do assume that if the investigation had uncovered a more plausible alternative explanation (and I know that the investigation was rigorous to the extreme), it would not have found Hauser guilty of scientific misconduct.

He adds:

As a further bit of background, it’s probably worth knowing that according to the various definitions of misconduct, simply losing your data does not constitute misconduct. Losing your data just constitutes stupidity.

The Globe article and Altmann's blog post have been quickly picked up elsewhere, e.g. Greg Miller, "Journal Editor Says He Believes Retracted Hauser Paper Contains Fabricated Data", Science 8/27/2010:

Evidence of bad behavior by Harvard University cognitive scientist Marc Hauser continues to mount. Today Gerry Altmann, the editor of the journal Cognition, posted a statement on his blog saying that his review of information provided to him by Harvard has convinced him that fabrication is the most plausible explanation for data in a 2002 Cognition paper. The journal had already planned to retract the paper.

And Heidi Ledford, "Cognition editor says Hauser may have fabricated data", Nature 8/27/2010:

The Boston Globe today reports that Gerry Altmann, editor-in-chief of the journal, says that he has seen some of the findings of Harvard University’s internal misconduct investigation of its famed psychologist. According to that investigation, there was no record of some of the data reported in the paper as a graph.

“The graph is effectively a fiction,” Altmann told the Globe. “If it’s the case the data have in fact been fabricated, which is what I as the editor infer, that is as serious as it gets."

FYI, the article in question was (I believe) Hauser, Weiss and Marcus, "Rule learning by cotton-top tamarins", Cognition 86 2002. And the graph that is "effectively a fiction" is this one:

The statistical analysis of apparently non-existent data would be this:

According to the information provided to Altmann, then, the videos and other materials in Hauser's lab covered only the Different trials, that is, cases where the animals were probed with a "grammatical" pattern different from the one that they had habituated to, either ABB or AAB. Records of the Same trials were systematically missing.
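To make the logic of the missing records concrete, here is a minimal sketch, assuming a hypothetical per-monkey record with one response score for the Same test trial and one for the Different trial (the class name, field names and scoring are illustrative, not the lab's actual coding scheme). The critical same-versus-different contrast is computed within each animal, so it cannot be computed at all for an animal with no Same trial on record.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class TrialRecord:
    """Hypothetical per-monkey record of the two test trials.

    Scores stand for some response measure (e.g. orienting toward the speaker);
    None means no trial of that type appears in the record.
    """
    subject: str
    same: Optional[float]       # string from the grammar the animal was habituated to
    different: Optional[float]  # string from the other grammar

def within_subject_contrasts(records: list[TrialRecord]) -> list[float]:
    """Return (different - same) for each animal.

    Raises ValueError when an animal lacks either trial, since the
    within-subject comparison is undefined without both measurements.
    """
    contrasts = []
    for r in records:
        if r.same is None or r.different is None:
            raise ValueError(f"{r.subject}: no record of both trial types")
        contrasts.append(r.different - r.same)
    return contrasts
```

If every `same` field is `None`, which corresponds to what Altmann describes for the videotapes, the function raises instead of returning a contrast: there is nothing from which a same-versus-different statistic could be computed.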

There's an interesting connection to the Science paper that we discussed at length here in 2004 (W. Tecumseh Fitch and Marc D. Hauser, "Computational Constraints on Syntactic Processing in a Nonhuman Primate", Science 303(5656):377-380, 16 January 2004). In that paper, the authors write:

Each grammar created structures out of two classes of sounds, A and B, each of which was represented by eight different CV syllables. The A and B classes were perceptually clearly distinguishable to both monkeys and humans: different syllables were spoken by a female (A) and a male (B) and were differentiated by voice pitch (> 1 octave difference), phonetic identity, average formant frequencies, and various other aspects of the voice source. For any given string, the particular syllable from each class was chosen at random.

At the time, I noted that taking the A and B classes from two very different voices (more than an octave apart in mean pitch, among other things) meant that there was essentially no meaning or value in the simultaneously-active restriction of A and B to two different classes of 8 syllables each. Similarly, the fact that the test materials involved syllable sequences not used in the familiarization trials was also meaningless, given that the animals needed only to attend to the (highly salient) difference in pitch patterns.
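A toy illustration of this point, assuming the 2004 design contrasted strings of the form (AB)^n with strings of the form A^nB^n for small n (the sketch uses 2 and 3), with every A spoken in the high voice and every B in the low one. The classifier never looks at which syllable was spoken; it counts only high/low switches in pitch, and that alone separates the two string types. The syllable labels A0 to B7 are placeholders, not the actual stimuli, and this is not a model of tamarin perception.

```python
import random

# Placeholder syllables: eight per class, as in the 2004 design. In this sketch
# every A token is tagged "H" (higher-pitched voice) and every B token "L".
A_CLASS = [f"A{i}" for i in range(8)]
B_CLASS = [f"B{i}" for i in range(8)]

def make_string(pattern: str, rng: random.Random) -> list[tuple[str, str]]:
    """Instantiate a class pattern such as 'ABAB' or 'AABB' with random
    syllables, pairing each syllable with its pitch level."""
    out = []
    for cls in pattern:
        if cls == "A":
            out.append((rng.choice(A_CLASS), "H"))
        else:
            out.append((rng.choice(B_CLASS), "L"))
    return out

def pitch_switches(tokens: list[tuple[str, str]]) -> int:
    """Count adjacent high/low changes; syllable identity is ignored."""
    levels = [pitch for _syllable, pitch in tokens]
    return sum(1 for x, y in zip(levels, levels[1:]) if x != y)

def classify_by_pitch(tokens: list[tuple[str, str]]) -> str:
    """A^nB^n has exactly one switch; (AB)^n with n >= 2 has 2n - 1 switches."""
    return "A^nB^n" if pitch_switches(tokens) == 1 else "(AB)^n"

rng = random.Random(0)
for n in (2, 3):
    fsg = make_string("AB" * n, rng)
    psg = make_string("A" * n + "B" * n, rng)
    print(n, classify_by_pitch(fsg), classify_by_pitch(psg))
# prints "2 (AB)^n A^nB^n", then "3 (AB)^n A^nB^n"
```

Whatever syllables the random generator picks, the output is the same, because the decision depends only on the pitch contour.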

But in the 2002 Cognition paper, the authors write:

We used the same material that Marcus and colleagues presented to 7-month-old infants in their third experiment. Specifically, subjects were habituated to either a sample of tokens matching the AAB pattern or the ABB pattern. These tokens consisted of CV syllables and were created with a speech synthesizer available at www.bell-labs.com/project/tts/voices-java.html. The 16 strings (“sentences” in Marcus et al.) available in the ABB corpus were: “ga ti ti”, “ga na na”, “ga gi gi”, “li na na”, “li ti ti”, “li gi gi”, “li la la”, “ni gi gi”, “ni ti ti”, “ni na na”, “ni la la”, “ta la la”, “ta ti ti”, “ta na na”, and “ta gi gi”; the AAB sentences were made out of the same CV syllables or “words”.

In other words, the A and B classes in the 2002 paper differed only in the patterns of syllables used, and not in a gross overall difference in pitch and vocal identity.
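By contrast, with the 2002 materials nothing about an individual syllable marks it as an A or a B; the pattern is carried entirely by which positions repeat. Below is a minimal sketch of a classifier that uses only identity relations, checked on four of the ABB strings quoted above. The AAB strings are hypothetical counterparts built from the same syllables, since the quotation does not list them.

```python
def abstract_pattern(sentence: str) -> str:
    """Label a three-syllable string purely by which positions repeat.

    'AAB': first two syllables identical, third different.
    'ABB': first syllable different, last two identical.
    Nothing about any individual syllable (pitch, voice, phonetic content) is used.
    """
    syllables = sentence.split()
    if len(syllables) != 3:
        raise ValueError(f"expected three syllables, got {sentence!r}")
    x, y, z = syllables
    if x == y and y != z:
        return "AAB"
    if x != y and y == z:
        return "ABB"
    return "other"

# Four of the ABB strings quoted from the 2002 paper.
ABB_STRINGS = ["ga ti ti", "li na na", "ni gi gi", "ta la la"]
# Hypothetical AAB strings built from the same syllables (not from the paper).
AAB_STRINGS = ["ga ga ti", "li li na", "ni ni gi", "ta ta la"]

for s in ABB_STRINGS + AAB_STRINGS:
    print(s, "->", abstract_pattern(s))
```

Both lists draw on exactly the same eight syllables, so the only thing distinguishing them is the arrangement.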

Why did the 2004 experiment add the strange (and linguistically unnatural) dimension of assigning different "grammatical" categories to different voices? Presumably because otherwise the task, though easy enough for human infants, is very hard for monkeys. Maybe internal to Hauser's lab, it was understood that it was not just hard, but essentially impossible.

Presumably there's also a connection to the lab-internal dispute discussed here:

… the experiment in question was coded by Mr. Hauser and a research assistant in his laboratory. A second research assistant was asked by Mr. Hauser to analyze the results. When the second research assistant analyzed the first research assistant's codes, he found that the monkeys didn't seem to notice the change in pattern. In fact, they looked at the speaker more often when the pattern was the same. In other words, the experiment was a bust.

But Mr. Hauser's coding showed something else entirely: He found that the monkeys did notice the change in pattern—and, according to his numbers, the results were statistically significant. If his coding was right, the experiment was a big success.

The quoted article in the Chronicle of Higher Education notes that "The research that was the catalyst for the inquiry ended up being tabled, but only after additional problems were found with the data."

[Update — See also Carolyn Johnson, "Fabrication plausible, journal editor believes", Boston Globe 8/28/2010; and Sofia Groopman and Naveen Srivatsa, "Despite Scandal, Hauser To Teach at Harvard Extension School", Harvard Crimson 8/27/2010; and Eric Felten, "Morality Check: When Fad Science is Bad Science", WSJ 7/27/2010; and Greg Miller, "Hausergate: Scientific Misconduct and What We Know We Don't Know", Science 7/25/2010.]

Absotively said,

August 27, 2010 @ 7:34 pm

The quote from Altmann says that it's the "same" trials that are systematically missing. (Your summary says it's the "different" trials.)

[(myl) Oops — fixed now. ]

Dw said,

August 27, 2010 @ 7:49 pm

"different to…"

Is Altmann British?

Henning Makholm said,

August 27, 2010 @ 8:30 pm

I'm not sure that it matters that the distinction is "linguistically unnatural". If you're out to discover something about learning of "grammatical" patterns, it seems a reasonable strategy to choose as the primitive elements of the patterns something whose differences can punch through the "phonetic" analysis in the subject's brains.

Of course, once it was understood that monkeys are bad at distinguishing CV syllables, it would have been prudent to run a series of threshold experiments to discover/develop a "vocabulary" of single sounds that the monkeys can be taught to distinguish at all.

And for the purposes of learning "grammar" it seems to be quite superfluous to restrict oneself to sounds that human voices can make. If monkeys could be shown to learn the distinction between same-same-different and different-same-same when the sounds are drawn from the set {bell, woodblock, snare drum, tympani, marimba, trumpet, flute}, wouldn't that be just as strong (or weak) evidence for the underlying thesis as doing so with syllables?

[(myl) Absolutely, from a logical point of view. From a public relations perspective, not so much.]

Rubrick said,

August 27, 2010 @ 8:39 pm

I agree with Henning. They needed more cowbell.

Spell Me Jeff said,

August 28, 2010 @ 12:22 am

This is not the correct forum for changing the world, but by gosh something has to be done. A negative result is as scientifically productive as a positive result. Public relations be damned.

Hi horse.

maidhc said,

August 28, 2010 @ 4:30 am

There's a lot of information here and I'm not sure that I'm analyzing it correctly.

Did Hauser stake a claim that monkeys could distinguish between two artificial languages based on two different Context-Free Grammars, or something similar? In other words, that primates have Chomskyan brains?

[(myl) No. The claim in the 2004 Science paper was that the monkeys could "learn" a finite-state grammar but not a context-free one. See the posts linked here for the details. Short version: this was one of the most spectacularly over-interpreted results ever to appear in a major scientific journal. The 2002 Cognition paper only made the claim that the monkeys could learn to distinguish the abstract patterns AAB and ABB, where the A's and B's were instantiated by a variety of different syllables.]

I get the part that it's hard to devise experiments for intelligent animals like monkeys, in which only one variable is measured, because the subjects alter the experimental conditions.

[(myl) It's hard to devise experiments that can be reliably interpreted, period. But scientists have learned to do it pretty well, as long as they actually follow the protocols that they claim to, and don't make up results.]
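The finite-state versus context-free contrast in the reply above can be sketched in a few lines, assuming the two string sets are (AB)^n and A^nB^n over the class labels A and B. (AB)^n is matched by an ordinary regular expression, while A^nB^n for unbounded n requires counting, which no regular expression can do. The last recognizer illustrates a general fact worth keeping in mind when interpreting such experiments: if n is capped at a few small values, A^nB^n is a finite set, and every finite set is also finite-state.

```python
import re

# (AB)^n, n >= 1: a regular language, so a regular expression suffices.
AB_N = re.compile(r"(?:AB)+")

# A^nB^n restricted to n in {2, 3}: a finite set, and every finite set is regular.
AN_BN_SMALL = re.compile(r"AABB|AAABBB")

def is_ab_n(s: str) -> bool:
    """True for AB, ABAB, ABABAB, ..."""
    return AB_N.fullmatch(s) is not None

def is_an_bn(s: str) -> bool:
    """True for AB, AABB, AAABBB, ... for any n >= 1.

    Checking that the number of A's equals the number of B's requires
    unbounded counting, which is beyond any finite-state recognizer.
    """
    n = len(s) // 2
    return n >= 1 and s == "A" * n + "B" * n

def is_an_bn_small(s: str) -> bool:
    """A^nB^n for n in {2, 3} only, recognized by a plain regular expression."""
    return AN_BN_SMALL.fullmatch(s) is not None
```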

Samuel Baldwin said,

August 28, 2010 @ 6:31 am

Not quite as important, but this struck me as quite odd and unnatural:

"Perhaps, therefore, the data were not fabricated,"

However, a quick search of the Corpus of Contemporary American English says I'm in the minority here, with 3002 results for "data were" and only 625 for "data was". I've always heard data treated as uncountable rather than plural. I'm from the Northeast USA (Northeast MA, to be more specific); is this a regional thing?

Thor Lawrence said,

August 28, 2010 @ 7:28 am

"Data" is the plural of "datum", hence, for me, operating in international-cum-UN English from a British base, the automatic use of the plural with "data".

Thor

Tom Saylor said,

August 28, 2010 @ 8:16 am

@ Samuel Baldwin:

For the reason Thor Lawrence suggests, "data are" is something of a shibboleth among educated Americans, who would no more say "data is" than "criteria is" or "phenomena is." I'd never before seen the suggestion that "data" might be interpreted as a mass noun. Aren't all mass nouns singular in form? The paradigmatic ones (e.g., "water," "air") certainly are.

[(myl) But even quantified plural nouns can take singular verb agreement when referring to amounts in a mass-noun kind of way: "Ten hours is too long"; "Two thousand votes isn't very much"; etc. Also, the form of data is not obviously plural except for those who know (and care about) the Latin etymology.]

Anyway, what struck me here is that in the post's opening quotation both journalist (Johnson) and scientist (Altmann) vacillate between singular and plural uses of the word.

michael ramscar said,

August 28, 2010 @ 12:44 pm

@ data / datum

During the course of a conversation about corpus analysis a while back, a grad student of mine launched into a sentence in which he would eventually have to use a plural form for corpus. As the sentence unfolded, the inevitability of this became clear to both of us. Wide eyed (and having stammered slowly through the last few words prior to the denouement), he finally blurted out "corpori."

I've noticed that I (and many of my colleagues) tend to use both corpora and corpuses as plurals for corpus, depending on the context, and that I too vacillate between the singular and plural uses of the word data. Since there seems to be a fair bit of systematicity to this behavior, I've tried to remain open to the idea that it may be evidence that grammar is not as context free (or categorical) as many theories might maintain. Other people's (and, for that matter, peoples') mileage may vary.

LISP machine said,

August 28, 2010 @ 2:09 pm

Dr. Hauser had sound theoretical reasons to believe that measurements, in an ideal, "noise-free" experiment, would turn out the way that bar graph shows. So it is not so much wild fabrication as it is a theory-based correction of experimental non-idealities.

LISP machine said,

August 28, 2010 @ 2:23 pm

What's more, a paper merely reporting banal experimental fact will be less thought-provoking and fruitful for the field than an innovative work that goes beyond the trivial idiosyncrasies of experimental data.

So the real issue is not whether Dr. Hauser stuck to the facts, but whether his work was thought-provoking and added excitement to the field.

Henning Makholm said,

August 28, 2010 @ 3:19 pm

@Tom Saylor: "Aren't all mass nouns singular in form?"

I'm not sure precisely what you demand for something to be a "mass noun", but how about mathematics, physics, and so forth?

John Cowan said,

August 28, 2010 @ 6:55 pm

"Data is" is normal to this educated American, and to most people in my field.

groki said,

August 28, 2010 @ 7:11 pm

LISP machine: theory-based correction of experimental non-idealities.

take that, you reality-based losers! :) or as Paul Simon notes: "A man sees what he wants to see, And disregards the rest."

truly, thanks for that phrase, LISP machine: very handy in these wish-think times.

Mike Maxwell said,

August 28, 2010 @ 8:11 pm

Hauser was one of the authors of Hauser, Chomsky, and Fitch 2002 "The Faculty of Language: What is it, who has it, and how did it evolve" Science 298:1569-1579. (http://www.chomsky.info/articles/20021122.pdf) The Cotton-top Tamarin article is one of four cited in table 1 which address the problem of "Spontaneous and training methods designed to uncover constraints on rule learning." Two of the other three citations are also to work by Hauser. In the overall context of Hauser, Chomsky, and Fitch, I'm not sure how significant this is; the Cotton-top article is however the one that's cited in the text of the article as "demonstrating that the capacity to discover abstract rules at a local level is not unique to humans, and almost certainly did not evolve specifically for language" (1577-8). (The Fitch and Hauser article that Mark has critiqued is the subject of the following paragraph.)

[(myl) See "JP versus FHC+CHF versus PJ versus HCF", 8/25/2005, for the box score.]

Kyle Gorman said,

August 29, 2010 @ 1:34 pm

Is there any appropriate statistic that could evaluate the likelihood of fabrication given data of this structure (within subject, binary response, I think?). One that would seem to apply is the "too-few-zeros" problem, discussed in Paul Bakan, "Response-Tendencies in Attempts to Generate Random Binary Series", American Journal of Psychology 73(1):127-131, and in some subsequent work (see, for instance, the discussions of the accusations against Research 2000 that have been in the news).
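One standard tool in this area, offered here only as a sketch, is the Wald-Wolfowitz runs test: it asks whether a binary sequence has more or fewer runs than chance predicts, and human attempts at "random" sequences are known to err toward too much alternation. The sequence format (one 0/1 code per trial) is an assumption for illustration; nothing here is tied to the structure of Hauser's actual data, and a significant result would show only non-randomness, not fabrication. The normal approximation used below is also unreliable for short sequences.

```python
import math

def runs_test(seq: list[int]) -> tuple[int, float, float]:
    """Wald-Wolfowitz runs test for a 0/1 sequence, normal approximation.

    Returns (number of runs, z score, two-sided p value). A large positive z
    means more runs (more alternation) than chance predicts; a large negative
    z means fewer, longer runs.
    """
    if any(x not in (0, 1) for x in seq):
        raise ValueError("sequence must contain only 0 and 1")
    n1 = sum(seq)          # number of 1s
    n2 = len(seq) - n1     # number of 0s
    if n1 == 0 or n2 == 0:
        raise ValueError("need at least one 0 and one 1")
    n = n1 + n2
    # A run is a maximal block of identical symbols: count the boundaries, plus one.
    runs = 1 + sum(1 for a, b in zip(seq, seq[1:]) if a != b)
    mean = 2 * n1 * n2 / n + 1
    var = 2 * n1 * n2 * (2 * n1 * n2 - n) / (n ** 2 * (n - 1))
    if var <= 0:
        raise ValueError("sequence too short for the normal approximation")
    z = (runs - mean) / math.sqrt(var)
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided tail of the standard normal
    return runs, z, p
```

A strictly alternating sequence such as `[0, 1] * 10` gives 20 runs against an expected 11 and a z of roughly +4.1; ten 0s followed by ten 1s gives the mirror-image result.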

Richard Sproat said,

August 29, 2010 @ 3:58 pm

Interesting that in some minuscule way I (and my fellow ex-Bell Labs TTS researchers) am a contributor to this case of possible fraud.

http://www.bell-labs.com/project/tts/voices-java.html

mass-count fan said,

August 30, 2010 @ 12:20 am

Lovely to be distracted by "data is/ data are" in the midst of this misery.

1) Data is definitely a mass noun and thus takes singular verb agreement

a) It can't take plural count determiners:

*several data/two data/fewer data/many data

b) Or singular count determiners

*a data/one data/each data

c) But it seems pretty happy with mass determiners

(less data)

2) But if you are going to use it that way be prepared to defend yourself from reviewers and editors who think they know better….

richard howland-bolton said,

August 30, 2010 @ 8:17 am

The OED gives Datum as the headword but its quotations have 'data' as a mass (or at least heap) in 1646 almost a hundred years before 'datum' in 1737.

And when I was young and studying slash working in the chemical field in England (mainly in Herts and London) data always had a singular verb.

outeast said,

August 30, 2010 @ 8:36 am

@ mass-count fan

I generally use 'data' as a mass noun myself, but your claims are not well supported by the evidence. And your 'singular count determiners' objection is a bit irrelevant, since the correlating singular is 'datum'.

The Shorter Oxford notes that 'Historically and in specialized scientific fields "data" is treated as a pl. in English, taking a pl. verb. In modern non-scientific use, however, it is often treated as a mass noun, like e.g. "information", and takes a sing. verb.'

That pretty much fits my experience and seems to allow pretty well for the clear fact that there are many educated and literate English speakers on either side of the usage fence.

As to 'be prepared to defend yourself from reviewers and editors who think they know better'… Obviously there are peevologists everywhere. Journals etc. also have house styles, however, and if you're 'defending' one use or another in the face of a house style then it's you who gets to wear the peevologist's hat.

outeast said,

August 30, 2010 @ 8:39 am

@ richard

studying slash working in the chemical field

I'd actually entered 'slash working' in teh google before I realized what you meant… :)

richard howland-bolton said,

August 30, 2010 @ 10:36 am

@outeast: Sorry 'bout that, I was foolishly trying to be clever, referring to http://languagelog.ldc.upenn.edu/nll/?p=2584

Jesse Tseng said,

August 30, 2010 @ 4:10 pm

@mass-count fan: "Data is definitely a mass noun" — yes; "… and thus takes singular verb agreement" — no. You might like to know that there are plural mass nouns, like entrails, victuals, oats, etc., and after years of training, this is where I put data. So these data sounds fine to me, but not *these two data. And on 37 occasions between 2003 and 2009, I wrote the phrase many data and immediately changed it to a large part of the data or many examples. However, I seem to have misplaced the videotapes of me doing this.

maidhc said,

September 2, 2010 @ 4:51 am

Thanks for the clarification.

Jesse Hochstadt said,

September 3, 2010 @ 7:11 pm

Since no one has answered the question: Yes, Gerry Altmann is British.

Some Links #16: Why I want to Falcon Punch (some) BBC Science Writers | Replicated Typo said,

November 16, 2011 @ 3:28 am

[…] Altmann: Hauser apparently fabricated data. In keeping with the shoddy science theme… I've been keeping a close eye on the Hauser story, ever since it first came to my attention early this month. I've refrained from saying too much on the subject because I didn't really know what was going on, other than a few allegations being bandied about concerning said individual. Mark Liberman's post has, for me at least, clarified my position somewhat in that it appears Hauser fabricated data; it wasn't just some accidental coding mistake as previously suggested. So it's looking pretty bad, even though we still know very little about the ins and outs of the situation. Another good recent article on the topic: Hausergate: Scientific Misconduct and What We Know We Don't Know. […]