More on speech overlaps in meetings


This post follows up on Mark Dingemanse's guest post, "Some constructive-critical notes on the informal overlap study", which in turn comments on Kieran Snyder's guest post, "Men interrupt more than women".

As part of a project on the application of speech and language technology to meetings, almost 15 years ago, researchers at the International Computer Science Institute (ICSI) recorded, transcribed and analyzed a large number of their regular technical meetings. The results were published by the Linguistic Data Consortium as the ICSI Meeting speech and transcripts. As the publication's documentation explains:

75 meetings collected at the International Computer Science Institute in Berkeley during the years 2000-2002. The meetings included are "natural" meetings in the sense that they would have occurred anyway: they are generally regular weekly meetings of various ICSI working teams, including the team working on the ICSI Meeting Project. In recording meetings of this type, we hoped to capture meeting dynamics and speaking styles that are as natural as possible given that speakers are wearing close-talking microphones and are fully cognizant of the recording process. The speech files range in length from 17 to 103 minutes, but generally run just under an hour each.

There are a total of 53 unique speakers in the corpus. Meetings involved anywhere from three to 10 participants, averaging six. The corpus contains a significant proportion of non-native English speakers, varying in fluency from nearly-native to challenging-to-transcribe.

There's an extensive set of "dialogue act" annotations of this material, available from ICSI, and described in Elizabeth Shriberg et al., "The ICSI Meeting Recorder Dialog Act (MRDA) Corpus", HLT 2004.

I don't have a lot of time this morning. But, spurred by Mark Dingemanse's guest post, I thought I'd take a quick look at the gendered patterns of overlap in the ICSI Meeting Corpus.

The start and end times of each participant's contributions were registered in the transcription process, e.g.

<Segment StartTime="669.140" EndTime="670.895" Participant="fe016">

So <Emphasis> another </Emphasis> idea I w- t- had

</Segment>

<Segment StartTime="671.053" EndTime="673.050" Participant="fe016">

just now actually for the <Emphasis> demo </Emphasis> was

</Segment>

<Segment StartTime="673.700" EndTime="677.511" Participant="fe016">

whether it might be of interest to sh- to show some of the prosody uh <VocalSound Description="mouth"/>

</Segment>

<Segment StartTime="677.644" EndTime="679.466" Participant="fe016">

work that <Emphasis> Don's </Emphasis> been doing.

</Segment>

<Segment StartTime="679.476" EndTime="680.182" Participant="me013">

Mm-hmm.

</Segment>

<Segment StartTime="680.520" EndTime="688.950" Participant="fe016">

Um actually show some of the <Emphasis> features </Emphasis> and then show for instance a task like finding sentence boundaries or finding turn boundaries.

</Segment>

<Segment StartTime="689.290" EndTime="689.707" Participant="fe016">

Um,

</Segment>

<Segment StartTime="690.110" EndTime="696.221" Participant="fe016">

you know, you can show that <Emphasis> graphically, </Emphasis> sort of what the features are doing. It, you know, it doesn't work <Emphasis> great </Emphasis> but it's definitely giving us <Emphasis> something. </Emphasis>

</Segment>

<Segment StartTime="696.660" EndTime="698.160" Participant="me013">

Well I think at –

</Segment>

<Segment StartTime="696.689" EndTime="698.692" Participant="fe016">

I don't know if that would be of interest or not.

</Segment>

<Segment StartTime="698.160" EndTime="705.848" Participant="me013">

at the very least we're gonna want something <Emphasis> illustrative </Emphasis> with that cuz I'm gonna want to <Emphasis> talk </Emphasis> about it and so i- if there's something that shows it <Emphasis> graphically </Emphasis>

</Segment>

<Segment StartTime="702.830" EndTime="703.643" Participant="fe016">

Yeah.

</Segment>

<Segment StartTime="706.390" EndTime="708.699" Participant="me013">

it's much better than me just having a bullet point

</Segment>

<Segment StartTime="709.050" EndTime="711.262" Participant="me013">

pointing at something I don't <Emphasis> know </Emphasis> much about, so.

</Segment>

<Segment StartTime="709.650" EndTime="713.373" Participant="fe016">

I mean, you're looking at this now – Are you looking at Waves or Matlab?

</Segment>

As a result, it's easy to write a script to detect overlaps of various types and amounts. So for this morning's Breakfast Experiment™, that's what I did.
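For readers who want to try this at home, here is a minimal sketch of the first step: pulling the timing attributes out of `<Segment>` tags like those in the excerpt above and tallying segments and seconds by gender. It is not the script used for the numbers in this post. The function names are made up for illustration, the regular expression assumes the attribute order shown in the excerpt, and reading gender from the first letter of the participant ID (`fe016`, `me013`) is an assumption.

```python
import re
from collections import defaultdict

# Assumes the attribute order shown in the excerpt: StartTime, EndTime, Participant.
SEGMENT_RE = re.compile(
    r'<Segment\s+StartTime="([\d.]+)"\s+EndTime="([\d.]+)"\s+Participant="(\w+)"'
)

def parse_segments(text):
    """Return (start, end, participant) tuples for every Segment tag in text."""
    return [(float(s), float(e), p) for s, e, p in SEGMENT_RE.findall(text)]

def gender_of(participant):
    # Assumption: the first letter of the ID ("f" or "m") encodes gender.
    return {"f": "female", "m": "male"}[participant[0]]

def tally(segments):
    """Count segments and sum their durations (in seconds) per gender."""
    counts = defaultdict(int)
    seconds = defaultdict(float)
    for start, end, who in segments:
        gender = gender_of(who)
        counts[gender] += 1
        seconds[gender] += end - start
    return dict(counts), dict(seconds)

# Three opening tags copied from the excerpt above.
sample = '''
<Segment StartTime="677.644" EndTime="679.466" Participant="fe016">
<Segment StartTime="679.476" EndTime="680.182" Participant="me013">
<Segment StartTime="680.520" EndTime="688.950" Participant="fe016">
'''

counts, seconds = tally(parse_segments(sample))
print(counts)
print({g: round(t, 3) for g, t in seconds.items()})
```

Running the same tally over every transcript file would give corpus-wide segment counts and speaking times, provided the real files follow the pattern of the excerpt.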

Contrary to what the documentation says, I found 59 (rather than 53) different participants identified across the 75 meetings — 43 male and 16 female. 43/(43+16) = 73% male.

Counting conversational segments (as divided in the transcripts) I found 101,739 segments by male speakers and 27,355 segments by female speakers — 101739/(101739+27355) = 79% of the segments were produced by male speakers.

Adding up the duration of the segments, I found 65,919.2 seconds in female-produced segments and 232,460 seconds in male-produced segments — 232460/(232460+65919.2) = 78% male speaking time.

This is quantitatively consistent with the males in these meetings being about 14% more talkative, on average, than the females were — (1.14*43)/(1.14*43 + 0.86*16) = 0.781. That's a substantially larger difference than I found in looking at a large collection of telephone conversations ("Gabby guys: The effect size", 9/23/2006), where "in conversations between the sexes, the men used about 6% more words on average than the women did".
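The arithmetic in the last few paragraphs can be checked in a few lines. This sketch only re-derives the shares from the counts quoted above; the variable and function names are illustrative. The per-capita ratio on the last line is a different, simpler way of comparing the two groups (mean seconds per male speaker over mean seconds per female speaker) and is not the same quantity as the 14% figure.

```python
# Counts quoted in the post.
speakers = {"male": 43, "female": 16}
segments = {"male": 101739, "female": 27355}
seconds = {"male": 232460.0, "female": 65919.2}

def male_share(d):
    """Fraction of the total attributable to male speakers."""
    return d["male"] / (d["male"] + d["female"])

print(f"speakers: {male_share(speakers):.1%} male")
print(f"segments: {male_share(segments):.1%} male")
print(f"seconds:  {male_share(seconds):.1%} male")

# The post's weighting: each male counts 1.14, each female 0.86.
weighted = (1.14 * speakers["male"]) / (
    1.14 * speakers["male"] + 0.86 * speakers["female"]
)
print(f"weighted share: {weighted:.3f}")

# Alternative measure: mean speaking time per male over mean per female.
per_capita_ratio = (seconds["male"] / speakers["male"]) / (
    seconds["female"] / speakers["female"]
)
print(f"per-capita ratio: {per_capita_ratio:.3f}")
```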

What about overlaps? If we count only overlaps where someone started talking while someone else was talking, and continued after any talk segments that started earlier had finished, there were 8,299 cases where the overlapper was female, and 25,904 cases where the overlapper was male. Thus 25904/(25904+8299) = 75.7% of the overlappers were male. In this sense of "overlap", a larger percentage of female-produced segments overlapped earlier speech (8299/27355 = 30.3%) than male-produced segments (25904/101739 = 25.5%).
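One way to turn that definition into code is sketched below. It is my reading of the definition, not necessarily the script behind the counts: a segment is an overlap if some other participant's segment that started earlier is still running when it starts, and if it ends after every such segment has ended. Crediting the latest-ending running segment as the overlappee is an assumption, and `find_overlaps` is a made-up name.

```python
def find_overlaps(segments):
    """Return (overlapper, overlappee) participant pairs.

    segments: iterable of (start, end, participant) tuples from one meeting.
    """
    ordered = sorted(segments)
    pairs = []
    for i, (start, end, who) in enumerate(ordered):
        # Other speakers' segments that began earlier and are still running.
        running = [
            (s, e, p) for s, e, p in ordered[:i]
            if p != who and s < start < e
        ]
        # Count it only if this segment outlasts all of them.
        if running and end > max(e for _, e, _ in running):
            # Assumption: credit the running segment that ends last.
            overlappee = max(running, key=lambda seg: seg[1])[2]
            pairs.append((who, overlappee))
    return pairs

# Timings copied from the end of the transcript excerpt above.
excerpt = [
    (696.660, 698.160, "me013"),
    (696.689, 698.692, "fe016"),
    (698.160, 705.848, "me013"),
    (702.830, 703.643, "fe016"),
    (706.390, 708.699, "me013"),
    (709.050, 711.262, "me013"),
    (709.650, 713.373, "fe016"),
]

for overlapper, overlappee in find_overlaps(excerpt):
    print(overlapper, "overlapped", overlappee)
```

On these seven segments the sketch reports three overlaps. The "Yeah." at 702.830 is not counted, because it ends before the segment it overlaps does. The scan is quadratic in the number of segments, which is tolerable one meeting at a time.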

If we ask about who got overlapped instead of who did the overlapping, we find that there were 8316 cases where a female segment was overlapped, vs. 25888 cases where a male segment was overlapped. Thus 25888/(25888+8316) = 75.7% of the overlappees were male. Again, a larger percentage of female-produced segments were overlapped (8316/27355 = 30.4%) than male-produced segments (25888/101739 = 25.4%).

All of this is approximately consistent with what we found in Yuan et al. 2007, where we looked at overlaps in telephone conversations: "In general, females make more speech overlaps of both types than males; and both males and females make more overlaps when talking to females than talking to males. "

This is the crudest sort of beginning — to make real sense of what happened in these meetings, we'd need to look at aspects of the conversational dynamics that are not recorded in the transcripts, such as the roles of the speakers involved (e.g. meeting convener or principal speaker vs. other roles), differences among interrupting to support a point, interrupting to dispute a point, interrupting to change the subject, etc. We might also look at more elaborate models of overlap patterns, such as those described in Kornel Laskowski et al., "On the Dynamics of Overlap in Multi-Party Conversation", InterSpeech 2012.

There are also other meeting datasets to look at:

The ISL Meeting speech and transcripts (part 1): a first subset of the ISL Meeting Corpus (112 meetings). It contains 18 meetings collected at the Interactive Systems Laboratories at Carnegie Mellon University in Pittsburgh, PA during the years 2000-2001. The recorded meetings were either natural meetings where participants needed to meet in the real world, or artificial meetings, which were designed explicitly for the purposes of data collection but still had real topics and tasks. The duration of the meetings in this corpus ranges from eight to 64 minutes and averages at 34 minutes.

There are a total of 31 unique speakers in the corpus. Meetings involved anywhere from three to nine participants, averaging at five. The corpus contains a significant proportion of non-native English speakers, varying in fluency.

The AMI corpus, available from Edinburgh: 100 hours of meeting recordings. The recordings use a range of signals synchronized to a common timeline. These include close-talking and far-field microphones, individual and room-view video cameras, and output from a slide projector and an electronic whiteboard. During the meetings, the participants also have unsynchronized pens available to them that record what is written. The meetings were recorded in English using three different rooms with different acoustic properties, and include mostly non-native speakers. […]

[T]he AMI Meeting Corpus has a somewhat unusual design. Except for corpora set up to inform a spoken dialogue systems application by showing what the system needs to produce, dialogue corpus designers usually aim to capture completely natural, uncontrolled conversations. Around one-third of our data is like this; it consists of meetings from various groups that would have happened whether they were being recorded or not. However, the rest has been collected by having the participants play different roles in a fictitious design team that takes a new project from kick-off to completion over the course of a day. The day starts with training for the participants about what is involved in the roles they have been assigned (industrial designer, interface designer, marketing, or project manager) and then contains four meetings, plus individual work to prepare for them and to report on what happened. All of their work is embedded in a very mundane work environment that includes web pages, email, text processing, and slide presentations.

And there are probably others that I don't know about.

Because these recordings and transcripts have been published (and a fortiori because they were created in the first place), it's possible to contemplate the kind of analysis that Mark Dingemanse recommends. Short of undertaking the onerous task of classifying discourse dynamics by hand throughout the transcripts, we could classify a random sample. Or we could try to set up a plausible crowdsourcing method. But again, recording and publication make all things possible.

I agree with Mark that observation of behavior is simultaneously a study of the observer's perceptions and the experimental subjects' behaviors. But I would point out to him that merely shifting to observations of recordings rather than observations of unrecorded interactions just shifts this problem to a different domain. The shift can help, if the classificatory categories are well defined, and if the classification is done blind by annotators who are not aware of the experimental hypothesis, and if inter-annotator agreement is evaluated quantitatively, and if the recordings and annotations are published so that others can validate and extend them. But these conditions are all-too-rarely met — for example, in the extensive sociolinguistic literature on t/d deletion, the classificatory categories are not explicitly defined, inter-annotator agreement is not calculated, the coding is not done blind, and the datasets are not published.

There's no bigger target for the Open Data movement than this one, in my opinion.

Update — as requested by Jon Lennox in a comment below, here's the full 2×2 table of male/female overlap counts:

| Overlappee | Overlapper: Male | Overlapper: Female |
|---|---|---|
| Male | 19913 | 5973 |
| Female | 5990 | 2326 |

Thus male speakers' overlappings were 19913/(19913+5990) = 76.9% on male overlappees, and 5990/(19913+5990) = 23.1% on female overlappees. Since female speakers took up 65919.2/(232460+65919.2) = 22.1% of the speaking time overall, this suggests that male speakers overlapped female speakers at a slightly higher rate than if they had intervened at chance times.

Female speakers' overlappings were 5973/(5973+2326) = 72.0% on male overlappees, and 2326/(5973+2326) = 28.0% on female overlappees. Since female speakers took up 22.1% of the speaking time overall, this suggests that female speakers had a somewhat greater tendency to overlap other female speakers as opposed to male speakers.
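The comparison against chance can be written out as a short calculation. This is only a sketch of the arithmetic above, with illustrative names: for each overlapper gender, it computes the share of overlaps that landed on female speakers and sets it beside the female share of total speaking time, which serves here as a rough baseline for "intervening at chance times".

```python
# overlapper gender -> counts by overlappee gender (from the 2x2 table).
overlaps = {
    "male": {"male": 19913, "female": 5990},
    "female": {"male": 5973, "female": 2326},
}

# Speaking time in seconds, as reported earlier in the post.
seconds = {"male": 232460.0, "female": 65919.2}
female_time_share = seconds["female"] / sum(seconds.values())

def female_target_share(overlapper):
    """Share of this gender's overlaps whose overlappee was female."""
    row = overlaps[overlapper]
    return row["female"] / (row["male"] + row["female"])

for gender in ("male", "female"):
    print(
        f"{gender} overlappers: {female_target_share(gender):.1%} on female "
        f"overlappees (female share of speaking time: {female_time_share:.1%})"
    )
```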

Jon Lennox said,

July 16, 2014 @ 10:43 am

A typo: in two cases, you forgot to shift the decimal point when converting to a percentage: "0.79% of the segments", "0.78% male speaking time".

[(myl) Oops — fixed now.]

And a question: Can you break down the genders of overlapper/overlappee into all four combinations? Kieran's initial post indicated that women were willing to interrupt other women, but not to interrupt men, so it'd be interesting to see if this data set bears that out.

[(myl) I'll add such a table to the original post — the WordPress comment software doesn't allow html table code…]

Kieran Snyder said,

July 16, 2014 @ 2:04 pm

Thanks to both Marks for the thoughtful comments. As it happens, I agree with most of them. I totally agree that a proper study would have been recorded; as I will note in the follow up that I will post tomorrow on corporate seniority level as a variable, any observational study can only be directional and needs rigorous validation. I am also certain that, like any observer, I missed some things.

Still, as directional numbers go, they are pretty striking, and they do point to the need for additional study. Mark L. highlights some corpora that would be fruitful to explore. Another group here where I work has also taken the directional results and is preparing a more rigorous (recorded) followup across a broader set of participants, including cross-culturally. If I get results from that I will share them.

The results on seniority that I will share are also directionally strong – if anything, stronger than what I reported in the post from earlier this week. There also appears to be a significant interaction between seniority and gender specifically where interruption behavior is concerned. More tomorrow!

Michael Watts said,

July 16, 2014 @ 4:08 pm

Adding up the duration of the segments, I found 65,919.2 seconds in female-produced segments and 232,460 seconds in male-produced segments – 232460/(232460+65919.2) = 78% male speaking time.

This is quantitatively consistent with the males in these meetings being about 14% more talkative, on average, than the females were – (1.14*43)/(1.14*43 + 0.86*16) = 0.781.

OK, there were 43 males and 16 females, and 78% is the share of speaking time occupied by the males. If the theory is that every male occupies speaking time as if he were 1.14 females ("14% more talkative"?), shouldn't that equation look like (1.14*43)/(1.14*43 + 1*16) = 0.754? Why do we simultaneously inflate the males 14% and discount the females a different 14%? Each female occupies speaking time as if she were exactly one female, right?

If I'm understanding the idea right, I get (1.32*43)/(1.32*43+1*16) = 0.780, which would make the males 32% more "talkative"?

Michael Watts said,

July 16, 2014 @ 4:15 pm

I should note that in my model, a group of double the size will talk for twice the amount of time. That doesn't seem realistic for corporate meetings; if MYL has a different model in mind I'm happy to be corrected.

[(myl) Here's a simpler calculation that comes close to your number:

(232460/43)/(65919.2/16) ≈ 1.312

That is, the average male participant produces about 31% more seconds of speech than the average female participant.]

Michael Watts said,

July 16, 2014 @ 6:19 pm

Yes, that's the calculation I did to produce 32% [well, actually I used (0.78*16)/(0.22*43), but it's the same thing]. But how does "men produce 31% more seconds of speech than women" translate into "men are 14% more talkative than women"? What exactly is "talkativity" measuring?

Suburbanbanshee said,

July 16, 2014 @ 8:01 pm

Not to be rude, but discussions by computer people with computer people are not generally considered as representative of normal human interaction, much less of normal patterns of interaction between the sexes.

This is part of the point of being geeky.

[(myl) Although it may not have been obvious, Kieran Snyder's original experiment dealt with meetings at amazon.com, in what I believe is a fairly geeky environment, and implicitly raised the question of whether gender interactions in that context might have something to do with maintaining the relatively low numbers of women in such jobs. (See this recent Guardian article, or this one from the Boston Globe, for some relevant background.)

Possible interruption imbalances are not as repugnant as some of the things discussed in those articles, but the issue is still worth looking at.

As for conversational overlaps in interactions among other sorts of people, see Yuan, Liberman & Cieri 2007 for one approach.]

Suburbanbanshee said,

July 16, 2014 @ 8:07 pm

Actually, now that I think about it, there have been informal studies about how geeky sf fans are more comfortable interrupting in order to share ideas or corrections of fact, with some question about whether that was connected with the common practice of not constantly staring at people's eyes or faces during conversations, or indeed looking at everything in the room except the people one is talking with.

Suburbanbanshee said,

July 16, 2014 @ 8:26 pm

Thinking a bit more about it, the classic pattern of a fannish conversation is to have a group of excited people who think faster than they can talk. Interruptions are basically not disruptive, because the speakers' own thoughts are also jostling the thread of discourse; other people are acting as supplemental brain parts.

chris said,

July 16, 2014 @ 9:26 pm

How susceptible are these numbers to, say, a small number of unusually quiet women? Given the low number of women overall in the data, my instinct would be to suspect "quite susceptible" and to not put much weight on modest effects like 73% of the speakers doing 78% of the speaking (which is only slightly more impressive when you write it as 27% of the speakers doing 22% of the speaking).

[(myl) Indeed — looking at speaker-by-speaker data would be the next step, I think.]

Also, how do these differences compare to the differences in talkativeness or propensity to interrupt among different individuals within each gender? Are we looking at another one of those graphs that consists of two bell curves that are almost completely superimposed?

[(myl) It's going to be hard to get a very well-defined distribution with the small number of speakers involved, unlike in the case of the English telephone-conversation data, where there were nearly a thousand speakers of each sex in mixed-sex conversations.]

Kieran Snyder said,

July 16, 2014 @ 11:59 pm

I don't think the issue is the geekiness. Geeks can have structured conversations and unstructured conversations, same as anyone else. I do believe there's some effect from the formal meeting context, which usually has a formal agenda and someone who sees it as his/her job to lead the meeting. I suspect the interruption patterns are different from what you'd see in less formal settings, but not that different from what you'd see in other non-tech corporate settings with a preponderance of men in leadership roles.

Mark D. said,

July 17, 2014 @ 3:02 am

Interesting breakfast experiment, Mark! We know that overlaps are not randomly distributed with regard to total speaking time — their occurrence is likely better described with reference to opportunities to take the floor, which has more to do with turns at talk than with speaking time. Does total speaking time correlate well with number of turns in this corpus?

Thanks for your comments, Kieran. I think we see eye to eye on this one — apparent trends point to the need for further study. As I pointed out on the other thread, I think there are unfortunate asymmetries in the workplace that definitely may have an influence on social interactional patterns, and the function of your work has been to call attention to this. Hopefully there will be further study of this.

Mark Liberman said,

July 17, 2014 @ 4:05 am

Mark D.: Does total speaking time correlate well with number of turns in this corpus?

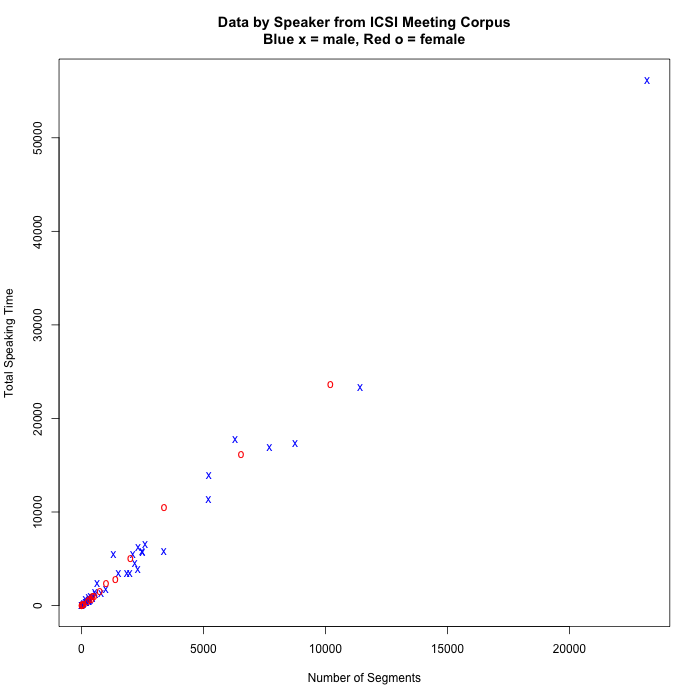

Yes, it does — r=0.993:

Or on a log scale:

Note that there are four males and three females with relatively little participation (fewer than 15 turns and 50 seconds of talk), and one male with an unusually large amount of participation (more than 20,000 segments and 50,000 seconds of talk), and then a core group in which the contributions of males and females are somewhat better balanced, relative to their numbers.

J. W. Brewer said,

July 17, 2014 @ 10:22 am

The full-time U.S. workforce is currently split something like 57/43 between males and females, but ratios for particular industries (or particular firms, or specialized subgroups within firms) vary all over the place. One could (and perhaps should) do a bunch of such studies in workplaces with varying ratios. Public K-12 schools might, for example, be one large segment of the economy where a certain amount of bureaucratic meetings occur and where the workforce is skewed female – more dramatically so than a few decades ago and now increasingly female at the higher end (building principals etc.) than it used to be. Does that change in ratio seem to change the gender skew of different sorts of overlapping/turntaking? It might also be worth keeping in mind that different sorts of still-heavily-male worksites may reflect rather different subsets of the male population. The pre-existing stereotypes likely to be drawn upon to complain about software developers (as being typically male in a way that might exclude potential female co-workers) and those you would use to denigrate construction workers (as being ditto) would be rather different from each other.