Speech-To-Text not quite perfect yet….


Yesterday on YouTube, "Former White House chief strategist Steve Bannon sits down with Dasha Burns, POLITICO's White House bureau chief". At the end of the interview, there's a conventional exchange of thank-yous. From Dasha Burns:

All right Steve, I know you got a show to record,

thank you so much for- for beaming in here

and uh sorry for the technical difficulties everyone.

Steve thanks so much.

And Steve Bannon's response:

Dasha thank you,

and thank Politico for having me.

Pretty much as expected. But Google's transcription generation system (along with its usual failure to divide segments by speaker) hears "Politico" as "polio":

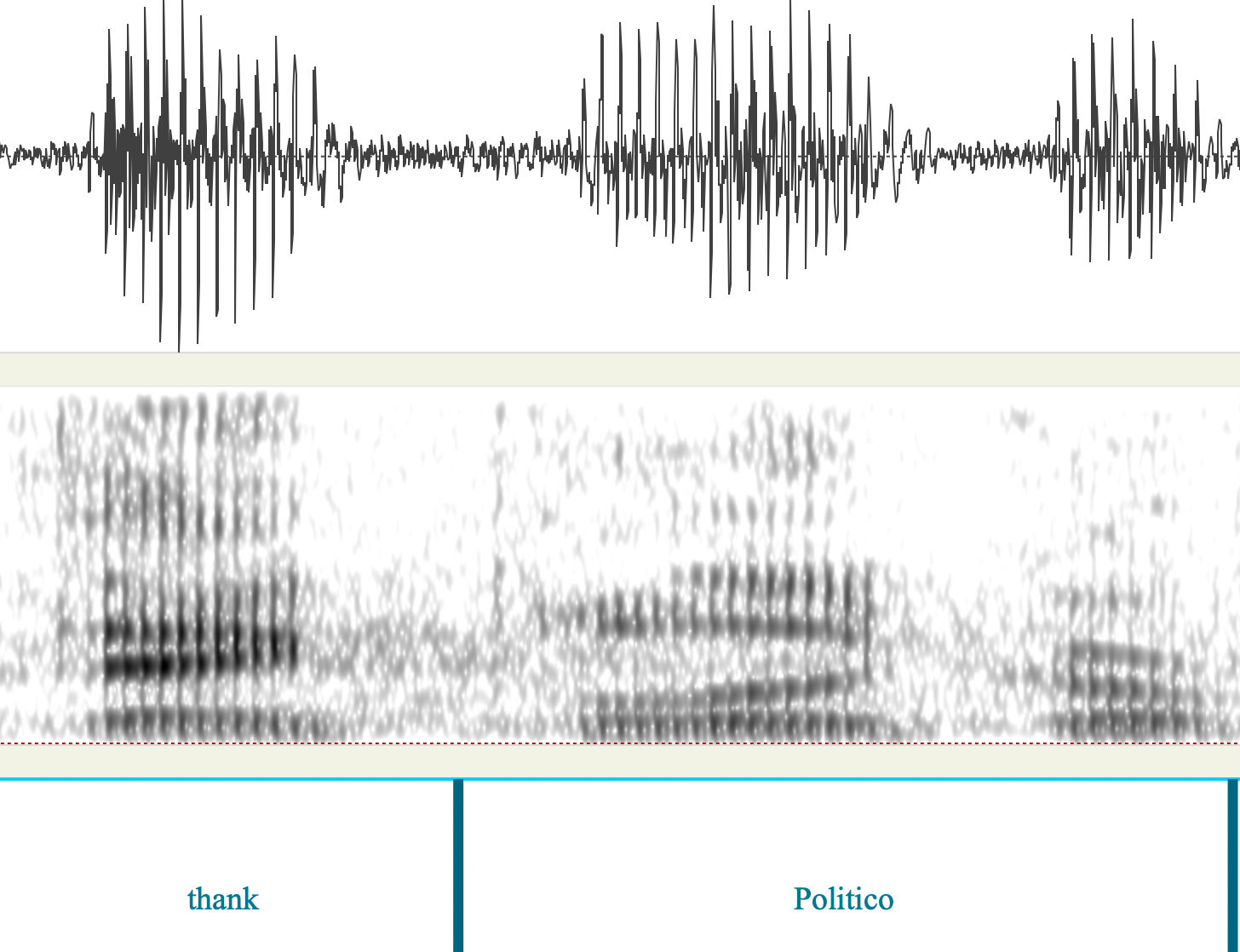

Polio has been in the news recently because of RFK Jr.'s hostility to the polio vaccine, which may be why Google was primed to hear it. But nothing related to vaccines or polio is mentioned in this interview. And although Bannon's response shows various (standard) kinds of phonetic reduction, like flapping-unto-extinction of the intervocalic /t/ in "Politico", the /k/ in his performance of that word remains clear:

In IPA, I guess he said something like [ˈplɪː.kou] …

David Marjanović said,

January 15, 2025 @ 3:32 pm

More likely, polio was in Google's dictionary, Politico was not.

I've watched presentations from scientific conferences with automatic captions (which the presenters included in an attempt to be helpful). Invariably, almost all technical terms were replaced by words that were much more common but only vaguely similar. Without context, few sentences in the captions that contained any such terms would have remained comprehensible.

Mark Liberman said,

January 15, 2025 @ 4:15 pm

@David Marjanović: "More likely, polio was in Google's dictionary, Politico was not."

Nope — from earlier in the same YouTube transcript:

And there are several other correct recognitions of "Politico" later in the same transcript.

Of course we need to note that the transcript renders "Dasha" as "Dasher" in the same sentence…

J.W. Brewer said,

January 15, 2025 @ 6:04 pm

Lyrics from a song credited jointly to M. Jagger & K. Richards, commercially released in December 1968 in the aftermath of the Nixon/Humphrey/Wallace contest in the U.S.:

"Spare a thought for the stay-at-home voter

His empty eyes gaze at strange beauty shows

And a parade of the gray-suited grafters

A choice of cancer or polio"

Richard Hershberger said,

January 16, 2025 @ 6:40 am

I am intimately aware of the imperfect state of voice-to-text. Lawyers love this software. I am a paralegal. I beg my guy to let me edit the output of anything more important than a note to the file.

Andrew Usher said,

January 16, 2025 @ 9:05 am

Same phenomenon as AI hallucinations: the computer is programmed to produce _something_ rather than nothing, no matter how absurd, implausible, or useless. It's a serious phonetic error, and totally unjustified by context, to get from 'Politico' to 'polio'; but it's still more serious that the software can't catch such errors.

k_over_hbarc at yahoo.com

Mark Liberman said,

January 16, 2025 @ 11:40 am

@Andrew Usher: "It's a serious phonetic error, and totally unjustified by context, to get from 'Politico' to 'polio'; but it's still more serious that the software can't catch such errors.":

But it can! (or at least it should…)

The whole point of "language models", old and new, large and small, is to estimate probability distributions over likely symbol sequences — and in the case of speech-to-text, to integrate that a priori information with bottom-up phonetic information.

In this case, the context should make "Politico" very probable and "polio" very unlikely, and the clear /k/ in the audio should do the same. Why none of that worked in this case is mysterious. Maybe the system is tuned to bring in recent news?
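Mark Liberman's point can be made concrete with the classic noisy-channel formulation of speech recognition: pick the word sequence W that maximizes log P(A | W) + λ · log P(W), where A is the audio, P(A | W) comes from the acoustic model, P(W) from the language model, and λ is a tuning weight. This is a minimal sketch; the scores are invented for illustration and do not come from Google's or any other real system. They only show how context and the audible /k/ should both push toward "Politico".

```python
import math

# Hypothetical language-model log-probabilities (invented for illustration):
# how plausible each phrase is at the end of a POLITICO interview.
LM_LOGPROB = {
    "thank politico for having me": math.log(0.02),
    "thank polio for having me": math.log(0.00001),
}

# Hypothetical acoustic log-likelihoods: how well each phrase matches the audio.
# A clearly articulated /k/ should penalize "polio", which contains no /k/.
AC_LOGPROB = {
    "thank politico for having me": -42.0,
    "thank polio for having me": -47.5,
}


def decode(candidates, lm_weight=1.0):
    """Return the candidate maximizing log P(audio|W) + lm_weight * log P(W)."""
    def score(w):
        return AC_LOGPROB[w] + lm_weight * LM_LOGPROB[w]
    return max(candidates, key=score)


print(decode(list(LM_LOGPROB)))  # thank politico for having me
```

In this toy setup both terms favor the same phrase, so no non-negative λ changes the answer; for "polio" to win, at least one of the two scores would have to point the wrong way, which is what makes the real error puzzling.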

Rodger C said,

January 16, 2025 @ 12:32 pm

maybe the system is tuned to bring in recent news?

Like the news captioning I once saw turn "Texas" into "the accident"?

Robert Irelan said,

January 16, 2025 @ 6:14 pm

@Mark Liberman You shouldn't rely on YouTube automatic transcriptions to be anything near the state-of-the-art in speech-to-text. They are optimized to be run for every single video uploaded to YouTube at a low enough cost that YouTube doesn't need to (directly) charge people to upload or watch videos.

More specialized speech-to-text systems generate much better transcriptions. For example, OpenAI's Whisper model (https://openai.com/index/whisper/), which is already several years old, is at basically human performance for anything that's not extremely unusual – it handles accents, slurred or corrupted speech, and the like very well. You can run it completely for free on any YouTube video using this page: https://colab.research.google.com/github/ArthurFDLR/whisper-youtube/blob/main/whisper_youtube.ipynb. Or, ChatGPT's voice-to-text mode can be used if you play the video on your computer and listen to it with your phone.

Whisper doesn't separate speakers on its own, but there are other systems that can separate speakers (called "speaker diarization" in the ML literature) and then use Whisper to transcribe.
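One simple way to combine a diarization system with a transcriber like Whisper is to give each transcribed segment the speaker label whose diarized turns overlap it most in time. This is a minimal sketch, assuming both systems report (start, end) times in seconds; the class names, speaker labels and times are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Turn:
    """One diarized speaker turn (hypothetical diarizer output)."""
    speaker: str
    start: float  # seconds
    end: float


@dataclass
class Segment:
    """One transcribed segment (e.g. a Whisper segment)."""
    start: float
    end: float
    text: str


def overlap(a_start, a_end, b_start, b_end):
    """Length of the intersection of two time intervals (0 if disjoint)."""
    return max(0.0, min(a_end, b_end) - max(a_start, b_start))


def label_segments(segments, turns):
    """Attach to each segment the speaker with the largest total overlap."""
    labeled = []
    for seg in segments:
        totals = {}
        for t in turns:
            totals[t.speaker] = totals.get(t.speaker, 0.0) + overlap(
                seg.start, seg.end, t.start, t.end)
        if totals and max(totals.values()) > 0:
            speaker = max(totals, key=totals.get)
        else:
            speaker = "UNKNOWN"  # no diarized speech overlaps this segment
        labeled.append((speaker, seg.text))
    return labeled


# Hypothetical diarizer turns and transcript segments.
turns = [Turn("BURNS", 0.0, 7.5), Turn("BANNON", 7.5, 11.0)]
segments = [
    Segment(0.0, 4.2, "All right, Steve, I know you got a show to record."),
    Segment(8.0, 10.5, "Dasha, thank you and thank Politico for having me."),
]
for speaker, text in label_segments(segments, turns):
    print(f"{speaker}: {text}")
```

The weakness of segment-level assignment is that a segment spanning a speaker change gets only one label; the last Whisper segment quoted in this thread, for instance, contains both "Steve, thanks so much." and Bannon's reply. Finer results need word-level timestamps.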

Robert Irelan said,

January 16, 2025 @ 6:17 pm

Whisper at the default settings of that notebook (using the "medium" size model) produces the correct transcription of Politico:

"""
[35:48.560 --> 35:52.720] Kushner. And Jared Kushner, I'm telling you, has done something to help this country that's very
[35:52.720 --> 35:56.960] significant. That is prison reform, which we need dramatically. All right, Steve, I know you got a
[35:56.960 --> 36:02.480] show to record. Thank you so much for beaming in here and sorry for the technical difficulties,
[36:02.480 --> 36:06.640] everyone. Steve, thanks so much. Dasha, thank you and thank Politico for having me.
"""
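For anyone who wants to reproduce something similar locally rather than in the Colab notebook, here is a minimal sketch using the open-source `openai-whisper` package, whose `whisper.load_model()` and `model.transcribe()` return a result dict with a `"segments"` list of `start`/`end`/`text` entries. The filename is a placeholder, ffmpeg must be installed for audio decoding, and the notebook's exact settings and formatting may differ; the timestamp helper is written here for illustration rather than taken from Whisper.

```python
def format_timestamp(seconds: float) -> str:
    """Format seconds as MM:SS.mmm (MM keeps counting past 59 for long audio)."""
    total_ms = round(seconds * 1000)
    minutes, ms = divmod(total_ms, 60_000)
    secs, ms = divmod(ms, 1000)
    return f"{minutes:02d}:{secs:02d}.{ms:03d}"


def transcribe(path: str, model_size: str = "medium") -> None:
    """Transcribe an audio file with openai-whisper and print timed segments."""
    # Imported inside the function so format_timestamp works without the package.
    import whisper  # pip install openai-whisper

    model = whisper.load_model(model_size)
    result = model.transcribe(path)
    for seg in result["segments"]:
        start, end = format_timestamp(seg["start"]), format_timestamp(seg["end"])
        print(f"[{start} --> {end}] {seg['text'].strip()}")


# Usage (placeholder filename; requires openai-whisper, ffmpeg and the audio file):
# transcribe("interview.mp3")
```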

Andrew Usher said,

January 17, 2025 @ 8:48 am

Mark Liberman:

Yes, but my point was that their probability space should include "no interpretation". It would be better to print (unintelligible) or ( … ) rather than a gross error, even if it theoretically shouldn't have happened.
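This suggestion corresponds to what is often called a reject option: when the recognizer's confidence in a word falls below a threshold, print a placeholder instead of a guess. A minimal sketch, assuming the recognizer exposes a per-word confidence between 0 and 1; the confidences and the 0.5 threshold below are hypothetical.

```python
from typing import List, Tuple

PLACEHOLDER = "(unintelligible)"


def render(words: List[Tuple[str, float]], threshold: float = 0.5) -> str:
    """Join recognized words, replacing low-confidence ones with a placeholder.

    Each item is (word, confidence). Consecutive rejected words collapse into
    a single placeholder.
    """
    out: List[str] = []
    for word, conf in words:
        if conf >= threshold:
            out.append(word)
        elif not out or out[-1] != PLACEHOLDER:
            out.append(PLACEHOLDER)
    return " ".join(out)


# Hypothetical confidences: the recognizer is unsure about its "polio" guess.
hyp = [("and", 0.97), ("thank", 0.95), ("polio", 0.31),
       ("for", 0.96), ("having", 0.98), ("me", 0.99)]
print(render(hyp))  # and thank (unintelligible) for having me
```

This only helps if the confidence scores are well calibrated: a system that is confidently wrong about "polio" would still print it.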

Mark Liberman said,

January 18, 2025 @ 9:42 am

@Robert Irelan:

We've recently done a systematic comparison of human transcription and 10 different state-of-the-art speech-to-text systems, on 100 randomly-selected 5-minute passages from the PennSound archive. In that trial, Whisper's overall word error rate was 10.6%, which is good enough to be useful, but far from perfect. The results will soon be published.
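Word error rate is conventionally the minimum number of word substitutions, deletions and insertions needed to turn the system output into the reference transcript, divided by the number of reference words. A minimal sketch using the standard edit-distance recurrence; real evaluations normalize text (case, punctuation, numbers) much more carefully than the lowercasing and whitespace splitting assumed here, and the example hypothesis is constructed for illustration from the two errors discussed in this thread.

```python
def wer(reference: str, hypothesis: str) -> float:
    """Word error rate: word-level Levenshtein distance / reference length."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # d[i][j] = edits turning the first i reference words into the first j hypothesis words
    d = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        d[i][0] = i  # i deletions
    for j in range(len(hyp) + 1):
        d[0][j] = j  # j insertions
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            sub = 0 if ref[i - 1] == hyp[j - 1] else 1
            d[i][j] = min(d[i - 1][j] + 1,        # deletion
                          d[i][j - 1] + 1,        # insertion
                          d[i - 1][j - 1] + sub)  # substitution or match
    return d[len(ref)][len(hyp)] / len(ref)


ref = "dasha thank you and thank politico for having me"
hyp = "dasher thank you and thank polio for having me"
print(f"{wer(ref, hyp):.1%}")  # 22.2%
```

Two substitutions in nine reference words give 2/9, about 22%; a single short utterance is of course far too small a sample to say anything about a system's overall rate.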

As for diarization, the current state of the art is largely due to the impetus of the series of DIHARD evaluations, described in Neville Ryant, Prachi Singh, Venkat Krishnamohan, Rajat Varma, Kenneth Church, Christopher Cieri, Jun Du, Sriram Ganapathy, and Mark Liberman, "The Third DIHARD Diarization Challenge" (2021).

And in the PennSound trial, diarization error rates (for the systems that attempt diarization) range from 14.5% to 35.6%. The Google Cloud system's DER was 30.1%.
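Diarization error rate, as used in evaluations such as DIHARD, is the fraction of reference speech time that is missed, falsely detected as speech, or attributed to the wrong speaker, after choosing the best one-to-one mapping between system and reference speaker labels. A simplified frame-level sketch, assuming at most one speaker per frame (real scoring tools handle overlapping speech) and brute-forcing the speaker mapping, which is only practical for a handful of speakers; the frame labels are hypothetical.

```python
from itertools import permutations
from typing import List, Optional

Label = Optional[str]  # speaker label for one frame; None means non-speech


def der(ref: List[Label], hyp: List[Label]) -> float:
    """Frame-level diarization error rate with one speaker per frame."""
    if len(ref) != len(hyp):
        raise ValueError("ref and hyp must have the same number of frames")
    total_speech = sum(r is not None for r in ref)
    if total_speech == 0:
        raise ValueError("reference contains no speech")
    pairs = list(zip(ref, hyp))
    miss = sum(r is not None and h is None for r, h in pairs)
    false_alarm = sum(r is None and h is not None for r, h in pairs)

    ref_spk = sorted({r for r in ref if r is not None})
    hyp_spk = sorted({h for h in hyp if h is not None})
    # Pad with None so extra system speakers can stay unmapped.
    targets = ref_spk + [None] * len(hyp_spk)
    best_confusion = min(
        sum(r is not None and h is not None and mapping[h] != r for r, h in pairs)
        for mapping in (dict(zip(hyp_spk, p))
                        for p in permutations(targets, len(hyp_spk)))
    )
    return (miss + false_alarm + best_confusion) / total_speech


ref = ["A", "A", "A", "B", "B", None, None, "B"]
hyp = ["s1", "s1", "s2", "s2", "s2", "s2", None, None]
print(f"{der(ref, hyp):.1%}")  # 50.0%
```

Here one missed frame, one false-alarm frame and one confused frame (under the best mapping s1→A, s2→B) over six reference speech frames give 3/6. Because false alarms count in the numerator but not the denominator, DER can exceed 100%.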