Cartoonist walks into a language lab…


Bob Mankoff gave a talk here in Madison not long ago. You may recognize Mankoff as the cartoon editor for many years at the New Yorker magazine, who is now at Esquire. Mankoff’s job involved scanning about a thousand cartoons a week to find 15 or so to publish per issue. He did this for over 20 years, which is a lot of cartoons. More than 950 of his own appeared in the magazine as well. Mankoff has thought a lot about humor in general and cartoon humor in particular, and likes to talk and write about it too.

The TED Talk

On “60 Minutes”

His Google talk

Documentary, “Very Semi-Serious”

What’s the Language Log connection? Humor often involves language? New Yorker cartoons are usually captioned these days, with fewer in the lovely mute style of a William Steig. A general theory of language use should be able to explain how cartoon captions, a genre of text, are understood. The cartoons illustrate (sic) the dependence of language comprehension on context (the one created by the drawing) and background knowledge (about, for example, rats running mazes, guys marooned on islands, St. Peter’s gate, corporate culture, New Yorkers). The popular Caption Contest is an image-labeling task, generating humorous labels for an incongruous scene.

But it’s Mankoff's excursions into research that are particularly interesting and Language Loggy. Mankoff is the leading figure in Cartoon Science (CartSci), the application of modern research methods to questions about the generation, selection, and evaluation of New Yorker cartoons.

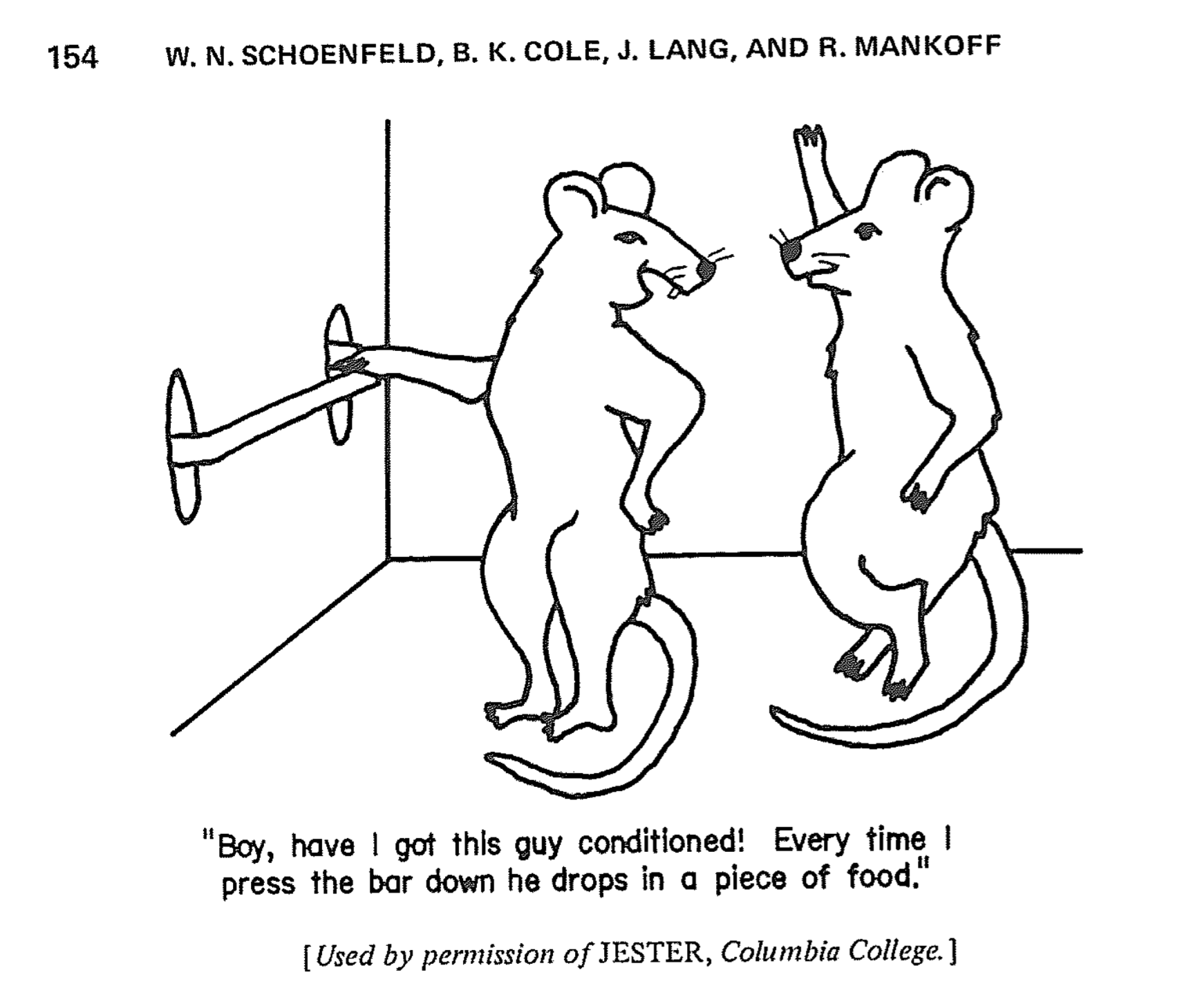

OK, I just invented Cartoon Science, but Mankoff’s involvement in humor research isn’t a joke. He almost completed a Ph.D. in experimental psychology back in the behaviorist era, which is pretty hard core. Before he left the field he co-authored a chapter called “Contingency in behavior theory”, as in contingencies of reinforcement in animal learning. The chapter included this cartoon:

It probably wasn’t a coincidence that the first of the weekly Caption Contests was a cartoon about a rat lab.*

Mankoff has worked with several research groups over the years, using various methods to investigate what makes something, especially a New Yorker cartoon, funny. He’s a co-author on several research articles. In the early 2000s, he worked with psychologists who collected eye-movements and evoked potentials while subjects read cartoons. A 2011 study (pdf) examined the stereotype that men are funnier than women. Men and women wrote captions for cartoons; the men’s captions were rated as funnier by independent raters—both men and women, though more so by men; in a memory experiment, both male and female subjects tended to misremember funny captions as having been written by men and unfunny ones by women. Interesting, though the number of participants was small and representative only of the population of UCSD students who participate in psychology experiments for credit.

The weekly caption contest has yielded a massive amount of data that is being analyzed using NLP and machine learning techniques. (The contest: Entrants submit captions for a cartoon; from the 5000 or so entries the editors pick three finalists; readers pick the winner by voting on-line.) Just think of the studies that can be done with this goldmine of a data set! Identify the linguistic properties that distinguish the winning captions from the two losers. Build a classifier that can estimate relative funniness from properties such as word choices, grammatical complexity, affective valence (“sentiment”), readability, structure of the joke, etc. Use the classifier to predict the winners on other weeks. Or the rated humorosity of other cartoons.
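A minimal Python sketch of the classifier study proposed above follows. It assumes a handful of hand-picked features: word count, average word length as a crude stand-in for readability, a toy sentiment score from a tiny made-up lexicon, and whether the caption is a question. It uses plain logistic regression trained by gradient descent. The feature set, the lexicons, and names like `caption_features` and `predict_winner` are hypothetical illustrations, not the methods used in the published studies.

```python
import math
from typing import List, Sequence

# Toy sentiment lexicons (hypothetical; a real study would use an established resource).
NEGATIVE_WORDS = {"never", "no", "dead", "lost", "worst", "sorry"}
POSITIVE_WORDS = {"good", "great", "love", "happy", "best", "nice"}


def caption_features(caption: str) -> List[float]:
    """Map a caption to a small, hand-picked feature vector."""
    words = [w.strip(".,!?\"'").lower() for w in caption.split()]
    words = [w for w in words if w]
    n = max(len(words), 1)
    avg_word_len = sum(len(w) for w in words) / n  # crude stand-in for readability
    sentiment = (sum(w in POSITIVE_WORDS for w in words)
                 - sum(w in NEGATIVE_WORDS for w in words)) / n
    is_question = 1.0 if caption.rstrip().endswith("?") else 0.0
    # Leading 1.0 is a bias term; counts are scaled to keep gradient descent stable.
    return [1.0, len(words) / 10.0, avg_word_len / 10.0, sentiment, is_question]


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train_logistic(X: Sequence[List[float]], y: Sequence[int],
                   lr: float = 0.5, epochs: int = 2000) -> List[float]:
    """Fit logistic-regression weights by batch gradient descent on log loss."""
    w = [0.0] * len(X[0])
    for _ in range(epochs):
        grad = [0.0] * len(w)
        for xi, yi in zip(X, y):
            err = sigmoid(sum(wj * xj for wj, xj in zip(w, xi))) - yi
            for j, xj in enumerate(xi):
                grad[j] += err * xj
        w = [wj - lr * gj / len(X) for wj, gj in zip(w, grad)]
    return w


def funniness_score(w: List[float], caption: str) -> float:
    """Estimated probability that a caption is a winner under the fitted model."""
    return sigmoid(sum(wj * xj for wj, xj in zip(w, caption_features(caption))))


def predict_winner(w: List[float], finalists: Sequence[str]) -> str:
    """Pick the finalist with the highest estimated funniness."""
    return max(finalists, key=lambda c: funniness_score(w, c))
```

Usage would look like `w = train_logistic([caption_features(c) for c in captions], labels)` followed by `predict_winner(w, finalists)`. Here `captions`, `labels` (1 for the reader-voted winner, 0 for the other two finalists), and `finalists` stand in for real contest data. Richer features, such as grammatical complexity or joke structure, would change only `caption_features`.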

Heavy hitters from places like Microsoft, Google, Michigan, Columbia, Yale, and Yahoo have taken swings at this with Mankoff’s help. The results (from the few published studies I found) have been uninspiring. Classifiers used to pick the winning caption yielded the sorta-worked, better-than-chance-but-not-much results that one sees a lot in the classifier line of work. Several properties of winning captions have been identified but they aren’t specific enough to generate good ones. In one study, for example, negative sentiment captions did better than positive ones, a little.

Perhaps this is a job for deep learning. The methods I just described required pre-specifying a set of plausibly relevant cartoon characteristics. A deep learning model could simply discover what mattered based on exposure to examples. The magazine has published over 80,000 cartoons. Say that our multi-layer network gets drawing-caption pairs as input and learns to classify them as funny or unfunny. The training corpus includes the correct pairings (as published) but also drawings and captions that have been randomly re-paired (foils). During training the model gets feedback about whether the pair is real or fake. The weights on connections between units are adjusted using a suitable procedure (probably backpropagation). We train the model on 76,000 real cartoons and then test whether it can correctly classify the other 4000. We do this cross-validation many times, withholding different subsets of 4000. When a model generalizes accurately across validation sets, we declare victory.
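The data setup described above can be sketched in Python. It combines published pairs, randomly re-paired foils, and repeated hold-outs of 4000 cartoons. This is a minimal illustration that assumes cartoons are referred to by integer ids and that a cartoon's drawing and caption share the same id. The function names are hypothetical, and the network itself is left out. One design choice worth flagging is that foils are built separately inside the training and test subsets, so no held-out caption leaks into training as a foil.

```python
import random
from typing import Iterator, List, Sequence, Tuple

# (drawing_id, caption_id, label): label 1 = published pairing, 0 = foil
Example = Tuple[int, int, int]


def make_examples(cartoon_ids: Sequence[int], rng: random.Random) -> List[Example]:
    """Real pairs plus one foil per drawing, built only from this subset.

    Hypothetical indexing: cartoon i's drawing and caption both have id i.
    """
    ids = list(cartoon_ids)
    real = [(i, i, 1) for i in ids]
    shuffled = ids[:]
    rng.shuffle(shuffled)
    n = len(shuffled)
    # Pair each shuffled drawing with the next shuffled caption; with unique ids
    # and n >= 2, no drawing gets its own caption back.
    foils = [(shuffled[k], shuffled[(k + 1) % n], 0) for k in range(n)] if n > 1 else []
    return real + foils


def holdout_folds(n_cartoons: int, test_size: int,
                  rng: random.Random) -> Iterator[Tuple[List[int], List[int]]]:
    """Yield (train_ids, test_ids) splits; each cartoon is held out exactly once."""
    ids = list(range(n_cartoons))
    rng.shuffle(ids)
    for start in range(0, n_cartoons, test_size):
        test = ids[start:start + test_size]
        train = ids[:start] + ids[start + test_size:]
        yield train, test
```

With the numbers in the post, `holdout_folds(80_000, 4_000, rng)` yields 20 non-overlapping splits. For each split, `make_examples` turns the 76,000 training cartoons into 152,000 labeled pairs (76,000 real, 76,000 foils) and the 4000 test cartoons into 8,000. A model is accepted only if its accuracy holds up across all 20 test sets.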

Mankoff has spent time at Google world headquarters and at Google DeepMind. I certainly would not want to underestimate what these folks can achieve. (I have no inside knowledge of how far they got with cartoons.) DeepMind’s AlphaGo was retired after consistently and decisively beating the greatest human Go player. Is labeling New Yorker cartoons harder than playing Go?

Well, yes.

Go has a conventionalized set-up and explicit rules. A captioning model has to figure out what game is being played. Captioning is a type of scene labeling, but that requires recognizing what’s in the scene, which in this case is, literally, ridiculous: exaggerated, crude, eccentric, stylized renderings of the world. Quite different from the naturalistic scenes that have been the focus of so much attention in AI.

OK, we give the model a break: pair descriptions of the cartoons with captions. Then what are the prospects for success? The humor turns on a vast amount of background knowledge. That feeling when you just don’t get it happens when we either don’t know the relevant stuff or can’t figure out what’s relevant to that cartoon. A deep learning network might well acquire the requisite knowledge of the world but not from 80,000 drawings: insufficient data. Same for analyzing the captions: it’s necessary to know the language. People have acquired most of the required knowledge by other means. A network that learned from pairs of drawings (or drawing descriptions) and captions would be acquiring several types of knowledge simultaneously: about pictures, about language, about funny. Set up this way, the model’s task is vastly more difficult than people’s.

That particular defect might be fixable, but training a network to discriminate funny from unfunny cartoons strikes me as a poorly posed problem in any case. Is “funny” even the relevant criterion for a good New Yorker cartoon (or winning caption)? Many are entertaining but hardly funny: they’re rueful, sardonic, or facetious; clever, enlightening; brutal, whimsical, or defiant commentary. “We will now observe a moment of silencing critics of gun violence” (10/4/2017) is a great cartoon but not funny at all. What about Mankoff’s most famous cartoon: “No, Thursday’s out. How about never—is never good for you?” How much do “trenchant” and “funny” overlap?

Mankoff’s conclusion from his explorations in Cartoon Science? “There is no algorithm for humor.” In Madison, as in other talks on YouTube, he said that only humans are capable of humor, in virtue of our special human hardware. Ergo there can’t be an algorithm running on a non-human machine that instantiates the experience. No program will pass the Plotzing Test, whereby an observer is unable to determine whether jokes were generated by computer or human. Mankoff says that humor is like consciousness. He’s a materialist about both. Research on the behavioral and neurophysiological correlates of humor doesn’t explain what humor is, and similarly for consciousness. I'm not endorsing these arguments, but that's the gist of the story.

Mankoff isn’t a philosopher—neither am I—and he certainly doesn’t owe anyone a rigorous analysis. He has concluded that humor is beyond human understanding. Thus there cannot be an effective procedure for being funny. That makes at least three things the brain isn’t powerful enough to understand: how the brain works, consciousness, and humor. [Discuss.]

A materialist might hold that the experiences we associate with consciousness are epiphenomenal and don't require further explanation, but that is where the analogy to humor seems to fail. Surely there is plenty to explain about the humor experience that is not “epiphenomenal”. It may be easier to explain away qualia than to explain why I find a joke funny and you do not. Either equating humor with consciousness is a category error or the materialist account of consciousness has some ‘splaining to do. (Memo to self: read this.)

Where Mankoff is clearly wrong is about algorithms for humor, which have existed since the dawn of AI. Why, just a few months ago, a neural network generated hysterical names for paints, like “pubic gray”. Was that not AI humor, indistinguishable from human behavior? I’ll take up that question, which is really about when it can be said that an AI program has succeeded at simulating human behavior, in my next post.

* Like other behaviorists who studied animal learning, Mankoff actually worked with pigeons, not rats.

Gregory Kusnick said,

October 5, 2017 @ 11:48 pm

Yes, do read Inside Jokes, which at least attempts to articulate a coherent theory of humor. (Personally I found it fairly persuasive.)

As for the so-called "hard problem" of consciousness, my feeling is that this will eventually come to be seen as an uninteresting non-problem along the lines of "why is there something instead of nothing?"

Doreen said,

October 6, 2017 @ 6:00 am

When Family Circus cartoons are paired with random Nietzsche quotes, some might say the results are funnier than the originals.

Ralph Hickok said,

October 6, 2017 @ 7:17 am

I won't blame Mankoff, because he had only a finite number of cartoons to choose from, but it's sad how unfunny New Yorker cartoons are these days. I would guess that, in a year, only about 10 of them make me laugh, and that laughter includes very mild chuckles.

Bill Benzon said,

October 6, 2017 @ 7:35 am

This is terrific stuff, Mark. You bring up background knowledge. Yes. As you know, that's one of the problems that squelched classic symbolic processing several decades ago, where it was known as the common sense knowledge problem. Researchers realized that in dealing with even very simple language, we draw on a vast bank of common sense knowledge. How are we going to hand-code all that into a computer? And once we've done it, what of combinatorial explosion?

I've been thinking about puns recently, mainly in connection with my ongoing Twitter interaction w/ Adam Roberts. I met Adam at the now defunct group blog, The Valve. He's a Romanticist by training, but also a well-published science fiction writer. And he works hard at tweeting puns. In turn, I work hard at replying to his puns with a pun that builds on it. And much of that work involves summoning and evoking background information. FWIW, see one example here: https://twitter.com/bbenzon/status/907910072864473088

Jerry Seinfeld has talked about how he thinks of jokes as finely tuned machines. He develops his material on yellow pads and will work on one bit for days, weeks, months, even in some cases, years. I've got a short working paper on this (Jerry Seinfeld and the Craft of Comedy) where, in addition to some general remarks, I conduct a bit of light analysis. In one case it's part of his conversation with President Obama on Comedians in Cars Getting Coffee. The show is completely unscripted; they shoot 3 to 4 hours of footage and edit it down to 10 to 20 minutes. In this case I show how a remark toward the end of the show builds on one from early in the show. The other case is a bit about donuts and (their) holes. After a bit of set-up:

And the bit continues from there.

Rosie Redfield said,

October 6, 2017 @ 9:06 am

Might the male-female differences in the UCSD-students experiment result from the common bias that leads us to expect that, if something was done well it was probably done by a man?

Sergey said,

October 6, 2017 @ 12:06 pm

Contrasting the winner of 3 captions against the other 2 probably isn't the best strategy: all of them have been selected by the editors as the best ones, so the differences would be minor. Contrasting with the entries at the bottom of the editors' ranking would probably be more useful.

And I want to tell a Soviet-era joke about captions. There was a TV program that included this kind of caption contest. As the story goes, once they showed a picture of a couple having sex. One of the captions was the funniest by a wide margin. They asked its author how he managed it, and he answered: "I just took a headline from today's Pravda (the official newspaper of the Communist Party)." After telling this, you would pick up Pravda and read the headlines aloud. The story is obviously fictional, but the headlines were funny for real. Now that would be a great job for AI: to discover how they managed it.

Bob Michael said,

October 6, 2017 @ 10:11 pm

Fascinating article! It seems humor is very difficult to define, much less model and replicate. I wonder how humor might be used by a person to detect a machine in a Turing Test?

~flow said,

October 7, 2017 @ 2:52 am

The Sunshine Boys: "Fifty-seven years in this business, you learn a few things. You know what words are funny and which words are not funny. Alka Seltzer is funny. You say 'Alka Seltzer' you get a laugh … Words with 'k' in them are funny. Casey Stengel, that's a funny name. Robert Taylor is not funny. Cupcake is funny. Tomato is not funny. Cookie is funny. Cucumber is funny. Car keys. Cleveland … Cleveland is funny. Maryland is not funny. Then, there's chicken. Chicken is funny. Pickle is funny. Cab is funny. Cockroach is funny – not if you get 'em, only if you say 'em."

Kartoon's funny. joKe's funny. Kaption's funny. Kat is funny. It's that simple.

bks said,

October 7, 2017 @ 5:39 am

I agree with Ralph Hickok that the cartoons are declining in funniness. Apparently, because I can't prove it.

Rubrick said,

October 8, 2017 @ 9:20 pm

ce n'est pas une blague. (This is not a joke.)

Narmitaj said,

October 9, 2017 @ 6:21 pm

Comics I Don't Understand http://comicsidontunderstand.com/wordpress/ is an interesting website… the host posts individual cartoons and strips he doesn't get for various reasons (including lack of background info, like the dinosaurs eating "edibles" currently in second place on the scroll as I write [added link to that cartoon at bottom]).

Sometimes the host, Bill Bickel, sees perfectly well what the cartoonist is getting at, but can't understand why he/she thinks it is funny.

Not-understood cartoons have the CIDU tag (there are also LOL, Oy, Ewww and various other tags – like Arlo – for posted cartoons he does get and variously thinks are funny, groanworthy, lavatorial or have some sexual reference).

Scroll down a bit and you'll even find a linguistic discussion in the comments under a cartoon of a duck pondering the loss of his keys and figuring it has something to do with his/him (actually me/my) being a duck. Here's the direct link:

http://comicsidontunderstand.com/wordpress/2017/10/05/duck-4/

"Edibles" cartoon direct link (meaning explained in comments):

http://comicsidontunderstand.com/wordpress/2017/10/09/edibles/