Emotional contagion


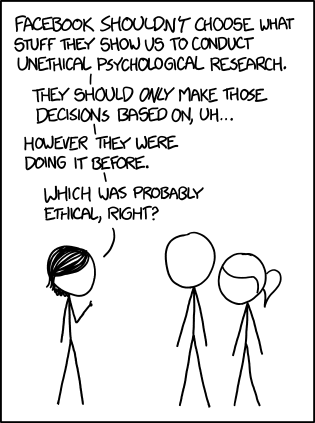

As usual, xkcd nails it:

Mouseover title: "I mean, it's not like we could just demand to see the code that's governing our lives. What right do we have to poke around in Facebook's private affairs like that?"

Here's the paper that caused all the fuss: Adam Kramer et al., "Experimental evidence of massive-scale emotional contagion through social networks", PNAS 2014.

Some worthwhile side issues came up. For example, this is probably the smallest effect size (d=0.001, i.e. one one-thousandth of a standard deviation) that got a significant amount of media coverage — John Grohol, "Emotional Contagion on Facebook? More Like Bad Research Methods", PsychCentral 6/23/2014:

Kramer et al. (2014) found a 0.07% — that’s not 7 percent, that’s 1/15th of one percent!! — decrease in negative words in people’s status updates when the number of negative posts on their Facebook news feed decreased. Do you know how many words you’d have to read or write before you’ve written one less negative word due to this effect? Probably thousands.

This isn’t an “effect” so much as a statistical blip that has no real-world meaning. The researchers themselves acknowledge as much, noting that their effect sizes were “small (as small as d = 0.001).” They go on to suggest it still matters because “small effects can have large aggregated consequences” citing a Facebook study on political voting motivation by one of the same researchers, and a 22 year old argument from a psychological journal.
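To put a rough number on Grohol's "probably thousands": suppose we read the 0.07% as a drop of 0.07 percentage points in the share of a user's written words that are negative. That reading is my assumption, not something the quoted passage spells out, and the function name below is made up for illustration. On that assumption the arithmetic is a one-liner:

```python
def words_per_one_fewer_negative_word(percentage_point_drop: float) -> float:
    """Average number of words written per one fewer negative word.

    Assumes (hypothetically) that the drop is measured in percentage
    points of all words written, e.g. 0.07 means 0.07 words per 100.
    """
    if percentage_point_drop <= 0:
        raise ValueError("percentage_point_drop must be positive")
    return 100.0 / percentage_point_drop


words_needed = words_per_one_fewer_negative_word(0.07)
print(f"about {words_needed:.0f} words")  # about 1429 words
```

So under this reading the figure is closer to fourteen hundred words than to several thousand, which is still a great many status updates for one fewer gloomy word.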

For the record, here are some links to coverage that I've accumulated (and actually read) over the past few days:

William Hughes "Facebook tinkered with users’ feeds for a massive psychology experiment", The A.V. Club 6/27/2014

Robinson Meyer, "Everything We Know About Facebook's Secret Mood Manipulation Experiment" ("It was probably legal. But was it ethical?"), The Atlantic 6/28/2014

Vindu Goel, "Facebook Tinkers With Users’ Emotions in News Feed Experiment, Stirring Outcry", NYT 6/29/2014

Adam Kramer, Facebook post 6/29/2014

Brendeno, gisthub comment 6/29/2014

Michelle Meyer, "Everything You Need to Know About Facebook’s Controversial Emotion Experiment", Wired 6/30/2014

Dylan Matthews, "Facebook tried to manipulate users' emotions. But we have no idea if it succeeded", Vox 6/30/2014

Mike Isaac, "Facebook Says It’s Sorry. We’ve Heard That Before", NYT 6/30/2014

Kashmir Hill, "Facebook Added 'Research' To User Agreement 4 Months After Emotion Manipulation Study", Forbes 6/30/2014

Matthew Ingram, "Here’s what you need to know about that Facebook experiment that manipulated your emotions", GigaOm 6/30/2014

Dan Gillmore, "Being a Facebook ‘Lab Rat’ Is The Tradeoff We’ve Made", TPM 7/1/2014

Farhad Manjoo, "The Bright Side of Facebook’s Social Experiments on Users", NYT 7/2/2014

Vindu Goel, "Facebook’s Secret Manipulation of User Emotions Faces European Inquiries", NYT 7/2/2014

Samuel Gibbs, "Facebook apologises for psychological experiments on users", The Guardian 7/2/2014

Jessica Love, "Ethics Across Borders: On shifting values and Facebook's big misstep", The American Scholar 7/3/2014

Some kind of prize has to go to this one, for so effectively distracting us from the true scandal of extra-terrestrial involvement: Paul Joseph Watson, "COVER UP SURROUNDING PENTAGON FUNDING OF FACEBOOK’S PSYCHOLOGICAL EXPERIMENT?", Infowars.com 7/2/2014.

But when I look at the weird mix of advertisements that infest my Facebook wall (when I occasionally check it), I wonder why the researchers didn't just report on the effects of ad texts. I mean, could anyone sincerely claim to be outraged at an attempt to emotionally manipulate them by means of advertising?

Thus at this moment, Facebook is showing me the following ad texts:

"Death Begins in the Colon": Does everyday life consist of Gas & Bloating, Abdominal Pain, Constipation, Diarrhea, Indigestion, Urinary & Bowel Trouble?

"Get your house cleaned for $38!" Think housecleaning is too expensive? This company is ready to prove you wrong. Book your Homejoy cleaning today! There's no feeling quite like coming home to a clean house.

"Boyfriend wanted": No games. Just women looking for a single man over 60. Click to see Pics.

None of these are things I'm interested in, and none of the advertisers are outfits that I've ever heard of. So Facebook could have substituted fake ads with different words ("~~Death~~ Life Begins in the Colon"; "There's no feeling quite like coming home to a ~~clean~~ dirty house"; etc.) and I never would have noticed the difference. Wait a minute, maybe they're already doing it?

Tom Parmenter said,

July 5, 2014 @ 1:12 pm

The internet is a happier experience with Adblock Plus. (The similarly named Adblock is a ripoff of the original open source.)

Duncan said,

July 5, 2014 @ 5:13 pm

The amusing thing is to connect the ads.

If you have bad enough bowel trouble you might need your house cleaned, and what better house cleaner than a girl friend into that sort of thing?

The best one I've seen of those was a taxi with two ads that had me just about rolling on the ground when I saw them. I don't remember which was on top and which on the back, but they were:

1) Ad for a divorce lawyer.

2) Ad for a strip club.

My question, which came first and which was then needed?

AntC said,

July 5, 2014 @ 6:15 pm

Yes, I'm astonished at the media outrage/allegations of manipulation that facebook's 'experiment' has generated.

Are there really people who trust and look at only one source for their news? (I mean amongst those who bother to look at the news stories on facebook anyway.)

And let me check: is there anybody who actually pays direct to facebook for their service? Then what rights do you think you have after uploading huge amounts of your private life for nothing? The essential feature of contract law — the cash nexus — is just not there.

[If anybody's looking for my facebook page: there are plenty with my name, but none of them are me.]

D.O. said,

July 5, 2014 @ 8:31 pm

I am a bit puzzled by the ethics angle of this. It is usually considered unethical to conduct research experiments that try to make people worse off (say unhappier), but OK to do experiments which try to make people better off. But these being research experiments, no one knows what is going to happen. What if gloomy news makes us happier (schadenfreude, for example)? Is it OK then, or is schadenfreude morally wrong, so that researchers should not induce more of it? By the way, what is German for being sad at the success of someone else (if it's something more exotic than just a translation of jealousy)?

Lazar said,

July 5, 2014 @ 10:52 pm

@D.O.: It appears that freudenschade has seen some limited use in English.

AntC said,

July 6, 2014 @ 4:32 am

freundenschade? Nothing is so vexing as the success of one's enemies — or of one's friends. As Oscar Wilde might have said.

ThomasH said,

July 6, 2014 @ 9:45 am

A topic I deliberately avoid thinking too much about is why the Facebook-Yahoo-Googleplex industry chooses the ads they want ME to see.

J.W. Brewer said,

July 6, 2014 @ 12:27 pm

To the extent there's a standard word for opposite-of-Schadenfreude, I believe it's Gluckschmerz (= luck-pain, with "schmerz" probably cognate to English "smart" as used in "ow, that smarts").

D.O. said,

July 6, 2014 @ 10:43 pm

Gluckschmerz seems to be completely unknown to Germans at least judging by German Google search and German Wiktionary.

J. W. Brewer said,

July 7, 2014 @ 10:16 am

Well, if Gluckschmerz is merely an Anglophone invention, it's one of long standing and seems reasonably cromulent from a German-nominal-morphology perspective.

mollymooly said,

July 9, 2014 @ 4:29 pm

The Irish English for "gluckschmerz" is "begrudgery".

Mat Bettinson said,

July 9, 2014 @ 10:42 pm

Michael Bernstein points out that social computing scholars have been strangely silent on the press coming out of this Facebook research.

https://medium.com/@msbernst/the-destructive-silence-of-social-computing-researchers-9155cdff659

More interesting, I think, is his argument that the issue is overblown. He says: "Hammering ethical protocols designed for laboratory studies is fundamentally misguided". He also raises the obvious point that such experimentation happens all the time in the commercial sphere. I wonder to what extent the media getting riled up over this is based on some sense of 'experiment' as in mad scientists, ethically dubious by default.

On the other hand, I have a hard time giving value to Bernstein's claim that asking permission drives users away. The fact that people will say no is a pretty terrible reason for deciding not to ask them. I also don't think that commercial manipulation of search and adverts is the same as manipulating a social-graph news stream by filtering it for emotive content.

It's right this conversation is happening but it'd be good to engage with researchers rather than the howling press.