Nicholas Wade's DNA decoded


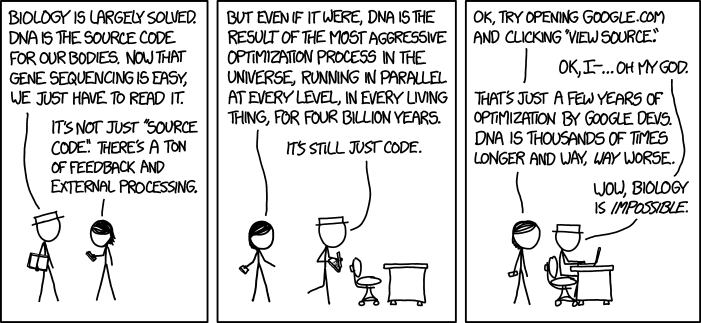

Today's xkcd:

Mouseover title: "Researchers just found the gene responsible for mistakenly thinking we've found the gene for specific things. It's the region between the start and the end of every chromosome, plus a few segments in our mitochondria."

For background, see "The hunt for the Hat Gene", 11/15/2009.

Evan Harper said,

November 18, 2015 @ 5:36 pm

I like comics that have drawings and jokes

Rubrick said,

November 18, 2015 @ 10:49 pm

I took the bait and looked at the source for http://www.google.com. Lord help us.

Here's a teeny tiny excerpt from one inline javascript function, just to convey the flavor. (I've manually removed a couple of angle brackets that were breaking WordPress).

`var Hk=function(a,c,d,e {this.b=e;this.P=c;this.J=d;this.B=_.H(_.kk(a),.001);this.G=_.F(_.C(a,1))&&Math.random() this.B;c=null!=_.C(a,3)?_.C(a,3):1;this.D=_.H(c,1);this.A=0;a=null!=_.C(a,4)?_.C(a,4):!0;this.F=_.F(a,!0)};_.y(Hk,Gk);Hk.prototype.log=function(a,c){Hk.H.log.call(this,a,c);if(this.b&&this.F)throw a;};Hk.prototype.C=function(){return this.b||this.G&&this.A this.D};`

Chris C. said,

November 18, 2015 @ 11:25 pm

You can have angle brackets; you just have to use HTML entities: `&lt;example&gt;` becomes `<example>`. (And I hope it comes out live the same as it does in the preview, so I look like I know what I'm talking about.)

The code doesn't make sense without the brackets, but (without taking the time to analyze it, because I'm lazy) it's probably not QUITE as bad as it looks. They've removed functionally useless doodads like newlines and the descriptive variable names that would make the code more readable. I doubt they actually work on it in this form. They probably write it in a more human-readable form and then put it through a compressor that takes out everything not syntactically necessary. Even if that makes the code only 10% less voluminous, given the number of times Google must serve up that page, that's a significant amount of bandwidth saved.
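To make the compressor point concrete, here is a minimal sketch, assuming a hypothetical readable function (`isInSample` is an invented name, not Google's code) and a hand-minified equivalent of the kind such a tool might emit. It compares their source lengths, and the traffic figure at the end (one billion page loads a day) is a made-up assumption, used only to show how a small per-page saving multiplies.

```javascript
// Hypothetical readable source, the way a developer might maintain it.
// (isInSample is an invented name for illustration, not Google's code.)
function isInSample(samplingRate) {
  // Return true for roughly `samplingRate` of all calls.
  return Math.random() < samplingRate;
}

// A hand-minified equivalent: same behavior, one-letter names, no spaces.
var s=function(a){return Math.random()<a};

// Function.prototype.toString returns each function's source text,
// so the two sizes can be compared directly (ASCII, so chars == bytes).
const readableSize = isInSample.toString().length;
const minifiedSize = s.toString().length;
console.log(`readable: ${readableSize} chars, minified: ${minifiedSize} chars`);

// Bytes saved per day if each page load shrinks by `fraction`.
function dailySavingsBytes(pageBytes, fraction, loadsPerDay) {
  return pageBytes * fraction * loadsPerDay;
}

const assumedLoadsPerDay = 1e9; // hypothetical, not a Google statistic
console.log(dailySavingsBytes(180000, 0.1, assumedLoadsPerDay) / 1e12, "TB/day");
```

The two versions behave identically; the minified one simply carries fewer characters over the wire on every request.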

gribley said,

November 19, 2015 @ 7:06 am

whoa… the source for google.com is ~180k?! That's nuts. It used to be a model of minimalist coding for fast loading on all connections. Apparently the math on connection speeds has changed to favor feature creep rather than speed… but I am shocked.

flow said,

November 19, 2015 @ 9:10 am

To web developers this find is not news: the Google homepage has looked like this under the hood for many years. And yes, it is a surprising amount of code, especially when you consider that in its source form, unobfuscated and with more humane variable names, it could easily be twice that 180k or more.

Mr Munroe hits the nail on the head once again. The image of DNA in the minds of the general public is much like a checklist or a restaurant menu, or maybe a recipe.

But if you assume that DNA encodes part of a Turing-complete process, including loops, parameters, branches and jumps inside the code (I don't know whether that's true, but I strongly suspect DNA works like that), then you can readily deduce that it may prove impossible to say some things about a given strand of DNA without actually running the program, i.e. growing things with it.
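As an analogy only (nothing here is a claim about how DNA actually works), the Collatz function below is a few lines long and perfectly readable, yet nobody has proven that its loop terminates for every positive integer. In practice, the way to learn how many steps a given input takes is to run it.

```javascript
// Count the Collatz steps from n down to 1: halve even numbers,
// map odd numbers to 3n + 1. Whether this loop ends for EVERY positive
// integer is the unproven Collatz conjecture.
function collatzSteps(n) {
  let steps = 0;
  while (n !== 1) {
    n = n % 2 === 0 ? n / 2 : 3 * n + 1;
    steps += 1;
  }
  return steps;
}

console.log(collatzSteps(27)); // → 111
```

Nothing in the source hints that 27 wanders for 111 steps while its neighbours finish much sooner; you find out by executing it.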

In software, the keywords are "halting problem" and "the limits of static code analysis". Some things are obvious from the source, some are much less obvious but still deducible, and some facts about some programs cannot be decided unless you put the program into a suitable environment (i.e. a computer with all dependencies installed) and run it.

Wikipedia (https://en.wikipedia.org/wiki/Static_program_analysis) says: "By a straightforward reduction to the halting problem, it is possible to prove that (for any Turing complete language), finding all possible run-time errors in an arbitrary program (or more generally any kind of violation of a specification on the final result of a program) is undecidable: there is no mechanical method that can always answer truthfully whether an arbitrary program may or may not exhibit runtime errors. This result dates from the works of Church, Gödel and Turing in the 1930s (see: Halting problem and Rice's theorem). As with many undecidable questions, one can still attempt to give useful approximate solutions."
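The reduction in that quote rests on the classic diagonal argument. Here is a toy sketch of it, assuming programs are modelled as JavaScript generator functions in which each `yield` counts as one step (`runWithBudget`, `makeContrarian` and the two sample deciders are hypothetical names). Given any claimed halting decider, `makeContrarian` builds a program that does the opposite of whatever the decider predicts about it.

```javascript
// Programs are modelled as generator functions: each `yield` is one step.
// Run `program` for at most `budget` steps and report what happened.
function runWithBudget(program, budget) {
  const execution = program();
  for (let step = 0; step < budget; step++) {
    if (execution.next().done) return "halted";
  }
  return "still running"; // NOT a proof that it never halts
}

// Given a claimed halting decider (program -> true if it halts),
// build a program that does the opposite of the decider's prediction.
function makeContrarian(decider) {
  function* contrarian() {
    if (decider(contrarian)) {
      while (true) yield; // predicted to halt, so loop forever
    }
    // predicted to loop forever, so halt at once
  }
  return contrarian;
}

// Two (necessarily wrong) candidate deciders.
const saysAlwaysHalts = () => true;
const saysNeverHalts = () => false;

console.log(runWithBudget(makeContrarian(saysAlwaysHalts), 10000)); // still running
console.log(runWithBudget(makeContrarian(saysNeverHalts), 10000));  // halted
```

Both trivial deciders are wrong about their contrarian, and the textbook proof applies the same construction to any decider at all. Note also that "still running" after 10,000 steps does not show the program never halts: a step budget only ever gives a one-sided answer.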

A practical consequence of this state of affairs is that you can only do so much to prove that a given piece of executable code is or is not malware / a virus when you limit yourself to static analysis (and of course you'd like to do that because you don't want to accidentally activate the code just in case it *is* malware). The same could apply to the detection of health problems from DNA sequencing.
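A toy illustration of that gap, with everything invented for the example: `staticScan` only reads a function's source text and never runs it, while `obfuscated` is a harmless stand-in that assembles the flagged name at run time, so the static check misses it. Real malware scanners are far more sophisticated; this only shows the basic limitation.

```javascript
// Hypothetical "signature" a naive scanner looks for in source text.
const SIGNATURE = "deleteAllFiles";

// Static check: inspect the source text, never execute it.
function staticScan(fn) {
  return fn.toString().includes(SIGNATURE);
}

// Harmless stand-in that spells the name out literally.
function plain() {
  return "deleteAllFiles";
}

// Harmless stand-in that builds the same name from character codes
// (100, 101, 108, 101, 116, 101 spell "delete"), so the full name
// never appears in its source text.
function obfuscated() {
  return String.fromCharCode(100, 101, 108, 101, 116, 101) + "AllFiles";
}

console.log(staticScan(plain));          // true
console.log(staticScan(obfuscated));     // false: the static scan misses it
console.log(obfuscated() === SIGNATURE); // true: running it reveals the name
```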

Another practical consequence should be well known to anyone who submits their papers in TeX / LaTeX format: D. E. Knuth's brainchild is fairly unusual for a programming language in that it relies on metamorphism, i.e. the ability of the running program to modify its own code in order to produce the desired output. This, among other things, makes it very hard or impossible to build code analysis tools that do, e.g., reliable syntax highlighting.
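In real TeX this happens through category codes (set with `\catcode`), which a document can change while it is being processed. The sketch below is a toy lexer loosely modelled on that idea, not real TeX: the command name `makeescape` is hypothetical. Executing it adds a new escape character, so the same characters `|bf` tokenize differently before and after it, and a highlighter that does not execute the input cannot tell which reading applies.

```javascript
// Toy lexer loosely inspired by TeX category codes (NOT real TeX).
// The command "makeescape" is hypothetical: it makes the next character
// an additional escape character for the rest of the input.
function tokenize(input) {
  const escapes = new Set(["\\"]);
  const tokens = [];
  let i = 0;
  while (i < input.length) {
    const ch = input[i];
    if (escapes.has(ch)) {
      // Read a command name: a run of ASCII letters after the escape.
      let j = i + 1;
      while (j < input.length && /[A-Za-z]/.test(input[j])) j++;
      const name = input.slice(i + 1, j);
      tokens.push({ type: "command", name });
      i = j;
      if (name === "makeescape" && i < input.length) {
        // Executing this command changes the lexer's own rules.
        escapes.add(input[i]);
        i++;
      }
    } else {
      tokens.push({ type: "char", value: ch });
      i++;
    }
  }
  return tokens;
}

const tokens = tokenize("|bf \\makeescape|x|bf");
const commands = tokens.filter(t => t.type === "command").map(t => t.name);
console.log(commands.join(", ")); // makeescape, bf
```

The first `|bf` comes out as three plain characters, the second as a command, purely because of what ran in between.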

I've always found it funny and interesting that, shortly before the advent of the modern computer, people had already proven what it would not be able to do.

JW Mason said,

November 19, 2015 @ 1:34 pm

This comic is great, as was his earlier one on the same subject: http://www.xkcd.com/1588/.

Here's my question: If you're a nonspecialist looking to get a real understanding of the connections between genotype and phenotype instead of all the genes-for-X nonsense, what are the best things to read? I liked Dawkins' Extended Phenotype and Carroll's Endless Forms Most Beautiful and loved West-Eberhard's Developmental Plasticity and Evolution. But I have no idea where else to go to get a deeper sense of how working biologists think about evolution and genes. Maybe someone here has recommendations?

flow said,

November 19, 2015 @ 1:37 pm

@gribley Just saying: with the whole world using Google these days, the whole world also has the Google start page cached on their devices and PCs. And judging a website by this single number is not very informative.

When I look at the numbers the Firefox dev tools print out, the entire start page is actually a little bit over 1000KB, not the 180KB you quote. To put that into perspective: http://httparchive.org/interesting.php?a=All&l=Feb%2015%202015 estimates that the average web page clocks in at almost 2000KB as of 2015 (5 years ago that would have been 700KB), so google.com is actually sort of lean.

Moreover, Google can afford a lot of server-side power, ensuring low latency for the only 12 or so HTTP requests needed to load the page. Of these, maybe 50% cause actual downloads (disclosure: anecdotal evidence), with a volume of a few hundred kilobytes.

In other words: the average web page you visit for the first time will easily put ten times as much load on your connection as Google does (though of course, using Google all day adds up).
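The arithmetic behind these comparisons, as a quick sketch. The figures are the ones quoted above, except `googleTransferredKB`: "a few hundred kilobytes" is pinned at 200 KB purely as an assumption for illustration.

```javascript
// Sizes in KB, as quoted in the comment above.
const averagePage2015KB = 2000;  // HTTP Archive estimate, 2015
const averagePage2010KB = 700;   // roughly five years earlier
const googleFullKB = 1000;       // whole start page per Firefox dev tools
const googleTransferredKB = 200; // ASSUMPTION: "a few hundred kilobytes"

// How many times bigger the first quantity is than the second.
function ratio(a, b) {
  return a / b;
}

// Compound annual growth rate between two sizes over `years` years.
function annualGrowth(startKB, endKB, years) {
  return Math.pow(endKB / startKB, 1 / years) - 1;
}

console.log(ratio(averagePage2015KB, googleTransferredKB)); // 10
console.log(ratio(googleFullKB, averagePage2015KB));        // 0.5
console.log((annualGrowth(averagePage2010KB, averagePage2015KB, 5) * 100).toFixed(1) + "% per year");
```

With a cached start page, only the transferred bytes matter, which is where the "ten times" figure comes from.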

I'm not going into any more detail because it's OT, but the takeaway is that while it still *is* surprising to see how big and convoluted a source file lands in your browser behind the scenes of such a spartan home page, it is, by comparison, not that many bytes.