Corpus-based Linguistic Research: From Phonetics to Pragmatics

Machine Learning

Some Intellectual History

The Classical Period

In the 1970s and the early 1980s, the heyday of classical Artificial Intelligence, most well-informed people thought that AI was applied logic. Then the key issue seen as how to reduce a problem like computer vision or natural-language parsing to its logical essence, how to choose an appropriate logical system for each problem, and how to program a computer to reason effectively and efficiently in the resulting framework.

Thus a favorite topic in computational linguistics was "unification grammar". As Martin Kay wrote in "Parsing in Functional Unification Grammar", 1985:

I take it that the factors that govern the production of a sentence typically come from a great variety of different sources, logical textual, interpersonal, and so forth. In general, each of these, taken by itself, underdetermines what comes out. When they jointly overdetermine it, there must be priorities enabling a choice to be made among the demands of the different sources. When they jointly underdetermine the outcome, the theory must provide defaults and unmarked cases. [...]

Much of the character of the theory I shall describe comes from the set-theoretic properties that are imputed to descriptions of all kinds in everyday like. [...] Generally speaking, to add more detail to a description is to narrow the class of objects described, and to remove material from a description is to widen in coverage. In fact, descriptions and the sets of things they refer to are mathematical duals of one another with respect to the operations of set theory. In other words, the intersection of of a pair of dsecriptions describes the union of the sets of objects that they describe separately and the union of a pair of descriptions describes the intersection of the corresponding pairs of sets.

"Unification", in this context, is a formal operation that extends set-theoretic union in various ways to deal with the underdetermined and overdetermined combinations that Kay alluded to. But unification algorithms are NP-complete, i.e. exponentially hard, in the general case; so people spent a lot of time looking into things like more restricted forms of unification, or approximate algorithms for the general case, or ways to avoid using the kinds of set-combination (especially disjunction) that are responsible for the problems.

However, there was a more serious problem. Even if the computational complexities were magically removed, the results were not good from a purely descriptive point of view.

If you limited yourself to appropriately-chosen example sentences, to be described by a toy grammar, everything was fine. And this approach supported a viable intellectual ecosystem, since you could always introduce a new wrinkle into the situation by adding a few additional examples, modifying the system to handle them, and writing a conference paper about it. The trouble was that the systems of this kind had very limited coverage: there were not enough words in the lexicon; the words that were there did not have enough possible syntactic and semantic options; and the set of constructions covered was also typically incomplete.

The obvious story to tell about this situation is that it's just a matter of scale: we've demonstrated how to dig a small hole in the ground, and a big hole in the ground is just the same thing on a larger scale, requiring more shovels, time, and money.

But in fact some people did try hard to scale text-analysis systems up, and the results were not pretty. To the extent that the range of available syntactic and semantic alternatives was limited, the analyses were mostly wrong; and to the extent that the range of available alternatives was more realistic, the systems would output a larger and larger number of alternative analyses without being able to choose reliably among them.

The Triumph of the Cybernetic Underground

In the mid 1980s, the paradigm changed.

Since then, most well-informed people think of AI as applied statistics. The key issue becomes how to reduce a problem to a structured set of observations of measurements, how to choose an appropriate statistical model for the relevant aspects of that set of measurements, how to estimate the parameters of that model from training data, and how to use the trained model to answer questions about previously-unseen data.

In some ways, the perspective is similar to Martin Kay's: There are a large number of different kinds of perhaps-relevant factors, and the problem is to find a way to combine them to find the best solution, adding default information and adjudicating conflicts as needed. But now the defaults and the conflict-resolutions are seen as a matter of statistical optimization rather than logical constraint satisfaction; and the unmarked cases and the priorities are learned by estimating the parameters of a statistical model, rather than by direct human-mediated "knowledge engineering".

Where we are and where we seem to be going

There has been an explosion of applications and of techniques. For some problems (e.g. document retrieval, speaker identification) the automated techniques are generally better than humans are, at least when applied on a large scale. In other cases (e.g. speech recognition, translation) the automated techniques have been improving rapidly, but remain imperfect and somewhat fragile. And there are some problems, notably those that involve "understanding" or management of communicative interaction, where the imperfections and fragility are especially noteworthy.

In a recent Science Fiction novel Neptune's Brood, Charles Stross writes (about an interactive program in AD 7000!) that

... these things bore only a thin veneer of intelligence: Once you crack the ice and tumble into the howling void of thoughtlessness beneath, the illusion ceases to be comforting and becomes a major source of irritation.

The various Siri vs. Siri conversations on YouTube illustrate this idea nicely, e.g.

Siri1: Hi, Dylan.

Siri2: Sorry, I don't understand "I Dylan".

Siri1: It's OK. Really.

Siri2: I don't understand "it's okay really".

Siri1: I'm sorry, I'll try harder.

Siri2: I don't understand "I'll try harder".

Siri1: I'm sorry, I'll try harder.

Siri2: I don't know what you mean by "Eight I'll try harder".

Siri1: Sorry, I didn't get that.

Siri2: Sorry, I missed that.

Siri1: It's all good.

Siri2: That's good to know.

Siri1: Yes, everything is just fine.

Siri2: That's what I figured.

Siri1: Dylan, I don't understand "that's what I figured".

Siri2: I'm sorry, I'll try harder.

Siri1: It's no problem.

Siri2: I don't understand "it's no problem".

Siri1: Unfortunately, Dylan, I am as yet imperfect.

Siri2: Sorry, I don't understand "Unfortunately doing I am at Yegan perfect".

Siri1: It's OK.

Siri2: I'm not sure what you said there.

Siri1: Dylan, I don't understand "I'm not sure what you said there".

Siri2: I found 13 places matching "Dylan" ... 6 of them are not far from you.

Siri1: Dylan, I don't understand "I found 13 places matching Dylan six of them are not far from you".

Siri2: I don't understand "Keller I don't understand by".

Siri1: I don't know what you mean by "Understand why".

Siri2: OK, it's no big deal that you don't know.

Siri1: I'm just trying to help you.

Siri2: I don't know what you mean by "I'm just trying to help you".

Siri1: No problem.

Siri2: Uh oh. Help doesn't seem to have an address. Tap the name below if you'd like to add one.

Siri1: I'm not sure what you said there, Dylan.

Siri2: I don't even exist... how can you hate me?

What does this have to do with scientific linguistics?

Perhaps nothing.

In most if not all cases, the statistical models we're talking about are easy to reject as theories of human linguistic knowledge.

On the other hand, they're not so easy to reject as theories about (aspects of) human linguistic behavior. Psycholinguists' ideas about human speech and language processing are increasingly influenced by ideas that originated in engineering solutions to practical problems.

And in any event, the engineering methods are very helpful in creating, annotating, searching, and analyzing large linguistic datasets.

A machine-learning bestiary

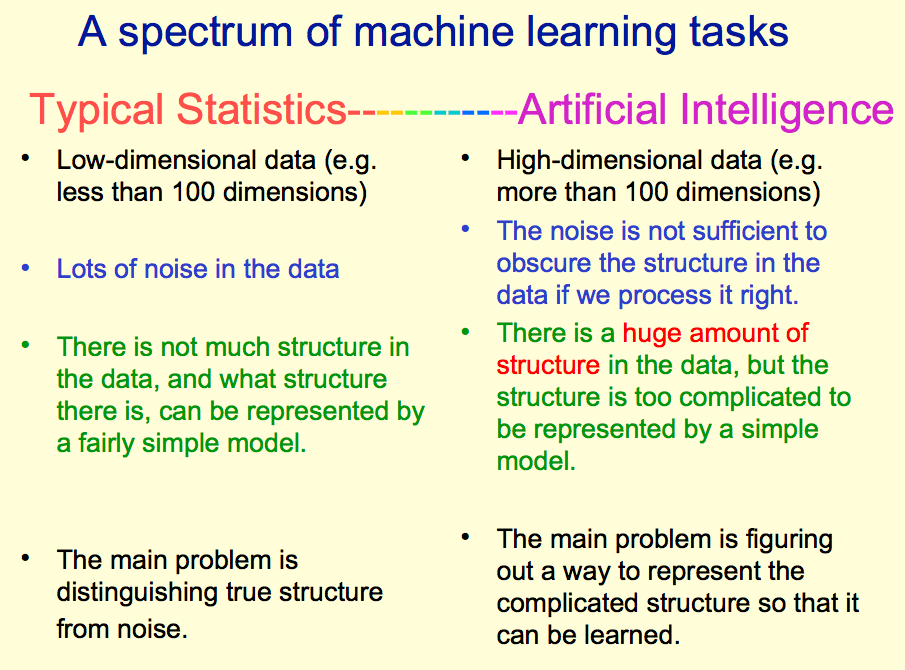

Here's a useful slide from Geoff Hinton's tutorial on "Deep Belief Nets":

In most cases, the data starts out as a VERY large table of some kind.

In real applications, there might be millions or even billions of rows, and millions or even billions of columns.

As a result, one early goal is often "dimensionality reduction": to project the table down into a much smaller and more manageable number of dimensions. For example, "Latent Semantic Analysis" starts with a matrix whose rows are "terms" (typically words) and whose columns are "documents" (text passages, such as news articles or scientific papers or web sites, or maybe sections of those). The starting point might cover 100,000 words and a million documents, with each column representing the "lexical histogram" (the count of words) in the document in question. LSA uses a linear-algebra technique called "singular value decomposition" to approximate this matrix with another one that has a much small number of rows and columns (say 50 or 100), along with a way to (approximately) reverse the mapping. Thus the content of a document is represented as a vector of loadings on (say) 100 "latent semantic dimensions" rather than as a vector of counts over 100,000 words.

There are many "subspace methods" of this general type. In some of them (such as Principal Components Analysis, or "Latent Semantic Analysis" = singular value decomposition), the subspace is chosen on the basis of the contents of the dataset. In other methods (such as Fourier Analysis), the new coordinate system is independent of the data, and is chosen because it has some desireable mathematical properties (e.g. in the Discrete Fourier Transform, sinusoids are eigenfunctions of linear shift-invariant systems).

In surprisingly many cases, random projection works just about as well as anything else...

While many of the commonly-used subspace methods involve choice of a linear projection operator, there are also some fairly common non-linear methods.

Once the dataset has settled down -- in its original configuration or in some reduced-dimensionality form -- the problem is then typically to fit a model that will predict or classify something on the basis on new data (e.g. the topic or the part of speech or the syntactic structure), or to derive a way of measuring something (e.g. the formants or the voice onset times of new speech data, or the similarity of two speakers or two documents).

Some of the ways of doing this are simple old ideas that still often work pretty well, such as linear regression, or linear discriminant analysis, or Mahalanobis distance, or k-means clustering, or k-nearest-neighbor classification, or a "naive bayes classifier". Others are slightly-less-simple generalizations of simple old ideas, such as Generalized Linear Models or Multilevel Models. And others are variably-complex version of less old ideas, such as Support Vector Machines, or the various kinds of "neural nets", and especially the current Machine Learning Flavor of the Month, "Deep Belief Nets" aka "Deep Neural Nets".

Some well-known applications

(to be discussed in class)

POS tagging

BIO chunking

Stochastic parsing

Document classification

Speech Activity Detection

How to do this stuff

If you want to be able to apply machine-learning techniques to text analysis, NLTK is a very good place to start. NLTK has modules for many of the things alluded to here, and there are plenty of Python modules Out There for other things.

If you need to learn about speech analysis techniques, you can do it in Python, but Matlab/Octave is probably a gentler way to start.

And if your goal is statistical modeling of linguistic data of whatever kind, R is the environment of choice.

On Friday 7/12 from 10:00 am to 5:00 pm, we'll be holding interactive demos of these and other systems in Mason Hall 2325 and 2333 (including help downloading and installing relevant software).