JSALT 2017

Speech Analysis in the Real World

We want to do a better job of analyzing audio recordings in which two or more people are talking, with overlaps and gaps and other sounds as well. We expect to make progress on key aspects of this problem. But an even more important result will be to document the remaining difficulties, and to lay out a roadmap for further research, including new task definitions and metrics.

One core aspect of the problem is “diarization”: Who spoke when, including accurate identification of overlaps when two or more people are speaking at the same time.

A parallel (and harder) problem is source separation: How to extract a version of the separate audio streams of interest, removing or ignoring signals that we don’t care about.

Members of the workshop team will be working on several specific applications across different kinds of data, including the analysis of clinical interviews and of extended recordings from sources such as LENA wearable devices and police body cams.

The particular kinds of clinical interviews that team members have been working on include mood evaluation for patients with rapidly-cycling bipolar disorder, ADOS (“Autism Diagnostic Observation Schedule”) interviews, picture-description task recordings for patients with various neurodegenerative disorders, and patient conversations with doctors in training.

In some of this research, there is diagnostically valuable information in purely phonetic measures such as latency to respond, the distribution of speech and non-speech segment durations, the nature and distribution of filled pauses, syllable-production rates as a function of speech segment length, properties of f0 and amplitude functions, and so forth. These measures can be automatically extracted -- as long as diarization is accurate.
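As a minimal sketch of why these measures hinge on diarization, the following computes response latencies and speech-segment durations directly from diarized segments. The segment format, the speaker labels, and the function names are assumptions made for illustration; they are not drawn from any particular toolkit used by the team.

```python
# Hypothetical diarization output: (speaker, start_sec, end_sec), sorted by start time.
segments = [
    ("interviewer", 0.0, 2.5),
    ("patient", 3.1, 6.0),
    ("interviewer", 6.2, 8.0),
    ("patient", 9.5, 12.0),
]

def response_latencies(segments, responder):
    """Gaps between the end of another speaker's turn and the responder's next turn.

    A negative value means the responder started before the other speaker finished.
    """
    latencies = []
    for prev, cur in zip(segments, segments[1:]):
        if cur[0] == responder and prev[0] != responder:
            latencies.append(cur[1] - prev[2])
    return latencies

def speech_durations(segments, speaker):
    """Durations of all segments attributed to the given speaker."""
    return [end - start for spk, start, end in segments if spk == speaker]

patient_latencies = response_latencies(segments, "patient")
patient_durations = speech_durations(segments, "patient")
```

If a segment boundary is misplaced or a turn is attributed to the wrong speaker, both lists change, which is why errors in diarization propagate directly into these measures.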

Other features depend on accurate recognition and analysis of the words used -- but of course the interpretation of these features also depends on which words were spoken by whom. “Meeting summarization” has been a long-standing goal of automatic speech processing research. In the case of clinical conversations, we’d like to analyze automatically how doctors interact with patients, which information ends up in the medical note, and whether automatically extracted indicators correlate with how well a patient follows up on the doctor’s recommendations. This would allow us to improve training of caregivers, save doctors time, and thus improve patient care.

Finally, we plan to explore the application to these conversations of the kind of simple functional analysis that identifies questions and answers, backchannels, interruptions, and so forth.

Another data type of central interest to the workshop team is extended audio streams of the type that are becoming more and more common. As one particular example, we’ll be looking at the HomeBank archive at CMU, featuring 24/7 recordings of children’s linguistic experience and linguistic development. These recordings are challenging because they exhibit a wide range of acoustic conditions, with sometimes sparse and sometimes intense vocal activity. Typical tasks include identifying child-directed speech, identifying conversations among family members and others, locating and classifying the child’s vocalizations, identifying TV and radio streams, and so on. Team members have also addressed analogous problems of large-scale stream analysis in the case of police body-cam recordings.

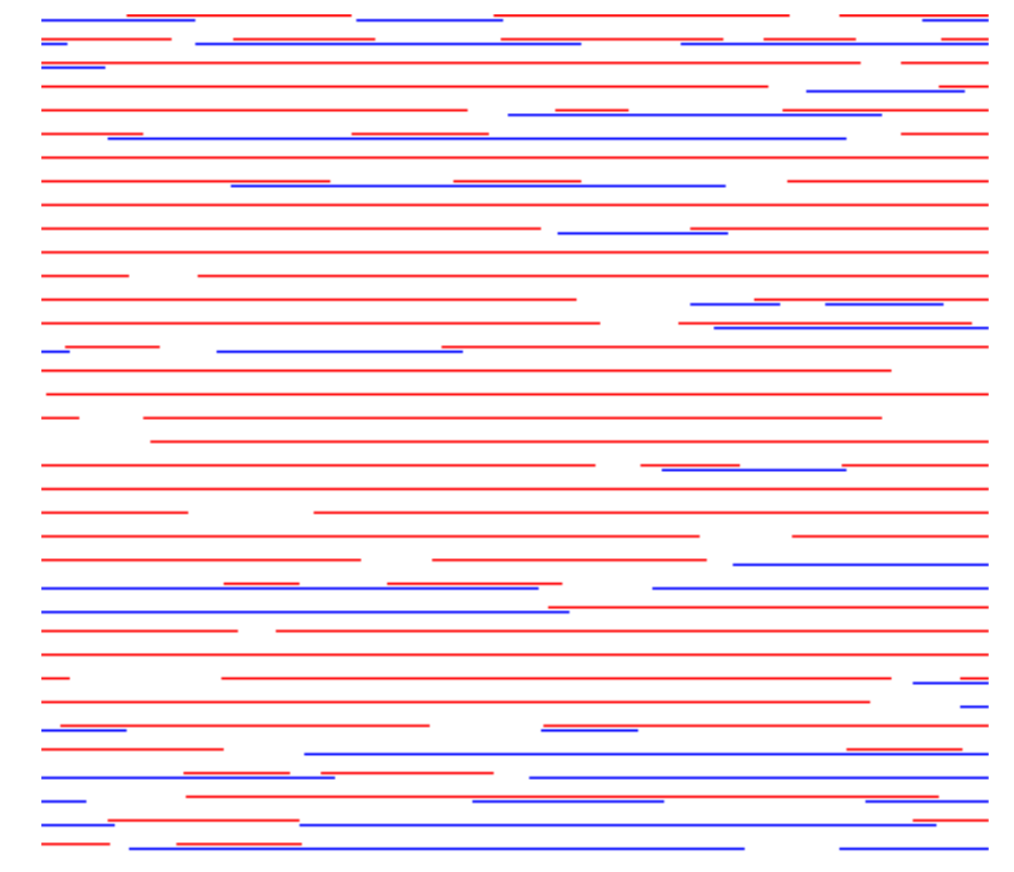

Our experience is that existing diarization algorithms can’t be depended on to work well enough across the range of recordings we have been working with. We also find that existing metrics for diarization give a misleadingly rosy picture of the state of the art, because of the size of the “collars” used and the treatment of overlaps (which are basically ignored). Lower-quality recordings and larger numbers of speakers can make things much worse, as can data in which an individual speaker uses a wide range of levels of vocal effort. And in recordings with high levels of overlap, the ability to detect the overlaps and to extract relevant features from the separate streams becomes increasingly important.
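To make the effect of collars and overlap exclusion concrete, here is a rough frame-based sketch that measures how much of the reference speech is actually scored once a collar is placed around every reference boundary and overlapped regions are skipped. The 250 ms collar, the 10 ms frame step, the toy reference segments, and the function name are all illustrative assumptions; this is not the scoring procedure of any specific evaluation tool.

```python
def scored_fraction(ref, collar_ms=250, skip_overlap=True, step_ms=10):
    """Fraction of reference speech frames that remain scored.

    ref: list of (speaker, start_ms, end_ms) with integer millisecond times.
    A frame is dropped if it starts within collar_ms of any reference boundary,
    or (when skip_overlap is True) if more than one speaker is active.
    """
    end = max(e for _, _, e in ref)
    boundaries = [t for _, s, e in ref for t in (s, e)]
    total = kept = 0
    for t in range(0, end, step_ms):
        n_active = sum(1 for _, s, e in ref if s <= t < e)
        if n_active == 0:
            continue  # non-speech frames are not counted here
        total += 1
        in_collar = any(abs(t - b) < collar_ms for b in boundaries)
        if in_collar or (skip_overlap and n_active > 1):
            continue
        kept += 1
    return kept / total

# Toy reference: A and B overlap for 500 ms, then A produces a 400 ms utterance.
ref = [("A", 0, 2000), ("B", 1500, 3000), ("A", 3200, 3600)]

strict = scored_fraction(ref, collar_ms=0, skip_overlap=False)
no_overlap = scored_fraction(ref, collar_ms=0, skip_overlap=True)
lenient = scored_fraction(ref, collar_ms=250, skip_overlap=True)
```

In this toy case the lenient setting scores less than half of the reference speech, and the 400 ms utterance falls entirely inside collars, so errors on it cannot affect the score at all.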

The identification of rare occurrences in recordings is another problem that existing metrics are not sensitive to. Particularly where the number of speakers is unknown, clustering methods struggle to identify speakers who appear for only one or two utterances within a recording dominated by other speakers. Similarly, utterances as short as one syllable can be difficult to find when hidden among other speech or non-speech audio, and they can be too short to yield a reliable i-vector for determining identity. By definition, the impact of these rare events on existing overall diarization metrics will be small, but in some applications they may be extremely important.

In previous work and in specific preparation for the workshop, team members have explored a variety of techniques to improve overlap detection in both artificial and naturally-occurring datasets; investigated new features, new methods, and new metrics for the overall problem of diarization; and designed and tested various approaches to model-based source separation. One promising line of research looks at the use of multiple-channel recordings, including cases where the microphone properties and placement are not known in advance. And since we aim to develop methods that are not dependent on particular recording characteristics or interactional contexts, the workshop team will have access to a large number of diverse datasets, as well as techniques for creating artificial data by adding noise, imposing distortion, etc.
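One simple way to create artificial data by adding noise is to mix a noise signal into clean speech at a chosen signal-to-noise ratio. The sketch below does this with plain Python lists; the sine tone standing in for speech, the Gaussian noise, the 16 kHz rate, and the function names are placeholders chosen for illustration, not the team's actual pipeline.

```python
import math
import random

def rms(x):
    """Root-mean-square amplitude of a sequence of samples."""
    return math.sqrt(sum(v * v for v in x) / len(x))

def mix_at_snr(speech, noise, snr_db):
    """Scale the noise so the speech-to-noise ratio equals snr_db, then add it."""
    gain = rms(speech) / (rms(noise) * 10 ** (snr_db / 20))
    return [s + gain * n for s, n in zip(speech, noise)]

random.seed(0)
sr = 16000  # assumed sample rate
speech = [math.sin(2 * math.pi * 220 * t / sr) for t in range(sr)]  # stand-in for speech
noise = [random.gauss(0.0, 1.0) for _ in range(sr)]                 # stand-in for noise

noisy = mix_at_snr(speech, noise, snr_db=10)

# Check: recover the added noise and measure the resulting SNR in dB.
added = [y - s for y, s in zip(noisy, speech)]
measured_snr_db = 20 * math.log10(rms(speech) / rms(added))
```

Sweeping `snr_db` over a range of values gives a controlled way to see how overlap detection and diarization degrade as recording quality drops.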

As a result, we’re confident that the workshop will achieve both of our goals: we’ll be able to document progress on overlap detection, on robust diarization, and on analysis of overlapping segments; and we’ll use our failures to characterize the remaining difficulties and to lay out a path for further research.